Databricks SQL continues to evolve with new features and performance improvements designed to make it simpler, faster, and more cost-efficient. Built on the lakehouse architecture within the Databricks Data Intelligence Platform, it's trusted by over 11,000 customers to power their data workloads.

In this blog, we'll cover key updates from the past three months, including our recognition in the 2024 Gartner® Magic Quadrant™ for Cloud Database Management Systems, enhancements in AI/BI, intelligent experiences, administration, and more.

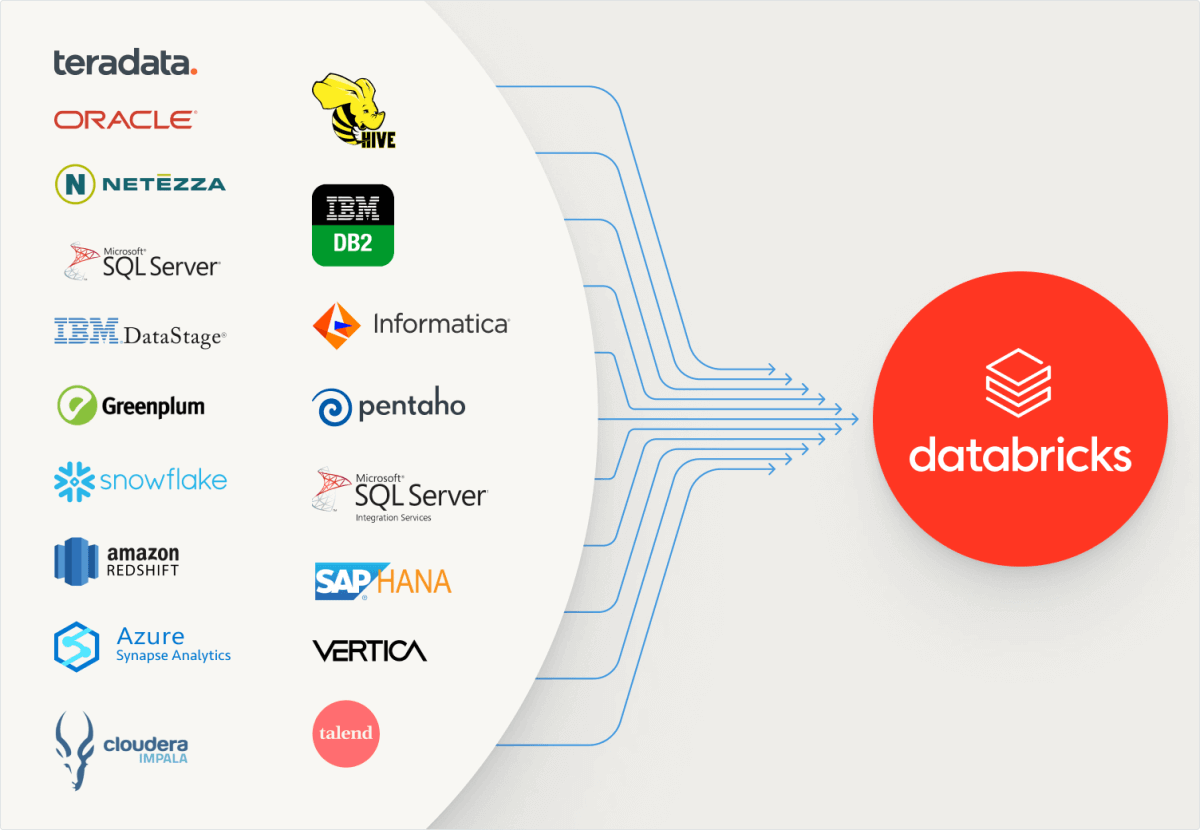

Accelerated data migrations with BladeBridge

Databricks welcomes BladeBridge, a proven provider of AI-powered migration solutions for enterprise data warehouses. Together, Databricks and BladeBridge will help enterprises accelerate the work required to migrate legacy data warehouses like Oracle, SQL Server, Snowflake, and Redshift to Databricks SQL (DBSQL), the data warehouse built on Databricks' category-defining lakehouse. BladeBridge will provide customers with clear insight into the scope of conversion, configurable code transpiling, LLM-powered conversion, and easy validation of migrated systems.

BladeBridge provides an AI-enhanced approach to migrating over 20 legacy data warehouses and ETL tools, including Teradata, Oracle, Snowflake, SQL Server, Amazon Redshift, Azure Synapse Analytics, and Hive, more than 50% faster than traditional approaches. To learn more, read the announcement blog.

Intelligent experiences

We're focused on making the SQL analyst experience more intuitive, efficient, and engaging. By embedding AI throughout our products, we help you spend less time on repetitive tasks and more time on high-value work.

AI/BI

Since the AI/BI launch in fall 2024, we've rolled out new features in Genie and Dashboards, with more on the way. Recent Dashboard updates include:

- improved AI assistance for generating charts

- copy-paste support for widgets across pages

- upgraded pivot tables and point maps for better data exploration

For Genie, we've enhanced:

- question benchmarking for more accurate evaluations

- editable, resizable visualizations for flexible reporting

- answer quality, with improvements in how it ranks results and handles date and time-related queries

We'll dive deeper into these updates in an upcoming blog, but if you're eager to explore now, check out the latest AI/BI release notes.

SQL editor

We're constantly improving the SQL authoring experience to help you work more efficiently. One of the biggest updates last quarter was Git support for queries, making it easier to version control your SQL and integrate with CI/CD pipelines.

*Git support for queries is available when the new SQL editor is enabled.

We've also added new features to streamline your workflow:

- Multiple result statements: View and compare outputs from different SQL statements side by side.

- Full result table filtering: Apply filters to entire datasets, not just the portion loaded in your browser.

- Faster tab switching: Up to 80% faster for loaded tabs and 62% faster for unloaded tabs, making navigation smoother.

- Adjustable font size: You can quickly change the SQL editor font size with keyboard shortcuts (Alt + and Alt - on Windows/Linux, Opt + and Opt - on macOS).

- Improved comments with @mentions: Collaborate in real time by mentioning teammates directly in comments using "@" followed by their username. They'll receive email notifications, keeping everyone in the loop.

Predictive optimization of your platform

Predictive optimizations use AI to automatically manage performance for all of your workloads. We're constantly improving and adding features in this area to eliminate the need for manual tuning across the platform.

Predictive optimization for statistics

The data lakehouse uses two distinct types of statistics: data-skipping statistics (also known as Delta stats) and query optimizer statistics. Data-skipping statistics are collected automatically, but as data grows and usage diversifies, determining when to run the ANALYZE command becomes complex. You also have to maintain your query optimizer statistics actively.

We're excited to introduce the gated Public Preview of Predictive Optimization for statistics. Predictive Optimization itself is now generally available as an AI-driven approach to streamlining optimization processes. It currently supports essential data layout and cleanup tasks, and early feedback from users highlights its effectiveness in simplifying routine data maintenance. With the addition of automatic statistics management, Predictive Optimization delivers value and simplifies operations through the following advancements:

- Intelligent selection of data-skipping statistics, eliminating the need for column order management

- Automatic collection of query optimization statistics, removing the need to run ANALYZE after data loading

- Once collected, statistics inform query execution strategies and, on average, drive better performance and lower costs

Up-to-date statistics significantly improve performance and total cost of ownership (TCO). A comparative analysis of query execution with and without statistics showed an average performance increase of 22% across observed workloads. Databricks applies these statistics to refine data scanning and select the most efficient query execution plan. This approach exemplifies how the Data Intelligence Platform delivers tangible value to users.
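To make the idea of data-skipping statistics concrete, here is a minimal Python sketch. It is not how Databricks implements data skipping. It only illustrates the general technique of keeping per-file minimum and maximum values for a column and using them to avoid reading files that cannot match a filter. The `FileStats` class, the file names, and the values are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Per-file min/max for one column (hypothetical structure)."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(files, lower, upper):
    """Return paths of files whose [min, max] range overlaps [lower, upper]."""
    return [
        f.path
        for f in files
        if f.max_value >= lower and f.min_value <= upper
    ]


# Made-up statistics for an `order_id` column spread over three files.
stats = [
    FileStats("part-0.parquet", 1, 1000),
    FileStats("part-1.parquet", 1001, 2000),
    FileStats("part-2.parquet", 2001, 3000),
]

# A filter such as `order_id BETWEEN 1500 AND 1600` can skip two of three files.
selected = files_to_scan(stats, 1500, 1600)
print(selected)
```

The narrower the min/max ranges per file, the more files a selective filter can skip, which is why the choice of columns to collect statistics on matters.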

To learn more, read this blog.

World-class price/performance

The query engine continues to be optimized to scale compute costs with near linearity to data volume. Our goal is ever-better performance in a world of ever-increasing concurrency, with ever-decreasing latency.

Improved performance across the board

Databricks SQL has seen a remarkable 77% performance improvement since its launch in 2022, delivering faster BI queries, more responsive dashboards, and quicker data exploration. In the last five months of the year alone, BI workloads became 14% faster, ETL jobs 9% faster, and exploration workloads 13% faster. On top of that, we rolled out enhanced concurrency features and advanced compression in Private Preview, ensuring you save on both time and cost.

Databricks named a Leader in the 2024 Gartner® Magic Quadrant™ for Cloud Database Management Systems

For the fourth year in a row, Databricks has been named a Leader in the 2024 Gartner® Magic Quadrant™ for Cloud Database Management Systems. This year, we've made gains in both the Ability to Execute and the Completeness of our Vision. The evaluation covered the Databricks Data Intelligence Platform across AWS, Google Cloud, and Azure, alongside 19 other vendors.

Management and administration

We're expanding capabilities to help workspace administrators configure and manage SQL warehouses, including system tables and a new chart to troubleshoot warehouse performance.

Cost management

To give you visibility into how your organization is using Databricks, you can use the billing and cost data in your system tables. To make that easier, we now have a pre-built AI/BI Cost Dashboard. The dashboard organizes your consumption data using best practices for tagging, and helps you create budgets to manage your spend at an organization, business unit, or project level. You can then set budget alerts that fire when you exceed the budget (and track down which project, workload, or user overspent).
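The tag-and-budget workflow described above can be sketched in plain Python. This is an illustrative sketch only, not the logic of the AI/BI Cost Dashboard or of Databricks budget alerts. The row layout (`tags`, `cost`), the `project` tag key, and all amounts are assumptions made up for the example.

```python
from collections import defaultdict


def spend_by_tag(usage_rows, tag_key):
    """Sum cost per value of `tag_key`; rows without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for row in usage_rows:
        tag = row["tags"].get(tag_key, "untagged")
        totals[tag] += row["cost"]
    return dict(totals)


def over_budget(totals, budgets):
    """Return {tag: overspend} for every tag whose total exceeds its budget."""
    return {
        tag: totals[tag] - limit
        for tag, limit in budgets.items()
        if totals.get(tag, 0.0) > limit
    }


# Hypothetical usage rows; real billing data has a different, richer schema.
usage = [
    {"tags": {"project": "etl"}, "cost": 120.0},
    {"tags": {"project": "etl"}, "cost": 80.0},
    {"tags": {"project": "bi"}, "cost": 50.0},
    {"tags": {}, "cost": 30.0},
]
budgets = {"etl": 150.0, "bi": 100.0}

totals = spend_by_tag(usage, "project")
alerts = over_budget(totals, budgets)
print(totals)
print(alerts)
```

Note how the untagged row cannot be attributed to any budget, which is one reason consistent tagging matters for cost tracking.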

For more information, check out this cost management blog.

System tables

We recommend system tables for observing important details about your Databricks account, including cost information, data access, workload performance, and so on. Specifically, they are Databricks-owned tables you can access from various surfaces, usually with low latency.

Warehouses

The Warehouses system table (system.compute.warehouses) records when SQL warehouses are created, edited, and deleted. You can use the table to monitor changes to warehouse settings, including the warehouse name, type, size, channel, tags, auto-stop, and autoscaling settings. Each row is a snapshot of a SQL warehouse's properties at a specific point in time. A new snapshot is created when the properties change. For more details, see Warehouses system table reference. This feature is in Public Preview.
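Because each row is a full snapshot, finding out what changed means comparing consecutive snapshots of the same warehouse. The following Python sketch shows that comparison on made-up data. The field names (`change_time`, `size`, `auto_stop_minutes`) are illustrative assumptions and may not match the actual columns, so check the system table reference before adapting it.

```python
def changed_settings(previous, current, ignore=("change_time",)):
    """Return {setting: (old, new)} for settings that differ between snapshots."""
    keys = (previous.keys() | current.keys()) - set(ignore)
    return {
        key: (previous.get(key), current.get(key))
        for key in sorted(keys)
        if previous.get(key) != current.get(key)
    }


# Hypothetical snapshots of one warehouse, one row per properties change.
snapshots = [
    {"warehouse_id": "wh-1", "change_time": "2025-01-01T00:00:00",
     "size": "SMALL", "auto_stop_minutes": 10},
    {"warehouse_id": "wh-1", "change_time": "2025-01-05T00:00:00",
     "size": "MEDIUM", "auto_stop_minutes": 10},
    {"warehouse_id": "wh-1", "change_time": "2025-01-09T00:00:00",
     "size": "MEDIUM", "auto_stop_minutes": 30},
]

# ISO 8601 timestamps in the same format sort correctly as strings.
ordered = sorted(snapshots, key=lambda s: s["change_time"])
diffs = [changed_settings(a, b) for a, b in zip(ordered, ordered[1:])]
print(diffs)
```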

Query history

The Query history table (system.query.history) includes records for queries run using SQL warehouses or serverless compute for notebooks and jobs. The table includes account-wide records from all workspaces in the same region from which you access the table. This feature is in Public Preview.

For more details, see the Query history system table reference.

Completed query count chart to help troubleshoot warehouse performance

A Completed query count chart (Public Preview) is now available on the SQL warehouse monitoring UI. This new chart shows the number of queries finished in a time window. The chart can be used alongside the peak running queries and running clusters charts to visualize changes to warehouse throughput as clusters spin up or down depending on your workload traffic and warehouse settings. For more information, see Monitor a SQL warehouse.

Expanded regions and compliance availability for Databricks SQL Serverless

Availability and compliance are extended for Databricks SQL Serverless warehouses.

- New Serverless regions:

  - GCP is now generally available across the existing seven regions.

  - AWS adds the eu-west-2 region for London.

  - Azure adds four regions: France Central, Sweden Central, Germany West Central, and UAE North.

- Serverless compliance by region

  - HIPAA: HIPAA compliance is available in all regions where Serverless SQL is available across all cloud providers (Azure, AWS, and GCP).

  - AWS US-East-1: PCI-DSS and FedRAMP Moderate compliance now GA

  - AWS AP-Southeast-2: PCI-DSS and IRAP compliance now GA

- Serverless Security

  - Private Link: Private Link lets you use a private network from your users to your data and back again. It's now generally available.

  - Secure Egress: Secure Egress helps you safely control outbound data access, ensuring security and compliance. Configuring egress controls is now available in Public Preview.

Integration with the Data Intelligence Platform

These features for Databricks SQL are part of the Databricks Data Intelligence Platform. Databricks SQL benefits from the platform's simplicity, unified governance, and the openness of the lakehouse architecture. The following are a few new platform features useful for Databricks SQL.

SQL language enhancements: Collations

Building global enterprise applications means handling diverse languages and inconsistent data entry. Collations streamline data processing by defining rules for sorting and comparing text in ways that respect language and case sensitivity. Collations make databases language- and context-aware, ensuring they handle text as users expect.

We're excited that collations are now available in Public Preview with Databricks SQL. Read the collations blog for more details.
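To show what a collation changes in practice, here is a small plain-Python sketch. It does not use Databricks collations. It only contrasts the default code-point ordering of strings with a case-insensitive comparison rule, using `str.casefold` as a stand-in for a case-insensitive collation. The names in the list are made up.

```python
def equal_ignoring_case(a, b):
    """Compare two strings the way a case-insensitive rule would."""
    return a.casefold() == b.casefold()


names = ["bob", "Alice", "alice", "Zoe", "carol"]

# Default ordering compares code points, so uppercase sorts before lowercase.
binary_order = sorted(names)

# A case-insensitive rule ignores case when ordering; ties keep input order.
case_insensitive_order = sorted(names, key=str.casefold)

print(binary_order)
print(case_insensitive_order)
print(equal_ignoring_case("Alice", "ALICE"))
```

With the default ordering, "Zoe" lands before "alice", which is rarely what a user browsing a sorted list expects. Language-aware collations go further than case and also handle locale-specific ordering rules.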

Materialized views and streaming tables

Materialized views (MVs) and streaming tables (STs) are now Generally Available in Databricks SQL on AWS, Azure, and GCP. Streaming tables offer simple, incremental ingestion from sources like cloud storage and message buses with just a few lines of SQL. Materialized views precompute and incrementally update the results of queries so your dashboards and queries can run significantly faster than before. Together, they let you create efficient and scalable data pipelines using SQL, from ingestion to transformation.

For more details, read the MV and ST announcement blog.

Easier scheduling for Databricks SQL streaming tables and materialized views

We've introduced EVERY syntax for scheduling MV and ST refreshes using DDL. EVERY simplifies time-based scheduling by removing the need to write complex CRON expressions. For users who need more flexibility, CRON scheduling will continue to be supported.

For more details, read the documentation for ALTER MATERIALIZED VIEW, ALTER STREAMING TABLE, CREATE MATERIALIZED VIEW, and CREATE STREAMING TABLE.

Streaming tables support for time travel queries

You can now use time travel to query previous streaming table versions based on timestamps or table versions (as recorded in the transaction log). You may need to refresh your streaming table before using time travel queries.

Time travel queries are not supported for materialized views.
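Conceptually, a timestamp-based time travel query resolves to the latest table version committed at or before the requested time. The Python sketch below illustrates that lookup on a made-up commit list. It is an assumption-laden illustration of the idea, not Delta Lake's or Databricks' implementation, and `version_as_of` is a hypothetical helper.

```python
from bisect import bisect_right
from datetime import datetime


def version_as_of(commits, timestamp):
    """Return the latest version committed at or before `timestamp`.

    `commits` is a list of (version, commit_time) pairs sorted by commit_time.
    """
    times = [commit_time for _, commit_time in commits]
    index = bisect_right(times, timestamp)
    if index == 0:
        raise ValueError("timestamp is earlier than the first recorded version")
    return commits[index - 1][0]


# Hypothetical transaction log entries: (version, commit time).
commits = [
    (0, datetime(2025, 1, 1, 8, 0)),
    (1, datetime(2025, 1, 1, 12, 0)),
    (2, datetime(2025, 1, 2, 9, 30)),
]

resolved = version_as_of(commits, datetime(2025, 1, 1, 13, 0))
print(resolved)
```

A version-based query skips this lookup entirely, since the version number already identifies the snapshot.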

Query history support for Delta Live Tables

Query History and Query Profile now cover queries executed by a DLT pipeline. Moreover, query insights for Databricks SQL materialized views (MVs) and streaming tables (STs) have been improved. These queries can be accessed from the Query History page alongside queries executed on SQL Warehouses and Serverless Compute. They are also listed in the context of the Pipeline UI, Notebooks, and the SQL editor.

This feature is available in Public Preview. For more details, see Access query history for Delta Live Tables pipelines.

Cross-platform view sharing for Azure Databricks

Databricks recipients can now query shared views using any Databricks compute resource. Previously, if a recipient's Azure Databricks account differed from the provider's, recipients could only query a shared view using a serverless SQL warehouse. See Read shared views.

View sharing also now extends to open-sharing connectors. See Read data shared using Delta Sharing open sharing (for recipients).

This functionality is now in Public Preview.

More details on new innovations

We hope you enjoy this bounty of innovations in Databricks SQL. You can always check this What's New post for the previous three months. Below is a complete inventory of launches we've blogged about over the last quarter:

As always, we continue to work to bring you even more cool features. Stay tuned to the quarterly roadmap webinars to learn what's on the horizon for Data Warehousing and AI/BI. It's an exciting time to be working with data, and we're excited to partner with Data Architects, Analysts, BI Analysts, and more to democratize data and AI within your organizations!

What's next

Here is a short preview of the features we're working on. None of these have committed timeframes yet, so don't ask. 🙂

Easily migrate your data warehouse to Databricks SQL

Customers of every size can significantly reduce cost and lower risk when modernizing their data infrastructure away from the proprietary, expensive, and siloed platforms that have defined the history of data warehousing. We're working on expanding free tooling to help you analyze what it would take to migrate from your current warehouse to Databricks SQL and to help you convert your code to take advantage of new Databricks SQL features.

Supercharge your BI workloads

Performance is critical when loading business intelligence dashboards. We're improving the latency of BI queries every quarter so you can power up your favorite BI tools like Power BI, Tableau, Looker, and Sigma with Databricks SQL.

Simplify managing and monitoring warehouses

We're investing in more features and tools to help you easily manage and monitor your warehouse. This includes system table enhancements, changes via the UI, and our APIs.

To learn more about Databricks SQL, visit our website or read the documentation. You can also check out the product tour for Databricks SQL. If you want to migrate your existing warehouse to a high-performance, serverless data warehouse with a great user experience and lower total cost, Databricks SQL is the solution. Try it for free.

To participate in private or gated public previews, contact your Databricks account team.