
AI agents are set to transform enterprise operations with autonomous problem-solving, adaptive workflows, and scalability. But the real challenge isn’t building better models.

Agents need access to data, tools, and the ability to share information across systems, with their outputs available for use by multiple services, including other agents. This isn’t an AI problem; it’s an infrastructure and data interoperability problem. It requires more than stitching together chains of commands; it demands an event-driven architecture (EDA) powered by streams of data.

As HubSpot CTO Dharmesh Shah recently put it, “Agents are the new apps.” Meeting this potential requires investing in the right design patterns from the start. This article explores why EDA is the key to scaling agents and unlocking their full potential in modern enterprise systems.

The Rise of Agentic AI

While AI has come a long way, we’re hitting the limits of fixed workflows and even LLMs.

Google’s Gemini is reportedly failing to meet internal expectations despite being trained on a larger set of data. Similar results have been reported for OpenAI and its next-generation Orion model.

Salesforce CEO Marc Benioff recently said on The Wall Street Journal’s “Future of Everything” podcast that we’ve reached the upper limits of what LLMs can do. He believes the future lies with autonomous agents, systems that can think, adapt, and act independently, rather than models like GPT-4.

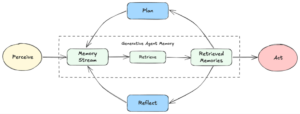

Agents bring something new to the table: dynamic, context-driven workflows. Unlike fixed workflows, agentic systems figure out the next steps on the fly, adapting to the situation at hand. That makes them ideal for tackling the kinds of unpredictable, interconnected problems businesses face today.

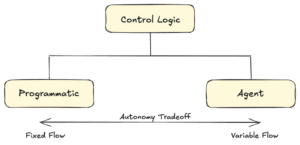

Agents flip traditional control logic on its head.

Instead of rigid programs dictating every move, agents use LLMs to drive decisions. They can reason, use tools, and access memory, all dynamically. This flexibility allows for workflows that evolve in real time, making agents far more powerful than anything built on fixed logic.

The Challenges with Scaling Intelligent Agents

Scaling agents, whether a single agent or a collaborative system, hinges on their ability to access and share data effortlessly. Agents need to gather information from multiple sources, including other agents, tools, and external systems, to make decisions and take action.

Connecting agents to the tools and data they need is fundamentally a distributed systems problem. This complexity mirrors the challenges faced in designing microservices, where components must communicate efficiently without creating bottlenecks or rigid dependencies.

Like microservices, agents must communicate efficiently and ensure their outputs are useful across the broader system. And like any service, their outputs shouldn’t just loop back into the AI application; they should flow into other critical systems like data warehouses, CRMs, CDPs, and customer success platforms.

Sure, you could connect agents and tools through RPC and APIs, but that’s a recipe for tightly coupled systems. Tight coupling makes it harder to scale, adapt, or support multiple consumers of the same data. Agents need flexibility. Their outputs must seamlessly feed into other agents, services, and platforms without locking everything into rigid dependencies.

What’s the solution?

Loose coupling through an event-driven architecture. It’s the backbone that allows agents to share information, act in real time, and integrate with the broader ecosystem, without the headaches of tight coupling.

Event-Driven Architectures: A Primer

In the early days, software systems were monoliths. Everything lived in a single, tightly integrated codebase. While simple to build, monoliths became a nightmare as they grew.

Scaling was a blunt instrument: you had to scale the entire application, even if only one part needed it. This inefficiency led to bloated systems and brittle architectures that couldn’t handle growth.

Microservices changed this.

By breaking applications into smaller, independently deployable components, teams could scale and update specific parts without touching the whole system. But this created a new challenge: how do all these smaller services communicate effectively?

If we connect services through direct RPC or API calls, we create a giant mess of interdependencies. If one service goes down, it impacts all nodes along the connected path.

EDA solved the problem.

Instead of tightly coupled, synchronous communication, EDA enables components to communicate asynchronously through events. Services don’t wait on each other; they react to what’s happening in real time.
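The contrast between direct calls and events can be made concrete with a small sketch. What follows is a minimal in-memory illustration under stated assumptions, not a production broker: `EventBus`, the `order.created` topic, and the two consumer lists are hypothetical names invented for this example. The producer only records an event; delivery happens later, and the producer never learns who is listening.

```python
from collections import defaultdict, deque
from typing import Callable, DefaultDict, Deque, List, Tuple


class EventBus:
    """Toy in-memory event bus (hypothetical; stands in for a real broker)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)
        self._pending: Deque[Tuple[str, dict]] = deque()

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        """Register a handler; the producer never sees this list."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        """Queue the event and return immediately, without calling any consumer."""
        self._pending.append((topic, event))

    def drain(self) -> int:
        """Deliver queued events to current subscribers; return the delivery count."""
        delivered = 0
        while self._pending:
            topic, event = self._pending.popleft()
            for handler in self._subscribers.get(topic, []):
                handler(event)
                delivered += 1
        return delivered


bus = EventBus()
billing_events: list = []
analytics_events: list = []

# Two independent consumers of the same topic.
bus.subscribe("order.created", billing_events.append)
bus.subscribe("order.created", analytics_events.append)

# The producer publishes once and moves on; nothing has been delivered yet.
bus.publish("order.created", {"order_id": 1})
delivered_before_drain = len(billing_events) + len(analytics_events)

# Delivery is a separate step, decoupled in time from publishing.
deliveries = bus.drain()
```

Adding a third consumer is one more `subscribe` call; the publishing code does not change. A real streaming platform delivers across processes and machines, which this sketch does not attempt.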

This approach made systems more resilient and adaptable, allowing them to handle the complexity of modern workflows. It wasn’t just a technical breakthrough; it was a survival strategy for systems under pressure.

The Rise and Fall of Early Social Giants

The rise and fall of early social networks like Friendster underscore the importance of scalable architecture. Friendster captured a massive user base early on, but its systems couldn’t handle the demand. Performance issues drove users away, and the platform ultimately failed.

On the flip side, Facebook thrived not just because of its features but because it invested in scalable infrastructure. It didn’t crumble under the weight of success; it rose to dominate.

Today, we risk seeing a similar story play out with AI agents.

Like early social networks, agents will experience rapid growth and adoption. Building agents isn’t enough. The real question is whether your architecture can handle the complexity of distributed data, tool integrations, and multi-agent collaboration. Without the right foundation, your agent stack could fall apart just like the early casualties of social media.

The Future is Event-Driven Agents

The future of AI isn’t just about building smarter agents; it’s about creating systems that can evolve and scale as the technology advances. With the AI stack and underlying models changing rapidly, rigid designs quickly become barriers to innovation. To keep pace, we need architectures that prioritize flexibility, adaptability, and seamless integration. EDA is the foundation for this future, enabling agents to thrive in dynamic environments while remaining resilient and scalable.

Agents as Microservices with Informational Dependencies

Agents are similar to microservices: they’re autonomous, decoupled, and capable of handling tasks independently. But agents go further.

While microservices typically process discrete operations, agents rely on shared, context-rich information to reason, make decisions, and collaborate. This creates unique demands for managing dependencies and ensuring real-time data flows.

For instance, an agent might pull customer data from a CRM, analyze live analytics, and use external tools, all while sharing updates with other agents. These interactions require a system where agents can work independently but still exchange critical information fluidly.

EDA solves this challenge by acting as a “central nervous system” for data. It allows agents to broadcast events asynchronously, ensuring that information flows dynamically without creating rigid dependencies. This decoupling lets agents operate autonomously while integrating seamlessly into broader workflows and systems.
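To illustrate agents broadcasting events asynchronously, here is a minimal sketch using Python’s `asyncio`. Everything named here is an assumption made for the example: the `Topic` class, the `scoring_agent` and `collect` coroutines, the event fields, and the fixed rule that stands in for a model call. The point is only the shape of the flow: one agent consumes customer updates and emits insights, and two downstream consumers receive every insight without the agent knowing they exist.

```python
import asyncio


class Topic:
    """Hypothetical fan-out topic: every subscriber gets its own queue."""

    def __init__(self) -> None:
        self._queues = []

    def subscribe(self) -> asyncio.Queue:
        queue = asyncio.Queue()
        self._queues.append(queue)
        return queue

    async def publish(self, event) -> None:
        for queue in self._queues:
            await queue.put(event)


async def scoring_agent(inbox: asyncio.Queue, insights: Topic) -> None:
    """Stand-in for an LLM-backed agent: a fixed rule replaces the model call."""
    while True:
        event = await inbox.get()
        if event is None:  # shutdown signal; pass it downstream and stop
            await insights.publish(None)
            return
        label = "churn-risk" if event["open_tickets"] > 3 else "healthy"
        await insights.publish({"customer": event["customer"], "insight": label})


async def collect(inbox: asyncio.Queue, sink: list) -> None:
    """Generic downstream consumer (another agent, a CRM sync, a dashboard)."""
    while True:
        event = await inbox.get()
        if event is None:
            return
        sink.append(event)


async def main():
    customer_updates, insights = Topic(), Topic()
    crm_rows, dashboard_rows = [], []
    tasks = [
        asyncio.create_task(scoring_agent(customer_updates.subscribe(), insights)),
        asyncio.create_task(collect(insights.subscribe(), crm_rows)),
        asyncio.create_task(collect(insights.subscribe(), dashboard_rows)),
    ]
    await customer_updates.publish({"customer": "acme", "open_tickets": 5})
    await customer_updates.publish({"customer": "globex", "open_tickets": 1})
    await customer_updates.publish(None)  # end of stream
    await asyncio.gather(*tasks)
    return crm_rows, dashboard_rows


crm_rows, dashboard_rows = asyncio.run(main())
```

Both sinks end up with the same two insight events in the same order. The `None` sentinel is just a convenient way to stop the toy pipeline; a long-running system would keep consuming.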

Decoupling While Keeping Context Intact

Building flexible systems doesn’t mean sacrificing context. Traditional, tightly coupled designs often bind workflows to specific pipelines or technologies, forcing teams to navigate bottlenecks and dependencies. Changes in one part of the stack ripple through the system, slowing innovation and scaling efforts.

EDA eliminates these constraints. By decoupling workflows and enabling asynchronous communication, EDA allows different parts of the stack (agents, data sources, tools, and application layers) to function independently.

Take today’s AI stack, for example. MLOps teams manage pipelines like RAG, data scientists select models, and application developers build the interface and backend. A tightly coupled design forces all these teams into unnecessary interdependencies, slowing delivery and making it harder to adapt as new tools and techniques emerge.

In contrast, an event-driven system ensures that workflows stay loosely coupled, allowing each team to innovate independently.

Application layers don’t need to understand the AI’s internals; they simply consume results when needed. This decoupling also ensures AI insights don’t remain siloed. Outputs from agents can seamlessly integrate into CRMs, CDPs, analytics tools, and more, creating a unified, adaptable ecosystem.

Scaling Agents with Event-Driven Architecture

EDA is the backbone of this transition to agentic systems.

Its ability to decouple workflows while enabling real-time communication ensures that agents can operate efficiently at scale. Platforms like Apache Kafka exemplify the advantages of EDA in an agent-driven system:

Horizontal Scalability:

Kafka’s distributed design supports the addition of new agents or consumers without bottlenecks, ensuring the system grows effortlessly.

Low Latency:

Real-time event processing enables agents to respond instantly to changes, ensuring fast and reliable workflows.

Loose Coupling:

By communicating through Kafka topics rather than direct dependencies, agents remain independent and scalable.

Event Persistence:

Durable message storage ensures that no data is lost in transit, which is critical for high-reliability workflows.
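Two of the properties above, loose coupling and event persistence, come from the same idea: events are appended to a log, and each consumer tracks its own read position. The sketch below models that idea in plain Python. It is a simplified assumption-laden toy, not Kafka’s client API: `EventLog`, `poll`, and the consumer names are hypothetical, and everything lives in memory in one process, whereas Kafka persists records to disk and replicates them across brokers.

```python
class EventLog:
    """Toy append-only log: a single-process stand-in for one topic partition."""

    def __init__(self) -> None:
        self._records = []   # events are appended and never removed on read
        self._offsets = {}   # consumer name -> index of the next record to read

    def append(self, record: dict) -> int:
        """Store a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, consumer: str, max_records: int = 10) -> list:
        """Return unread records for this consumer and advance only its offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._records[start:start + max_records]
        self._offsets[consumer] = start + len(batch)
        return batch


log = EventLog()
for ticket_id in (101, 102, 103):
    log.append({"ticket_id": ticket_id})

first_read = log.poll("crm-sync")        # reads all three records
second_read = log.poll("crm-sync")       # nothing new for this consumer
late_joiner = log.poll("audit-agent")    # a new consumer still sees the full history

log.append({"ticket_id": 104})
catch_up = log.poll("crm-sync")          # only the record added since its last poll
```

Because reading does not remove records, a consumer added later (here `audit-agent`) can replay everything that came before it, and one consumer falling behind does not affect another.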

Agents as event producers and consumers on a real-time streaming platform (Source: Sean Falconer)

Data streaming enables the continuous flow of data throughout a business. A central nervous system acts as the unified backbone for real-time data flow, seamlessly connecting disparate systems, applications, and data sources to enable efficient agent communication and decision-making.

This architecture is a natural fit for frameworks like Anthropic’s Model Context Protocol (MCP).

MCP provides a universal standard for integrating AI systems with external tools, data sources, and applications, ensuring secure and seamless access to up-to-date information. By simplifying these connections, MCP reduces development effort while enabling context-aware decision-making.

EDA addresses many of the challenges MCP aims to solve. MCP requires seamless access to diverse data sources, real-time responsiveness, and scalability to support complex multi-agent workflows. By decoupling systems and enabling asynchronous communication, EDA simplifies integration and ensures agents can consume and produce events without rigid dependencies.

Event-Driven Agents Will Define the Future of AI

The AI landscape is evolving rapidly, and architectures must evolve with it.

And businesses are ready. A Forum Ventures survey found that 48% of senior IT leaders are prepared to integrate AI agents into operations, with 33% saying they’re very prepared. This shows a clear demand for systems that can scale and handle complexity.

EDA is the key to building agent systems that are flexible, resilient, and scalable. It decouples components, enables real-time workflows, and ensures agents can integrate seamlessly into broader ecosystems.

Those who adopt EDA won’t just survive; they’ll gain a competitive edge in this new wave of AI innovation. The rest? They risk being left behind, casualties of their own inability to scale.

About the author: Sean Falconer is an AI Entrepreneur in Residence at Confluent where he works on AI strategy and thought leadership. Sean’s been an academic, startup founder, and Googler. He has published works covering a wide range of topics from AI to quantum computing. Sean also hosts the popular engineering podcasts Software Engineering Daily and Software Huddle. You can connect with Sean on LinkedIn.
