One of the main hindrances to getting value from our data is that we have to get the data into a form that is ready for analysis. It sounds simple, but it rarely is. Consider the hoops we have to jump through when working with semi-structured data, like JSON, in relational databases such as PostgreSQL and MySQL.

JSON in Relational Databases

In the past, when it came to working with JSON data, we have had to choose between tools and platforms that worked well with JSON and tools that provided good support for analytics. JSON is a good match for document databases, such as MongoDB. It is not such a great match for relational databases (although a number have implemented JSON functions and types, which we will discuss below).

In software engineering terms, this is what is known as a high impedance mismatch. Relational databases are well suited to consistently structured data with the same attributes appearing over and over, row after row. JSON, on the other hand, is well suited to capturing data that varies in content and structure, and it has become an extremely common format for data exchange.

Now, consider what we have to do to load JSON data into a relational database. The first step is understanding the schema of the JSON data. This starts with identifying all attributes in the file and determining their data types. Some data types, like integers and strings, map neatly from JSON to relational database data types.
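The schema discovery step described above can be sketched in a few lines of Python. This is a minimal illustration, not a full profiler: the sample records, the `offices` attribute, and the helper names `json_type` and `infer_schema` are all hypothetical, and only top-level attributes are inspected.

```python
import json
from collections import defaultdict

def json_type(value):
    """Return the JSON type name for a decoded Python value."""
    # bool must be checked before int, because bool is a subclass of int in Python
    if value is None:
        return "null"
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "integer"
    if isinstance(value, float):
        return "number"
    if isinstance(value, str):
        return "string"
    if isinstance(value, list):
        return "array"
    return "object"

def infer_schema(records):
    """Map each top-level attribute to the set of JSON types observed for it."""
    schema = defaultdict(set)
    for record in records:
        for key, value in record.items():
            schema[key].add(json_type(value))
    return dict(schema)

# Hypothetical sample data: note that region_id is an integer in one record
# and a string in the other, and that only one record has an array attribute.
raw = """[
  {"region_id": 10, "region": "British Columbia", "nation": "Canada"},
  {"region_id": "11", "region": "Ontario", "offices": ["Toronto", "Ottawa"]}
]"""
schema = infer_schema(json.loads(raw))
```

An attribute that maps to more than one type, or to `array` or `object`, is a signal that it will not map directly onto a single relational column.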

Other data types require more thought. JSON has no native date type, so dates usually arrive as strings and may need to be reformatted or cast into a date or datetime data type.
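As a rough sketch of that casting step, the Python below tries a short list of formats in order. The list of formats is an assumption made for illustration; a real feed would need its own list, and ambiguous inputs such as day-first versus month-first dates have to be settled by knowing the source.

```python
from datetime import datetime

# Assumed input formats, tried in order. Adjust to match the actual data source.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%Y%m%d")

def to_date(value):
    """Parse a date string into a datetime.date, or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date()
        except ValueError:
            continue  # this format did not match, try the next one
    raise ValueError(f"unrecognized date format: {value!r}")

# All three spellings normalize to the same date value.
parsed = [to_date(s) for s in ("2019-03-07", "03/07/2019", "20190307")]
```

Raising on an unrecognized format, rather than passing the string through, keeps bad values out of a date column.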

Complex data types, like arrays and nested objects, don't map directly to native relational data structures, so more effort is needed to deal with them.
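One common way to handle an array is to split it out into rows of a child table that point back to the parent row. The sketch below assumes a hypothetical record with an `offices` array; the function name `split_array` and the `position` column are illustrative choices, not part of any library.

```python
def split_array(record, key_field, array_field):
    """Split one JSON record into a parent row and a list of child rows.

    Each array element becomes its own child row carrying the parent's key,
    which is how a one-to-many relationship is usually modeled relationally.
    """
    parent = {k: v for k, v in record.items() if k != array_field}
    children = [
        {key_field: record[key_field], "position": i, array_field: item}
        for i, item in enumerate(record.get(array_field, []))
    ]
    return parent, children

# Hypothetical record with an array attribute
record = {"region_id": 11, "region": "Ontario", "offices": ["Toronto", "Ottawa"]}
parent_row, child_rows = split_array(record, "region_id", "offices")
```

Keeping the element position in the child rows preserves the array order, which a relational table would otherwise not guarantee.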

Technique 1: Mapping JSON to a Table Structure

We can map JSON into a table structure using the database's built-in JSON functions. For example, assume a table called company_regions maintains tuples that include an id, a region, and a nation. One could insert a JSON structure using the built-in json_populate_record function in PostgreSQL, as in this example:

    INSERT INTO company_regions
    SELECT *
    FROM json_populate_record(NULL::company_regions,
      '{"region_id":"10","company_regions":"British Columbia","nation":"Canada"}');

The advantage of this approach is that we get the full benefits of relational databases, like the ability to query with SQL, with performance equal to querying structured data. The primary disadvantage is that we have to invest additional time to create extract, transform, and load (ETL) scripts to load this data; that is time we could spend analyzing data instead of transforming it. Also, complex data, like arrays and nesting, and unexpected data, such as a mix of string and integer types for a particular attribute, will cause problems for the ETL pipeline and database.
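To give a sense of the ETL code this approach demands, here is a minimal Python sketch of a validation step that would run before the insert. It reuses the keys from the JSON example above; the function names `coerce_region` and `load_batch`, and the decision to collect rejects rather than stop, are assumptions made for illustration.

```python
import json

def coerce_region(raw_json):
    """Decode one JSON document and coerce it to the expected column types."""
    record = json.loads(raw_json)
    # region_id may arrive as 10 or "10"; int() accepts both and rejects "ten"
    row = {"region_id": int(record["region_id"])}
    for field in ("company_regions", "nation"):
        value = record[field]
        if not isinstance(value, str):
            raise TypeError(f"{field} must be a string, got {type(value).__name__}")
        row[field] = value
    return row

def load_batch(lines):
    """Return (rows ready to insert, rejected inputs with the reason)."""
    rows, rejects = [], []
    for line in lines:
        try:
            rows.append(coerce_region(line))
        except (KeyError, TypeError, ValueError) as exc:
            rejects.append((line, repr(exc)))
    return rows, rejects

batch = [
    '{"region_id":"10","company_regions":"British Columbia","nation":"Canada"}',
    '{"region_id":"ten","company_regions":"Ontario","nation":"Canada"}',
    '{"region_id":12,"company_regions":["Quebec"],"nation":"Canada"}',
]
rows, rejects = load_batch(batch)
```

Even for three flat attributes, the script has to decide what to do with every unexpected shape, which is the maintenance cost described above.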

Technique 2: Storing JSON in a Table Column

Another option is to store the JSON in a table column. This feature is available in some relational database systems: PostgreSQL and MySQL both support columns of JSON type.

In PostgreSQL, for example, if a table called company_divisions has a column called division_info that stores JSON in the form of {"division_id": 10, "division_name":"Monetary Administration", "division_lead":"CFO"}, one could query the table using the ->> operator, which returns the selected value as text. For example:

    SELECT
      division_info->>'division_id' AS id,
      division_info->>'division_name' AS name,
      division_info->>'division_lead' AS lead
    FROM
      company_divisions;

If needed, we can also create indexes on data in JSON columns to speed up queries within PostgreSQL.

This approach has the advantage of requiring less ETL code to transform and load the data, but we lose some of the advantages of a relational model. We can still use SQL, but querying and analyzing the data in the JSON column will be less performant, due to a lack of statistics and less efficient indexing, than if we had transformed it into a table structure with native types.

A Better Alternative: Standard SQL on Fully Indexed JSON

There is a more natural way to achieve SQL analytics on JSON. Instead of trying to map data that naturally fits JSON into relational tables, we can use SQL to query JSON data directly.

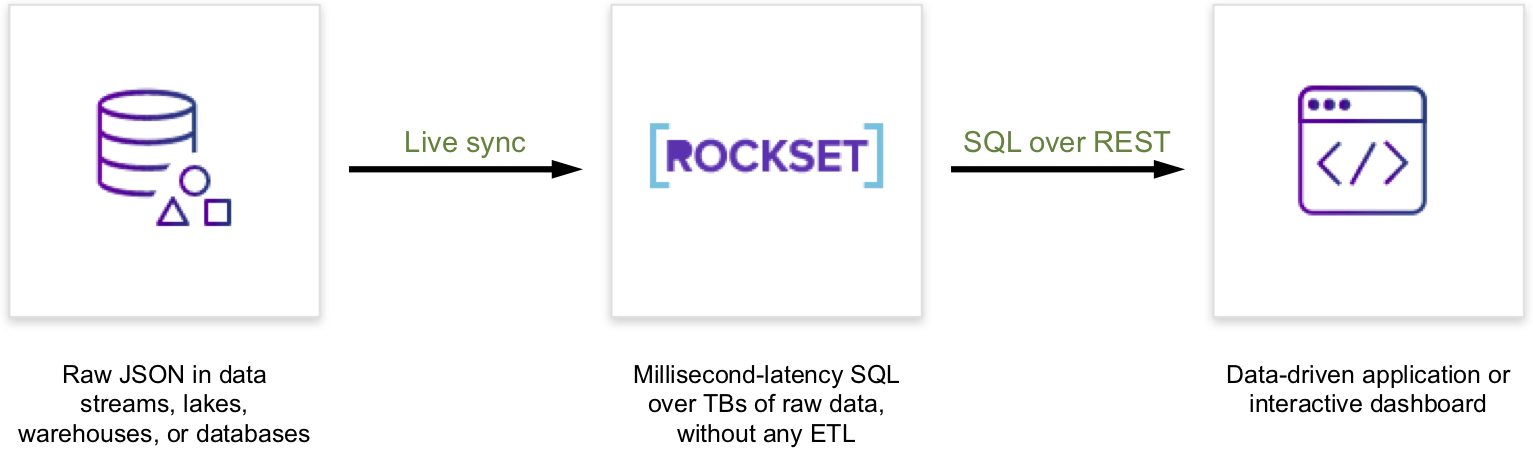

Rockset indexes JSON data as is and provides end users with a SQL interface for querying data to power apps and dashboards.

It continuously indexes new data as it arrives in data sources, so there are no extended periods of time in which the data queried is out of sync with the data sources. Another benefit is that since Rockset doesn't need a fixed schema, users can continue to ingest and index from data sources even when their schemas change.

The efficiencies gained are evident: we get to leave behind cumbersome ETL code, minimize our data pipeline, and leverage automatically generated indexes over all our data for better query performance.