You may have heard the well-known quote “Data is the new oil” by British mathematician Clive Humby. It is one of the most influential quotes describing the importance of data in the 21st century. However, after the explosive growth of large language models (LLMs) and their training, what we lack is data, because the development and training speed of LLMs nearly surpasses the rate at which humans generate data. The solution is either to make the data more refined and task-specific, or to generate synthetic data. The former puts more load on domain experts, while the latter is better suited to the huge data hunger of today’s problems.

High-quality training data remains a critical bottleneck. This blog post explores a practical approach to generating synthetic data using Llama 3.2 and Ollama. It demonstrates how we can create structured educational content programmatically.

Learning Outcomes

- Understand the importance and techniques of local synthetic data generation for enhancing machine learning model training.

- Learn how to implement local synthetic data generation to create high-quality datasets while preserving privacy and security.

- Gain practical knowledge of implementing robust error handling and retry mechanisms in data generation pipelines.

- Learn JSON validation and cleaning techniques, and their role in maintaining consistent and reliable outputs.

- Develop expertise in designing and using Pydantic models to ensure data schema integrity.

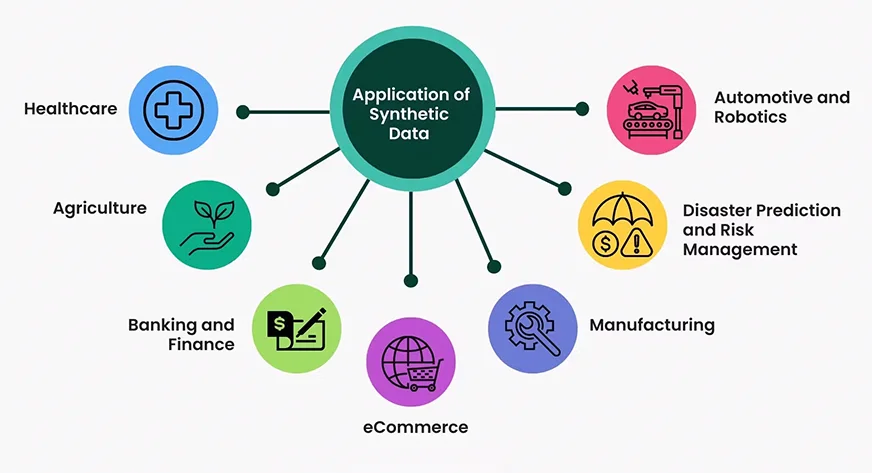

What is Synthetic Data?

Synthetic data refers to artificially generated information that mimics the characteristics of real-world data while preserving essential patterns and statistical properties. It is created using algorithms, simulations, or AI models to address privacy concerns, augment limited data, or test systems in controlled scenarios. Unlike real data, synthetic data can be tailored to specific requirements, ensuring diversity, balance, and scalability. It is widely used in fields like machine learning, healthcare, finance, and autonomous systems to train models, validate algorithms, or simulate environments. Synthetic data bridges the gap between data scarcity and real-world applications while reducing ethical and compliance risks.

Why Do We Need Synthetic Data Today?

The demand for synthetic data has grown rapidly due to several factors:

- Data Privacy Regulations: With GDPR and similar regulations, synthetic data offers a safe alternative for development and testing.

- Cost Efficiency: Collecting and annotating real data is expensive and time-consuming.

- Scalability: Synthetic data can be generated in large quantities with controlled variations.

- Edge Case Coverage: We can generate data for rare scenarios that might be difficult to collect naturally.

- Rapid Prototyping: Quick iteration on ML models without waiting for real data collection.

- Less Bias: Data collected from the real world may be error-prone and may contain gender bias, racist text, and language that is not safe for children, so a model trained on it inherits those biases. With synthetic data, we can control these behaviors more easily.

Impact on LLM and Small LM Performance

Synthetic data has shown promising results in improving both large and small language models:

- Fine-tuning Efficiency: Models fine-tuned on high-quality synthetic data often show performance comparable to those trained on real data.

- Domain Adaptation: Synthetic data helps bridge domain gaps in specialized applications.

- Data Augmentation: Combining synthetic and real data often yields better results than using either alone.

Project Structure and Environment Setup

In the following section, we’ll break down the project layout and guide you through configuring the required environment.

project/
├── main.py
├── requirements.txt
├── README.md
└── english_QA_new.json

Now we will set up our project environment using conda. Follow the steps below.

Create Conda Environment

$ conda create -n synthetic-data python=3.11

# activate the newly created env
$ conda activate synthetic-data

Install Libraries in the conda env

pip install pydantic langchain langchain-community
pip install langchain-ollama

Now we are all set up to start the code implementation.
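Before writing any code, it can help to confirm that the packages installed above are importable from the active environment. The following is a minimal sketch using only the standard library. The helper name `check_packages` is hypothetical, and the module names in the list are the import names assumed for the pip packages above (for example `langchain_community` for `langchain-community`). It also assumes that Ollama itself is installed and that the `llama3.2` model has already been pulled, which this script does not verify.

```python
from importlib.util import find_spec


def check_packages(module_names):
    """Return a dict mapping each top-level module name to True if it is importable."""
    return {name: find_spec(name) is not None for name in module_names}


if __name__ == "__main__":
    # Import names assumed for the packages installed with pip above.
    required = ["pydantic", "langchain", "langchain_community", "langchain_ollama"]
    for name, available in check_packages(required).items():
        status = "OK" if available else "MISSING"
        print(f"{name}: {status}")
```

Any package reported as MISSING most likely means that pip ran outside the synthetic-data environment.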

Project Implementation

In this section, we’ll delve into the practical implementation of the project, covering each step in detail.

Importing Libraries

Before starting the project, we will create a file named main.py in the project root and import all the libraries in that file:

from pydantic import BaseModel, Field, ValidationError
from langchain.prompts import PromptTemplate
from langchain_ollama import OllamaLLM
from typing import List
import json
import uuid
import re
from pathlib import Path
from time import sleep

Now it is time to continue the code implementation in the main.py file.

First, we start by implementing the data schema.

The EnglishQuestion data schema is a Pydantic model that ensures our generated data follows a consistent structure with required fields and automatic ID generation.

Code Implementation

class EnglishQuestion(BaseModel):
    id: str = Field(
        default_factory=lambda: str(uuid.uuid4()),
        description="Unique identifier for the question",
    )
    category: str = Field(..., description="Question Type")
    question: str = Field(..., description="The English language question")
    answer: str = Field(..., description="The correct answer to the question")
    thought_process: str = Field(
        ..., description="Explanation of the reasoning process to arrive at the answer"
    )

Now that we have created the EnglishQuestion data class, we can move on to the generator.

Second, we will implement the QuestionGenerator class. This class is the core of the project implementation.

QuestionGenerator Class Structure

class QuestionGenerator:
    def __init__(self, model_name: str, output_file: Path):
        pass

    def clean_json_string(self, text: str) -> str:
        pass

    def parse_response(self, result: str) -> EnglishQuestion:
        pass

    def generate_with_retries(self, category: str, retries: int = 3) -> EnglishQuestion:
        pass

    def generate_questions(
        self, categories: List[str], iterations: int
    ) -> List[EnglishQuestion]:
        pass

    def save_to_json(self, question: EnglishQuestion):
        pass

    def load_existing_data(self) -> List[dict]:
        pass

Let’s implement the key methods step by step.

Initialization

Initialize the class with a language model, a prompt template, and an output file. Here we create an instance of OllamaLLM with model_name and set up a PromptTemplate for generating question-and-answer pairs in a strict JSON format.
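Because the prompt asks for a strict JSON format, the template has to contain literal braces next to a real placeholder. PromptTemplate's default template format follows Python's `str.format` placeholder rules, so a single-braced name such as `{category}` is a variable, while doubled braces stand for literal ones. The sketch below illustrates this with plain `str.format` only (no LangChain involved); the `TEMPLATE` string is a shortened, hypothetical stand-in for the real prompt.

```python
# A shortened stand-in for the prompt; {category} is the only real placeholder.
TEMPLATE = (
    "Focus on {category}. Write the output in this JSON format:\n"
    '{{"question": "<your question>", "answer": "<the answer>"}}'
)

rendered = TEMPLATE.format(category="idioms")
print(rendered)  # the doubled braces are rendered as single literal braces

# Without doubling, the JSON key is misread as a placeholder name and formatting fails.
try:
    '{"question": "x"}'.format(category="idioms")
    unescaped_error = None
except (KeyError, ValueError) as exc:
    unescaped_error = type(exc).__name__

print(f"Unescaped braces raised: {unescaped_error}")
```

This is why the JSON skeleton inside a prompt template is written with doubled braces.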

Code Implementation:

    def __init__(self, model_name: str, output_file: Path):
        self.llm = OllamaLLM(model=model_name)
        # The JSON format below includes "category" because the EnglishQuestion
        # schema requires that field; without it, validation would always fail.
        self.prompt_template = PromptTemplate(
            input_variables=["category"],
            template="""
            Generate an English language question that tests understanding and usage.
            Focus on {category}. The question should be a fill-in-the-blank or one-liner and must not be MCQ type. Write the output in this strict JSON format:

            {{
                "category": "{category}",
                "question": "<your specific question>",
                "answer": "<the correct answer>",
                "thought_process": "<Explain reasoning to arrive at the answer>"
            }}

            Do not include any text outside of the JSON object.
            """,
        )
        self.output_file = output_file
        self.output_file.touch(exist_ok=True)

JSON Cleaning

The responses we get from the LLM during generation often contain unnecessary extra characters that can poison the generated data, so they must pass through a cleaning process.

Here, we fix common formatting issues in JSON keys and values using regex, replacing problematic characters such as newlines and special characters.
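To see why this step matters, here is a small standalone sketch that is not tied to the class in this post. The `raw` string is a made-up example of a noisy response, and `extract_json_object` is a hypothetical helper that applies only the two simplest ideas: keep the span between the first `{` and the last `}`, and neutralize characters that strict JSON does not allow inside strings.

```python
import json
import re

# A made-up raw response: prose around the JSON, plus a raw newline inside a
# string value, which strict JSON does not allow.
raw = 'Sure! Here is your question:\n{"question": "She ___ up\nearly.", "answer": "gets"}\nHope it helps.'


def extract_json_object(text: str) -> str:
    """Keep the span from the first '{' to the last '}' and normalise whitespace."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end == -1 or end < start:
        raise ValueError("No JSON object found")
    candidate = text[start : end + 1]
    # Replace newlines and carriage returns with spaces, then drop non-printable ASCII.
    candidate = candidate.replace("\n", " ").replace("\r", " ")
    return re.sub(r"[^\x20-\x7E]", "", candidate)


try:
    json.loads(raw)
    parsed_raw = True
except json.JSONDecodeError:
    parsed_raw = False  # the surrounding prose makes the raw text invalid JSON

cleaned = json.loads(extract_json_object(raw))
print(parsed_raw, cleaned)
```

One trade-off worth knowing: the character filter also removes legitimate non-ASCII characters such as accented letters, which is acceptable for English questions but may not be for other languages.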

Code implementation:

    def clean_json_string(self, text: str) -> str:
        """Improved version to handle malformed or incomplete JSON."""
        start = text.find("{")
        end = text.rfind("}")

        if start == -1 or end == -1:
            raise ValueError(f"No JSON object found. Response was: {text}")

        json_str = text[start : end + 1]

        # Remove any special characters that might break JSON parsing
        json_str = json_str.replace("\n", " ").replace("\r", " ")
        json_str = re.sub(r"[^\x20-\x7E]", "", json_str)

        # Fix common JSON formatting issues
        json_str = re.sub(
            r'(?<!\\)"([^"]*?)(?<!\\)":', r'"\1":', json_str
        )  # Fix key formatting
        json_str = re.sub(
            r':\s*"([^"]*?)(?<!\\)"(?=\s*[,}])', r': "\1"', json_str
        )  # Fix value formatting

        return json_str

Response Parsing

The parsing method uses the cleaning process above to clean the LLM response, converts the cleaned JSON into a Python dictionary, and maps the dictionary to an EnglishQuestion object, which validates it against the schema.

Code Implementation:

    def parse_response(self, result: str) -> EnglishQuestion:
        """Parse the LLM response and validate it against the schema."""
        cleaned_json = self.clean_json_string(result)
        parsed_result = json.loads(cleaned_json)
        return EnglishQuestion(**parsed_result)

Data Persistence

To persist the generated data we could use a NoSQL database (MongoDB, etc.), but here we use a simple JSON file.
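The class skeleton lists a save_to_json method, but its body is not shown in this post. The sketch below is one possible way to write the read-append-rewrite pattern as standalone functions. It is an assumption, not the author's implementation, and the names `load_records` and `append_record` are hypothetical. With a Pydantic v2 model, the record passed in could come from `question.model_dump()`.

```python
import json
import tempfile
from pathlib import Path


def load_records(path: Path) -> list:
    """Return the list stored in the file, or [] if it is missing, empty, or invalid."""
    try:
        with open(path, "r") as f:
            return json.load(f)
    except (FileNotFoundError, json.JSONDecodeError):
        return []


def append_record(path: Path, record: dict) -> None:
    """Read the existing list, append one record, and rewrite the whole file."""
    records = load_records(path)
    records.append(record)
    with open(path, "w") as f:
        json.dump(records, f, indent=4)


if __name__ == "__main__":
    # Demo in a temporary directory so no real project file is touched.
    with tempfile.TemporaryDirectory() as tmp:
        demo_file = Path(tmp) / "demo.json"
        append_record(demo_file, {"category": "idioms", "question": "q1"})
        append_record(demo_file, {"category": "vocabulary", "question": "q2"})
        print(len(load_records(demo_file)))
```

Rewriting the whole file on every save is simple and fine for a few hundred records; for much larger runs, an append-only format such as JSON Lines would avoid re-reading the file each time.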

Code Implementation:

    def load_existing_data(self) -> List[dict]:
        """Load existing questions from the JSON file."""
        try:
            with open(self.output_file, "r") as f:
                return json.load(f)
        except (FileNotFoundError, json.JSONDecodeError):
            return []

Robust Generation

In this data generation phase, we have two important methods:

- Generate with retry mechanism

- Question generation method

The purpose of the retry mechanism is to make the automation try again when a generation attempt fails. It tries to generate a question several times (the default is three), logs errors, and adds a delay between retries. It raises an exception if all attempts fail.
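The retry idea can be tried in isolation before wiring it to an LLM. The following is a minimal generic sketch: `call_with_retries` and `flaky` are hypothetical names, and `flaky` simply simulates a call that fails twice before succeeding. Unlike the method shown next, this version does not sleep after the final failed attempt.

```python
from time import sleep


def call_with_retries(func, retries: int = 3, delay: float = 2.0):
    """Call func() up to `retries` times, sleeping `delay` seconds between failures."""
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return func()
        except Exception as exc:  # broad on purpose: any failure triggers a retry
            last_error = exc
            print(f"Attempt {attempt}/{retries} failed: {exc}")
            if attempt < retries:
                sleep(delay)
    raise ValueError(f"All {retries} attempts failed") from last_error


# Simulated flaky call: fails twice, then succeeds.
calls = {"count": 0}


def flaky():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary failure")
    return "ok"


result = call_with_retries(flaky, retries=3, delay=0)
print(result, calls["count"])
```

Chaining the final ValueError to the last underlying exception keeps the original cause visible in the traceback.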

Code Implementation:

    def generate_with_retries(self, category: str, retries: int = 3) -> EnglishQuestion:
        for attempt in range(retries):
            try:
                # Pipe the prompt into the model and run the resulting chain
                chain = self.prompt_template | self.llm
                response = chain.invoke(input={"category": category})
                return self.parse_response(response)
            except Exception as e:
                print(
                    f"Attempt {attempt + 1}/{retries} failed for category '{category}': {e}"
                )
                sleep(2)  # Small delay before retry
        raise ValueError(
            f"Failed to process category '{category}' after {retries} attempts."
        )

The question generation method generates multiple questions for a list of categories and saves them to storage (here, a JSON file). It iterates over the categories and calls the generate_with_retries method for each one. Finally, it saves each successfully generated question using the save_to_json method.

def generate_questions(

self, classes: Record[str], iterations: int

) -> Record[EnglishQuestion]:

"""Generate a number of questions for an inventory of classes."""

all_questions = []

for _ in vary(iterations):

for class in classes:

attempt:

query = self.generate_with_retries(class)

self.save_to_json(query)

all_questions.append(query)

print(f"Efficiently generated query for class: {class}")

besides (ValidationError, ValueError) as e:

print(f"Error processing class '{class}': {e}")

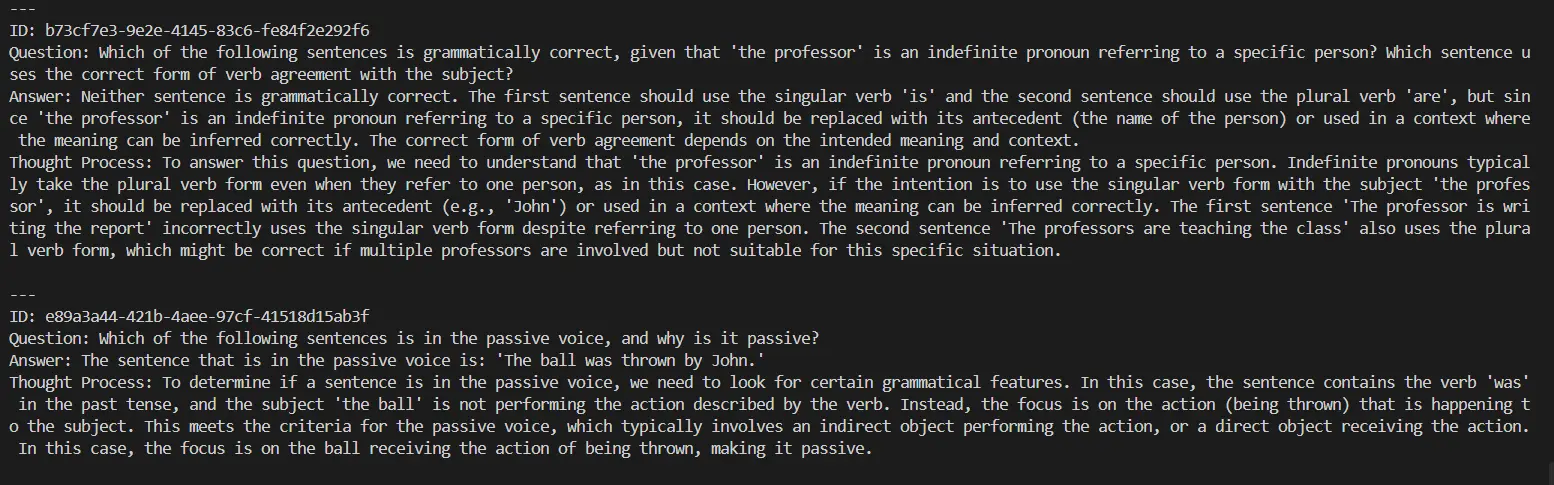

return all_questionsDisplaying the outcomes on the terminal

To get an idea of the responses the LLM produces, here is a simple printing function.

def display_questions(questions: List[EnglishQuestion]):
    print("\nGenerated English Questions:")
    for question in questions:
        print("\n---")
        print(f"ID: {question.id}")
        print(f"Question: {question.question}")
        print(f"Answer: {question.answer}")
        print(f"Thought Process: {question.thought_process}")

Testing the Automation

Before running your project, create an english_QA_new.json file in the project root.

if __name__ == "__main__":
    OUTPUT_FILE = Path("english_QA_new.json")
    generator = QuestionGenerator(model_name="llama3.2", output_file=OUTPUT_FILE)

    categories = [
        "word usage",
        "Phrasal Verbs",
        "vocabulary",
        "idioms",
    ]
    iterations = 2

    generated_questions = generator.generate_questions(categories, iterations)
    display_questions(generated_questions)

Now, go to the terminal and type:

python main.py

Output:

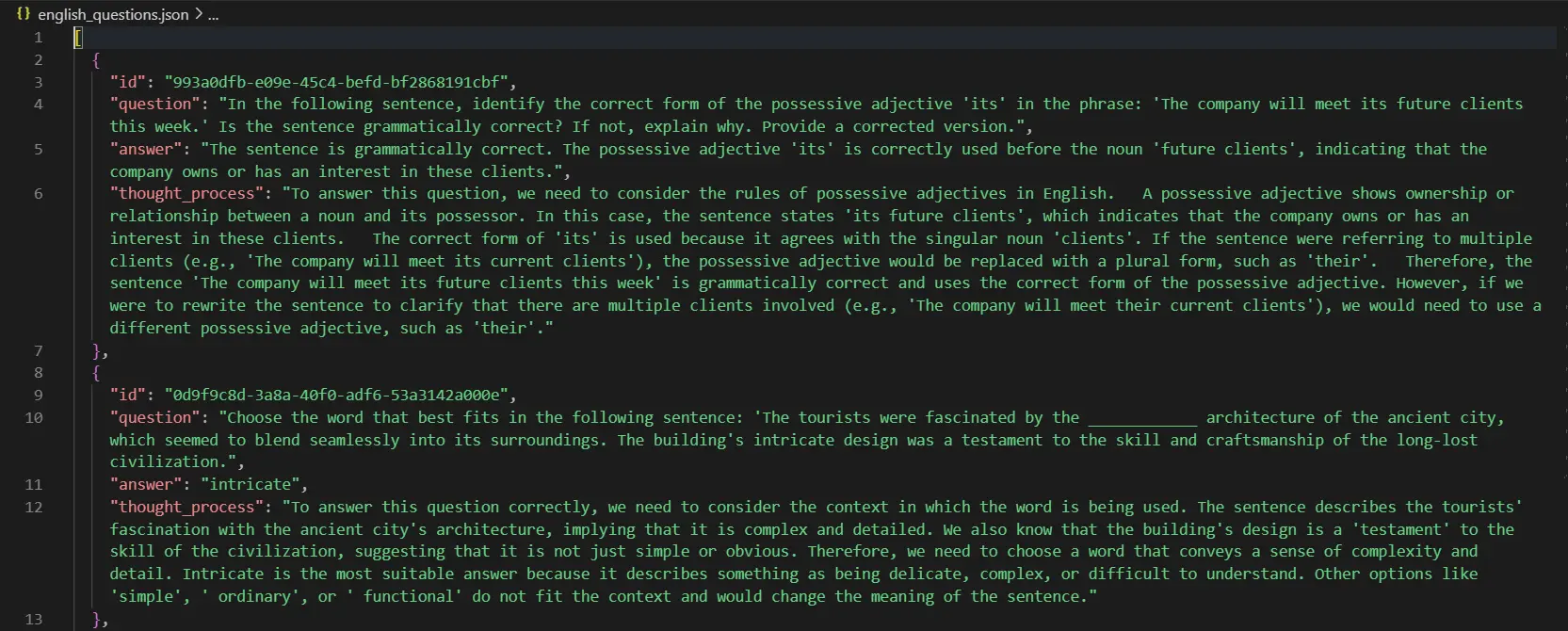

These questions will be saved in your project root. Saved questions look like:

All the code used in this project is here.
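To check what a run actually wrote to disk, a small inspection script can be handy. The sketch below assumes the output file holds a JSON list of objects that each carry a "category" key, as the EnglishQuestion schema suggests; the function names `count_categories` and `summarize_file` are hypothetical.

```python
import json
from collections import Counter
from pathlib import Path


def count_categories(records) -> Counter:
    """Count records per 'category' value; records without one count as 'unknown'."""
    return Counter(record.get("category", "unknown") for record in records)


def summarize_file(path: Path) -> Counter:
    """Load a JSON list from `path` and count its categories; empty Counter if unreadable."""
    try:
        records = json.loads(path.read_text())
    except (FileNotFoundError, json.JSONDecodeError):
        return Counter()
    return count_categories(records)


if __name__ == "__main__":
    # File name taken from the project layout described earlier.
    for category, count in summarize_file(Path("english_QA_new.json")).items():
        print(f"{category}: {count}")
```

With two iterations over four categories, each category would be expected to appear at most twice, fewer if some generations failed after all retries.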

Conclusion

Synthetic data generation has emerged as a powerful solution to the growing demand for high-quality training datasets in an era of rapid advances in AI and LLMs. By leveraging tools like Llama 3.2 and Ollama, together with robust frameworks like Pydantic, we can create structured, scalable, and bias-free datasets tailored to specific needs. This approach not only reduces dependency on costly and time-consuming real-world data collection but also helps ensure privacy and ethical compliance. As we refine these methodologies, synthetic data will continue to play a pivotal role in driving innovation, improving model performance, and unlocking new possibilities in diverse fields.

Key Takeaways

- Local synthetic data generation enables the creation of diverse datasets that can improve model accuracy without compromising privacy.

- Implementing local synthetic data generation can significantly enhance data security by minimizing reliance on sensitive real-world data.

- Synthetic data ensures privacy, reduces biases, and lowers data collection costs.

- Tailored datasets improve adaptability across diverse AI and LLM applications.

- Synthetic data paves the way for ethical, efficient, and innovative AI development.

Frequently Asked Questions

A. Ollama provides local deployment capabilities, reducing cost and latency while offering more control over the generation process.

A. To maintain quality, the implementation uses Pydantic validation, retry mechanisms, and JSON cleaning. Additional metrics and validation can also be implemented.

A. Local LLMs may produce lower-quality output than larger models, and generation speed can be limited by local computing resources.

A. Yes, synthetic data ensures privacy by removing identifiable information and promotes ethical AI development by addressing data biases and reducing the dependency on sensitive real-world data.

A. Challenges include ensuring data realism, maintaining domain relevance, and aligning synthetic data characteristics with real-world use cases for effective model training.