Cloudera DataFlow for the Public Cloud (CDF-PC) is a complete self-service streaming data capture and movement platform based on Apache NiFi. It allows developers to interactively design data flows in a drag-and-drop designer, which can be deployed as continuously running, auto-scaling flow deployments or event-driven serverless functions. CDF-PC comes with an out-of-the-box monitoring dashboard for data flow health and performance monitoring. Key performance indicators (KPIs) and associated alerts help customers monitor what matters for their use cases.

Many organizations have invested in central monitoring and observability tools such as Prometheus and Grafana and are looking for ways to integrate key data flow metrics into their existing architecture.

In this blog we will dive into how CDF-PC's support for NiFi reporting tasks can be used to monitor key metrics in Prometheus and Grafana.

Target architecture: connecting the pieces

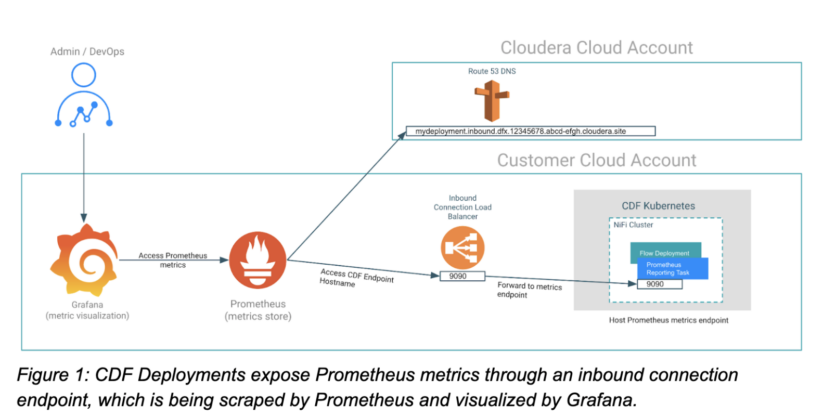

The key to insightful Grafana dashboards is having access to relevant application metrics. In our case, these are the NiFi metrics of our flow deployment. Before we can build dashboards in Grafana, we therefore need to expose NiFi metrics so that Prometheus can scrape them. CDF-PC's support for Prometheus reporting tasks and inbound connections allows Prometheus to scrape metrics in real time. Once the metrics are in Prometheus, querying them and building dashboards on top of them in Grafana is straightforward. So let's take a closer look at how we get from having metrics in our flow deployment to a fully featured Grafana dashboard by implementing the target architecture shown in Figure 1.

Configuring a CDF deployment to be scraped by Prometheus

Starting with CDF-PC 2.6.1, you can now programmatically create NiFi reporting tasks to make relevant metrics available to various third-party monitoring systems. The Prometheus reporting task that we'll use for this example creates an HTTP(S) metrics endpoint that can be scraped by Prometheus agents or servers. To use this reporting task in a CDF-PC deployment, we have to complete the following steps:

- Make sure that the HTTP(S) metrics endpoint is reachable from Prometheus by configuring an inbound connections endpoint when creating the deployment.

- Create and configure the Prometheus reporting task using the CDP CLI after the deployment has been created successfully.

Creating a deployment with an inbound connections endpoint

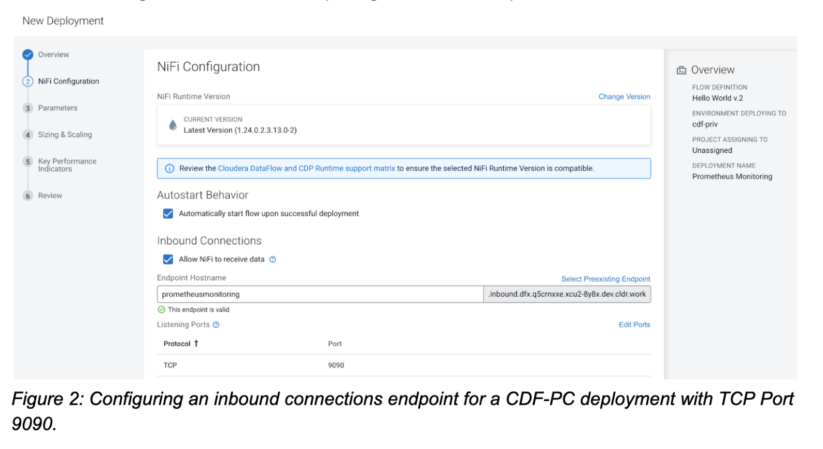

When creating a deployment, CDF-PC gives you the option to allow NiFi to receive data by configuring an inbound connections endpoint. When the option is checked, CDF-PC will suggest an endpoint hostname that you can customize as needed.

The inbound connections endpoint gives external applications the ability to send data to a deployment or, in our case, to connect to a deployment and scrape its metrics. In addition to the endpoint hostname, we also have to provide at least one port that we want to expose. In our case we're using port 9090 and exposing it with the TCP protocol.
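Before you wire up Prometheus, it can be useful to check that the endpoint hostname accepts TCP connections on the exposed port from the machine that will run Prometheus. The following is a minimal Python sketch that uses only the standard library. The function name `is_port_reachable` is hypothetical. A successful connect shows only that the port is open, not that metrics are being served, because the reporting task is created in a later step.

```python
import socket


def is_port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the hostname and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # DNS failures, refused connections and timeouts are all OSError subclasses.
        return False
```

For example, `is_port_reachable("<your endpoint hostname>", 9090)` should return `True` once the deployment is up and the network path from the Prometheus host is open.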

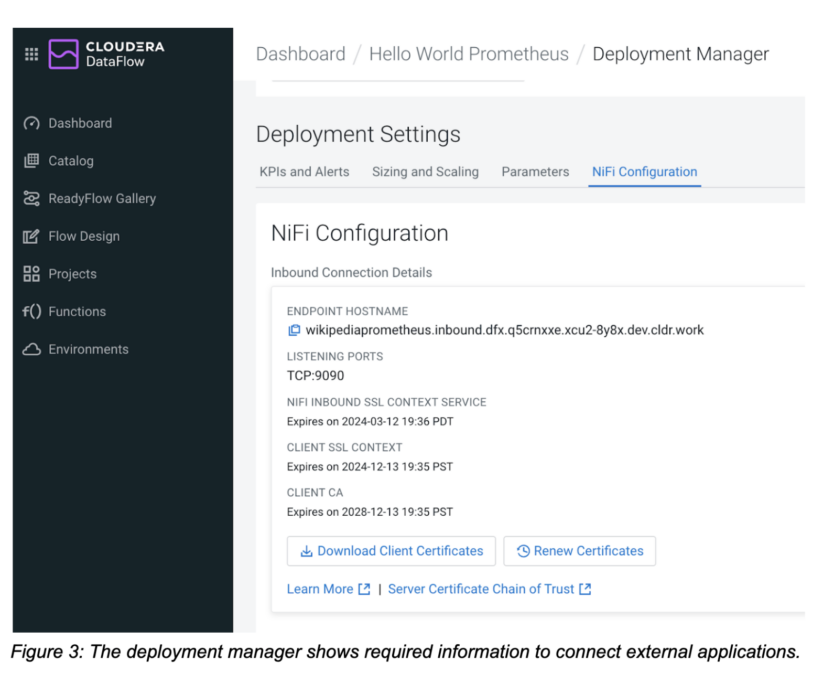

After you have created your deployment with an inbound connections endpoint, navigate to the NiFi Configuration tab in the Deployment Manager. There you will see all the information you need to connect external applications to the deployment. With the deployment in place, we can move on to the next step: creating the reporting task.

Creating and configuring the NiFi Prometheus reporting task

We can now use the CDP CLI to create and configure the Prometheus reporting task. Download and configure the CDP CLI. Run `cdp --version` to make sure that you are using at least version 0.9.101.
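If you automate this check, for example in a CI pipeline, compare version numbers numerically rather than as strings. Compared as strings, "0.9.99" would sort after "0.9.101". The minimal sketch below assumes you have already extracted a dotted version string such as "0.9.101" from the output of `cdp --version`. The exact output format is not covered here, so that extraction step is left out. The helper names are hypothetical.

```python
MINIMUM_CDP_CLI_VERSION = "0.9.101"


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version string like '0.9.101' into (0, 9, 101)."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_minimum(installed: str, minimum: str = MINIMUM_CDP_CLI_VERSION) -> bool:
    """Compare versions as integer tuples, not lexically as strings."""
    return parse_version(installed) >= parse_version(minimum)
```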

The command we're going to use is `cdp dfworkload create-reporting-task`. It requires the deployment CRN, the environment CRN and a JSON definition of the reporting task that we want to create. Copy the deployment CRN from the Deployment Manager, get the environment CRN of the relevant CDP environment, and start building the command:

```shell
cdp dfworkload create-reporting-task \
  --deployment-crn crn:cdp:df:us-west-1:9d74eee4-1cad-45d7-b645-7ccf9edbb73d:deployment:eb2717f3-1bdf-4150-bd33-5b15d715bc7d/5cdc4d43-2991-4d4c-99fc-c400cd15853d \
  --environment-crn crn:cdp:environments:us-west-1:9d74eee4-1cad-45d7-b645-7ccf9edbb73d:environment:bf58748f-7ef4-477a-9c63-448b51e5c98f
```

The missing piece is the definition of the reporting task we want to create. Every supported reporting task can be passed in as a JSON configuration. Here's the JSON configuration for our Prometheus reporting task. It starts with a section of Prometheus-specific properties. That section is followed by generic reporting task settings: whether the task should be started, how frequently it should run and how it should be scheduled.

```json
{
  "name": "PrometheusReportingTask",
  "type": "org.apache.nifi.reporting.prometheus.PrometheusReportingTask",
  "properties": {
    "prometheus-reporting-task-metrics-endpoint-port": "9090",
    "prometheus-reporting-task-metrics-strategy": "All Components",
    "prometheus-reporting-task-instance-id": "${hostname(true)}",
    "prometheus-reporting-task-client-auth": "No Authentication",
    "prometheus-reporting-task-metrics-send-jvm": "false"
  },
  "propertyDescriptors": {},
  "scheduledState": "RUNNING",
  "schedulingPeriod": "60 sec",
  "schedulingStrategy": "TIMER_DRIVEN",
  "componentType": "REPORTING_TASK"
}
```
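If you generate the reporting task definition from a script, for example as part of a deployment pipeline, you can build it as a Python dictionary. You can then write it to the `prometheus_reporting_task.json` file that is referenced later in this post. This minimal sketch follows the reporting task definition above. The helper names and their parameters are hypothetical.

```python
import json
from pathlib import Path


def build_reporting_task(port: int = 9090, send_jvm: bool = False) -> dict:
    """Build the Prometheus reporting task definition as a dictionary."""
    return {
        "name": "PrometheusReportingTask",
        "type": "org.apache.nifi.reporting.prometheus.PrometheusReportingTask",
        "properties": {
            # Must match the port exposed by the inbound connections endpoint.
            "prometheus-reporting-task-metrics-endpoint-port": str(port),
            "prometheus-reporting-task-metrics-strategy": "All Components",
            # A NiFi Expression Language value, passed through as a literal string.
            "prometheus-reporting-task-instance-id": "${hostname(true)}",
            "prometheus-reporting-task-client-auth": "No Authentication",
            "prometheus-reporting-task-metrics-send-jvm": str(send_jvm).lower(),
        },
        "propertyDescriptors": {},
        "scheduledState": "RUNNING",
        "schedulingPeriod": "60 sec",
        "schedulingStrategy": "TIMER_DRIVEN",
        "componentType": "REPORTING_TASK",
    }


def write_reporting_task(path: str, task: dict) -> Path:
    """Write the definition as pretty-printed JSON and return the file path."""
    target = Path(path)
    target.write_text(json.dumps(task, indent=2) + "\n", encoding="utf-8")
    return target
```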

| Configuration Property | Description |
| --- | --- |
| prometheus-reporting-task-metrics-endpoint-port | The port that this reporting task uses to expose metrics. This port must match the port you specified earlier when configuring the inbound connections endpoint. |
| prometheus-reporting-task-metrics-strategy | Defines the granularity at which metrics are reported. Supported values are "All Components," "Root Process Group," and "All Process Groups." Use this to limit metrics as needed. |
| prometheus-reporting-task-instance-id | The ID that is sent along with the metrics. You can use this property to identify your deployments in Prometheus. |
| prometheus-reporting-task-client-auth | Whether the endpoint requires client authentication. Supported values are "No Authentication," "Want Authentication," and "Need Authentication." |
| prometheus-reporting-task-metrics-send-jvm | Defines whether JVM metrics are also exposed. Supported values are "true" and "false." |

Table 1: Prometheus configuration properties of the NiFi Prometheus reporting task.
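You can check the allowed values from Table 1 before calling the CLI. This catches mistakes such as a misspelled strategy name or a port that doesn't match the inbound connections endpoint. The sketch below encodes only the values listed in Table 1. The function and constant names are hypothetical, and NiFi itself remains the authority on what it accepts.

```python
# Allowed values as listed in Table 1.
ALLOWED_VALUES = {
    "prometheus-reporting-task-metrics-strategy": {
        "All Components", "Root Process Group", "All Process Groups",
    },
    "prometheus-reporting-task-client-auth": {
        "No Authentication", "Want Authentication", "Need Authentication",
    },
    "prometheus-reporting-task-metrics-send-jvm": {"true", "false"},
}

PORT_KEY = "prometheus-reporting-task-metrics-endpoint-port"


def validate_properties(properties: dict[str, str], inbound_port: int) -> list[str]:
    """Return a list of problems found in the reporting task properties."""
    errors = []
    for key, allowed in ALLOWED_VALUES.items():
        value = properties.get(key)
        if value is not None and value not in allowed:
            errors.append(f"{key}: {value!r} is not one of {sorted(allowed)}")
    # The metrics port must match the port exposed by the inbound connections endpoint.
    if properties.get(PORT_KEY) != str(inbound_port):
        errors.append(
            f"{PORT_KEY}: {properties.get(PORT_KEY)!r} does not match inbound port {inbound_port}"
        )
    return errors
```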

You can either pass the JSON content as a parameter to the CLI command or reference a file. Let's assume we've saved the above JSON content in a file called prometheus_reporting_task.json.

Now we can assemble the final CLI command that creates the reporting task:

```shell
cdp dfworkload create-reporting-task \
  --deployment-crn crn:cdp:df:us-west-1:9d74eee4-1cad-45d7-b645-7ccf9edbb73d:deployment:eb2717f3-1bdf-4150-bd33-5b15d715bc7d/5cdc4d43-2991-4d4c-99fc-c400cd15853d \
  --environment-crn crn:cdp:environments:us-west-1:9d74eee4-1cad-45d7-b645-7ccf9edbb73d:environment:bf58748f-7ef4-477a-9c63-448b51e5c98f \
  --file-path prometheus_reporting_task.json
```
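To run this step for every new deployment, you can assemble the command from variables instead of pasting CRNs by hand. Here's a minimal Python sketch that builds the argument list using only the flags shown above and runs it with `subprocess`. It assumes that the `cdp` executable is on the `PATH` and already configured with credentials. The function names are hypothetical.

```python
import subprocess


def build_create_reporting_task_cmd(
    deployment_crn: str, environment_crn: str, file_path: str
) -> list[str]:
    """Assemble the CDP CLI arguments as a list (no shell quoting needed)."""
    return [
        "cdp", "dfworkload", "create-reporting-task",
        "--deployment-crn", deployment_crn,
        "--environment-crn", environment_crn,
        "--file-path", file_path,
    ]


def create_reporting_task(deployment_crn: str, environment_crn: str, file_path: str) -> str:
    """Run the CLI and return its standard output; raises CalledProcessError on failure."""
    cmd = build_create_reporting_task_cmd(deployment_crn, environment_crn, file_path)
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout
```

Passing the arguments as a list rather than as one shell string avoids quoting problems with the long CRN values.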

After you run the command, you should get a response back that contains the reporting task CRN:

```json
{ "crn": "crn:cdp:df:us-west-1:9d74eee4-1cad-45d7-b645-7ccf9edbb73d:reportingTask:eb2717f3-1bdf-4150-bd33-5b15d715bc7d/66a746af-018c-1000-0000-00005212b3ea" }
```
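In a script, you'll usually want to keep the returned CRN so that you can reference the reporting task later. Assuming the CLI prints a JSON response like the one above to standard output, you can parse it with the `json` module. How the CRN is split here is inferred only from the shape of the example CRN, not from a documented format. In particular, the assumption that the last path segment identifies the reporting task is unverified, and the function name is hypothetical.

```python
import json


def parse_reporting_task_crn(cli_output: str) -> dict[str, str]:
    """Extract the CRN and its parts from the CLI's JSON response."""
    crn = json.loads(cli_output)["crn"]
    # Assumed layout, based on the example: crn:cdp:df:<region>:<account>:reportingTask:<resource>
    parts = crn.split(":", 6)
    if len(parts) != 7 or parts[0] != "crn" or parts[5] != "reportingTask":
        raise ValueError(f"unexpected CRN format: {crn}")
    resource = parts[6]
    return {
        "crn": crn,
        "region": parts[3],
        "account_id": parts[4],
        "resource": resource,
        # Last path segment; assumed to identify the reporting task itself.
        "resource_id": resource.rsplit("/", 1)[-1],
    }
```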

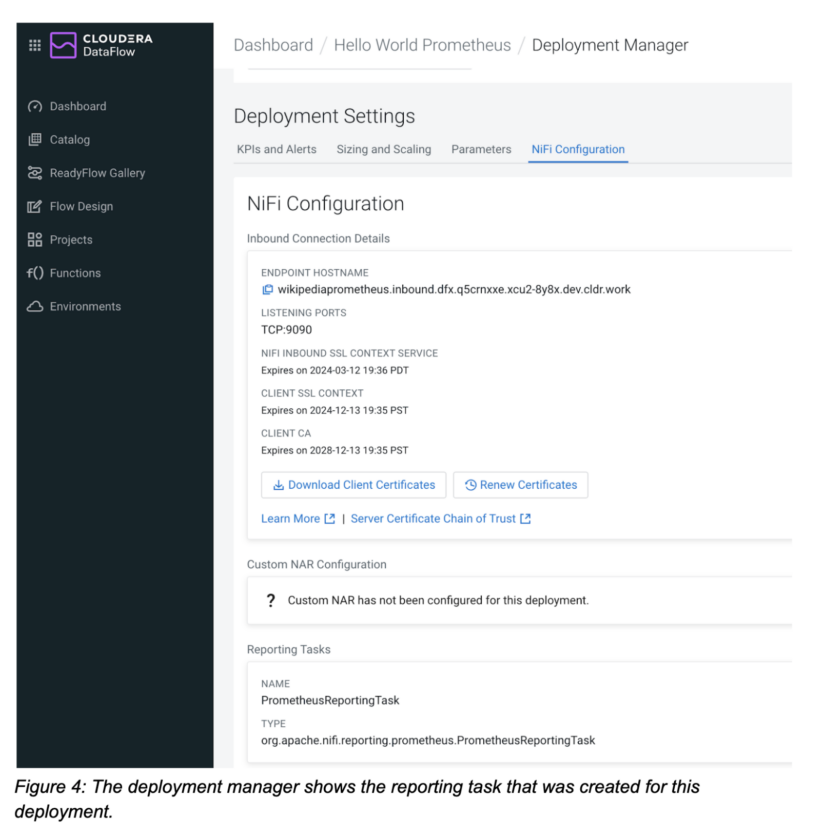

To confirm that the reporting task was created, navigate to the NiFi Configuration tab in the Deployment Manager. Verify that the Reporting Tasks section lists the reporting tasks you created using the CLI.

Now that our flow deployment and reporting task have been created, we can move on to the next step and configure the Prometheus server to scrape this deployment.

Configuring Prometheus to monitor a CDF deployment

To define a new scrape target for Prometheus, we need to edit the Prometheus configuration file. Open the prometheus.yaml file to add the CDF deployment as a target.

Create a new job, for example with "CDF Deployment" as its name. Next, copy the endpoint hostname of your CDF deployment from the Deployment Manager and add it as a new target, together with the port you exposed.

```yaml
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "CDF Deployment"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["wikipediaprometheus.inbound.dfx.q5crnxxe.xcu2-8y8x.dev.cldr.work:9090"]
```

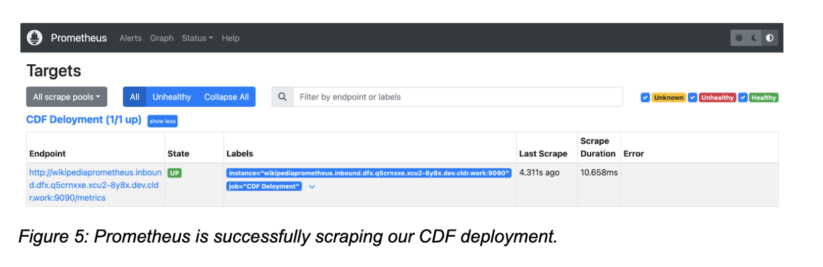

Apply the configuration changes and open the Prometheus web console to confirm that our CDF deployment is being scraped. Go to Status → Targets and verify that your CDF Deployment target is "Up."
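You can also script this check against the Prometheus HTTP API. Its `/api/v1/targets` endpoint lists the active targets together with their labels and a `health` field ("up", "down" or "unknown"). The sketch below assumes that Prometheus is reachable at a base URL you supply, such as the placeholder `http://localhost:9090`, and that the job is named "CDF Deployment" as in the configuration above. The function names are hypothetical.

```python
import json
import urllib.request


def fetch_targets(prometheus_url: str) -> dict:
    """Fetch the decoded JSON response of the Prometheus /api/v1/targets endpoint."""
    with urllib.request.urlopen(f"{prometheus_url}/api/v1/targets", timeout=10) as resp:
        return json.load(resp)


def job_health(targets_response: dict, job_name: str) -> list[str]:
    """Return the health of every active target whose `job` label matches job_name."""
    return [
        target.get("health", "unknown")
        for target in targets_response["data"]["activeTargets"]
        if target.get("labels", {}).get("job") == job_name
    ]
```

For example, `job_health(fetch_targets("http://localhost:9090"), "CDF Deployment")` should return `["up"]` once scraping works.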

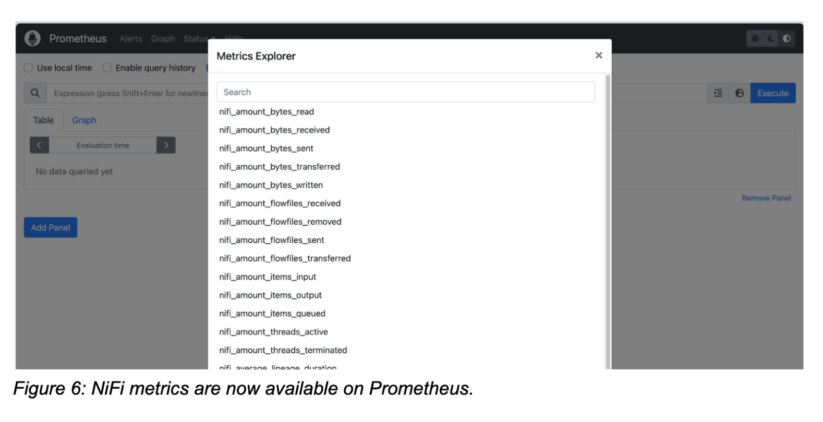

Once Prometheus has started scraping, you can find all NiFi metrics in the metrics explorer and start building your Prometheus queries.
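To see which metric names the reporting task exposes without going through Prometheus, you can read the endpoint directly. The sketch below fetches the text exposition format from `/metrics`, which is the Prometheus default path according to the configuration comment above. It collects the distinct metric names and skips `#` lines such as `# HELP` and `# TYPE`. This is a deliberately simplified parser rather than a full implementation of the exposition format, and the function names are hypothetical.

```python
import urllib.request


def fetch_metrics(host: str, port: int = 9090) -> str:
    """Download the plain-text metrics page from the reporting task endpoint."""
    with urllib.request.urlopen(f"http://{host}:{port}/metrics", timeout=10) as resp:
        return resp.read().decode("utf-8")


def metric_names(exposition_text: str) -> set[str]:
    """Collect distinct metric names, ignoring comments and blank lines."""
    names = set()
    for raw_line in exposition_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        # The name ends at the first '{' (labels follow) or whitespace (value follows).
        end = len(line)
        for separator in ("{", " ", "\t"):
            index = line.find(separator)
            if index != -1:
                end = min(end, index)
        names.add(line[:end])
    return names
```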

Sample Grafana dashboard

Grafana is a popular choice for visualizing Prometheus metrics, and it makes it easy to monitor the key NiFi metrics of our deployment.

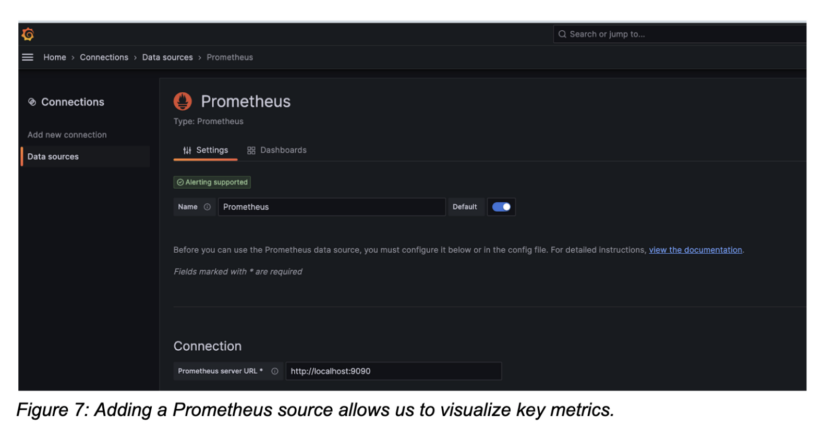

Create a Prometheus connection to make all metrics and queries available in Grafana.

Now that Grafana is connected to Prometheus, you can create a new dashboard and add visualizations.

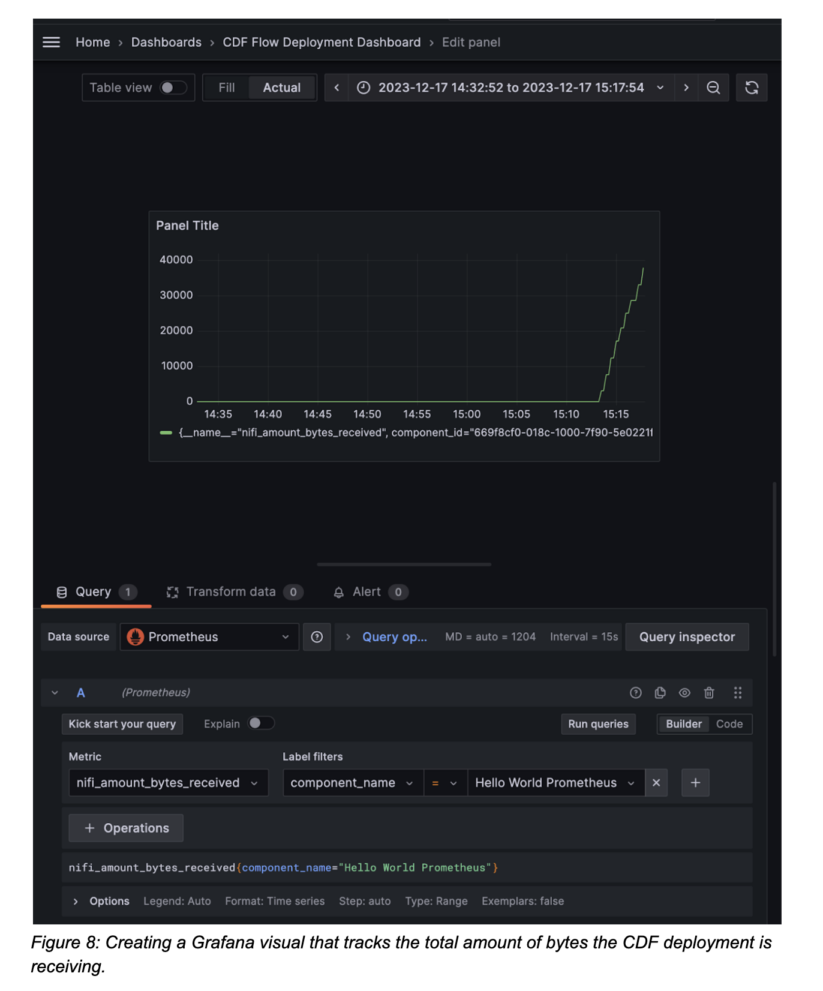

Let's say we want to create a graph of the data that this deployment has received from external sources. Select "Add visualization" in your dashboard and make sure your Prometheus connection is selected as the data source.

Select the nifi_amount_bytes_received metric. Use the label filters to narrow it down to a component in the flow. By filtering on component_name with the value "Hello World Prometheus," we monitor the bytes received by the entire process group, which is the whole flow in this case. Alternatively, you can leave the filter out to monitor all components, or filter on individual processors.
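The label filter above corresponds to a PromQL instant vector selector. If you want to run the same query outside Grafana, for example from a script, the sketch below builds the selector and a URL for the Prometheus `/api/v1/query` endpoint. The Prometheus base URL is a placeholder and the helper names are hypothetical. The label value escaping covers only backslashes, double quotes and newlines.

```python
from urllib.parse import urlencode


def build_selector(metric: str, labels: dict[str, str]) -> str:
    """Build a PromQL selector such as metric{label="value"}."""
    if not labels:
        return metric

    def escape(value: str) -> str:
        # Escape characters that are special inside a double-quoted PromQL string.
        return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")

    matchers = ",".join(f'{name}="{escape(value)}"' for name, value in labels.items())
    return f"{metric}{{{matchers}}}"


def query_url(prometheus_url: str, promql: str) -> str:
    """Build an instant-query URL; urlencode takes care of URL-escaping the query."""
    return f"{prometheus_url}/api/v1/query?{urlencode({'query': promql})}"
```

For example, `build_selector("nifi_amount_bytes_received", {"component_name": "Hello World Prometheus"})` returns `nifi_amount_bytes_received{component_name="Hello World Prometheus"}`.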

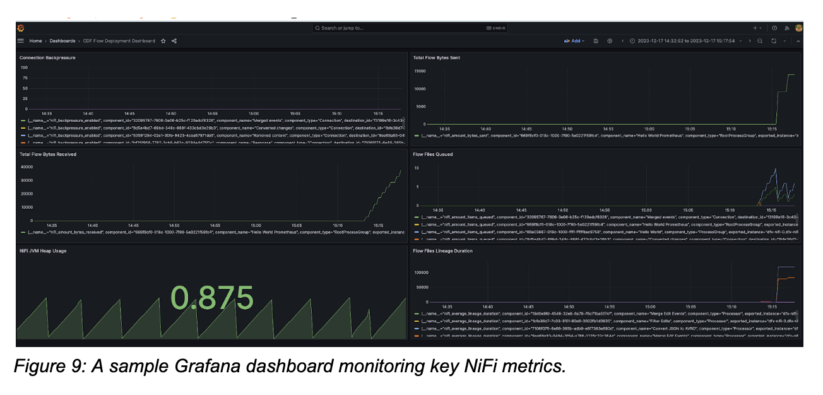

With all NiFi metrics available in Grafana, we can now build a full dashboard that monitors all relevant metrics. The example dashboard tracks total bytes received and sent, the number of flow files queued across all components, the average lineage duration and the current NiFi JVM heap usage. Together, these help us understand how our flows are doing.
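If you manage dashboards as code, the panel queries can live in a small mapping. In the sketch below, only `nifi_amount_bytes_received` and the `component_name` label appear in this post. The other metric names are assumptions based on NiFi's naming scheme, so confirm them in the Prometheus metrics explorer before you rely on them. Also note that, per Table 1, JVM metrics are exposed only when `prometheus-reporting-task-metrics-send-jvm` is `"true"`, while the example configuration sets it to `"false"`. The function name is hypothetical.

```python
def dashboard_queries(component_name: str) -> dict[str, str]:
    """Map panel titles to PromQL queries for a NiFi monitoring dashboard.

    Only nifi_amount_bytes_received is confirmed by the walkthrough; the other
    metric names are assumptions to verify in the Prometheus metrics explorer.
    """
    escaped = component_name.replace("\\", "\\\\").replace('"', '\\"')
    matcher = f'component_name="{escaped}"'
    return {
        "Bytes received": f"nifi_amount_bytes_received{{{matcher}}}",
        "Bytes sent": f"nifi_amount_bytes_sent{{{matcher}}}",  # assumed name
        "FlowFiles queued": f"nifi_amount_items_queued{{{matcher}}}",  # assumed name
        "Average lineage duration": f"nifi_average_lineage_duration{{{matcher}}}",  # assumed name
        # Assumed name; only present when send-jvm is "true".
        "JVM heap used": "nifi_jvm_heap_used",
    }
```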

Conclusion


The NiFi Prometheus reporting task, together with CDF inbound connections, makes it easy to monitor key metrics in Prometheus and to build Grafana dashboards. With the recently added create-reporting-task CDF CLI command, customers can now automate setting up Prometheus monitoring for every new deployment as part of their standard CI/CD pipeline.

Try out CDF-PC with the public five-day trial, and check out the Prometheus monitoring demo video below for a step-by-step tutorial.