Elasticsearch has long been used for all kinds of real-time analytics use cases, including log storage and analysis, and search applications. It is so popular because of the way it indexes data, which makes search efficient. However, this comes at a cost: joining documents is less efficient.

There are ways to build relationships between Elasticsearch documents. The most common are nested objects, parent-child joins, and application-side joins. Each of these has different use cases and drawbacks compared with the native SQL joins provided by technologies like Rockset.

In this post, I'll talk through a common Elasticsearch and Rockset use case, walk through how you could implement it with application-side joins in Elasticsearch, and then show how the same functionality is provided in Rockset.

Use Case: Online Marketplace

Elasticsearch can be a great tool for an online marketplace, as the most common way to find products is via search. Vendors upload products along with product information and descriptions, all of which need to be indexed so users can find them using the search functionality on the website.

This is a common use case for a tool like Elasticsearch, as it can provide fast search results across not only product names but descriptions too, helping to return the most relevant results.

Users searching for products will not only want the most relevant results displayed at the top, but the most relevant results with the highest reviews or the most purchases. We will also need to store this data in Elasticsearch. This means we will have 3 types of data:

- product – all metadata about a product, including its name, description, price, category, and image

- purchase – a log of all purchases of a specific product, including date and time of purchase, user id, and quantity

- review – customer reviews of a specific product, including a star rating and a full-text review

In this post, I won't be showing you how to get this data into Elasticsearch, only how to use it. Whether you have each of these types of data in a single index or in separate ones doesn't matter, as we will be accessing them separately and joining them within our application.

Building with Elasticsearch

In Elasticsearch I have three indexes, one for each of the data types: product, purchase, and review. What we want to build is an application that allows you to search for a product and order the results by most purchases or best review scores.

To do this we will need to build three separate queries:

- Find relevant products based on search terms

- Count the number of purchases for each returned product

- Average the star rating for each returned product

These three queries will be executed and the data joined together within the application before it is returned to the front end to display the results. This is because Elasticsearch doesn't natively support SQL-like joins.

To do this, I've built a simple search page using Vue and used Axios to make calls to my API. The API is a simple Node Express API that acts as a wrapper around the Elasticsearch API. This allows the front end to pass in the search terms and have the API execute the three queries and perform the join before sending the data back to the front end.

This is an important design consideration when building an application on top of Elasticsearch, especially when application-side joins are required. You don't want the client to join data together locally on a user's machine, so a server-side application is required to take care of this.
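Putting those steps together, the server-side flow can be sketched as one async function. This is a minimal sketch rather than the code used for this post: it assumes a client whose `search({ index, body })` method returns a promise of the response body (as the legacy `elasticsearch` package used below does), the index and field names used in this post, and a hypothetical function name, `searchWithStats`. Because the two stats queries only depend on the product IDs, they can run in parallel with `Promise.all`.

```javascript
// Hypothetical sketch: three Elasticsearch queries plus an application-side join.
// Assumes client.search({ index, body }) resolves to the Elasticsearch response body.
async function searchWithStats(client, term) {
  // 1. Find relevant products by title or description
  const productRes = await client.search({
    index: "product",
    body: {
      size: 200,
      query: {
        bool: {
          should: [{ match: { title: term } }, { match: { description: term } }],
        },
      },
    },
  });
  const products = productRes.hits.hits;
  const ids = products.map((p) => p._id);
  if (ids.length === 0) return [];

  // Shared body for the stats queries: filter to the returned IDs, group by product
  const statsBody = (subAggs) => ({
    size: 0, // only the aggregations are needed, not the raw documents
    query: { bool: { filter: [{ terms: { product_id: ids } }] } },
    aggs: {
      group_by_product: {
        terms: { field: "product_id", size: ids.length }, // default would be 10 buckets
        ...(subAggs ? { aggs: subAggs } : {}),
      },
    },
  });

  // 2 and 3. Count purchases and average ratings in parallel
  const [purchaseRes, reviewRes] = await Promise.all([
    client.search({ index: "purchase", body: statsBody() }),
    client.search({
      index: "review",
      body: statsBody({ average_rating: { avg: { field: "rating" } } }),
    }),
  ]);

  // Lookup keyed by product id (object keys are strings, so numeric bucket keys match _id)
  const stats = {};
  for (const b of purchaseRes.aggregations.group_by_product.buckets) {
    stats[b.key] = { ...stats[b.key], number_purchases: b.doc_count };
  }
  for (const b of reviewRes.aggregations.group_by_product.buckets) {
    stats[b.key] = { ...stats[b.key], average_rating: b.average_rating.value };
  }

  // Join the stats back onto each product hit without mutating it
  return products.map((p) => ({ ...p, stats: stats[p._id] || {} }));
}
```

Setting `size: 0` on the stats queries skips returning the raw purchase and review documents, and setting the `terms` bucket `size` to the number of IDs avoids the aggregation's default limit of 10 buckets.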

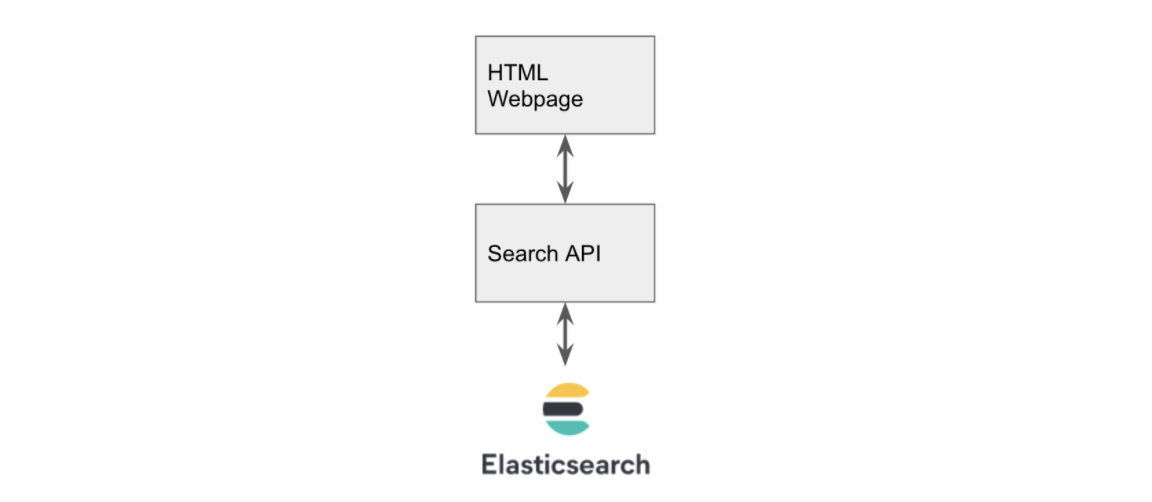

The application architecture is shown in Fig 1.

Fig 1. Application Architecture

Building the Front End

The front end consists of a simple search box and button. It displays each result in a box with the product name at the top and the description and price below. The important part is the script tag within this HTML file that sends the data to our API. The code is shown below.

```html
<script>
  new Vue({
    el: "#app",
    data: {
      results: [],
      query: "",
    },
    methods: {
      // make a request to our API, passing in the query string
      search: function () {
        axios
          // encode the term so characters such as & or # don't break the URL
          .get("http://127.0.0.1:3001/search?q=" + encodeURIComponent(this.query))
          .then((response) => {
            this.results = response.data;
          });
      },
      // this function is called on button press, which calls search
      submitBut: function () {
        this.search();
      },
    },
  });
</script>
```

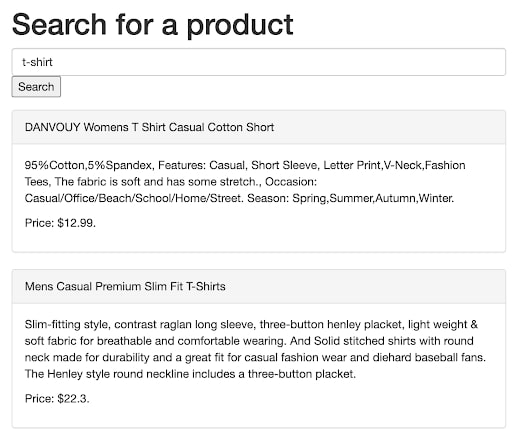

It uses Axios to call our API, which is running on port 3001. When the search button is clicked, it calls the /search endpoint and passes in the search string from the search box. The results are then displayed on the page as shown in Fig 2.

Fig 2. Example of the front end displaying results
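One detail worth handling in the request above is encoding: a search term containing characters such as `&`, `#`, or spaces would corrupt the query string if concatenated as-is. A minimal sketch using the standard `URL` and `URLSearchParams` APIs (available in browsers and Node.js) follows; `buildSearchUrl` is a hypothetical helper name, and the base URL is the one used above.

```javascript
// Hypothetical helper: build the /search URL with a safely encoded q parameter.
function buildSearchUrl(baseUrl, term) {
  const url = new URL("/search", baseUrl);
  url.searchParams.set("q", term); // URLSearchParams takes care of the encoding
  return url.toString();
}

console.log(buildSearchUrl("http://127.0.0.1:3001", "cotton & silk"));
// http://127.0.0.1:3001/search?q=cotton+%26+silk
```

With Axios, passing `{ params: { q: this.query } }` as the request config has the same effect.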

For this to work, we need to build an API that calls Elasticsearch on our behalf. To do this we will be using NodeJS to build a simple Express API.

The API needs a /search endpoint that, when called with the parameter ?q=<search term>, can perform a match request to Elasticsearch. There are plenty of blog posts detailing how to build an Express API, so I'll concentrate on what's required on top of this to make calls to Elasticsearch.

Firstly, we need to install and use the Elasticsearch NodeJS library to instantiate a client.

```javascript
// legacy Elasticsearch client: npm install elasticsearch
const elasticsearch = require("elasticsearch");

const client = new elasticsearch.Client({
  hosts: ["http://localhost:9200"],
});
```

Then we need to define our search endpoint, which uses this client to search for our products in Elasticsearch.

```javascript
// assumes an Express app, e.g. const app = require("express")();
app.get("/search", function (req, res) {
  // build the query we want to pass to ES
  let body = {
    size: 200,
    from: 0,
    query: {
      bool: {
        should: [
          { match: { title: req.query["q"] } },
          { match: { description: req.query["q"] } },
        ],
      },
    },
  };

  // tell ES to perform the search on the 'product' index and return the results
  client
    .search({ index: "product", body: body })
    .then((results) => {
      res.send(results.hits.hits);
    })
    .catch((err) => {
      console.log(err);
      res.send([]);
    });
});
```

Note that in the query we're asking Elasticsearch to look for our search term in either the product title or description using the "should" keyword.
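In a `bool` query that has only `should` clauses, at least one clause must match, which is why the query above behaves as an OR across the two fields. For comparison, the same search can also be written with Elasticsearch's `multi_match` query, which runs a match query against several fields. The sketch below is an alternative, not the code used in this post; `buildProductQuery` is a hypothetical helper, and the field names are the `title` and `description` fields used above.

```javascript
// Hypothetical helper: the same "title or description" search as a multi_match query.
function buildProductQuery(term, size = 200, from = 0) {
  return {
    size,
    from,
    query: {
      multi_match: {
        query: term,
        fields: ["title", "description"],
      },
    },
  };
}

const body = buildProductQuery("cotton shirt");
console.log(JSON.stringify(body.query));
```

Scoring differs slightly: the default `best_fields` type of `multi_match` uses the score of the best-matching field, while the `bool`/`should` form adds the scores of both matching clauses.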

Once this API is up and running, our front end should be able to search for and display results from Elasticsearch as shown in Fig 2.

Counting the Number of Purchases

Now we need to get the number of purchases made for each of the returned products and join it to our product list. We'll do this in the API by creating a simple function that calls Elasticsearch and counts the number of purchases for the returned product_ids.

```javascript
const getNumberPurchases = async (results) => {
  const productIds = results.hits.hits.map((product) => product._id);

  let body = {
    size: 200,
    from: 0,
    query: {
      bool: {
        filter: [{ terms: { product_id: productIds } }],
      },
    },
    aggs: {
      group_by_product: {
        // terms aggregations return only 10 buckets by default; ask for one per product
        terms: { field: "product_id", size: Math.max(productIds.length, 1) },
      },
    },
  };

  const response = await client.search({ index: "purchase", body: body });
  return response.aggregations.group_by_product.buckets;
};
```

To do this we search the purchase index and filter using the list of product_ids returned from our initial search. We add an aggregation that groups by product_id using the terms keyword, which by default returns a count (the doc_count of each bucket).
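A detail worth keeping in mind with this aggregation: a `terms` aggregation returns only the top 10 buckets unless `size` is set, so with up to 200 products in the result set most counts would silently go missing. The sketch below isolates the request body with the bucket count raised to the number of product IDs, and with `size: 0` so no purchase documents are returned alongside the aggregation. `buildPurchaseCountBody` is a hypothetical helper name.

```javascript
// Hypothetical helper: request one count bucket per product ID and no raw hits.
function buildPurchaseCountBody(productIds) {
  return {
    size: 0, // only the aggregation is needed, not the purchase documents
    query: {
      bool: { filter: [{ terms: { product_id: productIds } }] },
    },
    aggs: {
      group_by_product: {
        // terms aggregations default to 10 buckets; size must also be at least 1
        terms: { field: "product_id", size: Math.max(productIds.length, 1) },
      },
    },
  };
}

console.log(JSON.stringify(buildPurchaseCountBody(["2", "20"]), null, 2));
```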

Average Star Rating

We repeat the process for the average star rating, but the payload we send to Elasticsearch is slightly different because this time we want an average instead of a count.

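```javascript
let body = {
  size: 200,
  from: 0,
  query: {
    bool: {
      filter: [{ terms: { product_id: productIds } }],
    },
  },
  aggs: {
    group_by_product: {
      // one bucket per product instead of the default 10
      terms: { field: "product_id", size: Math.max(productIds.length, 1) },
      aggs: {
        // average of the rating field within each product bucket
        average_rating: { avg: { field: "rating" } },
      },
    },
  },
};
```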

To do this we add another aggs block that calculates the average of the rating field. The rest of the code remains the same apart from the index name we pass into the search call; we want to use the review index for this.
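Each bucket in the response carries the sub-aggregation result as an object rather than a bare number (Elasticsearch returns `avg` results as `{ value: ... }`, with `value` set to `null` when no document in the bucket has a rating), so the average has to be read from `.value`. The sketch below flattens such buckets into plain records; `flattenRatingBuckets` and the sample buckets are illustrative assumptions, not output from the post's data.

```javascript
// Hypothetical helper: flatten terms + avg buckets into plain records.
function flattenRatingBuckets(buckets) {
  return buckets.map((bucket) => ({
    product_id: String(bucket.key), // match the string _id used on product hits
    review_count: bucket.doc_count,
    average_rating: bucket.average_rating.value, // avg results live under .value
  }));
}

// Made-up buckets, shaped like an Elasticsearch terms + avg aggregation response
const buckets = [
  { key: 2, doc_count: 4, average_rating: { value: 3.5 } },
  { key: 20, doc_count: 2, average_rating: { value: 4 } },
];
console.log(flattenRatingBuckets(buckets));
```

Products with no reviews simply have no bucket, so they will be absent from the flattened list.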

Joining the Results

Now that all our data is being returned from Elasticsearch, we need a way to join it together so the number of purchases and the average rating can be processed alongside each of the products, allowing us to sort by most purchased or best rated.

First, we build a generic mapping function that creates a lookup. Each key of this object will be a product_id, and its value will be an object containing the number of purchases and the average rating.

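```javascript
const buildLookup = (map = {}, data, key, inputFieldname, outputFieldname) => {
  const dataMap = map;
  data.forEach((item) => {
    if (!dataMap[item[key]]) {
      dataMap[item[key]] = {};
    }
    // metric aggregations such as avg return { value: ... }, so unwrap them
    const value = item[inputFieldname];
    dataMap[item[key]][outputFieldname] =
      value !== null && typeof value === "object" && "value" in value ? value.value : value;
  });
  return dataMap;
};
```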

We call this twice: the first time passing in the purchases, and the second time the ratings (along with the output of the first call).

```javascript
const pMap = buildLookup({}, purchases, "key", "doc_count", "number_purchases");
const rMap = buildLookup(pMap, ratings, "key", "average_rating", "average_rating");
```

This returns an object that looks as follows:

```javascript
{
  '2': { number_purchases: 57, average_rating: 2.8461538461538463 },
  '20': { number_purchases: 45, average_rating: 2.7586206896551726 }
}
```

There are two products here, product_id 2 and 20. Each of them has a number of purchases and an average rating. We can now use this map and join it back onto our initial list of products.
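To check the lookup step in isolation, without a running cluster, the snippet below feeds two small, made-up bucket arrays through the two calls. It includes its own copy of `buildLookup` so it runs on its own; that copy iterates with `forEach` and unwraps the `{ value: ... }` objects that metric aggregations such as `avg` return in each bucket. The sample numbers are illustrative only.

```javascript
// Self-contained copy of buildLookup (forEach, plus unwrapping of { value } objects).
const buildLookup = (map = {}, data, key, inputFieldname, outputFieldname) => {
  const dataMap = map;
  data.forEach((item) => {
    if (!dataMap[item[key]]) {
      dataMap[item[key]] = {};
    }
    const raw = item[inputFieldname];
    dataMap[item[key]][outputFieldname] =
      raw !== null && typeof raw === "object" && "value" in raw ? raw.value : raw;
  });
  return dataMap;
};

// Made-up buckets shaped like the purchase and review aggregation responses
const purchases = [
  { key: 2, doc_count: 3 },
  { key: 20, doc_count: 1 },
];
const ratings = [{ key: 2, doc_count: 2, average_rating: { value: 4.5 } }];

const pMap = buildLookup({}, purchases, "key", "doc_count", "number_purchases");
const rMap = buildLookup(pMap, ratings, "key", "average_rating", "average_rating");
console.log(rMap);
```

Note that the second call adds to the same object the first call returned, so `pMap` and `rMap` refer to one lookup; product 20 has no review bucket and therefore no `average_rating` key.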

```javascript
const join = (data, joinData, key) => {
  return data.map((item) => {
    item.stats = joinData[item[key]];
    return item;
  });
};
```

To do this I created a simple join function that takes the initial data, the data you want to join, and the key to join on.

One of the products returned from Elasticsearch looks as follows:

```json
{
  "_index": "product",
  "_type": "product",
  "_id": "20",
  "_score": 3.750173,
  "_source": {
    "title": "DANVOUY Womens T Shirt Casual Cotton Short",
    "price": 12.99,
    "description": "95%Cotton,5%Spandex, Features: Casual, Short Sleeve, Letter Print,V-Neck,Fashion Tees, The fabric is soft and has some stretch., Occasion: Casual/Office/Beach/School/Home/Street. Season: Spring,Summer,Autumn,Winter.",
    "category": "women's clothing",
    "image": "https://fakestoreapi.com/img/61pHAEJ4NML._AC_UX679_.jpg"
  }
}
```

The key we want is _id, and we use it to look up the values from the map shown above. With a call to our join function like so: join(products, rMap, '_id'), we get our product back with a new stats property containing the purchases and rating.

```json
{
  "_index": "product",
  "_type": "product",
  "_id": "20",
  "_score": 3.750173,
  "_source": {
    "title": "DANVOUY Womens T Shirt Casual Cotton Short",
    "price": 12.99,
    "description": "95%Cotton,5%Spandex, Features: Casual, Short Sleeve, Letter Print,V-Neck,Fashion Tees, The fabric is soft and has some stretch., Occasion: Casual/Office/Beach/School/Home/Street. Season: Spring,Summer,Autumn,Winter.",
    "category": "women's clothing",
    "image": "https://fakestoreapi.com/img/61pHAEJ4NML._AC_UX679_.jpg"
  },
  "stats": { "number_purchases": 45, "average_rating": 2.7586206896551726 }
}
```
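The `join` function shown earlier modifies the product objects in place and leaves `stats` undefined for products with no purchases or reviews. If you would rather not mutate the Elasticsearch hits, or want every product to carry a stats object for sorting, a variant along these lines works; the name `joinWithDefaults` and the default values are assumptions, not part of the original code.

```javascript
// Hypothetical variant of join: returns new objects and fills in default stats.
const joinWithDefaults = (
  data,
  joinData,
  key,
  defaults = { number_purchases: 0, average_rating: null }
) =>
  data.map((item) => ({
    ...item,
    stats: { ...defaults, ...(joinData[item[key]] || {}) },
  }));

const products = [{ _id: "2" }, { _id: "7" }];
const lookup = { 2: { number_purchases: 3, average_rating: 4.5 } };
const joined = joinWithDefaults(products, lookup, "_id");
console.log(joined[1].stats); // { number_purchases: 0, average_rating: null }
```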

Now our data is in a suitable format to be returned to the front end and used for sorting.
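With `stats` attached, sorting is an ordinary array sort. A sketch follows; `sortByStat` is a hypothetical helper, and it assumes that products without a value for the chosen stat should go to the end of the list. The two counts in the sample are the ones from the lookup shown earlier.

```javascript
// Hypothetical helper: sort joined products by a stat, highest first,
// with missing values (no stats, undefined, or null) placed last.
function sortByStat(products, statName) {
  const valueOf = (p) => (p.stats ? p.stats[statName] : undefined);
  return [...products].sort((a, b) => {
    const va = valueOf(a);
    const vb = valueOf(b);
    const aMissing = va === undefined || va === null;
    const bMissing = vb === undefined || vb === null;
    if (aMissing || bMissing) return Number(aMissing) - Number(bMissing);
    return vb - va;
  });
}

const results = [
  { _id: "2", stats: { number_purchases: 57 } },
  { _id: "7" },
  { _id: "20", stats: { number_purchases: 45 } },
];
console.log(sortByStat(results, "number_purchases").map((p) => p._id)); // [ '2', '20', '7' ]
```

Copying the array before sorting leaves the original result order intact, which is useful if the front end also wants to show results by relevance.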

As you can see, there's a lot of work involved on the server side to get this working, and it only becomes more complex as you add more stats or start to introduce large result sets that require pagination.

Building with Rockset

Let's look at implementing the same feature set using Rockset. The front end will stay the same, but we have two options when it comes to querying Rockset. We can either continue to use the bespoke API to handle our calls to Rockset (which will probably be the default approach for most applications), or we can have the front end call Rockset directly using its built-in API.

In this post, I'll focus on calling the Rockset API directly from the front end, just to showcase how simple it is. One thing to note is that Elasticsearch also has a native API, but we were unable to use it for this exercise because we needed to join data together, something we don't want to do on the client side; hence the need to create a separate API layer.

Search for Products in Rockset

To replicate the effectiveness of the search results we get from Elasticsearch, we have to do a bit of processing on the description and title fields in Rockset. Fortunately, all of this can be done on the fly when the data is ingested into Rockset.

We simply need to set up a field mapping that calls Rockset's TOKENIZE function as the data is ingested, which creates a new field that is an array of words. The TOKENIZE function takes a string and breaks it up into "tokens" (words), which are then in a better format for searching later.
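To make the idea concrete, here is a rough JavaScript approximation of what tokenizing a field at ingestion produces. It is not Rockset's TOKENIZE implementation: it assumes lowercasing and splitting on anything that is not a letter or digit, which is enough to show why a search term can then be matched against an array of tokens rather than a raw string. `tokenize` and `addTokenFields` are hypothetical names; the `_tokens` suffix mirrors the `title_tokens` and `description_tokens` fields used in the query below.

```javascript
// Rough approximation of ingestion-time tokenization (not Rockset's actual rules):
// lowercase the text and split on runs of characters that are not letters or digits.
function tokenize(text) {
  return String(text)
    .toLowerCase()
    .split(/[^\p{L}\p{N}]+/u)
    .filter((token) => token.length > 0);
}

// Add *_tokens fields to a product document, as an ingestion-time mapping would.
function addTokenFields(doc) {
  return {
    ...doc,
    title_tokens: tokenize(doc.title),
    description_tokens: tokenize(doc.description),
  };
}

const doc = addTokenFields({
  title: "DANVOUY Womens T Shirt Casual Cotton Short",
  description: "The fabric is soft and has some stretch.",
});
console.log(doc.title_tokens);
```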

Now that our data is ready for searching, we can build a query that searches for our term across the new tokenized fields. We'll do this using Vue and Axios again, but this time Axios will make the call directly to the Rockset API.

```javascript
search: function () {
  // pass the search term as a named query parameter (:term) instead of concatenating
  // it into the SQL string, so quotes in the term can't break or inject into the query
  var data = JSON.stringify({
    sql: {
      query:
        "SELECT * FROM commons.products " +
        "WHERE SEARCH(CONTAINS(title_tokens, :term), CONTAINS(description_tokens, :term)) " +
        "OPTION(match_all = false)",
      parameters: [{ name: "term", type: "string", value: this.query }],
    },
  });

  var config = {
    method: "post",
    url: "https://api.rs2.usw2.rockset.com/v1/orgs/self/queries",
    headers: {
      Authorization: "ApiKey <API KEY>",
      "Content-Type": "application/json",
    },
    data: data,
  };

  axios(config).then((response) => {
    this.results = response.data.results;
  });
}
```

The search function has been modified as above to produce a WHERE clause that calls Rockset's SEARCH function. We call SEARCH and ask it to return any results for either of our tokenized fields using CONTAINS; OPTION(match_all = false) tells Rockset that only one of our fields needs to contain the search term. We then pass this statement to the Rockset API and set the results when they are returned so they can be displayed.
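The effect of `OPTION(match_all = false)` can be illustrated in plain JavaScript: a document matches when any one of the `CONTAINS` predicates holds, whereas with `match_all = true` every predicate would have to hold. This is an in-memory illustration of the boolean logic only, not of how Rockset ranks or executes the search, and `matchesSearch` is a hypothetical name.

```javascript
// In-memory illustration of SEARCH(CONTAINS(...), CONTAINS(...)) OPTION(match_all = ...).
// Each predicate checks whether a token array contains the search term.
function matchesSearch(doc, term, fields, matchAll) {
  const results = fields.map((field) => (doc[field] || []).includes(term));
  return matchAll ? results.every(Boolean) : results.some(Boolean);
}

const doc = {
  title_tokens: ["womens", "t", "shirt", "casual", "cotton", "short"],
  description_tokens: ["the", "fabric", "is", "soft"],
};
const fields = ["title_tokens", "description_tokens"];
console.log(matchesSearch(doc, "cotton", fields, false)); // true
console.log(matchesSearch(doc, "cotton", fields, true)); // false
```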

Calculating Stats in Rockset

Now that we have the same core search functionality, we want to add the number of purchases and the average star rating for each product, so they can again be used for sorting our results.

With Elasticsearch, this required building server-side functionality into our API to make multiple requests to Elasticsearch and then join all of the results together. With Rockset, we simply update the SELECT statement we use when calling the Rockset API. Rockset takes care of the calculations and joins in a single call.

```javascript
// whereClause holds the SEARCH(...) OPTION(match_all = false) condition built earlier
const query = `
SELECT
  products.*, purchases.number_purchases, reviews.average_rating
FROM
  commons.products
  LEFT JOIN (SELECT product_id, COUNT(*) AS number_purchases
             FROM commons.purchases
             GROUP BY 1) purchases ON products.id = purchases.product_id
  LEFT JOIN (SELECT product_id, AVG(CAST(rating AS int)) AS average_rating
             FROM commons.reviews
             GROUP BY 1) reviews ON products.id = reviews.product_id
WHERE ${whereClause}`;
```

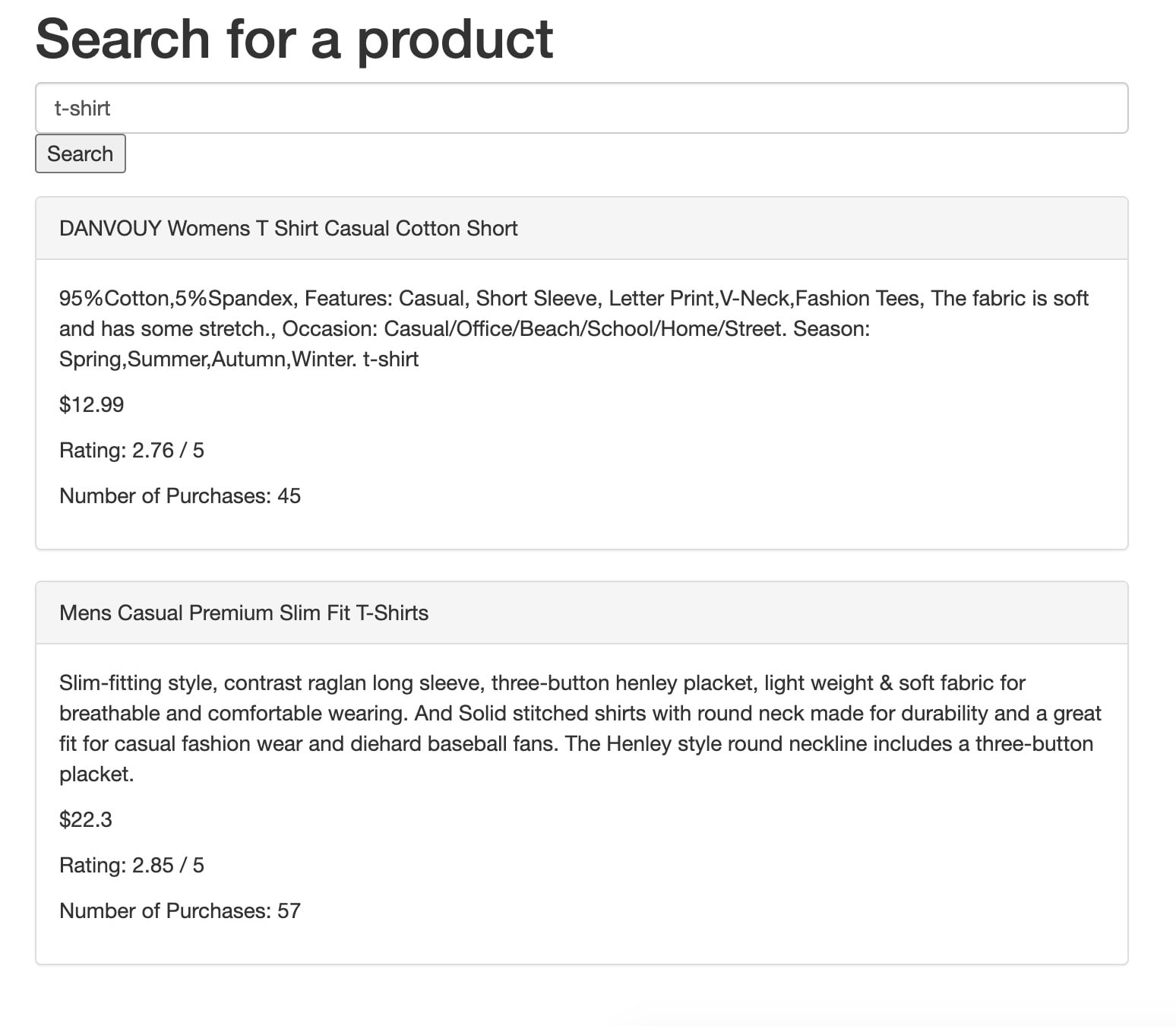

Our SELECT statement now contains two left joins that calculate the number of purchases and the average rating. All of the work is now done natively in Rockset. Fig 3 shows how these values can then be displayed in the search results. It's now trivial to take this further and use these fields to filter and sort the results.

Fig 3. Results showing rating and number of purchases as returned from Rockset
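For example, sorting can be pushed into the query itself with an `ORDER BY` on one of the computed columns. Column names generally cannot be bound as query parameters, so a safe pattern is to map a user-facing sort option onto a fixed whitelist before building the SQL. The sketch assumes the collection and column names from the query above; `buildStatsQuery` and the sort option names are hypothetical, and `whereClause` stands for the SEARCH condition built earlier.

```javascript
// Hypothetical whitelist mapping user-facing sort options to ORDER BY expressions.
// A Map avoids accidentally matching inherited keys such as "constructor".
const SORT_OPTIONS = new Map([
  ["most_purchased", "number_purchases DESC"],
  ["best_rated", "average_rating DESC"],
]);

function buildStatsQuery(whereClause, sortOption) {
  const orderBy = SORT_OPTIONS.get(sortOption);
  if (!orderBy) {
    throw new Error(`Unknown sort option: ${sortOption}`);
  }
  return `SELECT products.*, purchases.number_purchases, reviews.average_rating
FROM commons.products
LEFT JOIN (SELECT product_id, COUNT(*) AS number_purchases
           FROM commons.purchases GROUP BY 1) purchases ON products.id = purchases.product_id
LEFT JOIN (SELECT product_id, AVG(CAST(rating AS int)) AS average_rating
           FROM commons.reviews GROUP BY 1) reviews ON products.id = reviews.product_id
WHERE ${whereClause}
ORDER BY ${orderBy}`;
}

console.log(buildStatsQuery("true", "most_purchased"));
```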

Feature Comparison

Here's a quick look at where the work is done in each solution.

| Activity | Elasticsearch solution | Rockset solution |
|---|---|---|
| Search | Elasticsearch | Rockset |
| Calculating stats | Elasticsearch | Rockset |
| Joining stats to search results | Bespoke API | Rockset |

As you can see, the two are fairly similar except for the joining. For Elasticsearch, we built bespoke functionality to join the datasets together, since this isn't possible natively. The Rockset approach requires no extra effort because it supports SQL joins, which means Rockset can handle the solution end to end.

Overall, we're making fewer API calls and doing less work outside of the database, making for a more elegant and efficient solution.

Conclusion

Although Elasticsearch has been the default data store for search for a very long time, its lack of SQL-like join support makes building some fairly trivial applications quite difficult. You may have to manage joins within your application, which means more code to write, test, and maintain. An alternative is to denormalize your data when writing to Elasticsearch, but that comes with its own issues, such as amplifying the amount of storage needed and requiring additional engineering overhead.
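To illustrate the denormalization alternative, the stats could be computed before indexing and stored on each product document, so a single search returns them. This is a minimal sketch under assumed record shapes (a `product_id` on purchases and reviews, a numeric or numeric-string `rating` on reviews); `denormalizeProduct` is a hypothetical helper. It also shows where the overhead comes from: every new purchase or review means recomputing and re-indexing the affected product.

```javascript
// Hypothetical helper: embed purchase and review stats in a product document before indexing.
function denormalizeProduct(product, purchases, reviews) {
  // compare as strings so numeric and string IDs line up
  const own = (records) => records.filter((r) => String(r.product_id) === String(product.id));
  const productPurchases = own(purchases);
  const ratings = own(reviews).map((r) => Number(r.rating));
  const average =
    ratings.length > 0 ? ratings.reduce((sum, r) => sum + r, 0) / ratings.length : null;
  return {
    ...product,
    number_purchases: productPurchases.length,
    average_rating: average,
  };
}

console.log(
  denormalizeProduct({ id: 20 }, [{ product_id: 20 }], [{ product_id: 20, rating: 4 }])
);
```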

By using Rockset, we may have to tokenize our search fields on ingestion; however, we make up for it with the simplicity of processing this data on ingestion, as well as easier querying, joining, and aggregating of data. Rockset's integrations with existing data storage solutions like S3, MongoDB, and Kafka also mean that any extra data required to enrich your solution can quickly be ingested and kept up to date. Read more about how Rockset compares to Elasticsearch and explore how to migrate to Rockset.

When selecting a database for your real-time analytics use case, it is important to consider how much query flexibility you'll have should you need to join data now or in the future. This becomes increasingly relevant when your queries may change frequently, when new features need to be implemented, or when new data sources are introduced. To experience how Rockset provides full-featured SQL queries on complex, semi-structured data, you can get started with a free Rockset account.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini, where he helped the UK government move into the world of Big Data. He is currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he is helping to shape their data warehousing and reporting capability from the ground up.