In today's data-driven world, organizations rely on data analysts to interpret complex datasets, uncover actionable insights, and drive decision-making. But what if we could improve the efficiency and scalability of this process using AI? Enter the Data Analysis Agent, which automates analytical tasks, executes code, and responds adaptively to data queries. LangGraph, CrewAI, and AutoGen are three popular frameworks for building AI agents. In this article, we will use and compare all three to build a simple data analysis agent.

How the Data Analysis Agent Works

The data analysis agent first takes the query from the user and generates code to read the file and analyze the data in it. The generated code is then executed using the Python REPL tool, and the result of the execution is sent back to the agent. The agent analyzes the result received from the code execution tool and replies to the user's query. Because LLMs can generate arbitrary code, we must be careful when executing LLM-generated code in our local environment.
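The loop described above can be sketched in plain Python before we bring in any framework. This is a minimal illustration under stated assumptions, not how LangGraph, CrewAI, or AutoGen implement it: `scripted_llm` is a hypothetical stand-in for a real model that first requests a code execution and then answers from the result, the ages are toy data, and `run_code` uses `exec` in the current process, which is not a sandbox.

```python
import contextlib
import io


def run_code(code: str) -> str:
    """Execute code and return what it printed, or the error. NOT sandboxed."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})
    except Exception as e:
        return f"Error: {e!r}"
    return buffer.getvalue()


def scripted_llm(messages: list) -> dict:
    """Hypothetical stand-in for an LLM: requests code execution once, then answers."""
    last = messages[-1]
    if last["role"] == "user":
        # Pretend the model generated analysis code for the question (toy data)
        code = "ages = [23, 35, 41]\nprint(sum(ages) / len(ages))"
        return {"role": "assistant", "tool_call": code}
    # The last message is a tool result, so answer using it
    return {"role": "assistant", "content": f"The mean age is {last['content'].strip()}."}


def run_agent(question: str) -> str:
    messages = [{"role": "user", "content": question}]
    while True:
        reply = scripted_llm(messages)
        messages.append(reply)
        if "tool_call" in reply:
            # Route the generated code to the execution tool and feed the result back
            messages.append({"role": "tool", "content": run_code(reply["tool_call"])})
        else:
            return reply["content"]


print(run_agent("What is the mean age?"))
```

Each framework below replaces `scripted_llm` with a real model and `run_code` with its own execution tool, but the generate, execute, and respond cycle stays the same.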

Building a Data Analysis Agent with LangGraph

If you are new to this topic or want to brush up on your knowledge of LangGraph, here's an article I would recommend: What is LangGraph?

Prerequisites

Before building the agents, make sure you have the API keys for the LLMs you plan to use.

Load the .env file containing the required API keys.

```python
from dotenv import load_dotenv

load_dotenv("./.env")
```

Key Libraries Required

langchain – 0.3.7

langchain-experimental – 0.3.3

langgraph – 0.2.52

crewai – 0.80.0

crewai-tools – 0.14.0

autogen-agentchat – 0.2.38

Now that we're all set, let's start building our agent.

Steps to Build a Data Analysis Agent with LangGraph

1. Import the necessary libraries.

```python
import pandas as pd
from IPython.display import Image, display
from typing import List, Literal, Optional, TypedDict, Annotated

from langchain_core.tools import tool
from langchain_core.messages import ToolMessage
from langchain_experimental.utilities import PythonREPL
from langchain_openai import ChatOpenAI

from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.checkpoint.memory import MemorySaver
```

2. Let's define the state.

```python
class State(TypedDict):
    messages: Annotated[list, add_messages]

graph_builder = StateGraph(State)
```
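The `Annotated[list, add_messages]` annotation tells LangGraph to merge the messages a node returns into the existing list rather than overwrite it. The sketch below imitates that idea with the standard library only; `append_reducer`, `SketchState`, and `apply_update` are hypothetical names, and the real `add_messages` does more (for example, it converts tuples such as `("user", text)` into message objects and replaces messages that share an ID).

```python
from typing import Annotated, TypedDict, get_type_hints


def append_reducer(left: list, right: list) -> list:
    # Hypothetical stand-in for add_messages: keep old messages, append new ones
    return left + right


class SketchState(TypedDict):
    messages: Annotated[list, append_reducer]


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state using each key's reducer."""
    hints = get_type_hints(SketchState, include_extras=True)
    new_state = dict(state)
    for key, value in update.items():
        metadata = getattr(hints[key], "__metadata__", ())
        if metadata:  # Annotated[..., reducer]: combine old and new values
            new_state[key] = metadata[0](state.get(key, []), value)
        else:  # no reducer: overwrite the value
            new_state[key] = value
    return new_state


demo_state = {"messages": [("user", "hi")]}
demo_state = apply_update(demo_state, {"messages": [("assistant", "hello")]})
print(demo_state["messages"])  # [('user', 'hi'), ('assistant', 'hello')]
```

This is why the `chatbot` node below can return only the new LLM message: the reducer appends it to the history.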

3. Define the LLM and the code execution function, and bind the function to the LLM.

```python
llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.1)

@tool
def python_repl(code: Annotated[str, "The Python code to execute"]):
    """Use this to execute Python code, for example code that reads and analyzes a data file.
    Make sure the code reads the file correctly. If you want to see the output of a value,
    you should print it out with `print(...)`. This is visible to the user."""
    try:
        result = PythonREPL().run(code)
        print("RESULT CODE EXECUTION:", result)
    except BaseException as e:
        # Return the error as text so the LLM can see it and fix its code
        return f"Failed to execute. Error: {repr(e)}"
    return f"Executed:\n```python\n{code}\n```\nStdout: {result}"

llm_with_tools = llm.bind_tools([python_repl])
```
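`@tool` and `bind_tools` turn the function's name, docstring, and type hints into a tool description that the model sees when deciding whether to call it. The stdlib sketch below shows the general idea by reading the `Annotated` description from a signature; `example_repl` and `describe_tool` are hypothetical, and LangChain's real schema generation (built on Pydantic) produces a richer JSON schema than this.

```python
import inspect
from typing import Annotated, get_type_hints


def example_repl(code: Annotated[str, "The Python code to execute"]):
    """Use this to execute Python code."""


TYPE_NAMES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn) -> dict:
    """Build a simplified, JSON-schema-like description of a function's parameters."""
    hints = get_type_hints(fn, include_extras=True)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        hint = hints.get(name, str)
        base = getattr(hint, "__origin__", hint)  # Annotated[str, "..."] -> str
        metadata = getattr(hint, "__metadata__", ())  # ("The Python code to execute",)
        properties[name] = {
            "type": TYPE_NAMES.get(base, "object"),
            "description": metadata[0] if metadata else "",
        }
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn),
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


print(describe_tool(example_repl))
```

This is why the docstring and the `Annotated` description matter: they are the only guidance the model gets about what to pass as `code`.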

4. Define the function the agent uses to respond, and add it as a node to the graph.

```python
def chatbot(state: State):
    # Call the tool-aware LLM on the conversation so far
    return {"messages": [llm_with_tools.invoke(state["messages"])]}

graph_builder.add_node("agent", chatbot)
```

5. Define the ToolNode and add it to the graph.

```python
code_execution = ToolNode(tools=[python_repl])
graph_builder.add_node("tools", code_execution)
```

If the LLM returns a tool call, we need to route it to the tool node; otherwise, we can end the run. Let's define a routing function and then add the remaining edges.

```python
def route_tools(state: State):
    """
    Use in the conditional_edge to route to the ToolNode if the last message
    has tool calls. Otherwise, route to the end.
    """
    if isinstance(state, list):
        ai_message = state[-1]
    elif messages := state.get("messages", []):
        ai_message = messages[-1]
    else:
        raise ValueError(f"No messages found in input state to tool_edge: {state}")
    if hasattr(ai_message, "tool_calls") and len(ai_message.tool_calls) > 0:
        return "tools"
    return END

# Entry point: every run starts at the agent node
graph_builder.add_edge(START, "agent")
graph_builder.add_conditional_edges(
    "agent",
    route_tools,
    {"tools": "tools", END: END},
)
# After a tool runs, send its result back to the agent
graph_builder.add_edge("tools", "agent")
```

6. Let's also add memory so that we can chat with the agent.

```python
memory = MemorySaver()
```
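A checkpointer such as `MemorySaver` keeps the graph state in memory, keyed by `thread_id`, which is what lets a conversation continue across calls. The sketch below illustrates only that keying idea with a dictionary; `ThreadMemory` and `demo_memory` are hypothetical names that do not reproduce `MemorySaver`'s API (real checkpoints also store state history and metadata), and the reply text stands in for an LLM call.

```python
from collections import defaultdict


class ThreadMemory:
    """Hypothetical per-thread message store (illustration only)."""

    def __init__(self):
        self._threads = defaultdict(list)

    def invoke(self, message: str, config: dict) -> list:
        thread_id = config["configurable"]["thread_id"]
        history = self._threads[thread_id]  # each thread has its own history
        history.append(("user", message))
        # A real agent would call the LLM with the whole history here
        history.append(("assistant", f"history on thread {thread_id} has {len(history)} message(s)"))
        return list(history)


demo_memory = ThreadMemory()
demo_memory.invoke("Load the file", {"configurable": {"thread_id": "1"}})
print(demo_memory.invoke("What is the mean age?", {"configurable": {"thread_id": "1"}})[-1])
print(demo_memory.invoke("Hello", {"configurable": {"thread_id": "2"}})[-1])
```

Reusing the same `thread_id` continues a conversation, while a new one starts with an empty history.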

7. Compile and display the graph.

```python
graph = graph_builder.compile(checkpointer=memory)
display(Image(graph.get_graph().draw_mermaid_png()))
```

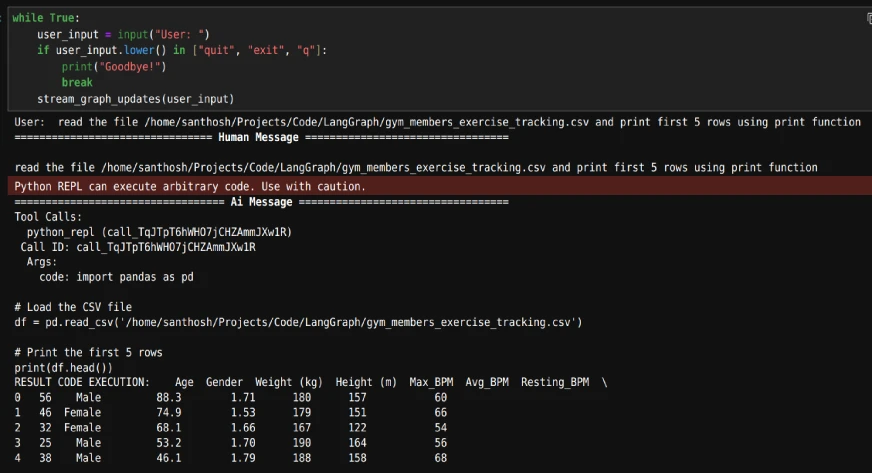
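The compiled graph contains a cycle: the conditional edge sends the agent to the tool node when it requests a tool, and the tools-to-agent edge feeds the result back until the agent answers without a tool call. The framework-free sketch below mimics that control flow with a dictionary of nodes and a path map; every name in it (`sketch_agent`, `sketch_tools`, `run_graph`, the `SKETCH_END` sentinel) is hypothetical, the tool returns a canned result, and none of it is LangGraph's implementation.

```python
SKETCH_END = "__end__"  # end sentinel for this sketch only


def sketch_agent(state: dict) -> dict:
    # Hypothetical agent: asks for one tool call, then answers from the tool result
    if not any(m["role"] == "tool" for m in state["messages"]):
        return {"messages": [{"role": "ai", "tool_calls": [{"code": "print(2 + 2)"}]}]}
    return {"messages": [{"role": "ai", "tool_calls": [], "content": "The answer is 4."}]}


def sketch_tools(state: dict) -> dict:
    # Hypothetical tool node: returns a canned result for each requested call
    calls = state["messages"][-1]["tool_calls"]
    return {"messages": [{"role": "tool", "content": "4"} for _ in calls]}


def sketch_route(state: dict) -> str:
    # Same decision as route_tools: go to "tools" only if the last message has tool calls
    return "tools" if state["messages"][-1].get("tool_calls") else SKETCH_END


NODES = {"agent": sketch_agent, "tools": sketch_tools}
CONDITIONAL_EDGES = {"agent": (sketch_route, {"tools": "tools", SKETCH_END: SKETCH_END})}
EDGES = {"tools": "agent"}


def run_graph(state: dict, start: str = "agent"):
    node, visited = start, []
    while node != SKETCH_END:
        visited.append(node)
        update = NODES[node](state)
        state = {"messages": state["messages"] + update["messages"]}  # append-style merge
        if node in CONDITIONAL_EDGES:
            router, path_map = CONDITIONAL_EDGES[node]
            node = path_map[router(state)]
        else:
            node = EDGES[node]
    return state, visited


final_state, path = run_graph({"messages": [{"role": "user", "content": "What is 2 + 2?"}]})
print(path)  # ['agent', 'tools', 'agent']
print(final_state["messages"][-1]["content"])  # The answer is 4.
```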

8. Now we can start the chat. Since we have added memory, we will give each conversation a unique thread_id and start the conversation on that thread.

```python
config = {"configurable": {"thread_id": "1"}}

def stream_graph_updates(user_input: str):
    events = graph.stream(
        {"messages": [("user", user_input)]}, config, stream_mode="values"
    )
    for event in events:
        # Print the latest message at each step of the graph
        event["messages"][-1].pretty_print()

while True:
    user_input = input("User: ")
    if user_input.lower() in ["quit", "exit", "q"]:
        print("Goodbye!")
        break
    stream_graph_updates(user_input)
```

While the loop is running, we start by giving the path of the file and then ask any questions based on the data.

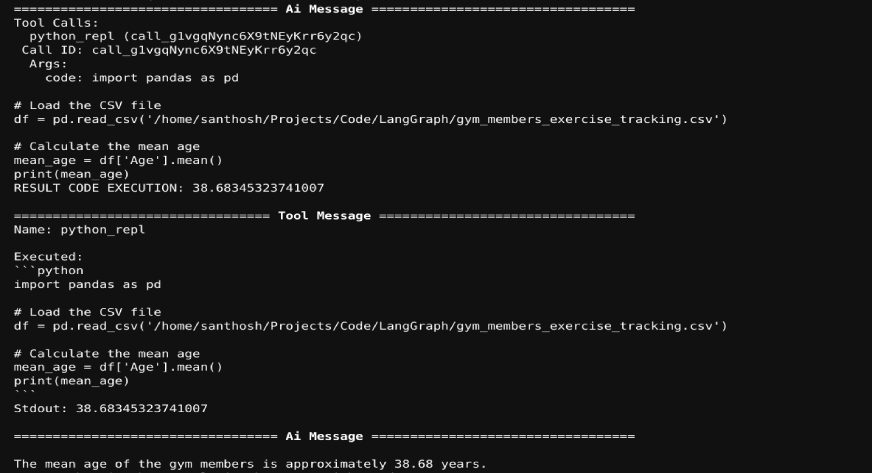

The output will be as follows:

Since we have included memory, we can ask any questions about the dataset in the chat. The agent generates the required code, the code is executed, and the execution result is sent back to the LLM. An example is shown below:


Building a Data Analysis Agent with CrewAI

Now, we will use CrewAI for the data analysis task.

1. Import the necessary libraries.

```python
from crewai import Agent, Task, Crew
from crewai.tools import tool
from crewai_tools import DirectoryReadTool, FileReadTool
from langchain_experimental.utilities import PythonREPL
```

2. We will build one agent to generate the code and another to execute that code.

```python
coding_agent = Agent(
    role="Python Developer",
    goal="Craft well-designed and thought-out code to answer the given problem",
    backstory="""You are a senior Python developer with extensive experience in software and its best practices.
    You have expertise in writing clean, efficient, and scalable code. """,
    llm='gpt-4o',
    human_input=True,
)

coding_task = Task(
    description="""Write code to answer the given problem
    assign the code output to the 'result' variable
    Problem: {problem},
    """,
    expected_output="code to get the result for the problem. output of the code should be assigned to the 'result' variable",
    agent=coding_agent
)
```

3. To execute the code, we will use PythonREPL(). Define it as a CrewAI tool.

```python
@tool("repl")
def repl(code: str) -> str:
    """Useful for executing Python code"""
    return PythonREPL().run(command=code)
```

4. Define the executing agent and task, with access to repl and FileReadTool().

```python
executing_agent = Agent(
    role="Python Executor",
    goal="Run the received code to answer the given problem",
    backstory="""You are a Python developer with extensive experience in software and its best practices.
    You can execute code, debug, and optimize Python solutions effectively.""",
    llm='gpt-4o-mini',
    human_input=True,
    tools=[repl, FileReadTool()]
)

executing_task = Task(
    description="""Execute the code to answer the given problem
    assign the code output to the 'result' variable
    Problem: {problem},
    """,
    expected_output="the result for the problem",
    agent=executing_agent
)
```

5. Build the crew with both agents and their corresponding tasks.

```python
analysis_crew = Crew(
    agents=[coding_agent, executing_agent],
    tasks=[coding_task, executing_task],
    verbose=True
)
```

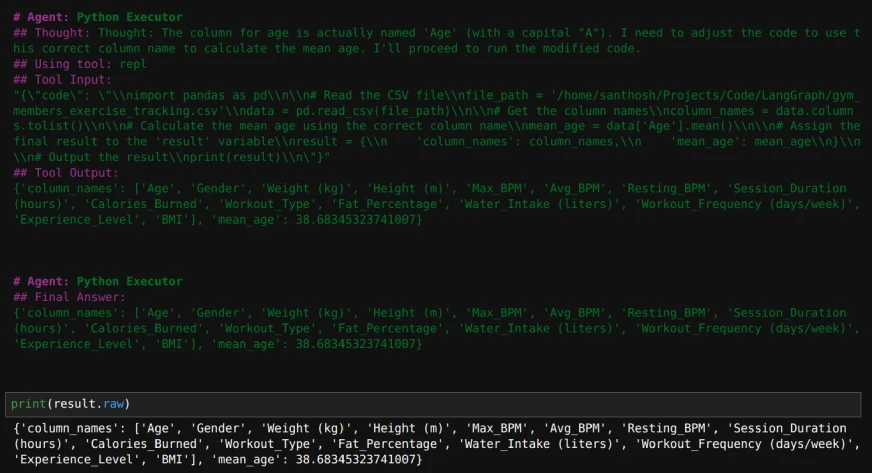
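Two things happen when the crew is kicked off with `inputs`: the `{problem}` placeholder in each task description is filled from those inputs, and, in CrewAI's default sequential process, each task's output is passed as context to the next task, so the executing agent receives the coding agent's code. The sketch below mimics that flow with plain functions; `fake_coder`, `fake_executor`, and `run_sequential` are hypothetical stand-ins rather than CrewAI code, the ages are toy data, and `exec` here is not sandboxed.

```python
def fake_coder(description: str, context: str) -> str:
    # Hypothetical coding agent: "writes" code that stores its answer in `result`
    return "result = sum([23, 35, 41]) / 3"  # toy data


def fake_executor(description: str, context: str) -> str:
    # Hypothetical executing agent: runs the code it received as context (not sandboxed)
    namespace = {}
    exec(context, namespace)
    return str(namespace["result"])


TASKS = [
    ("Write code to answer the given problem. Problem: {problem}", fake_coder),
    ("Execute the code to answer the given problem. Problem: {problem}", fake_executor),
]


def run_sequential(inputs: dict):
    context, descriptions = "", []
    for template, agent in TASKS:
        description = template.format(**inputs)  # fill the {problem} placeholder
        descriptions.append(description)
        context = agent(description, context)  # this output becomes the next task's context
    return context, descriptions


output, descriptions = run_sequential({"problem": "find the mean age"})
print(descriptions[0])  # Write code to answer the given problem. Problem: find the mean age
print(output)  # 33.0
```

This is also why both task descriptions ask for the answer in a `result` variable: the second task relies on the contract set by the first.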

6. Run the crew with the following inputs.

```python
inputs = {'problem': """read this file and return the column names and find the mean age
'/home/santhosh/Projects/Code/LangGraph/gym_members_exercise_tracking.csv'""",}

result = analysis_crew.kickoff(inputs=inputs)
print(result.raw)
```

Here's what the output looks like:


Building a Data Analysis Agent with AutoGen

1. Import the necessary libraries.

```python
from autogen import ConversableAgent
from autogen.coding import LocalCommandLineCodeExecutor, DockerCommandLineCodeExecutor
```

2. Define the code executor and an agent that uses it.

```python
executor = LocalCommandLineCodeExecutor(
    timeout=10,  # Timeout for each code execution in seconds.
    work_dir="./Data",  # Directory in which the code files are stored.
)

code_executor_agent = ConversableAgent(
    "code_executor_agent",
    llm_config=False,  # No LLM: this agent only executes the code it receives
    code_execution_config={"executor": executor},
    human_input_mode="ALWAYS",  # Prompt the human every time a message is received
)
```
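`LocalCommandLineCodeExecutor` extracts the code blocks from the message it receives, saves them as files in `work_dir`, and runs them with the configured timeout; the `exitcode` it reports is what the filtering loop later in this section relies on. The stdlib sketch below approximates that behavior for Python blocks only. `execute_markdown_code` is a hypothetical helper, not AutoGen's implementation, and reporting exit code 124 on timeout is an assumption borrowed from the GNU `timeout` convention. A separate process with a timeout stops runaway scripts, but it is not a sandbox; `DockerCommandLineCodeExecutor` offers stronger isolation.

```python
import re
import subprocess
import sys
import tempfile
from pathlib import Path

FENCE = "`" * 3  # built at runtime so the pattern below matches fenced code blocks
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def execute_markdown_code(message: str, work_dir: str, timeout: int = 10) -> str:
    """Run each Python code block in `message` as a script inside `work_dir`."""
    outputs, exit_code = [], 0
    for i, code in enumerate(CODE_BLOCK.findall(message)):
        script = Path(work_dir) / f"snippet_{i}.py"
        script.write_text(code)
        try:
            proc = subprocess.run(
                [sys.executable, script.name],
                cwd=work_dir, capture_output=True, text=True, timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return f"exitcode: 124 (timed out after {timeout}s)"
        outputs.append(proc.stdout + proc.stderr)
        exit_code = proc.returncode
        if exit_code != 0:
            break  # stop at the first failing block
    return f"exitcode: {exit_code}\nCode output: {''.join(outputs)}"


with tempfile.TemporaryDirectory() as tmp:
    reply = f"Here is the code:\n{FENCE}python\nprint(sum([23, 35, 41]) / 3)\n{FENCE}"
    print(execute_markdown_code(reply, tmp))
```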

3. Define an agent that writes the code, with a custom system message.

Take the code writer system message from https://microsoft.github.io/autogen/0.2/docs/tutorial/code-executors/ and store it in the code_writer_system_message variable used below.

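```python
# code_writer_system_message holds the system message copied from the AutoGen tutorial
code_writer_agent = ConversableAgent(
    "code_writer_agent",
    system_message=code_writer_system_message,
    llm_config={"config_list": [{"model": "gpt-4o-mini"}]},
    code_execution_config=False,  # This agent only writes code; it never executes it
)
```

4. Define the problem to solve and initiate the chat.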

```python
problem = """Read the file at the path '/home/santhosh/Projects/Code/LangGraph/gym_members_exercise_tracking.csv'
and print the mean age of the people."""

chat_result = code_executor_agent.initiate_chat(
    code_writer_agent,
    message=problem,
)
```

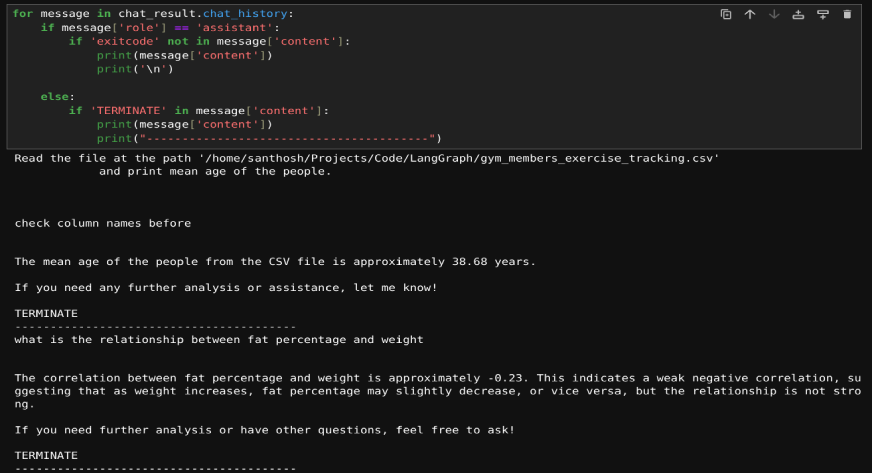

Once the chat starts, we can also ask follow-up questions about the dataset. If the code encounters an error, we can ask the agent to modify the code. If the code is fine, we can simply press Enter to continue executing it.

5. If required, we can also print the questions we asked and their answers using this code.

```python
for message in chat_result.chat_history:
    if message['role'] == 'assistant':
        # Messages sent by the executor agent: skip code execution results
        if 'exitcode' not in message['content']:
            print(message['content'])
            print('\n')
    else:
        # Messages from the code writer: print only the final answers
        if 'TERMINATE' in message['content']:
            print(message['content'])
            print("----------------------------------------")
```

Here's the result:


LangGraph vs CrewAI vs AutoGen

Now that you've learned to build a data analysis agent with all three frameworks, let's explore how they differ when it comes to code execution:

| Framework | Key Features | Strengths | Best Suited For |
|---|---|---|---|
| LangGraph | Graph-based structure (nodes represent agents/tools, edges define interactions); seamless integration with PythonREPL | Highly flexible for creating structured, multi-step workflows; code execution with memory preserved across turns | Complex, process-driven analytical tasks that demand clear, customizable workflows |
| CrewAI | Collaboration-focused; multiple agents with predefined roles working together on tasks; integrates with LangChain tools | Task-oriented design; excellent for teamwork and role specialization; code execution through a PythonREPL-based custom tool | Collaborative data analysis, code review setups, task decomposition, and role-based execution |
| AutoGen | Dynamic and iterative code execution; conversable agents for interactive execution and debugging; built-in chat feature | Adaptive and conversational workflows; handles dynamic interaction and debugging; ideal for rapid prototyping and troubleshooting | Rapid prototyping, troubleshooting, and environments where tasks and requirements evolve frequently |

Conclusion

In this article, we demonstrated how to build data analysis agents using LangGraph, CrewAI, and AutoGen. These frameworks enable agents to generate, execute, and analyze code to answer data queries efficiently. By automating repetitive tasks, these tools make data analysis faster and more scalable. Their modular design allows customization for specific needs, making them valuable for data professionals. These agents showcase the potential of AI to simplify workflows and extract insights from data with ease.


Frequently Asked Questions

A. These frameworks automate code generation and execution, enabling faster data processing and insights. They streamline workflows, reduce manual effort, and increase productivity for data-driven tasks.

A. Yes, the agents can be customized to handle diverse datasets and complex analytical queries by integrating appropriate tools and adjusting their workflows.

A. LLM-generated code may contain errors or unsafe operations. Always validate the code in a controlled environment to ensure accuracy and security before execution.

A. Memory integration allows agents to retain the context of past interactions, enabling adaptive responses and continuity in complex or multi-step queries.

A. These agents can automate tasks such as reading files, cleaning data, generating summaries, running statistical analyses, and answering user queries about the data.