Introduction

Stateful stream processing refers to processing a continuous stream of events in real time while maintaining state based on the events seen so far. This allows the system to track changes and patterns over time in the event stream, and enables making decisions or taking actions based on this information.

Stateful stream processing in Apache Spark Structured Streaming is supported using built-in operators (such as windowed aggregation, stream-stream join, drop duplicates, etc.) for predefined logic and using flatMapGroupsWithState or mapGroupsWithState for arbitrary logic. The arbitrary logic allows users to write their custom state manipulation code in their pipelines. However, as the adoption of streaming grows in the enterprise, more complex and sophisticated streaming applications demand several additional features to make it easier for developers to write stateful streaming pipelines.

To support these new, growing stateful streaming applications and operational use cases, the Spark community is introducing a new Spark operator called transformWithState. This operator will allow for flexible data modeling, composite types, timers, TTL, chaining stateful operators after transformWithState, schema evolution, reusing state from a different query, and integration with a host of other Databricks features such as Unity Catalog, Delta Live Tables, and Spark Connect. Using this operator, customers can develop and run their mission-critical, complex stateful operational use cases reliably and efficiently on the Databricks platform using popular languages such as Scala, Java or Python.

Applications/Use Cases using Stateful Stream Processing

Many event-driven applications rely on performing stateful computations to trigger actions or emit output events that are usually written to another event log or message bus such as Apache Kafka, Apache Pulsar, or Google Pub/Sub. These applications usually implement a state machine that validates rules, detects anomalies, tracks sessions, etc., and generates derived results, which are usually used to trigger actions on downstream systems. Such applications are typically built around the following elements:

- Input events

- State

- Time (ability to work with processing time and event time)

- Output events
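To make these four elements concrete, the following is a minimal plain-Python sketch of a session-tracking state machine. It does not use Spark; the `Event` fields, the `SESSION_GAP` value, and the output message format are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Event:
    key: str         # grouping key, e.g. a user id (hypothetical field)
    kind: str        # event type, e.g. "click"
    event_time: int  # event time in seconds


@dataclass
class SessionState:
    started_at: int
    last_seen: int
    clicks: int = 0


SESSION_GAP = 30  # seconds of inactivity that close a session (assumed value)


def process(events: List[Event]) -> List[str]:
    """Consume input events in order, update per-key state, emit output events."""
    state: Dict[str, SessionState] = {}
    outputs: List[str] = []
    for ev in events:
        current: Optional[SessionState] = state.get(ev.key)
        # Time: a gap longer than SESSION_GAP closes the previous session.
        if current is not None and ev.event_time - current.last_seen > SESSION_GAP:
            outputs.append(f"{ev.key}: session closed after {current.clicks} clicks")
            current = None
        if current is None:
            current = SessionState(started_at=ev.event_time, last_seen=ev.event_time)
        if ev.kind == "click":
            current.clicks += 1
        current.last_seen = ev.event_time
        state[ev.key] = current  # State: remembered across events for this key
    return outputs
```

Feeding three clicks for one key at times 0, 10, and 100 closes the first session when the third event arrives, because the 90-second gap exceeds `SESSION_GAP`.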

Examples of such applications include User Experience Monitoring, Anomaly Detection, Business Process Monitoring, and Decision Trees.

Introducing transformWithState: A More Powerful Stateful Processing API

Apache Spark now introduces transformWithState, a next-generation stateful processing operator designed to make building complex, real-time streaming applications more flexible, efficient, and scalable. This new API unlocks advanced capabilities for state management, event processing, timer management, and schema evolution, enabling users to implement sophisticated streaming logic with ease.

High-Level Design

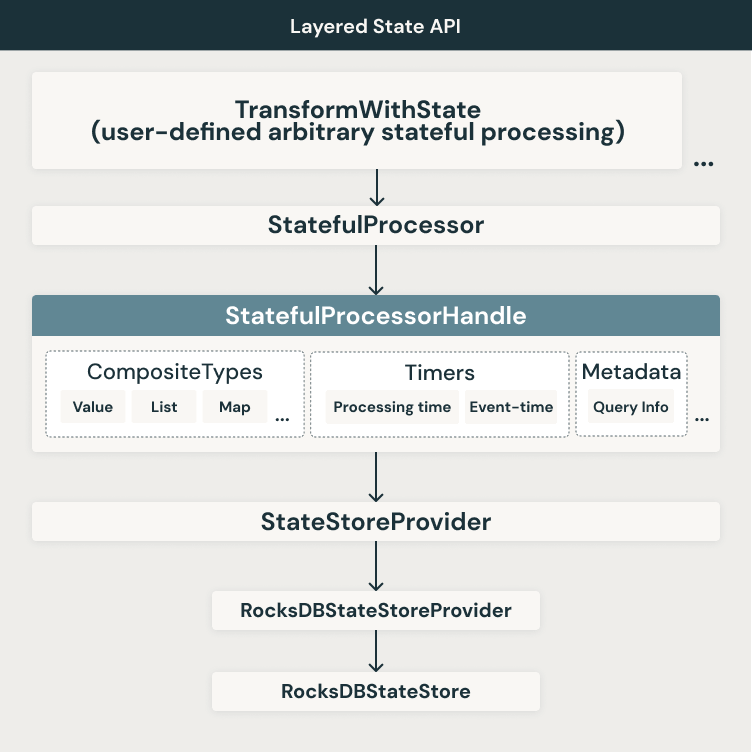

We’re introducing a brand new layered, versatile, extensible API method to handle the aforementioned limitations. A high-level structure diagram of the layered structure and the related options at varied layers is proven under.

As shown in the figure, we continue to use the state backends available today. Currently, Apache Spark supports two state store backends:

- HDFSBackedStateStoreProvider

- RocksDBStateStoreProvider

The new transformWithState operator will initially be supported only with the RocksDB state store provider. We make use of various RocksDB functionality around virtual column families, range scans, merge operators, etc. to ensure optimal performance for the various features used within transformWithState. On top of this layer, we build another abstraction layer that uses the StatefulProcessorHandle to work with composite types, timers, query metadata, etc. At the operator level, we enable the use of a StatefulProcessor that can embed the application logic used to deliver these powerful streaming applications. Finally, you can use the StatefulProcessor within Apache Spark queries based on the DataFrame APIs.

Here is an example of an Apache Spark streaming query using the transformWithState operator:
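The sketch that follows is a minimal PySpark version written under several assumptions: a Spark build that ships `transformWithStateInPandas` (the Python entry point for this operator), the RocksDB state store provider configured on the session, and a streaming DataFrame with a string column `user_id`. The column name, the `RunningCount` class, and the helper function names are hypothetical. The per-key update rule is kept as a plain function so it can be checked without a Spark installation.

```python
from typing import Optional


def next_count(previous: Optional[int], new_rows: int) -> int:
    """Pure per-key update rule: add this batch's row count to the stored count."""
    return (previous or 0) + new_rows


def build_running_count_query(events_df):
    """Attach a transformWithStateInPandas operator to a streaming DataFrame.

    Assumes `events_df` is a streaming DataFrame with a string column `user_id`
    (a hypothetical schema).
    """
    # Imported lazily so the pure logic above works without Spark installed.
    import pandas as pd
    from pyspark.sql.streaming import StatefulProcessor, StatefulProcessorHandle
    from pyspark.sql.types import LongType, StringType, StructField, StructType

    class RunningCount(StatefulProcessor):
        def init(self, handle: StatefulProcessorHandle) -> None:
            # One state variable, scoped to the grouping key.
            schema = StructType([StructField("count", LongType(), True)])
            self.count = handle.getValueState("count", schema)

        def handleInputRows(self, key, rows, timerValues):
            # `rows` is an iterator of pandas DataFrames for this key.
            n = sum(len(pdf) for pdf in rows)
            previous = self.count.get()[0] if self.count.exists() else None
            total = next_count(previous, n)
            self.count.update((total,))
            yield pd.DataFrame({"user_id": [key[0]], "count": [total]})

        def close(self) -> None:
            pass

    output_schema = StructType([
        StructField("user_id", StringType(), True),
        StructField("count", LongType(), True),
    ])
    return events_df.groupBy("user_id").transformWithStateInPandas(
        statefulProcessor=RunningCount(),
        outputStructType=output_schema,
        outputMode="Update",
        timeMode="None",
    )
```

The returned DataFrame can then be written with `writeStream` like any other streaming result.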

Key Features with transformWithState

Flexible Data Modeling with State Variables

With transformWithState, users can now define multiple independent state variables within a StatefulProcessor based on the object-oriented programming model. These variables function like private class members, allowing for granular state management without requiring a monolithic state structure. This makes it easy to evolve application logic over time by adding or modifying state variables without restarting queries from a new checkpoint directory.

Timers and Callbacks for Event-Driven Processing

Users can now register timers to trigger event-driven application logic. The API supports both processing time (wall clock-based) and event time (column-based) timers. When a timer fires, a callback is issued, allowing for efficient event handling, state updates, and output generation. The ability to list, register, and delete timers ensures precise control over event processing.
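The register, list, delete, and fire cycle can be illustrated with a toy plain-Python registry. This is not the Spark API: the `TimerRegistry` class, its method names, and the `advance` driver are hypothetical and only model the behavior described above.

```python
from typing import Callable, Dict, List, Set


class TimerRegistry:
    """Toy per-key timer store that models register/list/delete/fire."""

    def __init__(self) -> None:
        self._timers: Dict[str, Set[int]] = {}

    def register(self, key: str, expiry_ms: int) -> None:
        self._timers.setdefault(key, set()).add(expiry_ms)

    def delete(self, key: str, expiry_ms: int) -> None:
        self._timers.get(key, set()).discard(expiry_ms)

    def list_timers(self, key: str) -> List[int]:
        return sorted(self._timers.get(key, set()))

    def advance(self, now_ms: int, on_expired: Callable[[str, int], None]) -> None:
        """Invoke the callback once per timer with expiry <= now_ms, then drop it."""
        for key in sorted(self._timers):
            due = [t for t in sorted(self._timers[key]) if t <= now_ms]
            for t in due:
                self._timers[key].discard(t)
                on_expired(key, t)
```

Here `now_ms` stands in for either the wall clock (processing time) or a watermark derived from an event-time column; the registry itself does not care which one drives it.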

Native Support for Composite Data Types

State management is now more intuitive with built-in support for composite data structures:

- ValueState: Stores a single value per grouping key.

- ListState: Maintains a list of values per key, supporting efficient append operations.

- MapState: Enables key-value storage within each grouping key with efficient point lookups.

Spark automatically encodes and persists these state types, reducing the need for manual serialization and improving performance.
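As a rough mental model, the three shapes behave like the plain-Python containers below, each scoped by grouping key. This is an in-memory analogy only, not the Spark classes; the purchase-tracking scenario and all names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

# Three independent state variables, each scoped by grouping key (the user).
last_amount: Dict[str, float] = {}                             # ValueState-like
history: Dict[str, List[float]] = defaultdict(list)            # ListState-like
per_merchant: Dict[str, Dict[str, float]] = defaultdict(dict)  # MapState-like


def record_purchase(user: str, merchant: str, amount: float) -> None:
    """Update all three state variables for one input event."""
    last_amount[user] = amount        # overwrite the single value
    history[user].append(amount)      # append without rewriting the whole list
    totals = per_merchant[user]
    totals[merchant] = totals.get(merchant, 0.0) + amount  # point update by map key
```

Keeping the three variables separate, instead of packing them into one record, is what lets each be read or updated without touching the others.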

Automatic State Expiry with TTL

For compliance and operational efficiency, transformWithState introduces native time-to-live (TTL) support for state variables. This allows users to define expiration policies, ensuring that old state data is automatically removed without requiring manual cleanup.
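The idea of a TTL on a state variable can be sketched in plain Python as follows. The text does not describe how Spark performs the cleanup internally, so this toy `TtlValue` class assumes the simplest policy, a lazy expiry check on read; the class and its methods are hypothetical.

```python
from typing import Dict, Generic, Optional, Tuple, TypeVar

T = TypeVar("T")


class TtlValue(Generic[T]):
    """Toy per-key value with time-to-live; expiry is checked lazily on read."""

    def __init__(self, ttl_ms: int) -> None:
        self.ttl_ms = ttl_ms
        self._data: Dict[str, Tuple[T, int]] = {}  # key -> (value, expires_at_ms)

    def update(self, key: str, value: T, now_ms: int) -> None:
        # Every write restarts the TTL clock for this key.
        self._data[key] = (value, now_ms + self.ttl_ms)

    def get(self, key: str, now_ms: int) -> Optional[T]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if now_ms >= expires_at:
            del self._data[key]  # expired: remove and report as absent
            return None
        return value
```

With a TTL of 1000 ms, a value written at time 0 is still readable at 999 and gone from 1000 onward, with no explicit cleanup call from the application.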

Chaining Operators After transformWithState

With this new API, stateful operators can now be chained after transformWithState, even when using event time as the time mode. By explicitly referencing event-time columns in the output schema, downstream operators can perform late record filtering and state eviction seamlessly, eliminating the need for complex workarounds involving multiple pipelines and external storage.
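Late record filtering by a downstream operator can be pictured with the simplified plain-Python sketch below. It assumes a watermark equal to the largest event time seen so far minus an allowed lateness, advanced after every record; Spark advances its watermark per micro-batch, so this is a deliberate simplification, and the function and parameter names are hypothetical.

```python
from typing import Iterable, List, Optional, Tuple


def filter_late(
    records: Iterable[Tuple[str, int]], allowed_lateness: int
) -> List[Tuple[str, int]]:
    """Keep (name, event_time) records that are not older than the running watermark."""
    watermark: Optional[int] = None
    kept: List[Tuple[str, int]] = []
    for name, event_time in records:
        if watermark is not None and event_time < watermark:
            continue  # late record: dropped by the downstream operator
        kept.append((name, event_time))
        candidate = event_time - allowed_lateness
        watermark = candidate if watermark is None else max(watermark, candidate)
    return kept
```

The filter only works because each record carries its event time, which is why the upstream operator has to expose an event-time column in its output schema.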

Simplified State Initialization

Users can initialize state from existing queries, making it easier to restart or clone streaming jobs. The API allows seamless integration with the state data source reader, enabling new queries to leverage previously written state without complex migration processes.

Schema Evolution for Stateful Queries

transformWithState supports schema evolution, allowing for changes such as:

- Adding or removing fields

- Reordering fields

- Updating data types

Apache Spark automatically detects and applies compatible schema updates, ensuring queries can continue running within the same checkpoint directory. This eliminates the need for full state rebuilds and reprocessing, significantly reducing downtime and operational complexity.
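The three kinds of change can be illustrated with a by-name projection of a stored record onto a newer schema. This plain-Python sketch is not Spark's algorithm, and the text does not specify which changes Spark treats as compatible; the `Schema` representation, the field names, and the int-to-float widening are assumptions made for illustration.

```python
from typing import Any, Callable, Dict, List, Tuple

# A schema as ordered (field name, converter) pairs; names and types are hypothetical.
Schema = List[Tuple[str, Callable[[Any], Any]]]


def read_with_schema(stored: Dict[str, Any], schema: Schema) -> Dict[str, Any]:
    """Project a stored record onto a newer schema by field name."""
    result: Dict[str, Any] = {}
    for name, convert in schema:
        raw = stored.get(name)  # newly added fields are missing and become None
        result[name] = None if raw is None else convert(raw)
    return result  # fields absent from `schema` are dropped; order follows `schema`
```

Reading `{"count": 3, "legacy": "x", "user": "u1"}` with the schema `[("user", str), ("count", float), ("region", str)]` reorders the fields, widens `count` to a float, drops `legacy`, and fills the new `region` field with `None`.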

Native Integration with the State Data Source Reader

For easier debugging and observability, transformWithState is natively integrated with the state data source reader. Users can inspect state variables and query state data directly, streamlining troubleshooting and analysis, including advanced features such as readChangeFeed.

Availability

The transformWithState API is available now with the Databricks Runtime 16.2 release in No-Isolation and Unity Catalog Dedicated Clusters. Support for Unity Catalog Standard Clusters and Serverless Compute will be added soon. The API is also slated to be available in open source with the Apache Spark™ 4.0 release.

Conclusion

We believe that all the feature improvements packed within the new transformWithState API will allow for building a new class of reliable, scalable, and mission-critical operational workloads powering the most important use cases for our customers and users, all within the comfort and ease of use of the Apache Spark DataFrame APIs. Importantly, these changes also set the foundation for future improvements to built-in as well as new stateful operators in Apache Spark Structured Streaming. We're excited about the state management improvements in Apache Spark™ Structured Streaming over the past few years and look forward to the planned roadmap developments in this area in the near future.

You can read more about stateful stream processing and transformWithState on Databricks here.