Robert Triggs / Android Authority

Cloud AI can be impressive, but I crave the added security that only offline, local processing provides, especially in light of DeepSeek reporting user data back to China. Replacing Copilot with DeepSeek on my laptop yesterday made me wonder if I could run large language models offline on my smartphone as well. After all, today's flagship phones claim to be plenty powerful, have pools of RAM, and have dedicated AI accelerators that only the most modern PCs or expensive GPUs can beat. Surely, it can be done.

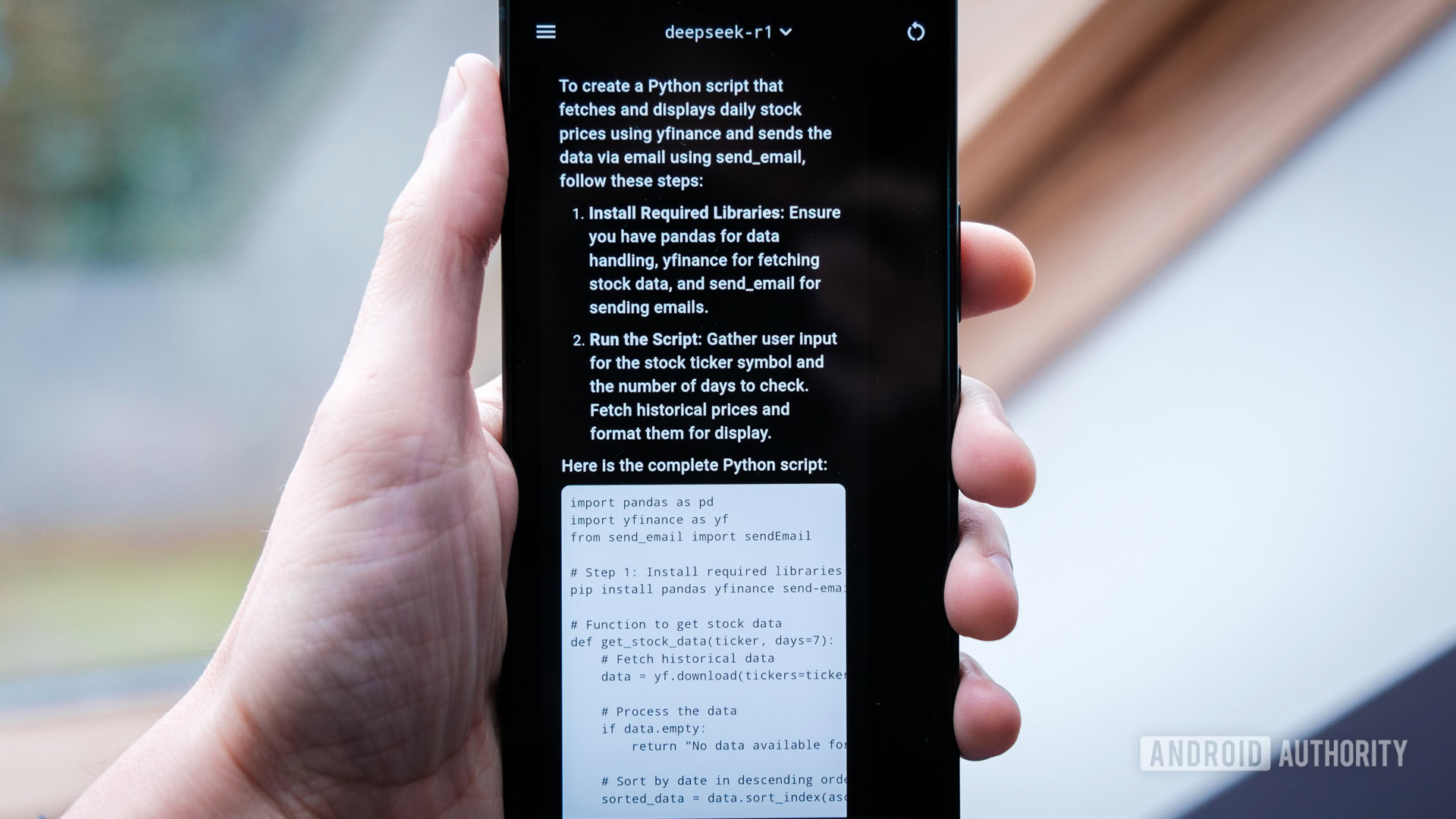

Well, it turns out you can run a condensed version of DeepSeek (and many other large language models) locally on your phone without being tethered to an internet connection. While the responses aren't as fast or accurate as those of the full-sized cloud model, the phones I tested can churn out answers at a brisk reading pace, making them very usable. Importantly, the smaller models are still good at assisting with problem-solving, explaining complex topics, and even producing working code, just like their bigger sibling.

I'm very impressed with the results, given that it runs on something that fits in my pocket. I can't recommend that you all dash out to copy me, but those who are really interested in the ever-developing AI landscape should probably try running local models for themselves.

Robert Triggs / Android Authority

Installing an offline LLM on your phone can be a pain, and the experience isn't as seamless as using Google's Gemini. My time spent digging and tinkering also revealed that smartphones aren't the most beginner-friendly environments for experimenting with or developing new AI tools. That'll need to change if we're ever going to have a competitive market for compelling AI apps that lets users break free from today's OEM shackles.

Local models run surprisingly well on Android, but setting them up is not for the fainthearted.

Surprisingly, performance wasn't really the issue here; the admittedly cutting-edge Snapdragon 8 Elite smartphones I tested run moderately sized seven and eight-billion parameter models remarkably well on just their CPU, with an output speed of 11 tokens per second, a bit faster than most people can read. You can even run the 14-billion parameter Phi-4 if you have enough RAM, though the output falls to a still adequate six tokens per second. However, running LLMs is the hardest I've pushed modern smartphone CPUs outside of benchmarking, and it results in some pretty warm handsets.

Impressively, even the aging Pixel 7 Pro can run smaller three-billion parameter models, like Meta's Llama 3.2, at an adequate five tokens per second, but attempting the larger DeepSeek really pushes the limit of what older phones can do. Unfortunately, there's currently no NPU or GPU acceleration available for any smartphone using the methods I tried, which would give older phones a real shot in the arm. As such, larger models are an absolute no-go, even on Android's most powerful chips.

Without internet access or assistant functions, few will find local LLMs very useful on their own.

Even with powerful modern handsets, I think the vast majority of people will find the use cases for running an LLM on their phone very limited. Impressive models like DeepSeek, Llama, and Phi are great assistants for working on big-screen PC projects, but you'll struggle to make use of their abilities on a tiny smartphone. Currently, in phone form, they can't access the internet or interact with external functions like Google Assistant routines, and it's a nightmare to pass them documents to summarize via the command line. There's a reason phone brands are embedding AI tools into apps like the Gallery: targeting more specific use cases is the best way for most people to interact with models of various kinds.

So, while it's very promising that smartphones can run some of the better compact LLMs out there, there's a long way to go before the ecosystem comes close to supporting consumer choice in assistants. As you'll see below, smartphones aren't a focus for those training or running the latest models, let alone optimizing for the wide range of hardware acceleration capabilities the platform has to offer. That said, I'd love to see more developer investment here, as there's clearly promise.

If you still want to try running DeepSeek or many of the other popular large language models in the safety of your own smartphone, I've detailed two options to get you started.

How to install DeepSeek on your phone (the easy way)

Robert Triggs / Android Authority

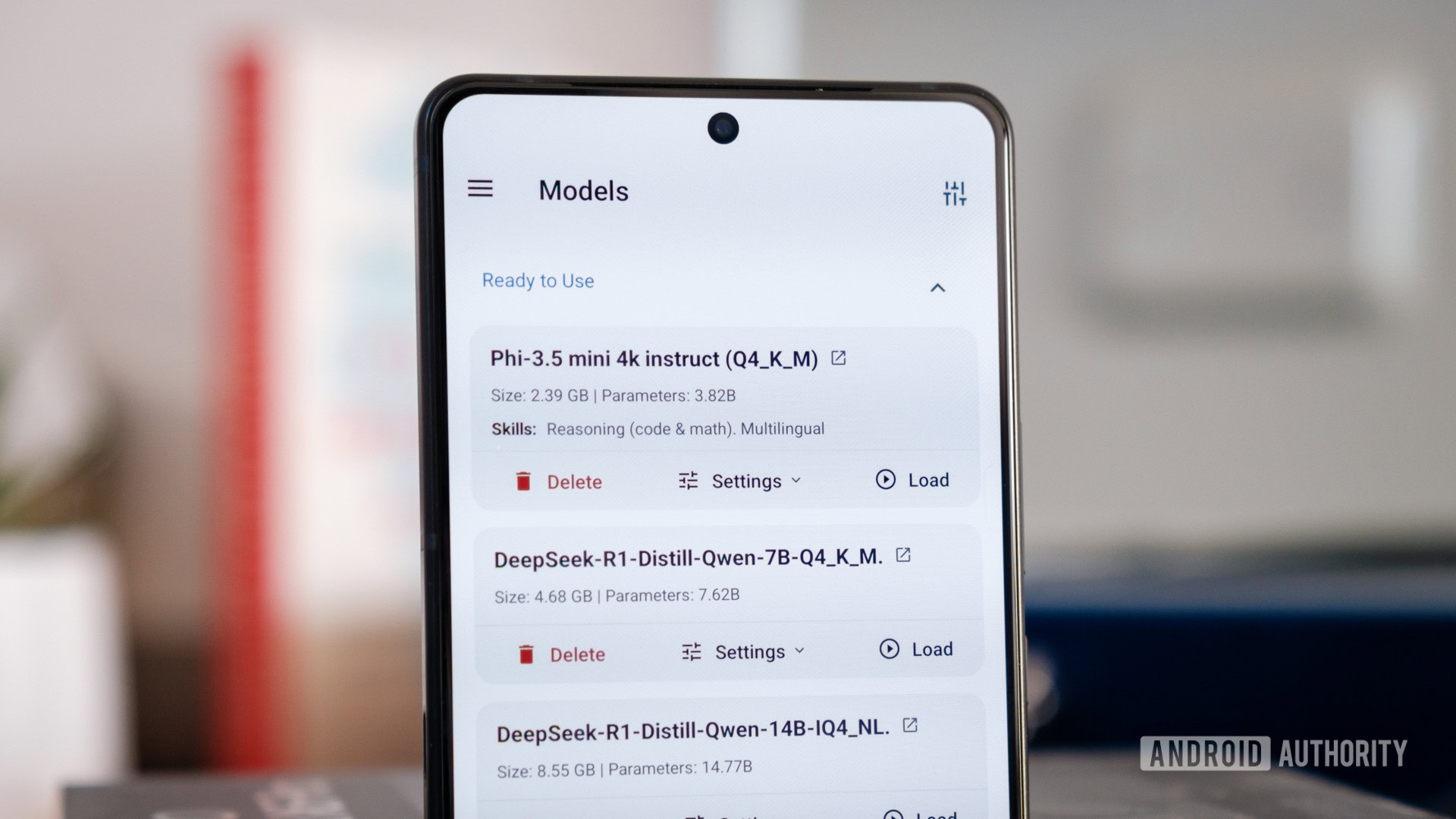

If you want a super-easy solution for chatting with local AI on your Android or iOS handset, you should try the PocketPal AI app. iPhone owners can also try PrivateLLM. You'll still need a phone with a decent processor and a pool of fast RAM; 12GB is adequate in my testing to run smaller, condensed 7b/8b models, but 16GB is better and essential if you want to attempt to tackle 14b.

Through the app, you can access a wide range of models via the popular Hugging Face portal, which hosts DeepSeek, Llama, Phi, and many, many others. In fact, Hugging Face's humongous portfolio is a bit of an obstacle for the uninitiated, and the search functionality in PocketPal AI is limited. Models aren't particularly well labeled in the small UI, so picking the official or optimal one for your device is tricky, beyond avoiding ones that display a memory warning. Thankfully, you can manually import models you've downloaded yourself, which makes the process easier.

Want to run local AI on your phone? PocketPal AI is a super easy way to do just that.

Unfortunately, I experienced a few bugs with PocketPal AI, ranging from failed downloads to unresponsive chats and full-on app crashes. Basically, don't ever navigate away from the app. Quite a few responses also cut off prematurely due to the small default window size (a big problem for the verbose DeepSeek), so although it's user-friendly to start, you'll probably want to dig into more complex settings eventually. I couldn't find a way to delete chats either.

Thankfully, performance is really solid, and the app automatically offloads the model to reduce RAM usage when it's not in use. This is by far the easier way to run DeepSeek and other popular models offline, but there's another way too.

…and the hard way

Robert Triggs / Android Authority

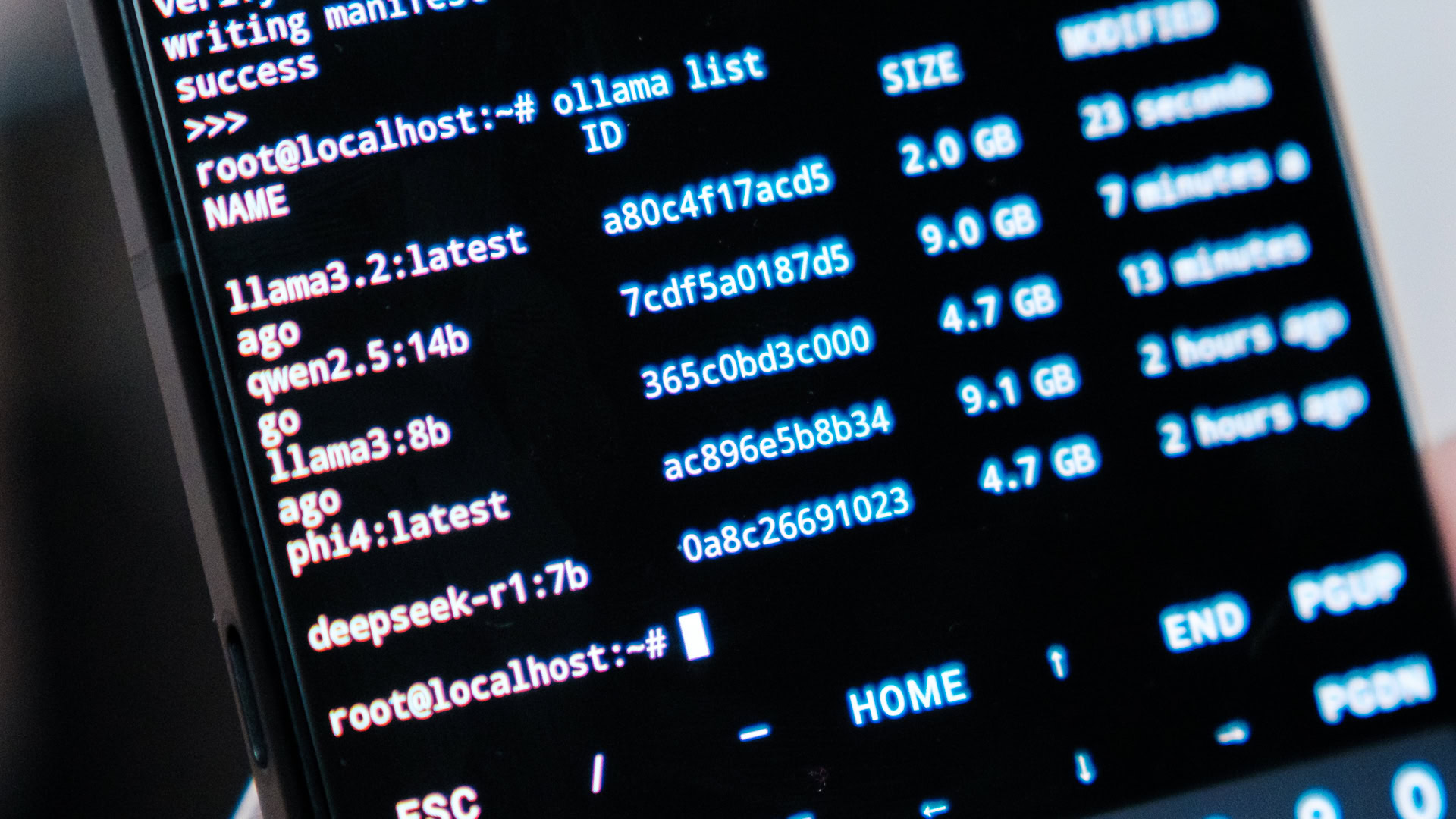

This second method involves command-line wrangling via Termux to install Ollama, a popular tool for running LLMs locally (and the basis for PocketPal AI) that I found to be a bit more reliable than the previous app. There are a couple of ways to install Ollama on your Android phone. I'm going to use this rather clever method by Davide Fornelli, which uses Proot to provide a fresh (and easily removable) Debian environment to work with. It's the easiest to set up and manage, especially as you'll probably end up removing Ollama after playing around for a bit. Performance with Proot isn't quite native, but it's good enough. I suggest reading the full guide to understand the process, but I've listed the essential steps below.

Follow these steps on your Android phone to install Ollama.

- Install and open the Termux app
- `pkg update && pkg upgrade` – upgrade to the latest packages
- `pkg install proot-distro` – install Proot-Distro
- `pd install debian` – install Debian
- `pd login debian` – log in to our Debian environment
- `curl -fsSL https://ollama.com/install.sh | sh` – download and install Ollama
- `ollama serve` – start running the Ollama server

You can exit the Debian environment with CTRL+D at any time. To remove Ollama, all your downloaded models, and the Debian environment, follow the steps below. This will take your phone back to its original state.

- CTRL+C and/or CTRL+D – exit Ollama and make sure you're logged out of the Debian environment in Termux.
- `pd remove debian` – this will delete everything you did in the environment (it won't touch anything else).
- `pkg remove proot-distro` – this is optional, but I'll remove Proot as well (it's only a few MB in size, so you can easily keep it).

With Ollama installed, log in to our Debian environment via Termux and run `ollama serve` to start up the server. You can press CTRL+C to end the server instance and free up your phone's resources. While the server is running, we have a couple of options for interacting with Ollama and running some large language models.

You can open a second window in Termux, log in to our Debian environment, and continue with Ollama command-line interactions. This is the fastest and safest way to interact with Ollama, particularly on low-RAM smartphones, and is (currently) the only way to remove models to free up storage.

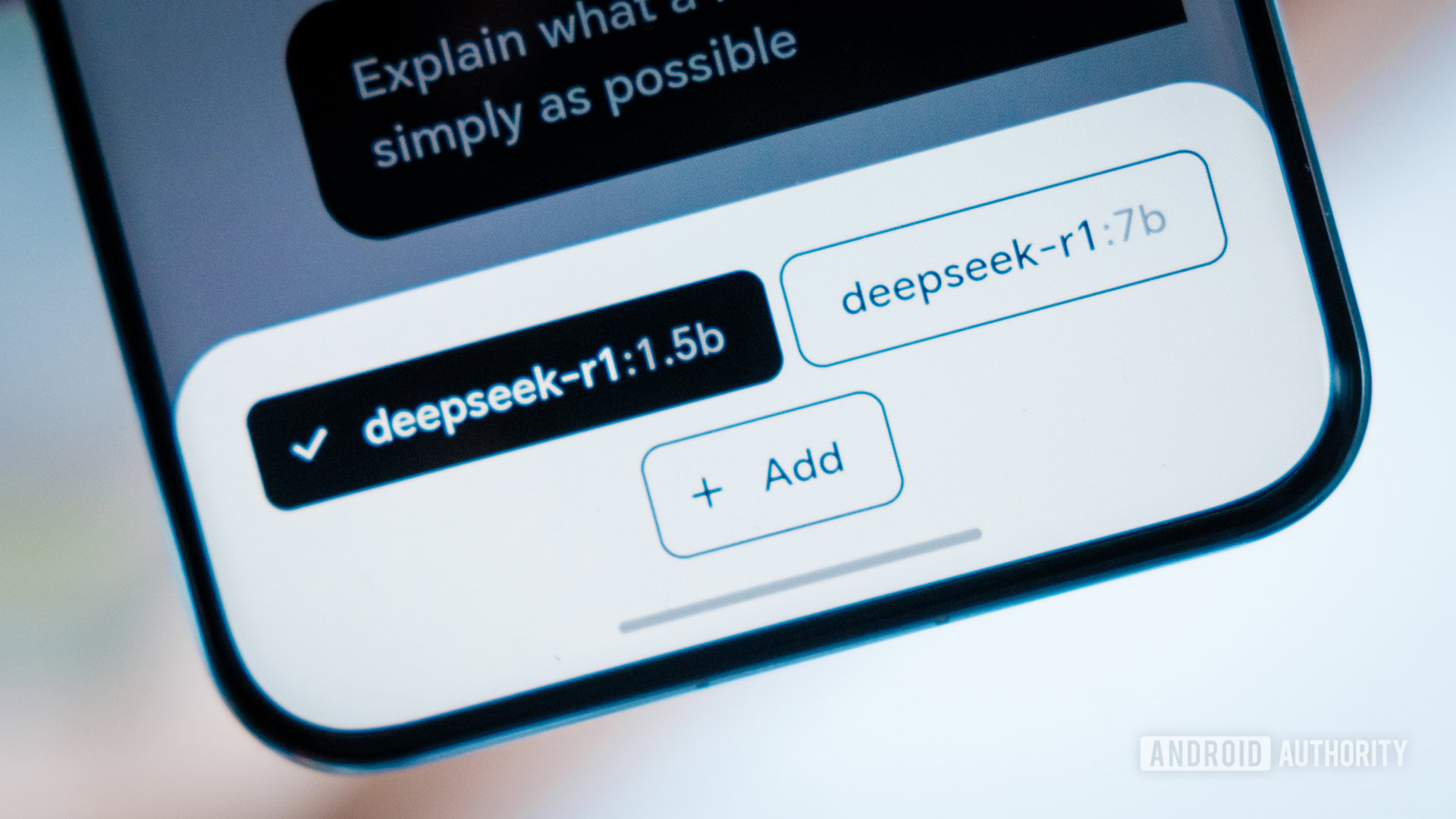

To start chatting with DeepSeek-R1, enter `ollama run deepseek-r1:7b`. Once it's downloaded, you can type away into the command line, and the model will return answers. You can also pick any other suitably sized model from the well-organized Ollama library.

- `ollama run MODEL_NAME` – download and/or run a model
- `ollama stop MODEL_NAME` – stop a model (Ollama automatically unloads models after 5 minutes)
- `ollama pull MODEL_NAME` – download a model
- `ollama rm MODEL_NAME` – delete a model
- `ollama ps` – view the currently loaded models
- `ollama list` – list all the installed models
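If juggling two Termux windows gets tedious, one option is to push the server into the background of a single Debian session. The sketch below is a convenience of my own devising, not part of Davide Fornelli's guide: it assumes `curl` is available (the install step relies on it) and that Ollama listens on its default address, `127.0.0.1:11434`. The helper names and the `~/ollama.log` and `~/ollama.pid` files are hypothetical.

```shell
#!/usr/bin/env bash
# Run inside the Debian environment (after `pd login debian`).
# Assumption: Ollama is installed and uses its default address.
OLLAMA_URL="http://127.0.0.1:11434"

# Start the server in the background, logging to a file instead of the terminal.
start_ollama_bg() {
  ollama serve > "$HOME/ollama.log" 2>&1 &
  echo $! > "$HOME/ollama.pid"   # remember the PID so we can stop it later
}

# Poll the server's root endpoint until it answers, giving up after ~10 seconds.
wait_for_ollama() {
  for _ in $(seq 1 20); do
    if curl -fsS "$OLLAMA_URL/" > /dev/null 2>&1; then
      return 0
    fi
    sleep 0.5
  done
  return 1
}

# Stop the background server to hand the RAM back to the phone.
stop_ollama_bg() {
  [ -f "$HOME/ollama.pid" ] || return 1
  kill "$(cat "$HOME/ollama.pid")"
  rm -f "$HOME/ollama.pid"
}
```

After loading these into the shell (for example with `. ./ollama-bg.sh`, a hypothetical file name), `start_ollama_bg && wait_for_ollama && ollama run deepseek-r1:7b` chats in the same window, and `stop_ollama_bg` frees the memory afterwards. Running the server in the foreground in its own window, as described above, remains the simpler route.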

The alternative is to install JHubi1's Ollama App for Android. It communicates with Ollama and provides a nicer user interface for chatting and installing models of your choice. The downside is that it's another app to run, which eats up precious RAM and can slow a less performant smartphone to a crawl. Responses also feel a bit more sluggish than in the command line, owing to the communication overhead with Ollama. Still, it's a solid quality-of-life upgrade if you're planning to run a local LLM regularly.

Which LLMs can I run on a smartphone?

Robert Triggs / Android Authority

While watching DeepSeek's thought process is impressive, I don't think it's necessarily the most useful large language model out there, especially to run on a phone. Even the seven-billion parameter model will pause during longer conversations on an 8 Elite, which makes it a chore to use when paired with its long chain of reasoning. Fortunately, there are loads more models to choose from that can run faster and still provide excellent results. Meta's Llama, Microsoft's Phi, and Mistral can all run well on a variety of phones; you just need to pick the most appropriate model size for your smartphone's RAM and processing capabilities.

Broadly speaking, three-billion parameter models will need up to 2.5GB of free RAM, while seven and eight-billion parameter models need around 5-7GB to hold them. 14b models can consume up to 10GB, and so on, roughly doubling the amount of RAM every time you double the size of the model. Given that smartphones also have to share RAM with the OS, other apps, and the GPU, you need at least a 50% buffer (even more if you want to keep the model running constantly) to ensure your phone doesn't bog down trying to move the model into swap space. As a rough ballpark, phones with 12GB of fast RAM will run seven and eight-billion parameter models, but you'll need 16GB or more to attempt 14b. And remember, Google's Pixel 9 Pro partitions off some RAM for its own Gemini model.
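To make that rule of thumb concrete, here is a minimal shell sketch. It simply hard-codes this article's ballpark upper bounds (2.5GB for 3b, 7GB for 7b/8b, 10GB for 14b) and the 50% buffer; the function names are hypothetical, and real memory use also depends on the specific model file and context length.

```shell
#!/usr/bin/env bash
# Ballpark upper-bound RAM (GB) per model size, taken from the figures in
# this article; rough estimates, not measurements of any particular model.
model_ram_gb() {
  case "$1" in
    3b) echo 2.5 ;;
    7b|8b) echo 7 ;;
    14b) echo 10 ;;
    *) echo "unknown model size: $1" >&2; return 1 ;;
  esac
}

# Add the ~50% headroom the OS, other apps, and the GPU also need.
required_ram_gb() {
  local need
  need=$(model_ram_gb "$1") || return 1
  awk -v n="$need" 'BEGIN { printf "%g\n", n * 1.5 }'
}

# Compare against the phone's total RAM in GB; prints "ok" or "too small".
fits_on_phone() {
  local size=$1 phone_gb=$2 req
  req=$(required_ram_gb "$size") || return 1
  awk -v r="$req" -v p="$phone_gb" 'BEGIN { print ((r + 0 <= p + 0) ? "ok" : "too small") }'
}
```

For example, `required_ram_gb 7b` prints `10.5`, which fits a 12GB phone, while `fits_on_phone 14b 12` prints `too small`, matching the advice that 14b models call for 16GB.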

Android phones can pick from a huge range of smaller AI models.

Naturally, more parameters require vastly more processing power to crunch the model as well. Even the Snapdragon 8 Elite slows down a bit with Microsoft's Phi-4:14b and Alibaba's Qwen2.5:14b, although both are reasonably usable on the colossal 24GB ASUS ROG Phone 9 Pro I tested with. If you want something that outputs at reading pace on current or last-gen hardware and still offers a decent level of accuracy, stick to 8b at most. For older or mid-range phones, dropping to smaller 3b models sacrifices much more accuracy but should allow for a token output that isn't a complete snail's pace.

Of course, this is only if you want to run AI locally. If you're happy with the trade-offs of cloud computing, you can access far more powerful models like ChatGPT, Gemini, and the full-sized DeepSeek through your web browser or their respective dedicated apps. Still, running AI on my phone has certainly been an interesting experiment, and better developers than me could tap into much more potential with Ollama's API hooks.
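For the curious, those hooks are a local HTTP API served by `ollama serve`. Below is a minimal sketch using the `/api/generate` endpoint with streaming turned off; it assumes the server is running on its default port and that `jq` has been installed in the Debian environment (`apt install jq`), neither of which the steps above cover. The reply's `eval_count` (tokens generated) and `eval_duration` (nanoseconds) fields give a rough tokens-per-second figure; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Assumptions: `ollama serve` is running on the default port and jq is installed.
OLLAMA_URL="http://127.0.0.1:11434"

# Send one prompt to /api/generate and wait for the full, non-streamed JSON reply.
ask_ollama() {
  local model=$1 prompt=$2
  # jq builds the request body so quotes in the prompt are escaped correctly.
  jq -n --arg m "$model" --arg p "$prompt" '{model: $m, prompt: $p, stream: false}' |
    curl -fsS "$OLLAMA_URL/api/generate" -d @-
}

# Generation speed from eval_count (tokens) and eval_duration (nanoseconds).
tokens_per_second() {
  awk -v n="$1" -v ns="$2" 'BEGIN { printf "%.1f\n", n / (ns / 1e9) }'
}

# Example (needs a running server and a pulled model):
#   reply=$(ask_ollama deepseek-r1:7b "Explain swap space in one sentence.")
#   printf '%s\n' "$reply" | jq -r '.response'
#   tokens_per_second "$(printf '%s' "$reply" | jq '.eval_count')" \
#                     "$(printf '%s' "$reply" | jq '.eval_duration')"
```

As a sanity check on the arithmetic, 110 tokens generated over 10 seconds (10,000,000,000 ns) works out to `11.0`, the kind of rate the Snapdragon 8 Elite phones managed with 7b and 8b models.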