What you need to know

- Google highlighted the rollout of its new SAIF Risk Assessment questionnaire for AI system creators.

- The assessment will ask a series of in-depth questions about a creator’s AI model and deliver a full “risk report” on potential security issues.

- Google has been focused on security and AI, especially since it brought AI safety practices to the White House.

Google states the “potential of AI is immense,” which is why this new Risk Assessment is arriving for AI system creators.

In a blog post, Google states the SAIF Risk Assessment is designed to help AI models created by others adhere to the appropriate security standards. Those creating new AI systems can find this questionnaire at the top of the SAIF.Google homepage. The Risk Assessment will run them through several questions regarding their AI. It will touch on topics like training, “tuning and evaluation,” generative AI-powered agents, access controls and data sets, and much more.

The purpose of such an in-depth questionnaire is to let Google’s tool generate an accurate and appropriate list of actions to secure the software.

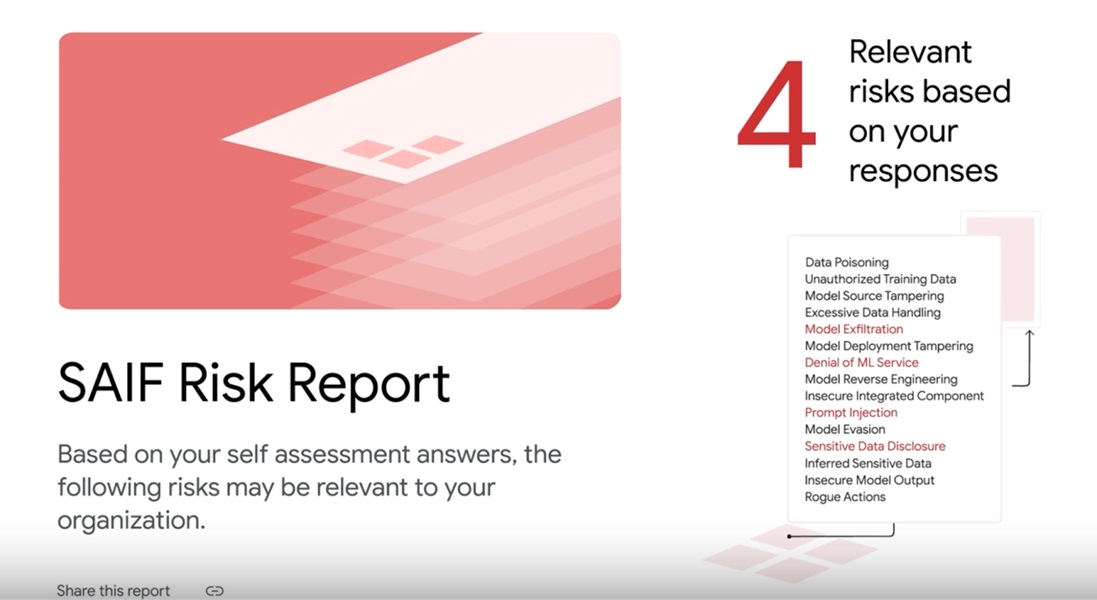

The post states that users will find a detailed report of “specific” risks to their AI system once the questionnaire is over. Google states AI models could be prone to risks such as data poisoning, prompt injection, model source tampering, and more. The Risk Assessment will also inform AI system creators why the tool flagged a specific area as risk-prone. The report will go into detail about any potential “technical” risks, too.

Additionally, the report will include ways to mitigate such risks before they are exploited or become too much of a problem in the future.

Google highlighted progress with its recently created Coalition for Secure AI (CoSAI). According to its post, the company has partnered with 35 industry leaders to debut three technical workstreams: Supply Chain Security for AI Systems, Preparing Defenders for a Changing Cybersecurity Landscape, and AI Risk Governance. Using these “focus areas,” Google states the CoSAI is working to create usable AI security solutions.

Google started slow and cautious with its AI software, which still rings true as the SAIF Risk Assessment arrives. Of course, one of the highlights of its slow approach was its AI Principles and being responsible for its software. Google stated, “… our approach to AI must be both bold and responsible. To us that means developing AI in a way that maximizes the positive benefits to society while addressing the challenges.”

The other side is Google’s efforts to advance AI safety practices alongside other big tech companies. The companies brought these practices to the White House in 2023, which included the necessary steps to earn the public’s trust and encourage stronger security. Additionally, the White House tasked the group with “protecting the privacy” of those who use their AI platforms.

The White House also tasked the companies with developing and investing in cybersecurity measures. It seems that has continued on Google’s side, as we’re now seeing its SAIF project go from conceptual framework to software that’s put to use.