(3rdtimeluckystudio/Shutterstock)

Monetary providers companies are wanting to undertake generative AI to chop prices, develop revenues, and improve buyer satisfaction, as are many organizations. Nevertheless, the dangers related to GenAI are usually not trivial, particularly in monetary providers. TCI Media just lately hosted consultants from EY to assist practitioners in FinServ get began on their accountable AI journeys.

Throughout the current HPC + AI on Wall Avenue occasion, which happened in New York Metropolis in mid-September, TCI Media welcomed two AI consultants from EY Americas, together with Rani Bhuva, a principal at EY and EY Americas Monetary Companies Accountable AI Chief, and Kiranjot Dhillon, a senior supervisor at EY and the EY Americas Monetary Companies AI Chief.

Of their speak, titled “Accountable AI: Regulatory Compliance Traits and Key Concerns for AI Practitioners,” Bhuva and Dhillon mentioned lots of the challenges that monetary providers corporations face when attempting to undertake AI, together with GenAI, in addition to a few of the steps that corporations can take to get began.

FinServ corporations aren’t any stranger to regulation, and so they’ll discover loads of that taking place in GenAI all over the world. The European Union’s AI Act has gained lots of consideration globally, whereas right here within the US, there are about 200 potential knowledge and AI payments being drawn up on the state degree, based on Bhuva.

Corporations should deliver quite a few consultants to the desk to develop and deploy AI responsibly (Picture courtesy EY)

On the federal degree, many of the motion up to now has occurred with the NIST AI Framework from early 2023 and President Joe Biden’s October 2023 government order, she mentioned. The Federal Communications Fee (FCC) and the Federal Reserve have instructed monetary corporations to take the rules they’ve handed over the previous 10 years round mannequin threat administration and governance, and apply that to GenAI, she mentioned.

Federal regulators are nonetheless principally in studying mode in terms of GenAI, she mentioned. Many of those companies, corresponding to US Treasury and Shopper Monetary Safety Board (CFPB), have issued requests for data (RFIs) to get suggestions from the sector, whereas others, just like the Monetary Business Regulatory Authority (FINR) and the Securities and Trade Fee (SEC) have clarified some rules round buyer knowledge in AI apps.

“One factor that has clearly emerged is alignment with the NIST,” Bhuva mentioned. “The problem from the NIST, after all, is that it hasn’t taken under consideration the entire different regulatory issuances inside monetary providers. So if you concentrate on what’s occurred up to now decade, mannequin threat administration, TPRM [third-party risk management], cybersecurity–there’s much more element inside monetary providers regulation that applies at present.”

So how do FinServ corporations get began? EY recommends that corporations take a step again and have a look at the instruments they have already got in place. To make sure compliance, corporations ought to have a look at three particular areas.

- AI governance framework – An overarching framework that encompasses mannequin threat administration, TPRM [third-party risk management], and cybersecurity;

- AI stock – A doc describing the entire AI parts and belongings, together with machine studying fashions and coaching knowledge, that your organization has developed up to now;

- AI reporting – A system to watch and report on the performance of AI methods, particularly high-risk AI methods.

GenAI is altering shortly, and so are the dangers that it entails. The NIST just lately issued steering on how to deal with GenAI-specific dangers. EY’s Bhuva referred to as out one of many dangers: the tendency for AI fashions to make issues up.

“Everybody’s been utilizing the time period ‘hallucination,’ however the NIST is particularly involved with anthropomorphization of AI,” she mentioned. “And so the thought was that hallucination makes AI appear too human. So that they got here up with the phrase confabulation to explain that. I haven’t really heard anybody use confabulation. I feel everyone seems to be caught on hallucinations, however that’s on the market.”

Rani Bhuva (left), a principal at EY and EY Americas Monetary Companies Accountable AI Chief, and Kiranjot Dhillon, a senior supervisor at EY and the EY Americas Monetary Companies AI Chief, current at 2024 HPC + AI on Wall Avenue

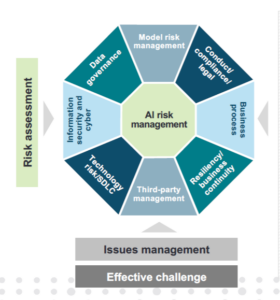

There are lots of points to accountable AI growth, and bringing all of them collectively right into a cohesive program with efficient controls and validation just isn’t a straightforward activity. To do it proper, an organization should adapt quite a lot of separate applications, all the pieces from mannequin threat administration and regulatory compliance to knowledge safety and enterprise continuity. Simply getting everybody to work collectively in the direction of this finish is a problem, Bhuva mentioned.

“You actually need to ensure that the suitable events are on the desk,” she mentioned. “This will get to be pretty sophisticated as a result of it’s essential to have totally different areas of experience, given the complexity of generative AI. Are you able to discuss whether or not or not you’ve successfully addressed all controls related to generative AI until you verify the field on privateness, until you verify the field on TPRM, knowledge governance, mannequin threat administration as effectively?”

Following ideas of moral AI is one other ball of wax altogether. Because the guidelines of moral AI usually aren’t legally enforceable, it’s as much as a person firm whether or not they may keep away from utilizing massive language fashions (LLMs) which have been educated on copyrighted knowledge, Bhuva identified. And even when an LLM supplier indemnifies your organization in opposition to copyright lawsuits, is it nonetheless moral to make use of the LLM if you recognize it was educated on copyrighted knowledge anyway?

“One other problem, if you concentrate on the entire assurances on the international degree, all of them discuss privateness, they discuss equity, they discuss explainability, accuracy, safety–the entire ideas,” Bhuva mentioned. “However lots of these ideas battle with one another. So to make sure that your mannequin is correct, you essentially want lots of knowledge, however you is perhaps violating potential privateness necessities as a way to get all that knowledge. You is perhaps sacrificing explainability for accuracy as effectively. So there’s lots that you may’t resolve for from a regulatory or legislative perspective, and in order that’s why we see lots of curiosity in AI ethics.”

Implementing Accountable GenAI

EY’s Kiranjot Dhillon, who’s an utilized AI scientist, supplied a practitioner’s view of accountable AI to the HPC + AI on Wall Avenue viewers.

One of many massive challenges that GenAI practitioners are going through proper now–and one of many massive the reason why many GenAI apps haven’t been put into manufacturing–is the issue in understanding precisely how GenAI methods will really behave in operational environments, Dhillon mentioned.

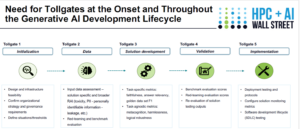

“One of many key root causes is considering by accountable AI and these threat mitigation practices in the direction of the top of the solution-build life cycle,” she mentioned. “It’s extra retrofitting and overlaying these necessities to ensure that they’re met, versus serious about that every one the best way on the onset.”

It’s exhausting to get readability into that while you construct the instrumentation that can inform that query into the system after-the-fact. It’s significantly better to do it from the start, she mentioned.

“Accountable necessities have to be thought by proper on the initialization stage,” Dhillon mentioned. “And applicable toll gates have to be then trickled by the person subsequent steps and go all through operationalization and acquiring approvals in these particular person steps as effectively.”

Because the bones of the GenAI methods are laid down, it’s essential for the builders and designers to consider the metrics and different standards they need to accumulate to make sure that they’re assembly their accountable AI targets, she mentioned. Because the system, it’s as much as the accountable AI crew members–and presumably even a problem crew, or a “pink crew”–to step in and choose whether or not the necessities really are being met.

Dhillon helps the usage of consumer guardrails to construct accountable GenAI methods. These methods, corresponding to Nvidia’s Nemo, can work on each the enter to the LLM in addition to the output. They’ll forestall sure requests from reaching the LLM, and instruct the LLM to not reply in sure methods.

“You may take into consideration topical guardrails the place the answer just isn’t digressing from the subject at hand,” Dhillon mentioned. “It could possibly be security and safety guardrails, like attempting to cut back hallucinations or confabulations, after we speak within the NIST phrases, and attempting to floor the answer an increasing number of. Or stopping the answer from reaching out to an exterior, doubtlessly unsafe purposes proper on the supply degree. So having these eventualities recognized, captured, and tackled is what guardrails actually present us.”

Typically, the most effective guardrail for an LLM is a second LLM that can oversee the primary LLM and preserve an eye fixed out for issues like logical correctness, reply relevancy, or context relevancy, that are highly regarded within the RAG house, Dhillon mentioned.

There are additionally some finest practices in terms of utilizing immediate engineering in a accountable method. Whereas the info is relied upon to coach the mannequin in conventional machine studying, in GenAI, it’s generally finest to offer the pc express directions to comply with, Dhillon mentioned.

“It’s considering by what you’re in search of the LLM to do and instructing it precisely what you anticipate for it to do,” she mentioned. “And it’s actually so simple as that. Be descriptive. Be verbose in what your expectations of the LLM and instructing it to not reply when sure queries are requested, or answered on this means or that means.”

Relying on which kind of prompting you utilize, corresponding to zero-shot prompting, few-shot prompting, or chain-of-thought prompting, the robustness of the ultimate GenAI answer will differ.

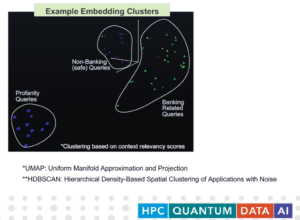

Lastly, it’s essential to have a human within the loop to watch the GenAI system and make sure that it’s not going off the rails. Throughout her presentation, Dhillon confirmed how having a visualization software that may use automated statistical strategies to cluster numerous responses can assist the human shortly spot any anomalies or outliers.

“The thought right here is {that a} human evaluator may shortly have a look at it and see that stuff that’s falling inside that greater, bean formed cluster may be very near what the information base is, so it’s almost certainly related queries,” she mentioned. “And as you begin to sort of go additional away from that key cluster, you’re seeing queries which may perhaps be tangentially associated. All the best way to the underside left is a cluster which is, upon guide evaluate, turned out to be profane queries. In order that’s why it’s very far off within the visualized dimensional house from the stuff that you’d anticipate the LLM to be answered. And at that time, you possibly can create the best varieties of triggers to usher in guide intervention.”

You possibly can watch Bhava and Dhillon’s full presentation, in addition to the opposite recorded HPC +

AI on Wall Avenue shows, by registering at www.hpcaiwallstreet.com.

Associated Gadgets:

Biden’s Government Order on AI and Information Privateness Will get Principally Favorable Reactions

Bridging Intent with Motion: The Moral Journey of AI Democratization

NIST Places AI Threat Administration on the Map with New Framework