In this post I’m going to show you how I tracked the location of my Tesla Model 3 in real time and plotted it on a map. I walk through an end-to-end integration: requesting data from the car, streaming it into a Kafka topic, and using Rockset to expose the data via its API to create real-time visualisations in D3.

Getting started with Kafka

When starting with any new tool I find it best to look around and see the art of the possible. Within the Rockset console there’s a catalog of out-of-the-box integrations that allow you to attach Rockset to any number of existing applications you may have. The one that immediately caught my eye was the Apache Kafka integration.

This integration allows you to take data that’s being streamed into a Kafka topic and make it immediately available for analytics. Rockset does this by consuming the data from Kafka and storing it within its analytics platform almost instantly, so you can begin querying this data right away.

There are a number of great posts that outline in detail how the Rockset and Kafka integration works and how to set it up, but I’ll give a quick overview of the steps I took to get this up and running.

Setting up a Kafka Producer

To get started we’ll need a Kafka producer to add our real-time data onto a topic. The dataset I’ll be using is a real-time location tracker for my Tesla Model 3. In Python I wrote a simple Kafka producer that requests the real-time location from my Tesla every 5 seconds and sends it to a Kafka topic. Here’s how it works.

Firstly we need to set up the connection to the Tesla. To do this I used the Smartcar API and followed their getting started guide. You can try it for free and make up to 20 requests a month. If you want to make more calls than this there’s a paid option.

Once you’re authorised and have all your access tokens, you can use the Smartcar API to fetch the vehicle info.

```python
import smartcar

# `access` is the token dictionary returned by the Smartcar OAuth flow
vehicle_ids = smartcar.get_vehicle_ids(access['access_token'])['vehicles']

# instantiate the first vehicle in the vehicle id list
vehicle = smartcar.Vehicle(vehicle_ids[0], access['access_token'])

# get vehicle info to test the connection
info = vehicle.info()
print(info)
```

For me, this returns a JSON object with the following properties.

```json
{
  "id": "XXXX",
  "make": "TESLA",
  "model": "Model 3",
  "year": 2019
}
```

Now that we’ve successfully connected to the car, we need code that requests the car’s location every 5 seconds and sends it to our Kafka topic.

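```python
import json
import time

from kafka import KafkaProducer

# initialise a kafka producer
producer = KafkaProducer(bootstrap_servers=['localhost:1234'])

while True:
    # get the vehicle's location using the Smartcar API
    location = vehicle.location()

    # keep just the coordinates, under the lat/long keys queried later in Rockset
    # (assumes the SDK returns them under location['data'])
    payload = {'lat': location['data']['latitude'],
               'long': location['data']['longitude']}

    # send the location as a JSON byte string to the tesla-location topic
    producer.send('tesla-location', json.dumps(payload).encode('utf-8'))
    time.sleep(5)
```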

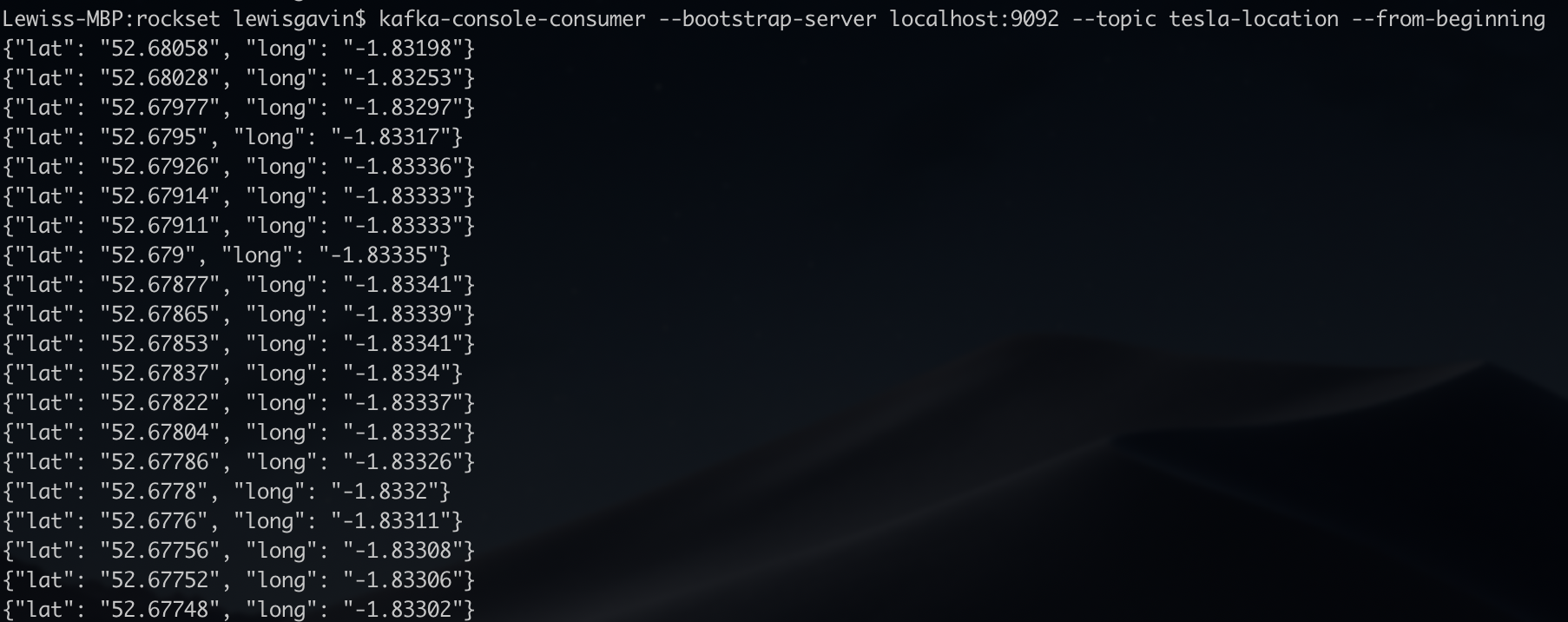

Once this is running we can double check it’s working by using the Kafka console consumer to display the messages as they’re being sent in real time. The output should look similar to Fig 1. Once that’s confirmed, it’s time to hook this into Rockset.

Fig 1. Kafka console consumer output
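The sketch below shows what each message in the console consumer should look like. It assumes every message is a JSON object with `lat` and `long` keys, which are the field names the rest of this post reads back out of Rockset. The helper names are hypothetical, and the coordinates are illustrative, not real vehicle data.

```python
import json

def encode_location(lat: float, long: float) -> bytes:
    """Serialise one reading as the UTF-8 JSON bytes a Kafka producer would send."""
    return json.dumps({"lat": lat, "long": long}).encode("utf-8")

def decode_location(message: bytes) -> dict:
    """Parse a message value back into a dict, as a consumer would."""
    return json.loads(message.decode("utf-8"))

msg = encode_location(51.5074, -0.1278)
print(msg)  # b'{"lat": 51.5074, "long": -0.1278}'
print(decode_location(msg)["long"])  # -0.1278
```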

Streaming a Kafka Topic into Rockset

The team at Rockset have made connecting to an existing Kafka topic quick and easy via the Rockset console.

- Create Collection

- Then select Apache Kafka

- Create Integration – Give it a name, choose a format (JSON for this example) and enter the topic name (tesla-location)

- Follow the 4 step process provided by Rockset to install Kafka Connect and get your Rockset Sink running

It’s really as simple as that. To verify data is being sent to Rockset you can simply query your new collection. The collection name will be the name you gave in step 3 above. Within the Rockset console, head to the Query tab and run a simple select from your collection.

```sql
select * from commons."tesla-integration"
```

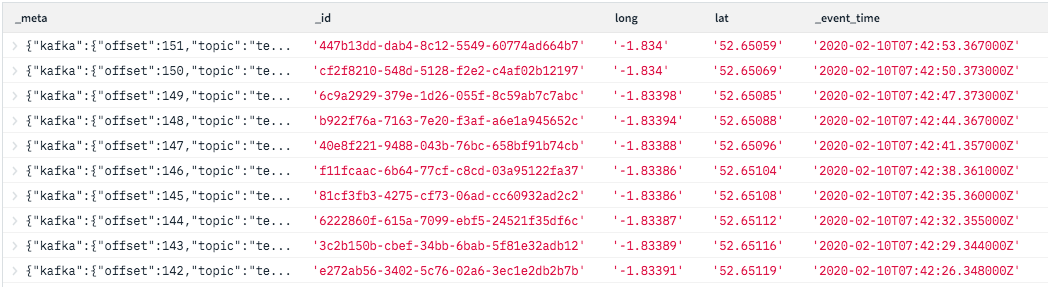

In the results you’ll see the lat and long you sent to the Kafka topic, along with metadata that Rockset has added: an ID, a timestamp and some Kafka metadata, as shown in Fig 2. These fields will be useful for understanding the order of the data when plotting the location of the vehicle over time.

Fig 2. Rockset console results output
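A query without ORDER BY doesn’t promise any particular row order, so the `_event_time` field is what lets us put the journey back in sequence. The sketch below sorts rows in Python. It assumes each row is a dict whose `_event_time` is an ISO 8601 string; the exact timestamp format Rockset returns is an assumption here.

```python
from datetime import datetime

def order_by_event_time(rows: list) -> list:
    """Return rows sorted oldest-first by their _event_time timestamp."""
    def parse(ts: str) -> datetime:
        # fromisoformat() before Python 3.11 rejects a trailing "Z", so normalise it
        return datetime.fromisoformat(ts.replace("Z", "+00:00"))
    return sorted(rows, key=lambda row: parse(row["_event_time"]))
```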

Connecting to the REST API

From here, my next natural thought was how to expose the data I have in Rockset to a front end web application. Whether it’s the real-time location data from my car, weblogs or any other data, having it in Rockset gives me the power to analyse it in real time. Rather than using the built-in SQL query editor, I was looking for a way to let a web application request the data. That was when I came across the REST API connector in the Rockset Catalog.

Fig 3. REST API Integration

From here I found links to the API docs with all the information required to authorise and send requests to the built-in API (API keys can be generated within the Manage menu, under API Keys).

Using Postman to Test the API

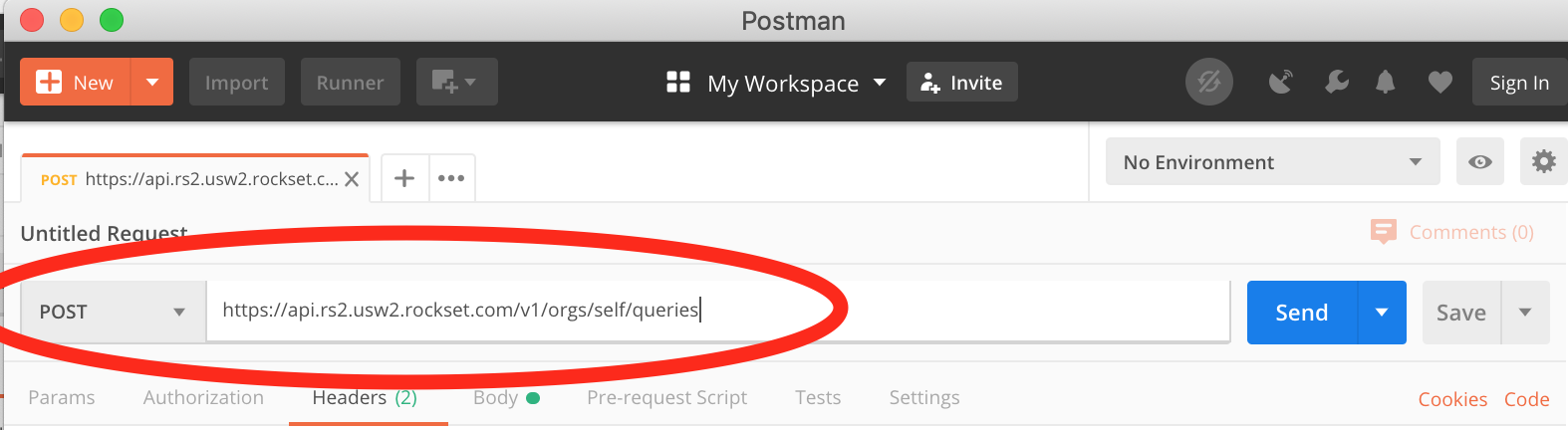

Once you have generated your API key, it’s time to test the API. For testing I used an application called Postman, which provides a nice GUI for API testing and lets us get up and running with the Rockset API quickly.

Open a new tab in Postman and it will create a window for building a request. The first thing we need to do is enter the URL we want to send our request to. The Rockset API docs state that the base address is https://api.rs2.usw2.rockset.com and to query a collection you need to append /v1/orgs/self/queries – so add this into the request URL box. The docs also say the request type needs to be POST, so change that in the drop down too, as shown in Fig 4.

Fig 4. Postman setup

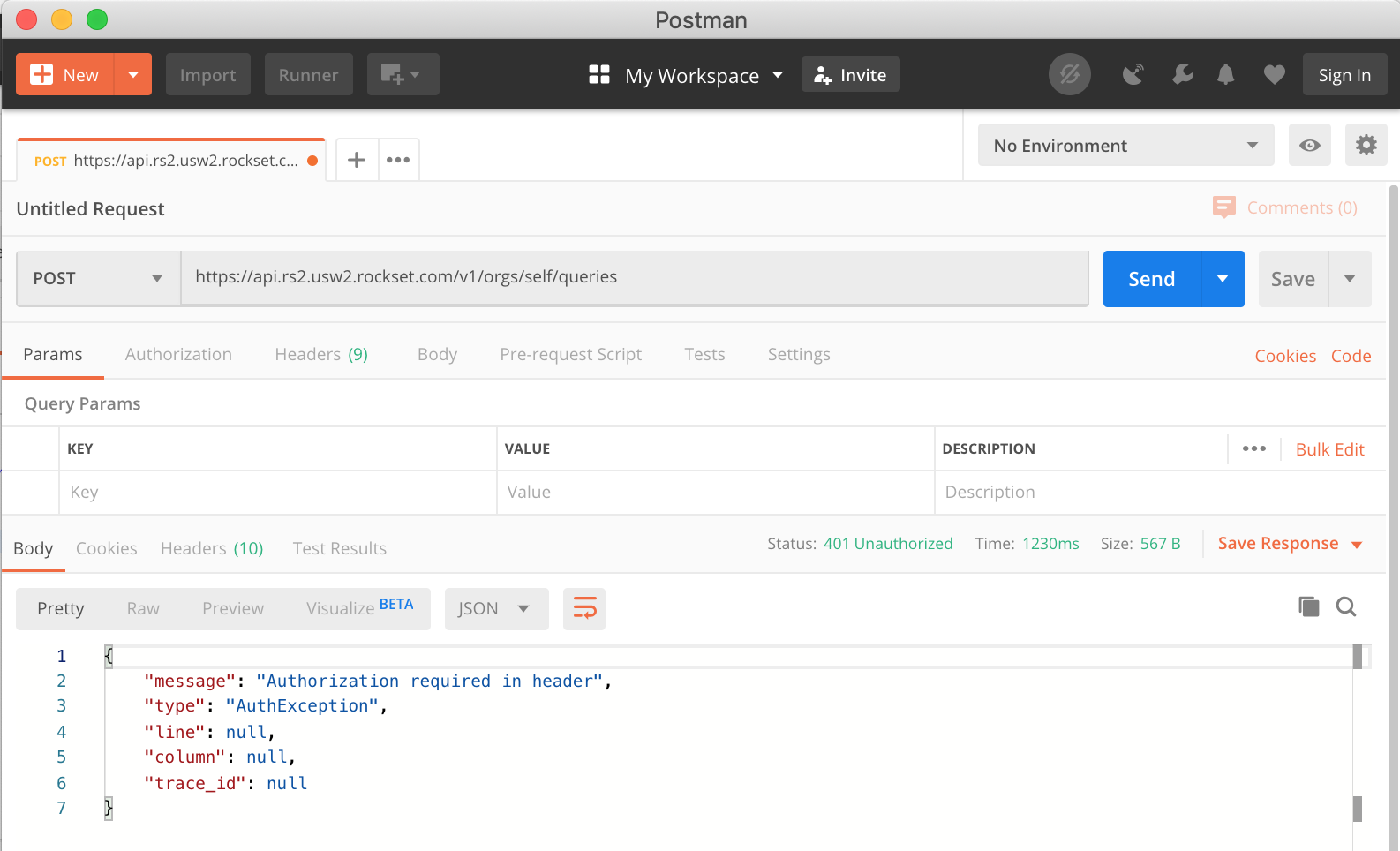

We can hit Send now to test that the URL we have provided works. If it does, you should get a 401 response from the Rockset API saying that authorization is required in the header, as shown in Fig 5.

Fig 5. Auth error

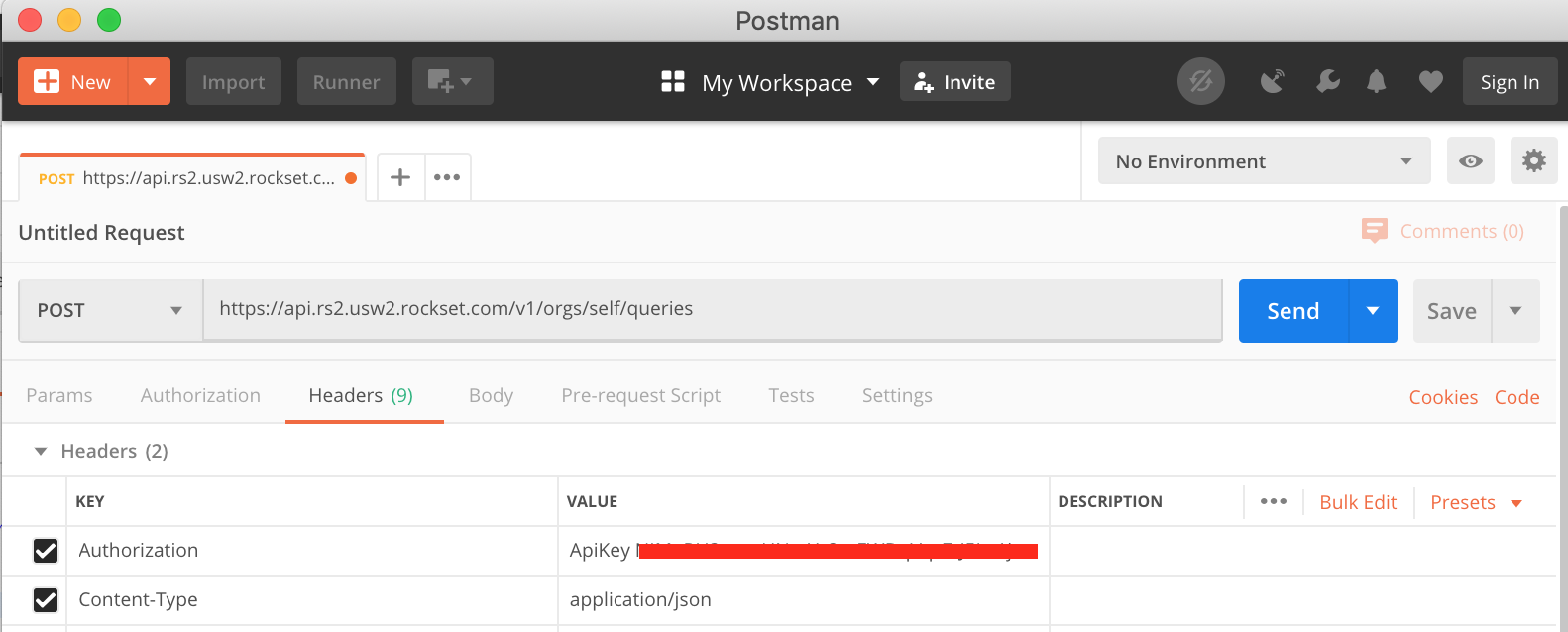

To resolve this, we need the API key generated earlier. If you’ve lost it, don’t worry: it’s available in the Rockset Console under Manage > API Keys. Copy the key, then back in Postman, under the “Headers” tab, add a header with the key Authorization as shown in Fig 6. We’re essentially adding a key value pair to the header of the request. It’s important to type ApiKey followed by a space into the value box before pasting in your key (mine has been obfuscated in Fig 6). While there, we can also add a Content-Type header and set it to application/json.

Fig 6. Postman authorization
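The same two headers can be built in code. The sketch below assumes the `ApiKey <key>` scheme described above. The function name is hypothetical and the key is a placeholder, not a real key.

```python
def rockset_headers(api_key: str) -> dict:
    """Build the headers Postman sends: the API key plus a JSON content type."""
    return {
        # the value must start with "ApiKey " followed by the key itself
        "Authorization": f"ApiKey {api_key}",
        "Content-Type": "application/json",
    }

print(rockset_headers("YOUR_API_KEY"))
```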

Again, at this point we can hit Send, and we should get a different response asking us to provide a SQL query in the request. This is where the benefits of Rockset start to show: on the fly, we can send SQL requests to our collection, and Rockset quickly returns results that a front end application can use.

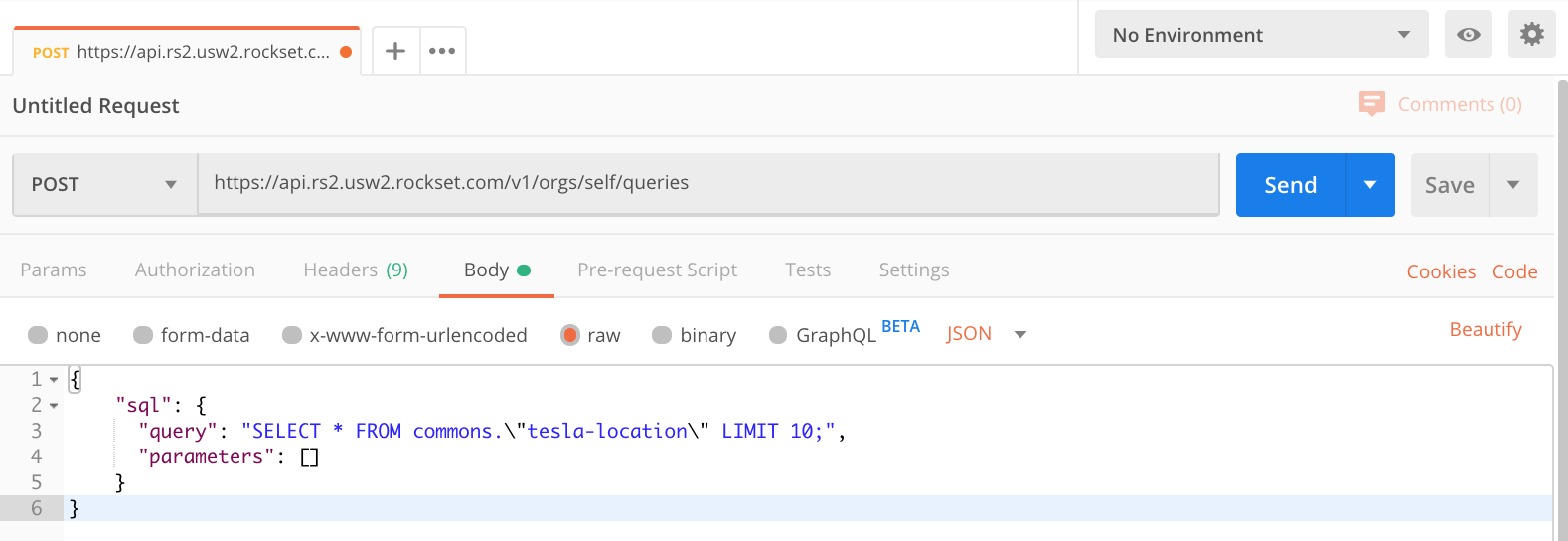

To add a SQL query to the request, use the Body tab within Postman. Click the Body tab, make sure ‘raw’ is selected and ensure the type is set to JSON; see Fig 7 for an example. Within the body field we now need to provide a JSON object, in the format required by the API, that contains our SQL statement.

Fig 7. Postman raw body

As you can see in Fig 7, I’ve started with a simple SELECT statement that just grabs 10 rows of data.

```json
{
  "sql": {
    "query": "select * from commons.\"tesla-location\" LIMIT 10",
    "parameters": []
  }
}
```

It’s important to use the collection name you created earlier. If it contains special characters, like mine does, put it in quotes and escape the quote characters.
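Escaping quotes by hand is easy to get wrong. If you build the body in code, a JSON serialiser does the escaping for you. The sketch below uses Python’s standard `json` module, and the helper name is hypothetical.

```python
import json

def build_query_body(collection: str, limit: int = 10) -> str:
    """Return the JSON request body for a simple SELECT against one collection."""
    # double quotes around the collection name let SQL accept the hyphen;
    # json.dumps turns each of them into \" inside the JSON string
    query = f'select * from commons."{collection}" LIMIT {limit}'
    return json.dumps({"sql": {"query": query, "parameters": []}})

print(build_query_body("tesla-location"))
# {"sql": {"query": "select * from commons.\"tesla-location\" LIMIT 10", "parameters": []}}
```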

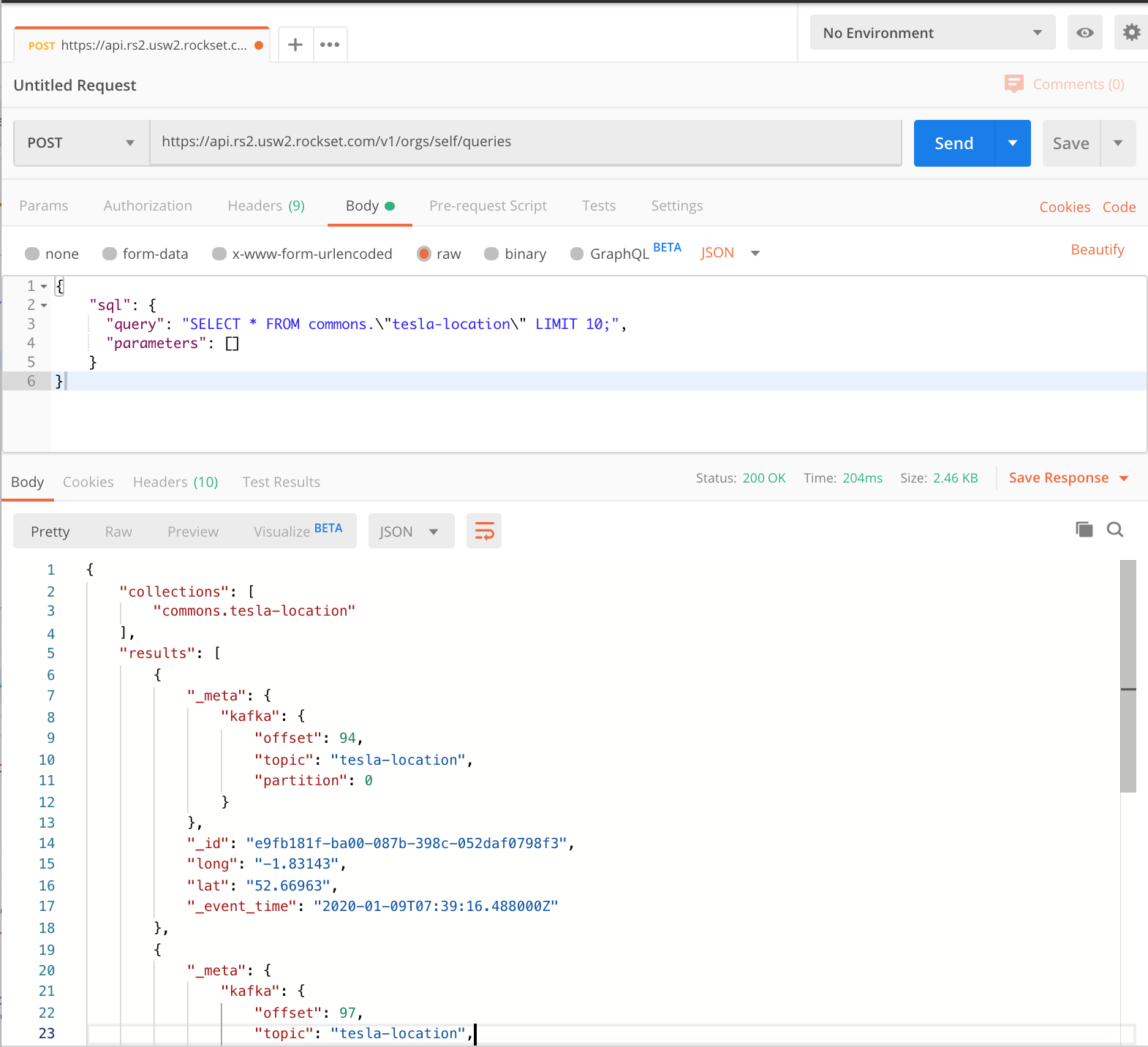

Now we really are ready to hit Send and see how quickly Rockset can return our data.

Fig 8. Rockset results

Fig 8 shows the results returned by the Rockset API. It includes a collections object, so we know which collections were queried, and then an array of results. Each result contains some Kafka metadata, an event ID and timestamp, and the lat long coordinates that our producer was capturing from the Tesla in real time. According to Postman the request returned in 0.2 seconds, which is perfectly acceptable for any front end system.
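Pulling the coordinates out of that response is a short loop over `results`. The sketch below assumes the response has the shape described above: a `results` array whose rows carry `_id`, `lat` and `long`. The function name is hypothetical.

```python
import json

def extract_coordinates(response_text: str) -> list:
    """Return (_id, lat, long) tuples from a Rockset query response body."""
    response = json.loads(response_text)
    return [(row["_id"], row["lat"], row["long"]) for row in response["results"]]
```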

Of course, the possibilities don’t stop here. You’ll often want to write more complex SQL queries and test them to view the response, and now that we’re set up in Postman this becomes a trivial task. We can just change the SQL and keep hitting Send until we get it right.

Visualising Data using D3.js

Now that we can successfully call the API to return data, we want to use it to serve data to a front end. I’m going to use D3.js to visualise our location data and plot it in real time as the car is being driven.

The flow will be as follows. Our Kafka producer will fetch location data from the Tesla every 5 seconds and add it to the topic. Rockset will consume this data into a Rockset collection and expose it via the API. Our D3.js visualisation will poll the Rockset API for new data every 5 seconds and plot the latest coordinates on a map of the UK.

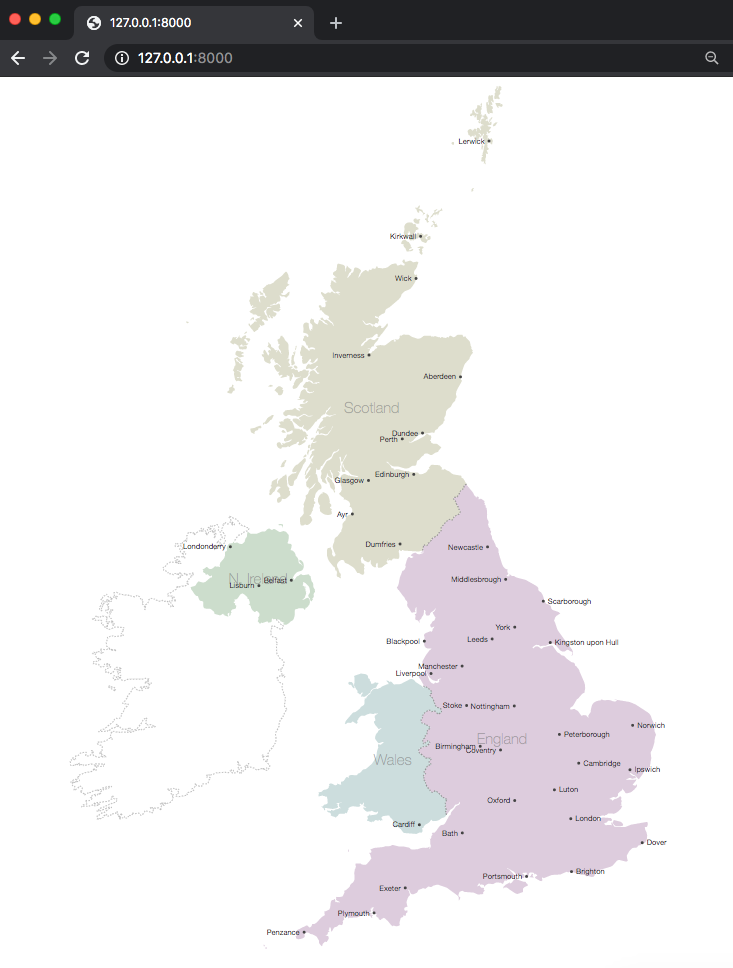

The first step is to get D3 to render a UK map. I used a pre-existing example to build the HTML file. Save the HTML file in a folder and name it index.html. To serve it so it can be viewed in the browser, I used Python. If you have Python installed on your machine, you can simply run the following to start a web server in the current directory.

```shell
# Python 2
python -m SimpleHTTPServer
# Python 3
python3 -m http.server
```

By default it will run the server on port 8000. You can then go to 127.0.0.1:8000 in your browser, and if your index.html file is set up correctly you should see a map of the UK as shown in Fig 9. This map will be the base for plotting our points.

Fig 9. UK Map drawn by D3.js
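You may prefer to start the server from a script, for example to pick the port or folder explicitly. The standard library’s `http.server` module can do the same job in Python 3.7+. In the sketch below, the function name and default port are my own choices, and it binds to 127.0.0.1 only, so the page is reachable just from your own machine.

```python
import functools
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer

def make_server(directory: str = ".", port: int = 8000) -> ThreadingHTTPServer:
    """Create a server that serves files (e.g. index.html) from `directory`."""
    handler = functools.partial(SimpleHTTPRequestHandler, directory=directory)
    return ThreadingHTTPServer(("127.0.0.1", port), handler)
```

Call `make_server().serve_forever()` to start serving, and stop it with Ctrl+C.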

Now that the map renders, we need code to fetch our points from Rockset. To do this we’ll write a function that fetches the latest 10 rows from our Rockset collection by calling the Rockset API.

```javascript
function fetchPoints(){
  // build the SQL request body from the Postman example;
  // JSON.stringify escapes the quotes around the collection name for us,
  // and "desc" makes LIMIT 10 return the latest rows rather than the oldest
  var sql = JSON.stringify({
    sql: {
      query: 'select * from commons."tesla-location" order by _event_time desc LIMIT 10',
      parameters: []
    }
  })

  // ask D3 to parse JSON from a request
  // (this is the request API of D3 v3/v4; D3 v5+ replaced it with fetch-based d3.json)
  d3.json('https://api.rs2.usw2.rockset.com/v1/orgs/self/queries')
    // set the headers the same way we did in Postman
    .header('Authorization', 'ApiKey AAAABBBBCCCCDDDDEEEEFFFFGGGGG1234567')
    .header('Content-Type', 'application/json')
    // make this a POST request and pass the SQL statement
    .post(sql)
    .response(function(d){
      // now we have the response from Rockset, let's print and check it
      var response = JSON.parse(d.response)
      console.log(response)

      // parse out the list of results (rows from our Rockset collection) and print
      var newPoints = response.results
      console.log(newPoints)
    })
}
```

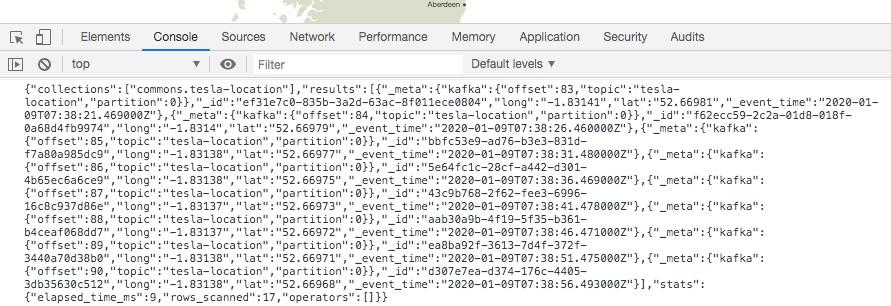

When this function is called and our HTTP server is running, the logs appear in the browser console. Load the webpage and then open your browser’s console. In Chrome this means opening the developer tools and clicking the Console tab.

You should see a printout of the whole response object from Rockset, similar to that in Fig 10.

Fig 10. Rockset response output

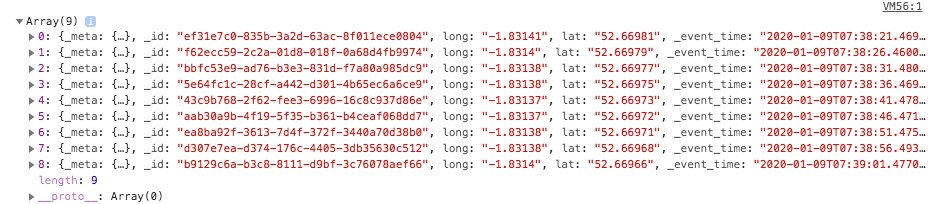

Below this should be our other log showing the result set, as shown in Fig 11. The console tells us that it’s an Array of objects. Each object represents a row of data from our collection, as seen in the Rockset console, and includes our Kafka metadata, the Rockset ID and timestamp, and our lat long pair.

Fig 11. Rockset results log

It’s all coming together nicely. We now just need to parse the lat long pairs from the results and draw them on the map. To do this in D3 we need to store each pair in its own array, with the longitude at index 0 and the latitude at index 1. These pair arrays then need to be contained within another array.

```
[ [long,lat], [long,lat], [long,lat], ... ]
```

D3 can then use this as the data and project these points onto the map. If you followed the example earlier in the article to draw the UK map, you should already have all the boilerplate code required to plot these points. We just need to write a function that calls it.
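The reshaping itself is a one-liner. The sketch below shows the same transformation in Python, assuming each row carries `lat` and `long` fields as in the results above. Longitude comes first because D3 projections take `[longitude, latitude]`.

```python
def to_d3_pairs(rows: list) -> list:
    """Convert result rows into [[long, lat], ...], the order D3 projections expect."""
    return [[row["long"], row["lat"]] for row in rows]
```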

I’ve initialised a JavaScript object to be used as a dictionary that stores my lat long pairs. The key for each coordinate pair will be the row ID Rockset gives each result. This means that when I poll Rockset for new coordinates and receive the same points again, they won’t be duplicated.

```
{
  _id: [long, lat],
  _id: [long, lat],
  …
}
```

With this in mind, I created a function called updateData that takes this object and draws its points on the map, each time asking D3 to draw only the points it hasn’t seen before.

```javascript
function updateData(coords){
  // grab only the values (our arrays of points) and pass them to D3;
  // svg and projection come from the UK map boilerplate
  var mapPoints = svg.selectAll("circle").data(Object.values(coords))

  // tell D3 to draw the new points and where to project them on the map
  mapPoints.enter().append("circle")
    .transition().duration(400).delay(200)
    .attr("cx", function (d) { return projection(d)[0]; })
    .attr("cy", function (d) { return projection(d)[1]; })
    .attr("r", "2px")
    .attr("fill", "red")
}
```
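Why does updateData draw only new points? Without a key function, D3’s `.data()` joins data to existing circles by index, so `enter()` holds only the values beyond the number of circles already drawn. That works here because the dictionary only grows, and `Object.values` keeps insertion order for non-numeric string keys. The snippet below is a small Python model of that index join for illustration. It is not D3 code, and the IDs and coordinates are made up.

```python
def entering(values: list, existing_count: int) -> list:
    """Mimic D3's index-based enter selection: data with no element at its index."""
    # elements 0..existing_count-1 already exist, so only later data "enters"
    return values[existing_count:]

drawn = 0
points = {}
for batch in (
    {"id1": [-0.12, 51.5], "id2": [-0.13, 51.51]},
    {"id2": [-0.13, 51.51], "id3": [-0.14, 51.52]},  # id2 is a repeat
):
    points.update(batch)  # a repeated key keeps its original position
    entered = entering(list(points.values()), drawn)
    drawn += len(entered)
    print(entered)  # first batch: both points; second batch: only id3
```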

All that’s left is to change how we handle the response from Rockset so that we continuously add new points to our dictionary. We can then keep passing this dictionary to our updateData function so that the new points get drawn on the map.

```javascript
// initialise dictionary
var points = {}

function fetchPoints(){
  // build the SQL request body from the Postman example
  var sql = JSON.stringify({
    sql: {
      query: 'select * from commons."tesla-location" order by _event_time desc LIMIT 10',
      parameters: []
    }
  })

  // ask D3 to parse JSON from a request
  d3.json('https://api.rs2.usw2.rockset.com/v1/orgs/self/queries')
    // set the headers the same way we did in Postman
    .header('Authorization', 'ApiKey AAAABBBBCCCCDDDDEEEEFFFFGGGGG1234567')
    .header('Content-Type', 'application/json')
    // make this a POST request and pass the SQL statement
    .post(sql)
    .response(function(d){
      // now we have the response from Rockset, parse it
      var response = JSON.parse(d.response)

      // parse out the list of results (rows from our Rockset collection)
      var newPoints = response.results
      for (var coords of newPoints){
        // add the [long, lat] pair to the dictionary using the row ID as key
        points[coords._id] = [coords.long, coords.lat]
      }
      console.log('updating points on map', points)

      // call our update function to draw the points on the map
      updateData(points)
    })
}
```
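Keying by `_id` is what stops repeated polls from duplicating points, because writing the same key twice just overwrites the entry. The sketch below shows the same merge step in Python, assuming rows with `_id`, `lat` and `long` fields. The function name is hypothetical.

```python
def merge_points(points: dict, rows: list) -> dict:
    """Add each row's [long, lat] pair to `points`, keyed by its Rockset _id."""
    for row in rows:
        # an _id seen on an earlier poll is simply overwritten, not duplicated
        points[row["_id"]] = [row["long"], row["lat"]]
    return points
```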

That’s the base of the application done. We simply need to call the fetchPoints function every 5 seconds, for example with setInterval(fetchPoints, 5000), to grab the latest 10 records from Rockset so they can be added to the map.
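The sketch below shows the same fixed-interval polling pattern in Python. `fetch` stands in for whatever function queries Rockset, and `max_polls` is a hypothetical parameter added so the loop can stop.

```python
import time
from typing import Callable

def poll(fetch: Callable[[], None], interval_s: float = 5.0, max_polls: int = 3) -> int:
    """Call `fetch` every `interval_s` seconds, `max_polls` times; return the call count."""
    calls = 0
    while calls < max_polls:
        fetch()
        calls += 1
        if calls < max_polls:
            time.sleep(interval_s)  # wait before the next poll
    return calls
```

Unlike setInterval, this loop waits for each fetch to finish before sleeping, so slow responses can’t pile up.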

The finished application should then behave as seen in Fig 12 (sped up so you can see the whole journey being plotted).

Fig 12. GIF of points being plotted in real time

Wrap up

In this post we’ve learnt how to request real-time location data from a Tesla Model 3 and add it to a Kafka topic. We then used Rockset to consume this data and expose it in real time via the built-in Rockset API. Finally, we called this API to plot the location data on a map in real time using D3.js.

This gives you an idea of the whole back end to front end journey required to visualise data in real time. The advantage of using Rockset here is that we could not only plot the location data on a map but also run analytics for a dashboard that could, for example, show trip length or average time spent not moving. You can see examples of more complex queries on connected car data from Kafka in this blog, and you can try Rockset with your own data here.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini, where he helped the UK government move into the world of Big Data. He’s currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he’s helping to shape their data warehousing and reporting capability from the ground up.