Chatbots have become invaluable tools for businesses, helping to improve efficiency and support employees. By sifting through troves of company data and documentation, LLMs can support workers by providing informed responses to a wide range of inquiries. For experienced employees, this can help minimize time spent on redundant, less productive tasks. For newer employees, this can not only shorten the time to a correct answer but also guide them through onboarding, assess their knowledge progression, and even suggest areas for further learning and development as they come up to speed.

For the foreseeable future, these capabilities appear poised to augment workers more than to replace them. And with looming challenges in worker availability in many developed economies, many organizations are rewiring their internal processes to take advantage of the support chatbots can provide.

Scaling LLM-Based Chatbots Can Be Expensive

As businesses prepare to deploy chatbots widely in production, many are encountering a significant challenge: cost. High-performing models are often expensive to query. Many modern chatbot applications, known as agentic systems, may also decompose a single user request into multiple, more targeted LLM queries in order to synthesize a response. This can make scaling across the enterprise prohibitively expensive for many applications.

But consider the breadth of questions being generated by a group of employees. How dissimilar is each question? When individual employees ask separate but similar questions, could the response to an earlier inquiry be reused to address some or all of the needs of a later one? If we could reuse some of the responses, how many calls to the LLM could be avoided, and what might the cost implications be?
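The cost question can be made concrete with a back-of-the-envelope model. This sketch is our own illustration, not code from the notebooks. `estimate_cost` is a hypothetical helper, and it rests on three assumptions. First, every cache miss still pays for the full fan-out of LLM calls an agentic chain makes per request. Second, a cache hit costs only the lookup (embedding the question and querying the index). Third, the volumes and prices in the usage lines are placeholders.

```python
def estimate_cost(
    requests: int,
    llm_calls_per_request: float,
    cost_per_llm_call: float,
    hit_ratio: float = 0.0,
    lookup_cost_per_request: float = 0.0,
) -> float:
    """Back-of-the-envelope spend for a batch of chatbot requests.

    Cache hits skip the LLM entirely; each miss pays for every LLM call the
    (possibly agentic) chain makes. Pass lookup_cost_per_request when a
    cache sits in front of the chain, since every request pays for the lookup.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    misses = requests * (1.0 - hit_ratio)
    llm_cost = misses * llm_calls_per_request * cost_per_llm_call
    lookup_cost = requests * lookup_cost_per_request
    return llm_cost + lookup_cost


# Hypothetical volumes and prices, for illustration only.
baseline = estimate_cost(10_000, llm_calls_per_request=3, cost_per_llm_call=0.01)
cached = estimate_cost(
    10_000,
    llm_calls_per_request=3,
    cost_per_llm_call=0.01,
    hit_ratio=0.5,
    lookup_cost_per_request=0.001,
)
print(f"without cache: ${baseline:,.2f}  with cache: ${cached:,.2f}")
```

With these placeholder numbers, a 50% hit ratio cuts the estimate from $300 to $160 even after paying for every lookup. Whether caching pays off in practice depends on how the lookup cost compares with the LLM calls it avoids.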

Reusing Responses Could Avoid Unnecessary Cost

Consider a chatbot designed to answer questions about a company's product features and capabilities. Using this tool, employees might ask questions to support their various engagements with customers.

In a standard approach, the chatbot would send every query to an underlying LLM, often generating nearly identical responses to similar questions. But suppose we programmed the chatbot application to first search a set of previously cached questions and responses for inquiries highly similar to the one the user is asking, and to reuse an existing response whenever one is found. Then we could avoid redundant calls to the LLM. This technique, known as semantic caching, is becoming widely adopted by enterprises because of the cost savings it offers.
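A minimal sketch of the lookup step follows. It assumes a similarity threshold of 0.8 and uses a toy word-count vector with cosine similarity as a stand-in for a real embedding model and vector index. Because of that stand-in, it only catches paraphrases that share words. `SemanticCache`, `embed`, and `cosine` are hypothetical names used for illustration.

```python
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Stores (question, answer) pairs and returns the answer of the most
    similar cached question, but only if its similarity clears the threshold."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def add(self, question: str, answer: str) -> None:
        self.entries.append((embed(question), answer))

    def lookup(self, question: str) -> Optional[str]:
        query = embed(question)
        best_score, best_answer = 0.0, None
        for vector, answer in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.8)
cache.add("How do I create a Vector Search index?", "<cached answer>")
print(cache.lookup("How can I create a vector search index?"))  # <cached answer>
print(cache.lookup("What is Unity Catalog?"))  # None
```

The paraphrase shares 7 of 8 words with the cached question (similarity 0.875), so it is served from the cache; the unrelated question falls through. The threshold is the main tuning knob. Lowering it raises the hit ratio but increases the chance of serving an answer written for a question that only looks similar.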

Constructing a Chatbot with Semantic Caching on Databricks

At Databricks, we operate a public-facing chatbot for answering questions about our products. This chatbot is exposed in our official documentation and often encounters similar user inquiries. In this blog, we evaluate Databricks' chatbot in a series of notebooks to understand how semantic caching can improve efficiency by reducing redundant computation. For demonstration purposes, we used a synthetically generated dataset that simulates the kinds of repetitive questions the chatbot might receive.

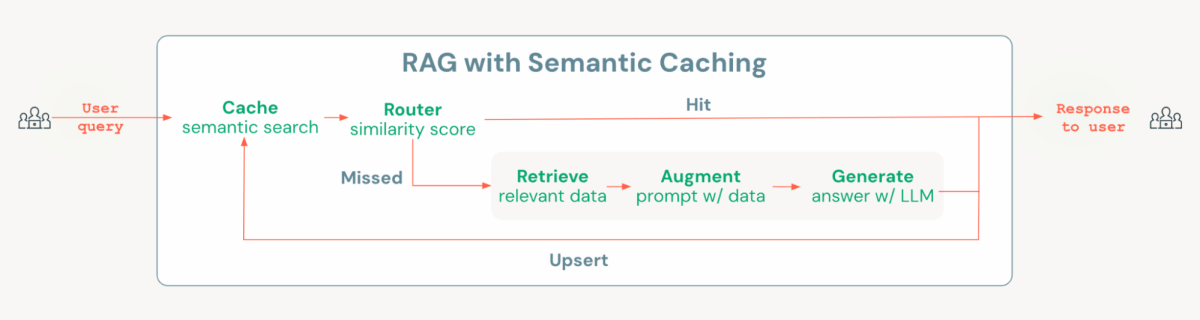

Databricks Mosaic AI provides all the components needed to build a cost-optimized chatbot solution with semantic caching. These include Vector Search for creating a semantic cache, MLflow and Unity Catalog for managing models and chains, and Model Serving for deployment and monitoring, as well as for tracking usage and payloads. To implement semantic caching, we add a layer at the beginning of the standard Retrieval-Augmented Generation (RAG) chain. This layer checks whether a sufficiently similar question already exists in the cache; if it does, the cached response is retrieved and served. If not, the system proceeds with executing the RAG chain. This simple yet powerful routing logic can be easily implemented using open source tools like LangChain or MLflow's pyfunc.
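The routing layer can be sketched in plain Python, independent of LangChain or pyfunc. This is a hypothetical illustration, not the notebooks' code. The cache lookup and write are passed in as callables: in the setup described above they would be backed by a Vector Search index, whereas here an exact-match dictionary on normalized text stands in. Writing each miss back to the cache is our assumption about how the cache gets populated. `answer_with_cache` and the helper functions are invented names.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class RoutedAnswer:
    text: str
    cache_hit: bool  # handy for tracking the cache hit ratio


def answer_with_cache(
    question: str,
    lookup: Callable[[str], Optional[str]],
    store: Callable[[str, str], None],
    rag_chain: Callable[[str], str],
) -> RoutedAnswer:
    """Cache-first routing in front of a RAG chain."""
    cached = lookup(question)
    if cached is not None:
        # Cache hit: serve the stored response with no retrieval or LLM call.
        return RoutedAnswer(text=cached, cache_hit=True)
    # Cache miss: run the full RAG chain as usual.
    response = rag_chain(question)
    # Assumption: write the new answer back so later similar questions can hit.
    store(question, response)
    return RoutedAnswer(text=response, cache_hit=False)


# --- Hypothetical stand-ins for the demo below ---
cache: dict[str, str] = {}
rag_calls: list[str] = []


def normalized_lookup(question: str) -> Optional[str]:
    # Exact match on normalized text; a semantic cache would use vector similarity.
    return cache.get(question.strip().lower())


def normalized_store(question: str, answer: str) -> None:
    cache[question.strip().lower()] = answer


def fake_rag_chain(question: str) -> str:
    rag_calls.append(question)  # count how often the expensive path runs
    return f"generated answer for: {question}"


first = answer_with_cache("What is Model Serving?", normalized_lookup, normalized_store, fake_rag_chain)
second = answer_with_cache("what is model serving? ", normalized_lookup, normalized_store, fake_rag_chain)
print(first.cache_hit, second.cache_hit, len(rag_calls))  # False True 1
```

Keeping the lookup, store, and chain as injected callables makes it easy to swap the toy cache for a vector index, or the fake chain for a real one, without touching the routing logic.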

In the notebooks, we demonstrate how to implement this solution on Databricks, highlighting how semantic caching can reduce both latency and cost compared with a standard RAG chain when both are tested on the same set of questions.

In addition to the efficiency improvement, we also show how semantic caching affects response quality using an LLM-as-a-judge approach in MLflow. While semantic caching improves efficiency, there is a slight drop in quality: evaluation results show that the standard RAG chain performed marginally better on metrics such as answer relevance. These small declines are expected when serving responses from the cache, because a cached answer was generated for a similar, but not identical, question. The key takeaway is to determine whether these quality differences are acceptable given the significant cost and latency reductions the caching solution provides. Ultimately, the decision should be based on how these trade-offs affect the overall business value of your use case.

Why Databricks?

Databricks provides an optimal platform for building cost-optimized chatbots with caching capabilities. With Databricks Mosaic AI, users have native access to all the necessary components (a vector database, agent development and evaluation frameworks, serving, and monitoring) on a unified, highly governed platform. This ensures that key assets, including data, vector indexes, models, agents, and endpoints, are centrally managed under robust governance.

Databricks Mosaic AI also offers an open architecture, allowing users to experiment with various models for embeddings and generation. Using the Databricks Mosaic AI Agent Framework and Evaluation tools, users can rapidly iterate on applications until they meet production-level standards. Once deployed, KPIs like hit ratio and latency can be monitored using MLflow traces, which are automatically logged in Inference Tables for easy tracking.
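The two KPIs can be computed from per-request records with a few lines of Python. This sketch assumes each request has already been exported (for example, from the Inference Table) into records with hypothetical `cache_hit` and `latency_ms` fields. The real table schema will differ, and the sample latencies below are made-up inputs, not measurements.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable


@dataclass
class RequestRecord:
    cache_hit: bool    # hypothetical field: was the answer served from cache?
    latency_ms: float  # hypothetical field: end-to-end latency of the request


def summarize(records: Iterable[RequestRecord]) -> dict[str, float]:
    """Hit ratio plus overall and per-path mean latency."""
    records = list(records)
    if not records:
        raise ValueError("no records to summarize")
    hit_latencies = [r.latency_ms for r in records if r.cache_hit]
    miss_latencies = [r.latency_ms for r in records if not r.cache_hit]
    return {
        "hit_ratio": len(hit_latencies) / len(records),
        "mean_latency_ms": mean(r.latency_ms for r in records),
        # NaN signals "no requests on this path" rather than a zero latency.
        "mean_hit_latency_ms": mean(hit_latencies) if hit_latencies else float("nan"),
        "mean_miss_latency_ms": mean(miss_latencies) if miss_latencies else float("nan"),
    }


# Made-up sample, for illustration only.
records = [
    RequestRecord(cache_hit=True, latency_ms=120.0),
    RequestRecord(cache_hit=False, latency_ms=2400.0),
    RequestRecord(cache_hit=True, latency_ms=80.0),
    RequestRecord(cache_hit=False, latency_ms=1600.0),
]
print(summarize(records))
```

Splitting latency by path shows how much of the overall improvement comes from the hit ratio versus the speed of each path.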

If you're looking to implement semantic caching for your AI system on Databricks, check out this project, which is designed to help you get started quickly and efficiently.