(agsandrew/Shutterstock)

We are in an era where large foundation models (large-scale, general-purpose neural networks pre-trained in an unsupervised manner on large amounts of diverse data) are transforming fields like computer vision, natural language processing, and, more recently, time series forecasting. These models are reshaping time series forecasting by enabling zero-shot forecasting: predictions on new, unseen data without retraining for each dataset. This breakthrough significantly cuts development time and costs, streamlining the process of creating and fine-tuning models for different tasks.

The power of machine learning (ML) methods in time series forecasting first gained prominence during the M4 and M5 forecasting competitions, where ML-based models significantly outperformed traditional statistical methods for the first time. In the M5 competition (2020), advanced models like LightGBM, DeepAR, and N-BEATS demonstrated the effectiveness of incorporating exogenous variables, factors like weather or holidays that influence the data but aren't part of the core time series. This approach led to unprecedented forecasting accuracy.

These competitions highlighted the importance of cross-learning from multiple related series and paved the way for developing foundation models specifically designed for time series analysis. They also spurred interest in machine learning models for time series forecasting, as ML models are increasingly overtaking statistical methods thanks to their ability to recognize complex temporal patterns and integrate exogenous variables. (Note: Statistical methods still often outperform ML models for short-term univariate time series forecasting.)

Timeline of Foundational Forecasting Models

In October 2023, TimeGPT-1, designed to generalize across diverse time series datasets without requiring specific training for each dataset, was published as one of the first foundation forecasting models. Unlike traditional forecasting methods, foundation forecasting models leverage vast amounts of pre-training data to perform zero-shot forecasting. This breakthrough allows businesses to avoid the lengthy and costly process of training and tuning models for specific tasks, offering a highly adaptable solution for industries dealing with dynamic and evolving data.

Multiple series forecasting with TimeGPT-1 (Credit: TimeGPT-1 paper)

Then, in February 2024, Lag-Llama was released. It specializes in long-range forecasting by focusing on lagged dependencies, which are temporal correlations between past values and future outcomes in a time series. Lagged dependencies are especially important in domains like finance and energy, where current trends are often heavily influenced by past events over extended periods. By efficiently capturing these dependencies, Lag-Llama improves forecasting accuracy in scenarios where longer time horizons are critical.
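To make the idea of lagged dependencies concrete, here is a minimal sketch of how lagged values can be arranged as model inputs. It is not Lag-Llama's implementation: the helper name `make_lag_features` and the lag set `[1, 2, 3]` are assumptions chosen purely for illustration.

```python
import numpy as np

def make_lag_features(series, lags):
    """Build (X, y) where each row of X holds the values observed
    `lag` steps before the matching target in y.

    Hypothetical helper for illustration only.
    """
    series = np.asarray(series, dtype=float)
    max_lag = max(lags)
    # Targets start once the longest lag is available.
    y = series[max_lag:]
    # Column j holds the value lags[j] steps before each target.
    X = np.column_stack(
        [series[max_lag - lag : len(series) - lag] for lag in lags]
    )
    return X, y

series = [10, 12, 14, 16, 18, 20, 22]
X, y = make_lag_features(series, lags=[1, 2, 3])
print(X)  # one row per target, one column per lag
print(y)  # the values to be predicted
```

Each row pairs a target with the observations that preceded it, which is the kind of past-to-future relationship a lag-based model learns from.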

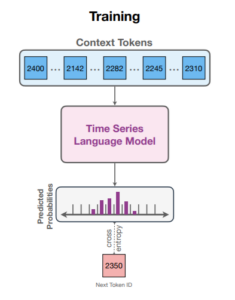

In March 2024, Chronos, a simple yet highly effective framework for pre-trained probabilistic time series models, was released. Chronos tokenizes time series values, converting continuous numerical data into discrete categories, through scaling and quantization. This allows it to apply transformer-based language models, typically used for text generation, to time series data. Transformers excel at identifying patterns in sequences, and by treating time series as a sequence of tokens, Chronos enables these models to predict future values effectively. Chronos is based on the T5 model family (ranging from 20M to 710M parameters) and was pre-trained on public and synthetic datasets. Benchmarking across 42 datasets showed that Chronos significantly outperforms other methods on familiar datasets and excels in zero-shot performance on new data. This versatility makes Chronos a powerful tool for forecasting in industries like retail, energy, and healthcare, where it generalizes well across diverse data sources.

In April 2024, Google released TimesFM, a decoder-only foundation model pre-trained on 100 billion real-world time points. Unlike full transformer models that use both an encoder and decoder, TimesFM focuses on generating predictions one step at a time based solely on past inputs, making it ideal for time series forecasting. Foundation models like TimesFM differ from traditional transformer models, which typically require task-specific training and are less flexible across different domains. TimesFM's ability to provide accurate out-of-the-box predictions in retail, finance, and natural sciences makes it highly valuable, as it eliminates the need for extensive retraining on new time series data.
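Generating predictions one step at a time from past inputs is an autoregressive loop, which the sketch below illustrates. It does not use TimesFM: `rollout` and the toy `last_difference` predictor are hypothetical stand-ins, and the real model is a pre-trained neural network whose decoding details may differ.

```python
from typing import Callable, List, Sequence

def rollout(
    history: Sequence[float],
    predict_next: Callable[[List[float]], float],
    horizon: int,
) -> List[float]:
    """Forecast `horizon` steps by feeding each prediction back as input."""
    context = list(history)
    forecasts = []
    for _ in range(horizon):
        nxt = predict_next(context)   # uses only past (and predicted) values
        forecasts.append(nxt)
        context.append(nxt)           # the prediction becomes part of the input
    return forecasts

def last_difference(context: List[float]) -> float:
    """Toy stand-in for a model: continue the most recent change."""
    return context[-1] + (context[-1] - context[-2])

forecast = rollout([1.0, 2.0, 3.0], last_difference, horizon=3)
print(forecast)
```

Swapping `last_difference` for a pre-trained model changes the quality of each step but not the shape of the loop.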

Chronos training (Image credit: Chronos: Learning the Language of Time Series)

In May 2024, Salesforce released Moirai, an open source foundation forecasting model designed to support probabilistic zero-shot forecasting and handle exogenous features. Moirai tackles challenges in time series forecasting such as cross-frequency learning, accommodating multiple variates, and managing varying distributional properties. Built on the Masked Encoder-based Universal Time Series Forecasting Transformer (MOIRAI) architecture, it leverages the Large-Scale Open Time Series Archive (LOTSA), which includes more than 27 billion observations across nine domains. With techniques like Any-Variate Attention and flexible parametric distributions, Moirai delivers scalable, zero-shot forecasting on diverse datasets without requiring task-specific retraining, marking a significant step toward universal time series forecasting.

IBM's Tiny Time Mixers (TTM), released in June 2024, offer a lightweight alternative to traditional time series foundation models. Instead of using the attention mechanism of transformers, TTM is an MLP-based model that relies on fully connected neural networks. Innovations like adaptive patching and resolution prefix tuning allow TTM to generalize effectively across diverse datasets while handling multivariate forecasting and exogenous variables. Its efficiency makes it ideal for low-latency environments with limited computational resources.
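As a rough illustration of what an attention-free, MLP-based approach looks like, the sketch below splits a series into fixed-length patches and passes them through two fully connected layers. It is not TTM's architecture: the fixed patch length, layer sizes, random untrained weights, and function names are all assumptions, and adaptive patching and resolution prefix tuning are not modeled.

```python
import numpy as np

def to_patches(series, patch_len):
    """Split a series into non-overlapping patches of length patch_len."""
    series = np.asarray(series, dtype=float)
    n = len(series) // patch_len
    # Drop the oldest leftover points so the series divides evenly.
    return series[len(series) - n * patch_len:].reshape(n, patch_len)

def mlp_forward(patches, w1, b1, w2, b2):
    """Two fully connected layers with a ReLU in between; no attention."""
    hidden = np.maximum(patches @ w1 + b1, 0.0)
    return hidden @ w2 + b2

rng = np.random.default_rng(0)
patches = to_patches(np.arange(10), patch_len=4)   # 2 patches of 4 points
w1, b1 = rng.normal(size=(4, 8)), np.zeros(8)       # untrained, illustrative
w2, b2 = rng.normal(size=(8, 4)), np.zeros(4)
out = mlp_forward(patches, w1, b1, w2, b2)
print(patches.shape, out.shape)
```

Because every operation is a small matrix multiplication, a model built this way can stay compact, which is consistent with the low-latency use case described above.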

AutoLab's MOMENT, also released in May 2024, is a family of open source foundation models designed for general-purpose time series analysis. MOMENT addresses three major challenges in pre-training on time series data: the lack of large cohesive public time series repositories, the diverse characteristics of time series data (such as variable sampling rates and resolutions), and the absence of established benchmarks for evaluating models. To address these, AutoLab introduced the Time Series Pile, a collection of public time series data across multiple domains, and developed a benchmark to evaluate MOMENT on tasks like short- and long-horizon forecasting, classification, anomaly detection, and imputation. With minimal fine-tuning, MOMENT delivers impressive zero-shot performance on these tasks, offering scalable, general-purpose time series models.

Together, these models represent a new frontier in time series forecasting. They offer industries across the board the ability to generate more accurate forecasts, identify intricate patterns, and improve decision-making, all while reducing the need for extensive, domain-specific training.

Future of Time Series and Language Models: Combining Text Data with Sensor Data

Looking ahead, combining time series models with language models is unlocking exciting innovations. Models like Chronos, Moirai, and TimesFM are pushing the boundaries of time series forecasting, but the next frontier is blending traditional sensor data with unstructured text for even better results.

Take the automotive industry, which combines sensor data with technician reports and service notes through NLP to get a complete view of potential maintenance issues. In healthcare, real-time patient monitoring is paired with doctors' notes to predict health outcomes for earlier diagnoses. Retail and rideshare companies use social media and event data alongside time series forecasts to better predict ride demand or sales spikes during major events.

By combining these two powerful data types, industries like IoT, healthcare, and logistics are gaining a deeper, more dynamic understanding of what's happening, and what's about to happen, leading to smarter decisions and more accurate predictions.

About the author: Anais Dotis-Georgiou is a Developer Advocate for InfluxData with a passion for making data beautiful with the use of Data Analytics, AI, and Machine Learning. She takes the data that she collects and does a mix of research, exploration, and engineering to translate it into something of function, value, and beauty. When she is not behind a screen, you can find her outside drawing, stretching, boarding, or chasing after a soccer ball.

