In this IoT example, we examine how to enable complex analytic queries on real-time Kafka streams from connected car sensors.

Understanding IoT and Connected Cars

With an increasing number of data-generating sensors being embedded in all manner of smart devices and objects, there’s a clear, growing need to harness and analyze IoT data. Embodying this trend is the burgeoning field of connected cars, where suitably equipped vehicles are able to communicate traffic and operating information, such as speed, location, vehicle diagnostics, and driving behavior, to cloud-based repositories.

Building Real-Time Analytics on Connected Car IoT Data

For our example, we have a fleet of connected vehicles that send the sensor data they generate to a Kafka cluster. We will show how this data in Kafka can be operationalized with the use of highly concurrent, low-latency queries on the real-time streams.

The ability to act on sensor readings in real time is useful for a large number of vehicular and traffic applications. Uses include detecting patterns and anomalies in driving behavior, understanding traffic conditions, routing vehicles optimally, and recognizing opportunities for preventive maintenance.

How the Kafka IoT Example Works

The real-time connected car data will be simulated using a data producer application. Multiple instances of this data producer emit generated sensor metric events into a locally running Kafka instance. This particular Kafka topic syncs continuously with a collection in Rockset via the Rockset Kafka Sink connector. Once the setup is done, we will extract useful insights from this data using SQL queries and visualize them in Redash.

There are multiple components involved:

- Apache Kafka

- Apache Zookeeper

- Data Producer – Connected vehicles generate IoT messages that are captured by a message broker and sent to the streaming application for processing. In our sample application, the IoT Data Producer is a simulator application for connected vehicles and uses Apache Kafka to store IoT data events.

- Rockset – We use a real-time database to store data from Kafka and act as an analytics backend to serve fast queries and live dashboards.

- Rockset Kafka Sink connector

- Redash – We use Redash to power the IoT live dashboard. Each of the queries we perform on the IoT data is visualized in our dashboard.

- Query Generator – This is a script for load testing Rockset with the queries of interest.

The code we used for the Data Producer and Query Generator can be found here.

Step 1. Using Kafka & Zookeeper for Service Discovery

Kafka uses Zookeeper for service discovery and other housekeeping, and hence Kafka ships with a Zookeeper setup and other helper scripts. After downloading and extracting the Kafka tar, you just need to run the following commands, each in its own terminal, to set up the Zookeeper and Kafka servers. This assumes that your current working directory is where you extracted the Kafka code.

Zookeeper:

./kafka_2.11-2.3.0/bin/zookeeper-server-start.sh ./kafka_2.11-2.3.0/config/zookeeper.properties

Kafka server:

./kafka_2.11-2.3.0/bin/kafka-server-start.sh ./kafka_2.11-2.3.0/config/server.properties

For our example, the default configuration should suffice. Make sure that ports 9092 and 2181 are unblocked.
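One quick way to confirm that both ports are reachable is a small TCP check. The sketch below is only an illustration and assumes the servers run on localhost with the default ports mentioned above; the helper name `port_open` is hypothetical.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # 2181 is the Zookeeper client port and 9092 the Kafka listener
    # in the default configuration used in this example.
    for name, port in (("Zookeeper", 2181), ("Kafka", 9092)):
        status = "reachable" if port_open("localhost", port) else "NOT reachable"
        print(f"{name} on port {port}: {status}")
```

A successful connection only shows that something is listening on the port; it does not verify that the service behind it is healthy.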

Step 2. Building the Data Producer

This data producer is a Maven project, which will emit sensor metric events to our local Kafka instance. We simulate data from 1,000 vehicles and hundreds of sensor records per second. The code can be found here. Maven is required to build and run this.

After cloning the code, take a look at iot-kafka-producer/src/main/resources/iot-kafka.properties. Here, you can provide your Kafka and Zookeeper ports (which should be left untouched when going with the defaults) and the topic name to which the event messages will be sent. Now, go into the rockset-connected-cars/iot-kafka-producer directory and run the following command:

mvn compile && mvn exec:java -Dexec.mainClass="com.iot.app.kafka.producer.IoTDataProducer"

You should see a large number of these events continuously dumped into the Kafka topic named in the configuration above.
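To give a feel for what such a simulated event might look like, here is a minimal Python sketch. It is not the Maven producer used in this example. The keys `vehicleId`, `latitude`, and `longitude` follow the fields used in the queries later in this post, while the `speed` and `timestamp` keys, the value ranges, and the function names are assumptions made for illustration. Sending the JSON strings to Kafka would need a Kafka client library and is left out.

```python
import json
import random
from datetime import datetime, timezone


def make_event(vehicle_id: str, rng: random.Random) -> dict:
    """Build one simulated sensor reading for a vehicle."""
    return {
        "vehicleId": vehicle_id,
        # Hypothetical bounding box; any region would do.
        "latitude": round(rng.uniform(33.0, 34.0), 6),
        "longitude": round(rng.uniform(-97.0, -96.0), 6),
        # Assumed field name and range for the speed reading.
        "speed": round(rng.uniform(0.0, 100.0), 1),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def generate_batch(num_vehicles: int, seed: int = 0) -> list:
    """Return one JSON-encoded event per simulated vehicle."""
    rng = random.Random(seed)
    return [
        json.dumps(make_event(f"vehicle-{i}", rng))
        for i in range(num_vehicles)
    ]


if __name__ == "__main__":
    for message in generate_batch(3):
        print(message)
```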

Step 3. Integrating Rockset and the Rockset Kafka Connector

We need the Rockset Kafka Sink connector to load these messages from our Kafka topic into a Rockset collection. To get the connector working, we first set up a Kafka integration from the Rockset console. Then, we create a collection using the new Kafka integration. Run the following command to connect your Kafka topic to the Rockset collection.

./kafka_2.11-2.3.0/bin/connect-standalone.sh ./connect-standalone.properties ./connect-rockset-sink.properties

Step 4. Querying the IoT Data

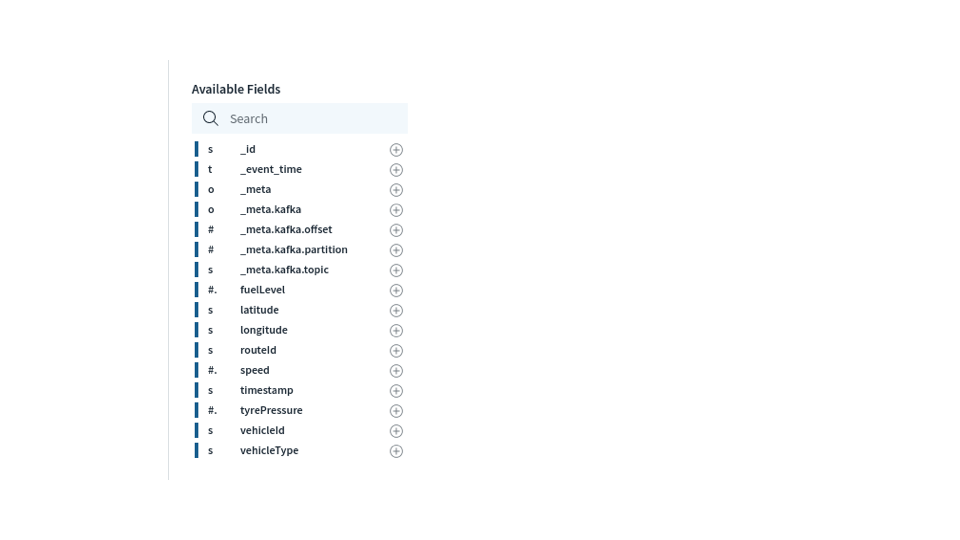

Available fields in the Rockset collection

The above shows all the fields available in the collection, which is used in the following queries. Note that we did not have to predefine a schema or perform any data preparation to get data in Kafka to be queryable in Rockset.

As our Rockset collection is receiving data, we can query it using SQL to get some useful insights.

Count of vehicles that produced a sensor metric in the last 5 seconds

This helps us know which vehicles are actively emitting data.

Query for vehicles that emitted data in the last 5 seconds

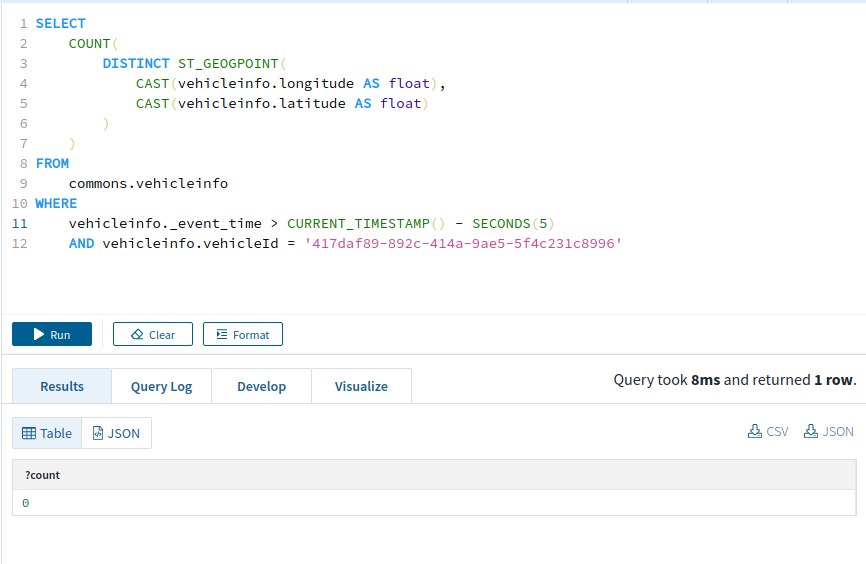
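The query itself is shown in the image above. Its logic, a time-window filter followed by a distinct count, can be sketched in plain Python over in-memory events. This is only an illustration of the idea, not how Rockset evaluates the query. The function name and the sample data are hypothetical, and `_event_time` is modeled as a Python `datetime`.

```python
from datetime import datetime, timedelta, timezone


def active_vehicle_count(events, now, window_seconds=5):
    """Count distinct vehicleIds with at least one event inside the window."""
    cutoff = now - timedelta(seconds=window_seconds)
    return len({e["vehicleId"] for e in events if e["_event_time"] > cutoff})


# Hypothetical sample data: two vehicles reported recently, one is stale.
now = datetime(2020, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
events = [
    {"vehicleId": "v1", "_event_time": now - timedelta(seconds=1)},
    {"vehicleId": "v1", "_event_time": now - timedelta(seconds=3)},
    {"vehicleId": "v2", "_event_time": now - timedelta(seconds=4)},
    {"vehicleId": "v3", "_event_time": now - timedelta(seconds=30)},
]
print(active_vehicle_count(events, now))  # prints 2
```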

Check if a vehicle is moving in the last 5 seconds

It can be useful to know if a vehicle is actually moving or is stuck in traffic.

Query for vehicles that moved in the last 5 seconds

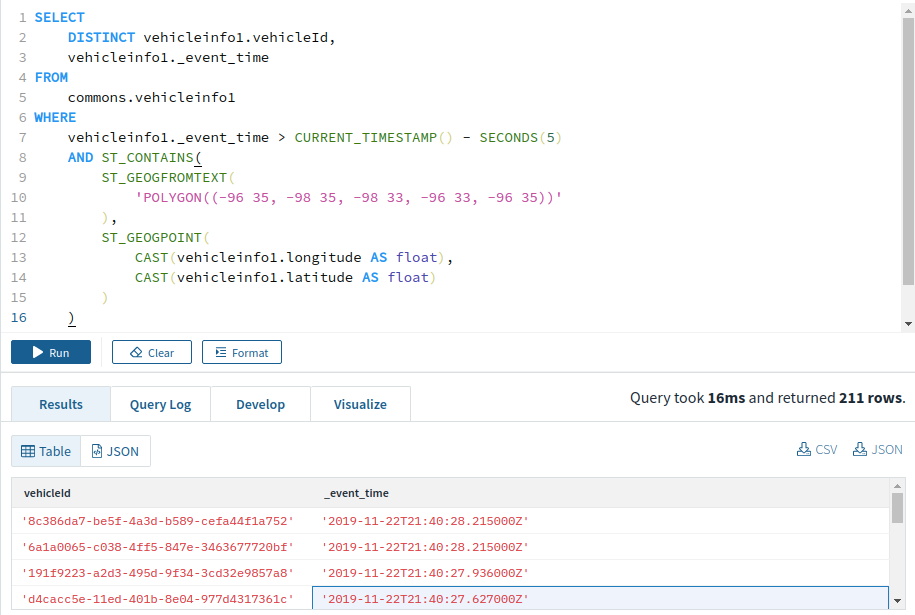
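One possible reading of "moving" is that a vehicle reported more than one distinct position inside the window. The sketch below works under that assumption; the exact condition used in the query above may differ, and the function name and sample data are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_moving(events, vehicle_id, now, window_seconds=5):
    """Return True if the vehicle reported more than one distinct position."""
    cutoff = now - timedelta(seconds=window_seconds)
    positions = {
        (e["latitude"], e["longitude"])
        for e in events
        if e["vehicleId"] == vehicle_id and e["_event_time"] > cutoff
    }
    return len(positions) > 1


# Hypothetical sample data: v1 changes position, v2 stays put.
now = datetime(2020, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
events = [
    {"vehicleId": "v1", "latitude": 33.00, "longitude": -96.80,
     "_event_time": now - timedelta(seconds=4)},
    {"vehicleId": "v1", "latitude": 33.01, "longitude": -96.80,
     "_event_time": now - timedelta(seconds=1)},
    {"vehicleId": "v2", "latitude": 33.50, "longitude": -96.50,
     "_event_time": now - timedelta(seconds=3)},
    {"vehicleId": "v2", "latitude": 33.50, "longitude": -96.50,
     "_event_time": now - timedelta(seconds=2)},
]
print(is_moving(events, "v1", now))  # prints True
print(is_moving(events, "v2", now))  # prints False
```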

Vehicles that are within a specified Point of Interest (POI) in the last 5 seconds

This is a common type of query, especially for a ride-hailing application, to find out which drivers are available in the vicinity of a passenger. Rockset provides CURRENT_TIMESTAMP and SECONDS functions to perform timestamp-related queries. It also has native support for location-based queries using the functions ST_GEOGPOINT, ST_GEOGFROMTEXT and ST_CONTAINS.

Query for vehicles that are within a certain area in the last 5 seconds

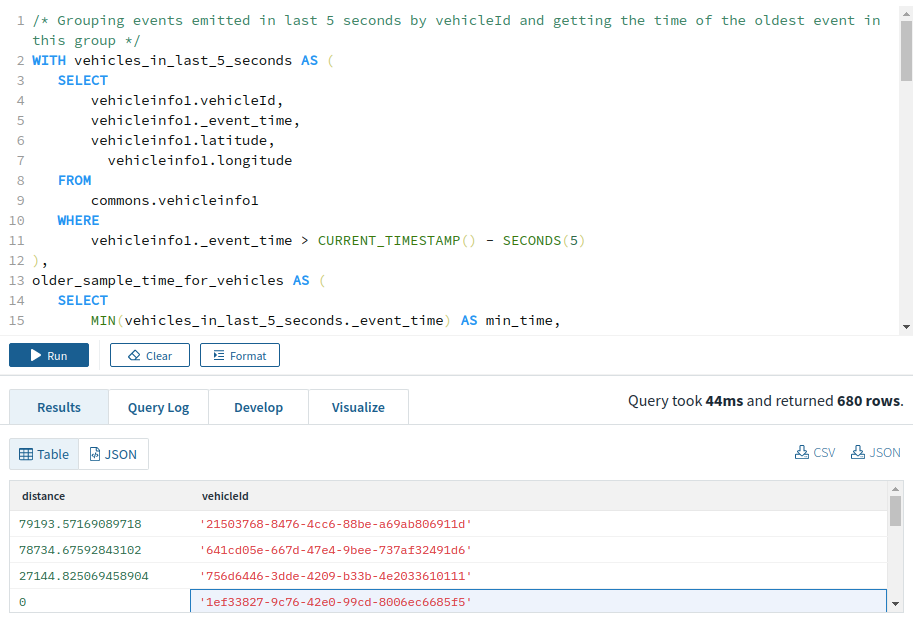
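To illustrate what a containment test does conceptually, here is a small Python sketch that combines the time window with a ray-casting point-in-polygon check. It treats longitude and latitude as flat x and y coordinates, which is a simplification compared to a geography function such as ST_CONTAINS. The function names, polygon, and sample data are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def point_in_polygon(lon, lat, polygon):
    """Ray-casting test; polygon is a list of (lon, lat) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def vehicles_in_poi(events, polygon, now, window_seconds=5):
    """Return sorted vehicleIds seen inside the polygon within the window."""
    cutoff = now - timedelta(seconds=window_seconds)
    return sorted({
        e["vehicleId"]
        for e in events
        if e["_event_time"] > cutoff
        and point_in_polygon(e["longitude"], e["latitude"], polygon)
    })


# Hypothetical POI: a one-degree square.
poi = [(-97.0, 33.0), (-97.0, 34.0), (-96.0, 34.0), (-96.0, 33.0)]
now = datetime(2020, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
events = [
    {"vehicleId": "v1", "longitude": -96.5, "latitude": 33.5,
     "_event_time": now - timedelta(seconds=2)},   # inside, recent
    {"vehicleId": "v2", "longitude": -95.0, "latitude": 33.5,
     "_event_time": now - timedelta(seconds=2)},   # outside
    {"vehicleId": "v3", "longitude": -96.5, "latitude": 33.5,
     "_event_time": now - timedelta(seconds=60)},  # inside, but stale
]
print(vehicles_in_poi(events, poi, now))  # prints ['v1']
```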

Top 5 vehicles that have moved the maximum distance in the last 5 seconds

This query shows us the most active vehicles.

/* Grouping events emitted in last 5 seconds by vehicleId and getting the time of the oldest event in this group */
WITH vehicles_in_last_5_seconds AS (
  SELECT
    vehicleinfo.vehicleId,
    vehicleinfo._event_time,
    vehicleinfo.latitude,
    vehicleinfo.longitude
  FROM
    commons.vehicleinfo
  WHERE
    vehicleinfo._event_time > CURRENT_TIMESTAMP() - SECONDS(5)
),
older_sample_time_for_vehicles AS (
  SELECT
    MIN(vehicles_in_last_5_seconds._event_time) AS min_time,
    vehicles_in_last_5_seconds.vehicleId
  FROM
    vehicles_in_last_5_seconds
  GROUP BY
    vehicles_in_last_5_seconds.vehicleId
),
/* Location of each vehicle at its oldest event in the window */
older_sample_location_for_vehicles AS (
  SELECT
    vehicles_in_last_5_seconds.latitude,
    vehicles_in_last_5_seconds.longitude,
    vehicles_in_last_5_seconds.vehicleId
  FROM
    older_sample_time_for_vehicles,
    vehicles_in_last_5_seconds
  WHERE
    vehicles_in_last_5_seconds._event_time = older_sample_time_for_vehicles.min_time
    AND vehicles_in_last_5_seconds.vehicleId = older_sample_time_for_vehicles.vehicleId
),
/* Time of the latest event in the window, per vehicle */
latest_sample_time_for_vehicles AS (
  SELECT
    MAX(vehicles_in_last_5_seconds._event_time) AS max_time,
    vehicles_in_last_5_seconds.vehicleId
  FROM
    vehicles_in_last_5_seconds
  GROUP BY
    vehicles_in_last_5_seconds.vehicleId
),
/* Location of each vehicle at its latest event in the window */
latest_sample_location_for_vehicles AS (
  SELECT
    vehicles_in_last_5_seconds.latitude,
    vehicles_in_last_5_seconds.longitude,
    vehicles_in_last_5_seconds.vehicleId
  FROM
    latest_sample_time_for_vehicles,
    vehicles_in_last_5_seconds
  WHERE
    vehicles_in_last_5_seconds._event_time = latest_sample_time_for_vehicles.max_time
    AND vehicles_in_last_5_seconds.vehicleId = latest_sample_time_for_vehicles.vehicleId
),
/* Distance between the oldest and latest location of each vehicle */
distance_for_vehicles AS (
  SELECT
    ST_DISTANCE(
      ST_GEOGPOINT(
        CAST(older_sample_location_for_vehicles.longitude AS float),
        CAST(older_sample_location_for_vehicles.latitude AS float)
      ),
      ST_GEOGPOINT(
        CAST(latest_sample_location_for_vehicles.longitude AS float),
        CAST(latest_sample_location_for_vehicles.latitude AS float)
      )
    ) AS distance,
    latest_sample_location_for_vehicles.vehicleId
  FROM
    latest_sample_location_for_vehicles,
    older_sample_location_for_vehicles
  WHERE
    latest_sample_location_for_vehicles.vehicleId = older_sample_location_for_vehicles.vehicleId
)
SELECT
  *
FROM
  distance_for_vehicles
ORDER BY
  distance_for_vehicles.distance DESC
LIMIT 5

Query for vehicles that have traveled the farthest in the last 5 seconds

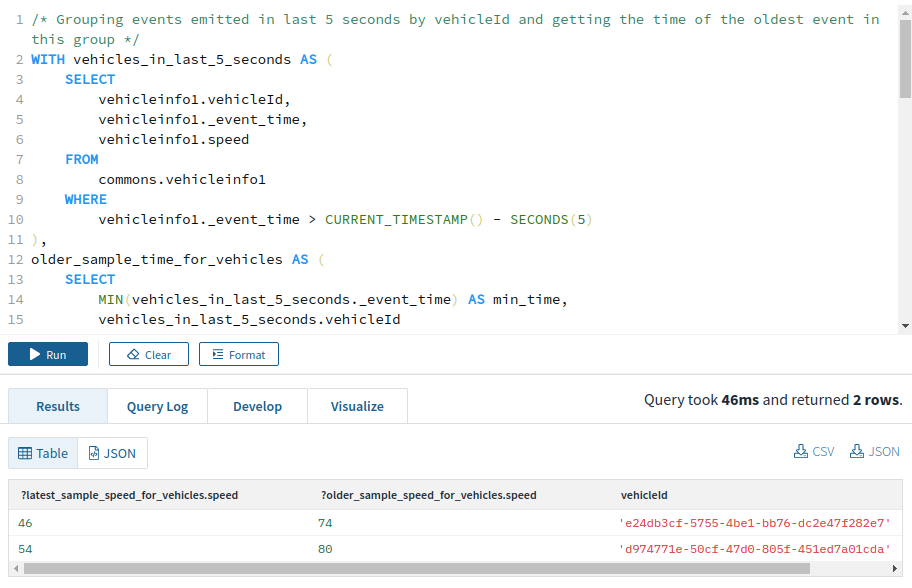
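The same approach can be sketched in plain Python: take the oldest and latest sample per vehicle, compute the distance between them, and keep the top N. This is only an illustration. It uses the haversine great-circle formula with an assumed mean Earth radius, so its results need not match ST_DISTANCE exactly, and the function names and sample data are hypothetical.

```python
import math
from datetime import datetime, timedelta, timezone

EARTH_RADIUS_M = 6_371_000  # assumed mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def top_movers(events, now, window_seconds=5, n=5):
    """Return up to n (vehicleId, meters) pairs, farthest first."""
    cutoff = now - timedelta(seconds=window_seconds)
    oldest, latest = {}, {}
    for e in events:
        if e["_event_time"] <= cutoff:
            continue
        vid = e["vehicleId"]
        if vid not in oldest or e["_event_time"] < oldest[vid]["_event_time"]:
            oldest[vid] = e
        if vid not in latest or e["_event_time"] > latest[vid]["_event_time"]:
            latest[vid] = e
    distances = [
        (haversine_m(oldest[v]["latitude"], oldest[v]["longitude"],
                     latest[v]["latitude"], latest[v]["longitude"]), v)
        for v in oldest
    ]
    distances.sort(reverse=True)
    return [(v, d) for d, v in distances[:n]]


# Hypothetical sample data: "a" moves farthest, "c" does not move.
now = datetime(2020, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
moves = {"a": 0.01, "b": 0.001, "c": 0.0}
events = []
for vid, delta in moves.items():
    events.append({"vehicleId": vid, "latitude": 33.0, "longitude": -96.0,
                   "_event_time": now - timedelta(seconds=4)})
    events.append({"vehicleId": vid, "latitude": 33.0 + delta, "longitude": -96.0,
                   "_event_time": now - timedelta(seconds=1)})

for vid, meters in top_movers(events, now, n=2):
    print(vid, round(meters))
```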

Number of sudden braking events

This query can be helpful in detecting slow-moving traffic, potential accidents, and more error-prone drivers.

/* Grouping occasions emitted in final 5 seconds by vehicleId and getting the time of the oldest occasion on this group */

WITH vehicles_in_last_5_seconds AS (

SELECT

vehicleinfo.vehicleId,

vehicleinfo._event_time,

vehicleinfo.velocity

from

commons.vehicleinfo

WHERE

vehicleinfo._event_time > CURRENT_TIMESTAMP() - SECONDS(5)

),

older_sample_time_for_vehicles as (

SELECT

MIN(vehicles_in_last_5_seconds._event_time) as min_time,

vehicles_in_last_5_seconds.vehicleId

FROM

vehicles_in_last_5_seconds

GROUP BY

vehicles_in_last_5_seconds.vehicleId

),

older_sample_speed_for_vehicles AS (

SELECT

vehicles_in_last_5_seconds.velocity,

vehicles_in_last_5_seconds.vehicleId

FROM

older_sample_time_for_vehicles,

vehicles_in_last_5_seconds

the place

vehicles_in_last_5_seconds._event_time = older_sample_time_for_vehicles.min_time

and vehicles_in_last_5_seconds.vehicleId = older_sample_time_for_vehicles.vehicleId

),

latest_sample_time_for_vehicles as (

SELECT

MAX(vehicles_in_last_5_seconds._event_time) as max_time,

vehicles_in_last_5_seconds.vehicleId

FROM

vehicles_in_last_5_seconds

GROUP BY

vehicles_in_last_5_seconds.vehicleId

),

latest_sample_speed_for_vehicles AS (

SELECT

vehicles_in_last_5_seconds.velocity,

vehicles_in_last_5_seconds.vehicleId

FROM

latest_sample_time_for_vehicles,

vehicles_in_last_5_seconds

the place

vehicles_in_last_5_seconds._event_time = latest_sample_time_for_vehicles.max_time

and vehicles_in_last_5_seconds.vehicleId = latest_sample_time_for_vehicles.vehicleId

)

SELECT

latest_sample_speed_for_vehicles.velocity,

older_sample_speed_for_vehicles.velocity,

older_sample_speed_for_vehicles.vehicleId

from

older_sample_speed_for_vehicles, latest_sample_speed_for_vehicles

WHERE

older_sample_speed_for_vehicles.vehicleId = latest_sample_speed_for_vehicles.vehicleId

AND latest_sample_speed_for_vehicles.velocity < older_sample_speed_for_vehicles.velocity - 20

Question for autos with sudden braking occasions

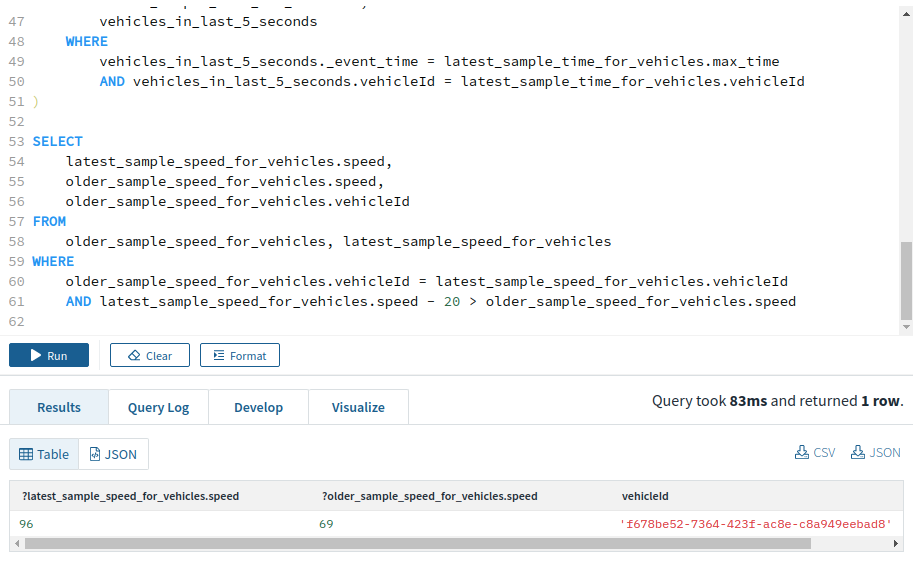

Variety of speedy acceleration occasions

That is much like the question above, simply with the velocity distinction situation modified from

latest_sample_speed_for_vehicles.velocity < older_sample_speed_for_vehicles.velocity - 20

to

latest_sample_speed_for_vehicles.velocity - 20 > older_sample_speed_for_vehicles.velocity

Query for vehicles with rapid acceleration events

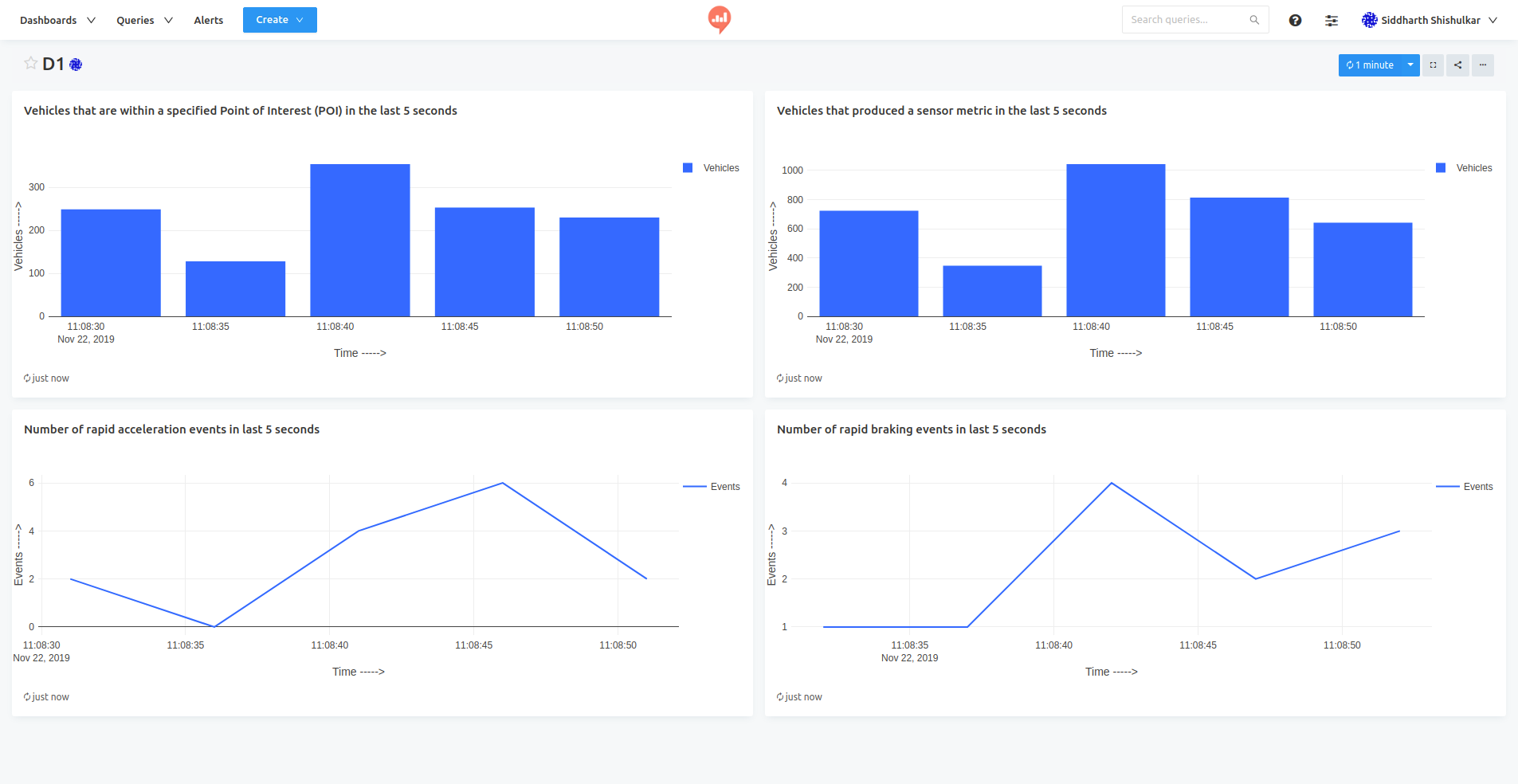
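Both the braking and the acceleration checks compare each vehicle's oldest and latest speed reading in the window against a threshold of 20. A compact Python sketch of that comparison follows. It is only an illustration over in-memory data; the `speed` key is an assumed field name, and the function name and sample data are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def speed_changes(events, now, window_seconds=5, threshold=20):
    """Classify vehicles by the change between oldest and latest speed."""
    cutoff = now - timedelta(seconds=window_seconds)
    oldest, latest = {}, {}
    for e in events:
        if e["_event_time"] <= cutoff:
            continue
        vid = e["vehicleId"]
        if vid not in oldest or e["_event_time"] < oldest[vid]["_event_time"]:
            oldest[vid] = e
        if vid not in latest or e["_event_time"] > latest[vid]["_event_time"]:
            latest[vid] = e
    braking, accelerating = [], []
    for vid in sorted(oldest):
        old_speed = oldest[vid]["speed"]
        new_speed = latest[vid]["speed"]
        if new_speed < old_speed - threshold:
            braking.append(vid)
        elif new_speed - threshold > old_speed:
            accelerating.append(vid)
    return {"braking": braking, "accelerating": accelerating}


# Hypothetical sample data: (oldest speed, latest speed) per vehicle.
now = datetime(2020, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
samples = {"v1": (80, 50), "v2": (30, 60), "v3": (50, 55)}
events = []
for vid, (old, new) in samples.items():
    events.append({"vehicleId": vid, "speed": old,
                   "_event_time": now - timedelta(seconds=4)})
    events.append({"vehicleId": vid, "speed": new,
                   "_event_time": now - timedelta(seconds=1)})

print(speed_changes(events, now))
# prints {'braking': ['v1'], 'accelerating': ['v2']}
```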

Step 5. Building the Live IoT Analytics Dashboard with Redash

Redash offers a hosted solution that integrates easily with Rockset. With a couple of clicks, you can create charts and dashboards, which auto-refresh as new data arrives. The following visualizations were created based on the above queries.

Redash dashboard showing the results from the queries above

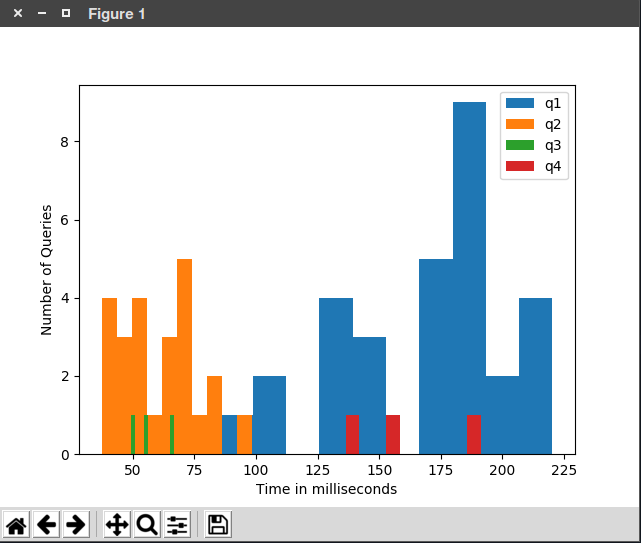

Supporting High Concurrency & Scaling With Rockset

Rockset is capable of handling a large number of complex queries on large datasets while maintaining query latencies in the hundreds of milliseconds. The Query Generator is a small Python script for load testing Rockset. It can be configured to run any number of QPS (queries per second) with different queries for a given duration. It will run the specified number of queries for a given amount of time and generate a histogram showing the time taken by each query, broken down by query type.

By default, it will run 4 different queries, q1, q2, q3, and q4, which account for 50%, 40%, 5%, and 5% of the query load respectively.

q1. Is a specified vehicle stationary or in motion in the last 5 seconds? (point lookup query within a window)

q2. List the vehicles that are within a specified Point of Interest (POI) in the last 5 seconds. (point lookup & short range scan within a window)

q3. List the top 5 vehicles that have moved the maximum distance in the last 5 seconds. (global aggregation and topN)

q4. Get the unique count of all vehicles that produced a sensor metric in the last 5 seconds. (global aggregation with count distinct)

Below is an example of a 10-second run.

Graph showing query latency distribution for a range of queries in a 10-second run
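The weighted query mix and the latency histogram described above can be sketched as follows. This is not the Query Generator script itself, only a minimal illustration: the function names are hypothetical, the 100 ms bucket size is an assumption, and actually sending the queries to Rockset and timing them is left out.

```python
import random
from collections import Counter

# Share of the load per query, as described above.
QUERY_MIX = {"q1": 0.50, "q2": 0.40, "q3": 0.05, "q4": 0.05}


def plan_queries(qps, duration_seconds, mix=QUERY_MIX, seed=None):
    """Pick which query to issue for every slot in the run, by weight."""
    rng = random.Random(seed)
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=qps * duration_seconds)


def latency_histogram(latencies_ms, bucket_ms=100):
    """Count latencies per bucket, keyed by the bucket's lower bound in ms."""
    return Counter(int(ms // bucket_ms) * bucket_ms for ms in latencies_ms)


if __name__ == "__main__":
    plan = plan_queries(qps=100, duration_seconds=10, seed=42)
    print(Counter(plan))  # roughly 50/40/5/5 percent of 1,000 queries
    print(dict(latency_histogram([10, 99, 100, 250])))
    # prints {0: 2, 100: 1, 200: 1}
```

Drawing the mix with `random.choices` keeps the proportions approximate rather than exact, which is usually fine for load testing.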

Real-Time Analytics Stack for IoT

IoT use cases typically involve large streams of sensor data, and Kafka is often used as a streaming platform in these situations. Once the IoT data is collected in Kafka, obtaining real-time insight from the data can prove valuable.

In the context of connected car data, real-time analytics can benefit logistics companies in fleet management and routing, ride-hailing services matching drivers and riders, and transportation agencies monitoring traffic conditions, just to name a few.

Over the course of this guide, we showed how such a connected car IoT scenario may work. Vehicles emit location and diagnostic data to a Kafka cluster, a reliable and scalable way to centralize this data. We then synced the data in Kafka to Rockset to enable fast, ad hoc queries and live dashboards on the incoming IoT data. Key considerations in this process were:

- Need for low data latency – to query the most recent data

- Ease of use – no schema needs to be configured

- High QPS – for live applications to query the IoT data

- Live dashboards – integration with tools for visual analytics

If you’re still curious about building out real-time analytics for IoT devices, read our other blog, Where’s My Tesla? Creating a Data API Using Kafka, Rockset and Postman to Find Out, to see how we expose real-time Kafka IoT data through the Rockset REST API.

Learn more about how a real-time analytics stack based on Kafka and Rockset works here.

Photo by Denys Nevozhai on Unsplash