Many development teams turn to DynamoDB for building event-driven architectures and user-friendly, performant applications at scale. As an operational database, DynamoDB is optimized for real-time transactions even when deployed across multiple geographic locations. However, it does not provide strong performance for search and analytics access patterns.

Search and Analytics on DynamoDB

While NoSQL databases like DynamoDB generally have excellent scaling characteristics, they support only a limited set of operations that are focused on online transaction processing. This makes it difficult to search, filter, aggregate and join data without leaning heavily on efficient indexing strategies.

DynamoDB stores data under the hood by partitioning it over a large number of nodes based on a user-specified partition key field present in each item. This partition key can optionally be combined with a sort key to form a primary key. The primary key acts as an index, making query operations inexpensive. A query operation can do equality comparisons (=) on the partition key and comparative operations (=, <, <=, >, >=, BETWEEN, begins_with) on the sort key, if one is specified.
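To make the Query-versus-Scan distinction concrete, here is a small in-memory sketch. `ToyTable`, its methods, and the `customer_id`/`order_date` attributes used in the usage are hypothetical. The class only mimics the shape of the access rules described above: equality on the partition key, a range on the sort key, and a full scan for everything else. It does not reproduce DynamoDB's API or storage engine.

```python
from bisect import bisect_left, bisect_right
from collections import defaultdict


class ToyTable:
    """Hypothetical in-memory table keyed by (partition key, sort key).

    Illustrates the shape of DynamoDB's Query rules only; it is not the
    DynamoDB API or storage engine.
    """

    def __init__(self, pk_name, sk_name):
        self.pk_name = pk_name
        self.sk_name = sk_name
        # partition key value -> list of (sort key value, item), kept sorted
        self.partitions = defaultdict(list)

    def put(self, item):
        rows = self.partitions[item[self.pk_name]]
        sk = item[self.sk_name]
        keys = [k for k, _ in rows]
        i = bisect_left(keys, sk)
        if i < len(rows) and rows[i][0] == sk:
            rows[i] = (sk, item)  # same primary key: overwrite the item
        else:
            rows.insert(i, (sk, item))

    def query_between(self, pk, lo, hi):
        # Equality on the partition key selects exactly one partition...
        rows = self.partitions.get(pk, [])
        keys = [k for k, _ in rows]
        # ...and a BETWEEN condition on the sort key is a binary search.
        start, end = bisect_left(keys, lo), bisect_right(keys, hi)
        return [item for _, item in rows[start:end]]

    def scan(self, predicate):
        # Any other condition (e.g. on a non-key attribute) has to
        # examine every item in every partition.
        return [item for rows in self.partitions.values()
                for _, item in rows if predicate(item)]
```

A query such as `query_between("c1", "2023-01-01", "2023-01-31")` touches only one customer's partition. Filtering on `total` instead falls through to `scan`, which reads everything.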

Performing analytical queries not covered by the above scheme requires a scan operation, which is typically executed by scanning over the entire DynamoDB table in parallel. These scans can be slow and expensive in terms of read throughput because they require a full read of the entire table. Scans also tend to slow down as the table grows, since there is more data to scan to produce results. If we want to support analytical queries without encountering prohibitive scan costs, we can leverage secondary indexes, which we'll discuss next.
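A back-of-the-envelope sketch shows why full scans get expensive as the table grows. It assumes the standard read-unit accounting: one read unit per 4 KB processed, with eventually consistent reads (the Scan default) costing half. As a simplification, it rounds the whole table's size once instead of per 1 MB page. Treat the result as an estimate, not a billing calculation.

```python
import math

READ_UNIT_BYTES = 4 * 1024  # one read unit covers up to 4 KB of item data


def full_scan_read_units(table_size_bytes, consistent_read=False):
    """Rough read-unit cost of scanning an entire table once.

    Simplification: the real service rounds per page of results; here the
    whole table size is rounded up to a 4 KB boundary in one step.
    Eventually consistent reads (the Scan default) cost half as much.
    """
    units = math.ceil(table_size_bytes / READ_UNIT_BYTES)
    return units if consistent_read else units / 2
```

The estimate is linear in table size. A table ten times larger costs roughly ten times as much to scan, and that cost recurs every time the analytical query runs.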

Indexing in DynamoDB

In DynamoDB, secondary indexes are often used to improve application performance by indexing fields that are queried frequently. Query operations on secondary indexes can also power specific features through analytic queries that have clearly defined requirements.

Secondary indexes consist of creating partition keys and optional sort keys over fields that we want to query. There are two types of secondary indexes:

- Local secondary indexes (LSIs): LSIs keep the base table's partition (hash) key but use a different sort (range) key, so they index items within a single partition.

- Global secondary indexes (GSIs): GSIs are indexes that apply to an entire table instead of a single partition, and they can use a different partition key from the base table.
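The difference is easiest to see by treating an index as the same items regrouped under another key. The sketch below models a GSI that way; `build_gsi` and the order attributes in the usage are hypothetical. It does reproduce one real GSI property: items that lack the index key attributes are left out of the index, which makes it a sparse index. An LSI would correspond to keeping the table's partition key and swapping only the sort key.

```python
from collections import defaultdict


def build_gsi(items, index_pk, index_sk=None):
    """Hypothetical model of a global secondary index.

    The same items are regrouped under a different partition key (and an
    optional sort key). Items missing the index key attribute(s) are
    skipped, so the resulting index is sparse.
    """
    index = defaultdict(list)
    for item in items:
        if index_pk not in item or (index_sk and index_sk not in item):
            continue  # not projected into the index
        index[item[index_pk]].append(item)
    if index_sk:
        # Within each index partition, keep items ordered by the index sort key.
        for rows in index.values():
            rows.sort(key=lambda it: it[index_sk])
    return dict(index)
```

With orders keyed by `order_id` in the base table, `build_gsi(orders, "status", "order_date")` supports a query such as "shipped orders by date" without a scan. The cost is that every order write now also maintains the index.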

However, as Nike discovered, overusing GSIs in DynamoDB can be expensive. Unless analytics in DynamoDB are limited to very simple point lookups or small range scans, they can lead to overuse of secondary indexes and high costs.

The costs for provisioned capacity when using indexes can add up quickly because all updates to the base table have to be made in the corresponding GSIs as well. In fact, AWS advises that the provisioned write capacity for a global secondary index should be equal to or greater than the write capacity of the base table, to avoid throttling writes to the base table and crippling the application. The cost of provisioned write capacity therefore grows linearly with the number of GSIs configured, making it cost prohibitive to use many GSIs to support many access patterns.
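The write-capacity arithmetic behind this advice is simple enough to sketch. The function assumes, as a simplification, that every base-table write also lands in every GSI. It also assumes each GSI is provisioned at the same write capacity as the base table (`gsi_ratio=1.0`). `provisioned_wcu` is a hypothetical helper, not an AWS API.

```python
def provisioned_wcu(base_table_wcu, num_gsis, gsi_ratio=1.0):
    """Total write capacity units to provision across a table and its GSIs.

    Assumes every base-table write is also written to every GSI, and that
    each GSI gets `gsi_ratio` times the base table's write capacity
    (1.0 matches the "equal to or greater than" guidance).
    """
    return base_table_wcu * (1 + gsi_ratio * num_gsis)


# Linear growth: each additional GSI adds another base table's worth of WCU.
for n in range(6):
    print(n, "GSIs ->", provisioned_wcu(1000, n), "WCU")
```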

DynamoDB is also not well designed to index data in nested structures, including arrays and objects. Before indexing the data, users will need to denormalize it, flattening the nested objects and arrays. This could greatly increase the number of writes and the associated costs.
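To see how flattening multiplies writes, here is a sketch that denormalizes one item into flat records. It produces one record per element of a nested array and turns nested objects into dotted attribute names. The `flatten` and `explode` helpers and the order shape in the usage are hypothetical; real denormalization schemes vary with the access patterns being served.

```python
def flatten(obj, prefix=""):
    """Flatten nested dicts into dotted attribute names."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, name + "."))
        else:
            flat[name] = value
    return flat


def explode(item, array_field):
    """Denormalize one item into one flat record per element of `array_field`.

    Each element's fields are prefixed with the array name so they can serve
    as index keys; the remaining attributes are copied into every record.
    """
    base = {k: v for k, v in item.items() if k != array_field}
    records = []
    for element in item.get(array_field, []):
        record = flatten(base)
        fields = element if isinstance(element, dict) else {"value": element}
        record.update(flatten(fields, array_field + "."))
        records.append(record)
    return records
```

An order with three line items becomes three records to write and keep in sync. Any GSI defined over those records then multiplies the writes again.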

For a more detailed examination of using DynamoDB secondary indexes for analytics, see our blog Secondary Indexes For Analytics On DynamoDB.

The bottom line is that for analytical use cases, you can gain significant performance and cost advantages by syncing the DynamoDB table with a different tool or service that acts as an external secondary index for running complex analytics efficiently.

DynamoDB + Elasticsearch

One approach to building a secondary index over our data is to use DynamoDB with Elasticsearch. Cloud-based Elasticsearch, such as Elastic Cloud or Amazon OpenSearch Service, can be used to provision and configure nodes according to the size of the indexes, replication, and other requirements. A managed cluster still requires some operations work to upgrade, secure, and keep performant, but less than running it entirely by yourself on EC2 instances.

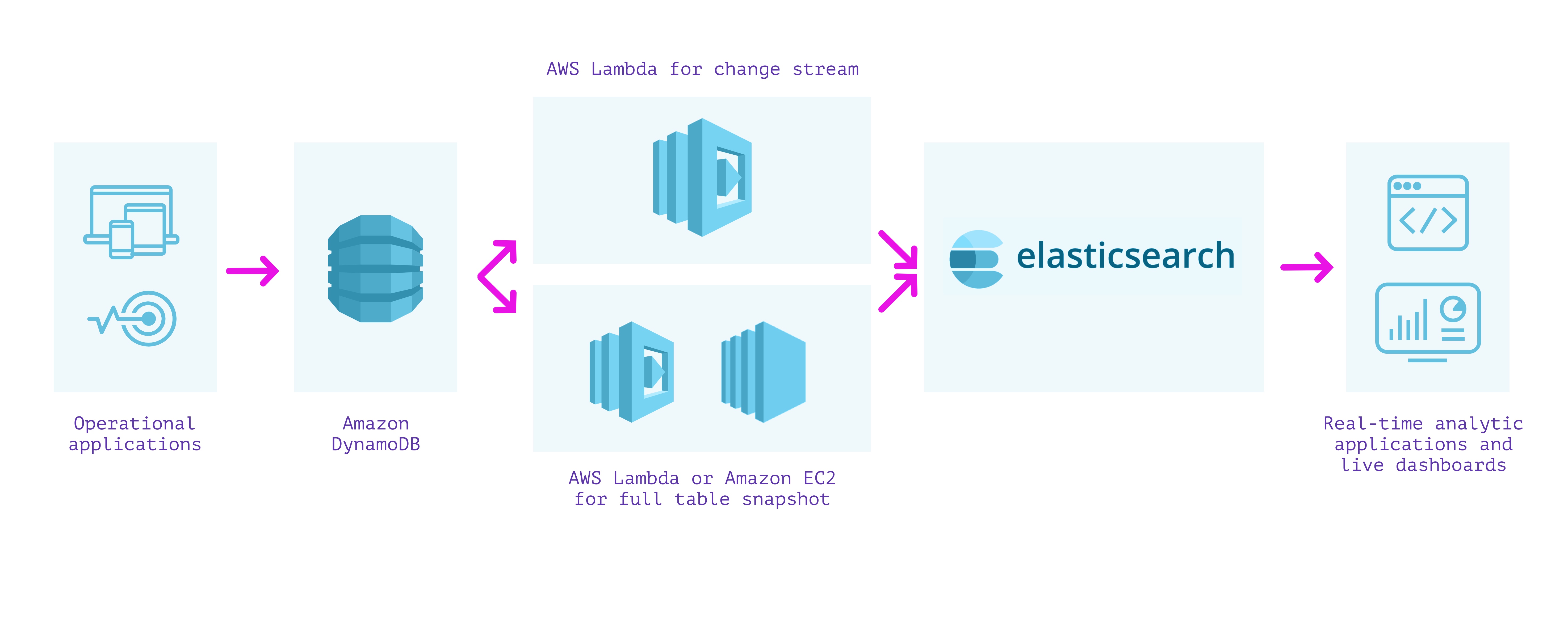

Because the approach using the Logstash Plugin for Amazon DynamoDB is unsupported and rather difficult to set up, we can instead stream writes from DynamoDB into Elasticsearch using DynamoDB Streams and an AWS Lambda function. This approach requires us to perform two separate steps:

- We first create a Lambda function that is invoked on the DynamoDB stream to post each update into Elasticsearch as it occurs in DynamoDB.

- We then create a Lambda function (or an EC2 instance running a script, if the job will take longer than the Lambda execution timeout) to post all the existing contents of DynamoDB into Elasticsearch.
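For the first step, the core of such a Lambda function is translating each stream record into an Elasticsearch bulk action. The sketch below rests on several assumptions:

- The stream's view type includes new images (`NEW_IMAGE` or `NEW_AND_OLD_IMAGES`).
- The index name `orders` is hypothetical.
- Each document `_id` is derived from the item's primary key.

It leaves out the signed HTTP request to the cluster, retries, and error handling. The small `from_dynamodb` converter is hand-written to keep the example dependency-free; boto3 also ships a `TypeDeserializer` for this job.

```python
import json
from decimal import Decimal

INDEX_NAME = "orders"  # hypothetical Elasticsearch index name


def from_dynamodb(value):
    """Convert one DynamoDB-JSON attribute value (e.g. {"S": "abc"}) to plain Python."""
    [(tag, inner)] = value.items()
    if tag in ("S", "B", "BOOL"):  # B arrives base64-encoded; kept as text
        return inner
    if tag == "N":
        num = Decimal(inner)
        return int(num) if num == num.to_integral_value() else float(num)
    if tag == "NULL":
        return None
    if tag == "M":
        return {k: from_dynamodb(v) for k, v in inner.items()}
    if tag == "L":
        return [from_dynamodb(v) for v in inner]
    if tag in ("SS", "BS"):
        return sorted(inner)  # sets are unordered; sort for stable output
    if tag == "NS":
        return sorted(from_dynamodb({"N": n}) for n in inner)
    raise ValueError(f"unsupported DynamoDB type: {tag}")


def document_id(keys):
    """Build a stable document _id from the item's primary key attributes."""
    return "|".join(str(from_dynamodb(keys[name])) for name in sorted(keys))


def handler(event, context=None):
    """Translate a DynamoDB Streams batch into an Elasticsearch _bulk body (NDJSON).

    Sending the body to the cluster is deliberately left out of this sketch.
    """
    lines = []
    for record in event["Records"]:
        change = record["dynamodb"]
        doc_id = document_id(change["Keys"])
        if record["eventName"] == "REMOVE":
            lines.append(json.dumps({"delete": {"_index": INDEX_NAME, "_id": doc_id}}))
        else:  # INSERT or MODIFY: (re)index the full new image
            doc = {k: from_dynamodb(v) for k, v in change["NewImage"].items()}
            lines.append(json.dumps({"index": {"_index": INDEX_NAME, "_id": doc_id}}))
            lines.append(json.dumps(doc))
    # The _bulk API expects newline-delimited JSON ending in a newline.
    return "\n".join(lines) + "\n" if lines else ""
```

Indexing the full `NewImage` on every MODIFY means each DynamoDB update becomes a whole-document reindex on the Elasticsearch side. That is one of the costs discussed below.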

We must write and wire up both of these Lambda functions with the correct permissions to ensure that we don't miss any writes into our tables. Once they are set up along with the required monitoring, documents from DynamoDB arrive in Elasticsearch and we can use Elasticsearch to run analytical queries on the data.
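For the second step, the backfill is essentially a paginated Scan. In this sketch, `scan_page` and `index_batch` are hypothetical callables injected by the caller:

- `scan_page(start_key)` stands in for a Scan request made with `ExclusiveStartKey`. It returns a dict with `Items` and, while more data remains, `LastEvaluatedKey`, the same fields a real Scan response uses.
- `index_batch` stands in for a bulk write into Elasticsearch.

Returning the resume key when `page_limit` is reached is one way to split the copy across several Lambda invocations instead of moving it to EC2.

```python
def backfill(scan_page, index_batch, start_key=None, page_limit=None):
    """Copy existing items by paging through a Scan.

    `scan_page` and `index_batch` are hypothetical stand-ins for a DynamoDB
    Scan call and an Elasticsearch bulk write. Returns None when the table
    has been fully copied, otherwise the key to resume from next time.
    """
    pages = 0
    while True:
        page = scan_page(start_key)
        index_batch(page["Items"])
        pages += 1
        start_key = page.get("LastEvaluatedKey")
        if start_key is None:
            return None  # no more pages: the backfill is complete
        if page_limit is not None and pages >= page_limit:
            return start_key  # checkpoint, e.g. before the Lambda timeout
```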

The advantage of this approach is that Elasticsearch supports full-text indexing and several types of analytical queries. Elasticsearch has clients in various languages, as well as tools like Kibana for visualization that can help quickly build dashboards. When a cluster is configured correctly, query latencies can be tuned for fast analytical queries over data flowing into Elasticsearch.

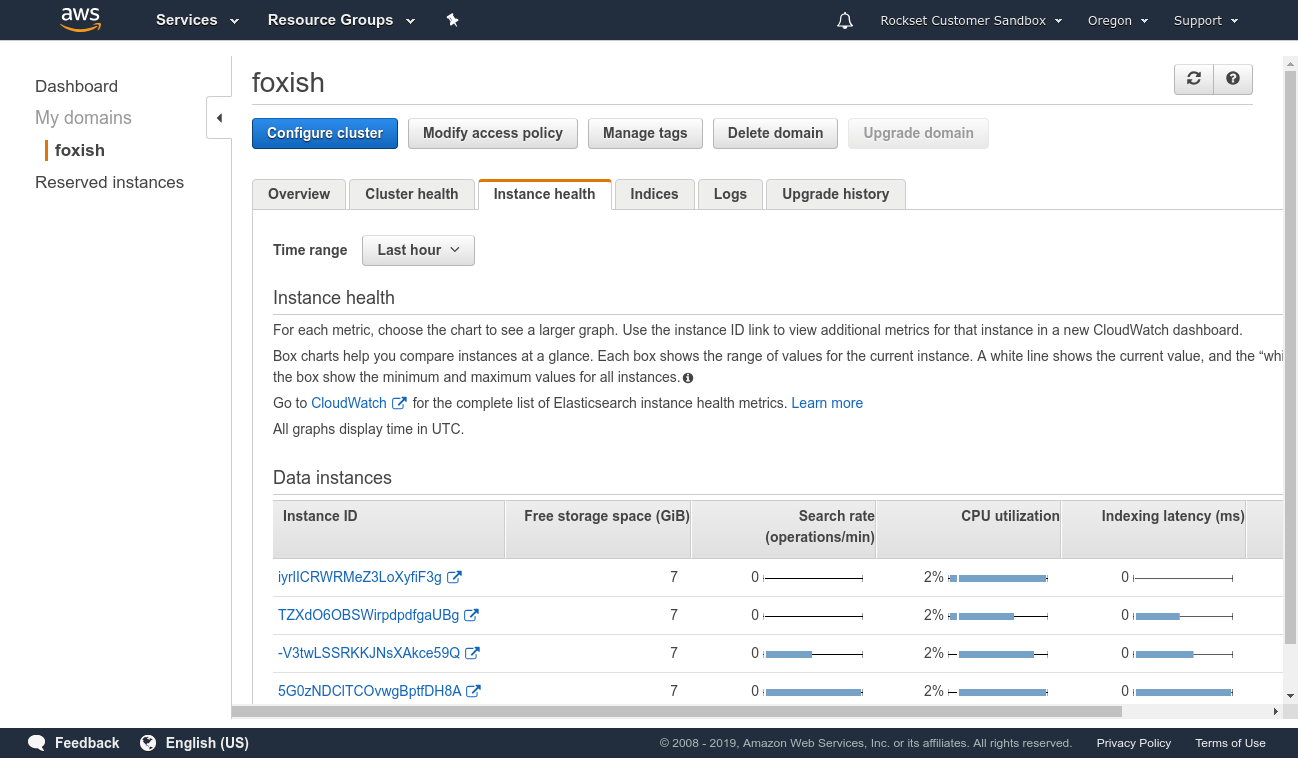

Disadvantages include the potentially high setup and maintenance cost of the solution. Even managed Elasticsearch requires dealing with replication, resharding, index growth, and performance tuning of the underlying instances.

Elasticsearch has a tightly coupled architecture that does not separate compute and storage. This means resources are often overprovisioned because they cannot be scaled independently. In addition, multiple workloads, such as reads and writes, contend for the same compute resources.

Elasticsearch also cannot handle updates efficiently. Updating any field triggers a reindexing of the entire document. Elasticsearch documents are immutable, so any update requires a new document to be indexed and the old version to be marked deleted. This results in additional compute and I/O spent reindexing even the unchanged fields and writing entire documents on every update.
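A toy model makes this update cost visible. `AppendOnlyIndex` is hypothetical and deliberately ignores Lucene's segment and merge machinery. It captures only the rule stated above: a stored document is never edited in place, so changing one field writes a complete new version and marks the old one deleted.

```python
class AppendOnlyIndex:
    """Hypothetical index whose stored documents are immutable.

    Updates append a full new version and mark the previous one deleted;
    `fields_written` counts how many field values had to be (re)indexed.
    """

    def __init__(self):
        self.versions = []    # every document version ever written
        self.live = {}        # doc id -> position of its current version
        self.deleted = set()  # positions of superseded versions
        self.fields_written = 0

    def index(self, doc_id, doc):
        if doc_id in self.live:
            self.deleted.add(self.live[doc_id])  # old version marked deleted
        self.versions.append(dict(doc))
        self.live[doc_id] = len(self.versions) - 1
        self.fields_written += len(doc)

    def update(self, doc_id, changes):
        # Even a one-field change rewrites (and reindexes) every field.
        current = self.versions[self.live[doc_id]]
        self.index(doc_id, {**current, **changes})

    def get(self, doc_id):
        return self.versions[self.live[doc_id]]
```

With a 50-field document, changing a single field costs another 50 field writes and leaves a dead version behind to be merged away later.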

Because Lambda functions fire whenever they see an update in the DynamoDB stream, they can have latency spikes due to cold starts. The setup requires metrics and monitoring to ensure that it is correctly processing events from the DynamoDB stream and is able to write into Elasticsearch.

Functionally, in terms of analytical queries, Elasticsearch lacks support for joins, which are useful for complex analytical queries that involve more than one index. Elasticsearch users often have to denormalize data, perform application-side joins, or use nested objects or parent-child relationships to get around this limitation.
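An application-side join usually means running one search per index and combining the hits in code. Here is a minimal hash-join sketch. The `orders` and `customers` documents mentioned in the note are hypothetical, and the function assumes both result sets fit in memory.

```python
from collections import defaultdict


def hash_join(left, right, left_key, right_key):
    """Inner-join two lists of documents in application code.

    Typically `left` and `right` are hits from two separate searches against
    two different indexes. On field-name collisions, `left` values win.
    """
    # Build phase: bucket the right-hand documents by their join key.
    buckets = defaultdict(list)
    for doc in right:
        buckets[doc[right_key]].append(doc)
    # Probe phase: emit one merged row per matching (left, right) pair.
    joined = []
    for doc in left:
        for match in buckets.get(doc[left_key], []):
            joined.append({**match, **doc})
    return joined
```

For example, `hash_join(orders, customers, "customer_id", "customer_id")` attaches customer details to each order. Both result sets have to be fetched, and paginated, in full before joining, which is part of why this workaround gets costly as the data grows.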

Advantages

- Full-text search support

- Support for several types of analytical queries

- Can work over the latest data in DynamoDB

Disadvantages

- Requires management and monitoring of infrastructure for ingesting, indexing, replication, and sharding

- Tightly coupled architecture results in resource overprovisioning and compute contention

- Inefficient updates

- Requires a separate system to ensure data integrity and consistency between DynamoDB and Elasticsearch

- No support for joins between different indexes

This approach can work well when implementing full-text search over the data in DynamoDB and dashboards using Kibana. However, the operations required to tune and maintain an Elasticsearch cluster in production, its inefficient use of resources, and its lack of join capabilities can be challenging.

DynamoDB + Rockset

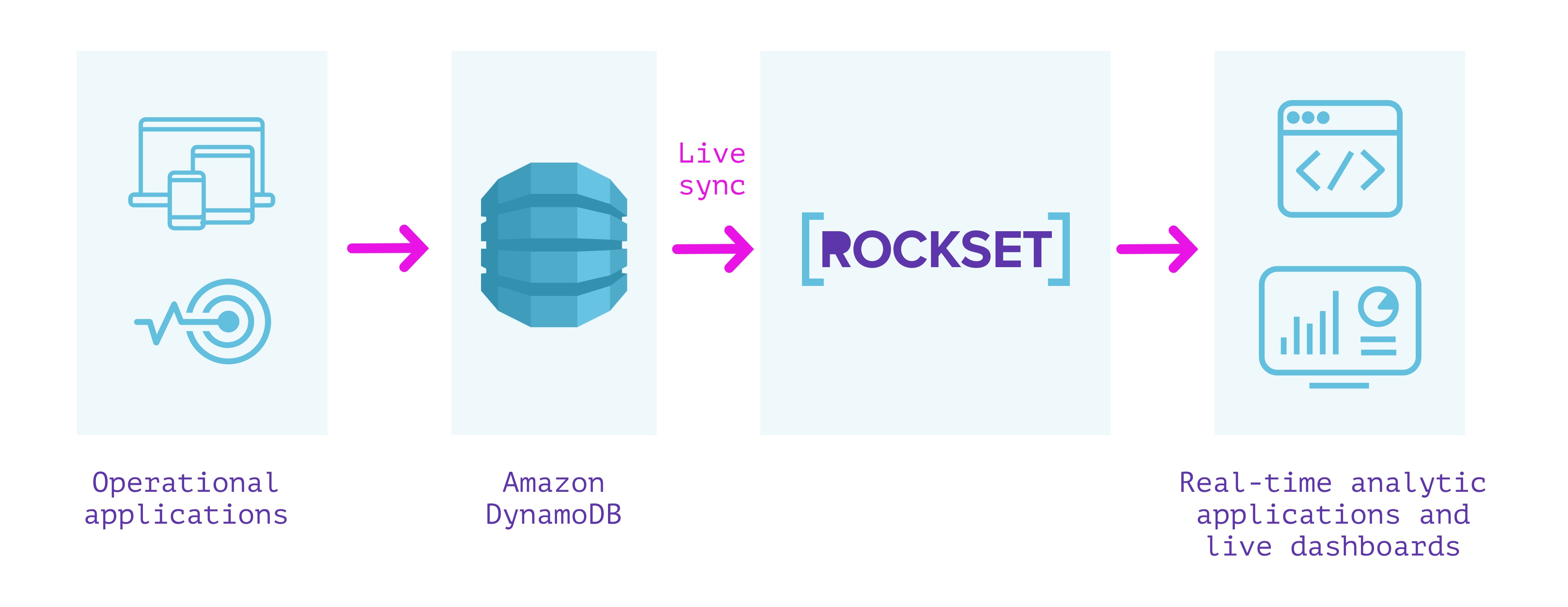

Rockset is a fully managed search and analytics database built primarily to support real-time applications with high QPS requirements. It is often used as an external secondary index for data from OLTP databases.

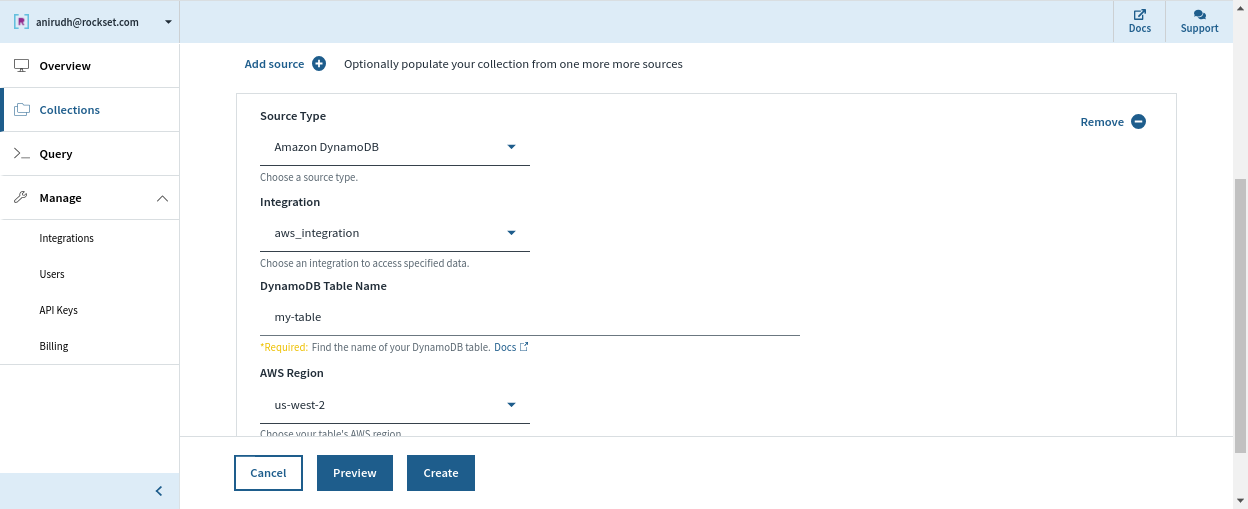

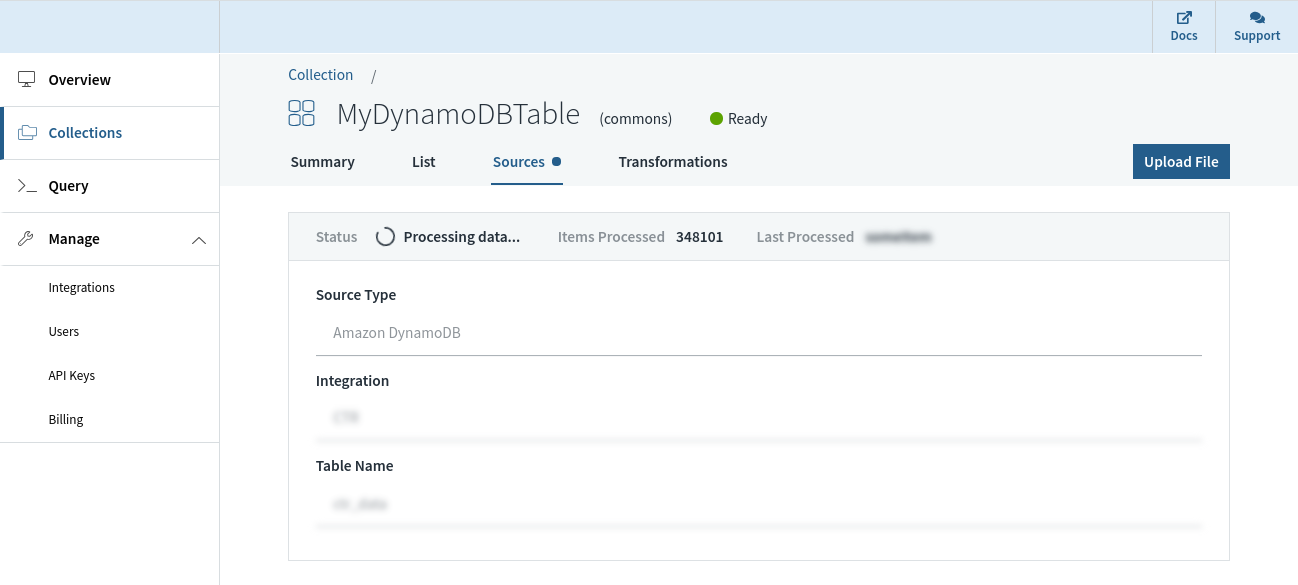

Rockset has a built-in connector with DynamoDB that can be used to keep data in sync between DynamoDB and Rockset. We can specify the DynamoDB table we want to sync contents from and a Rockset collection that indexes the table. Rockset indexes the contents of the DynamoDB table in a full snapshot and then syncs new changes as they occur. The contents of the Rockset collection are always in sync with the DynamoDB source, no more than a few seconds apart in steady state.
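Snapshot-then-stream is a common way to bootstrap any replica from a change stream. The sketch below illustrates that generic pattern only; it is not Rockset's implementation. It assumes a hypothetical `snapshot` that is consistent as of stream position `snapshot_seq`. It also assumes an ordered list of `(seq, key, new_item)` change tuples, where `None` means the item was deleted. Integer sequence numbers are a simplification.

```python
def sync(snapshot, snapshot_seq, changes):
    """Build a replica from a full snapshot, then apply streamed changes.

    Generic snapshot-then-stream pattern (hypothetical inputs):
    `snapshot` maps primary key -> item as of position `snapshot_seq`;
    `changes` is an ordered list of (seq, key, new_item_or_None).
    Changes at or before the last applied position are skipped, which
    also makes redelivered duplicates harmless.
    """
    replica = dict(snapshot)
    applied_seq = snapshot_seq
    for seq, key, new_item in changes:
        if seq <= applied_seq:
            continue  # already reflected in the snapshot or applied earlier
        if new_item is None:
            replica.pop(key, None)  # delete event
        else:
            replica[key] = new_item  # insert or modify event
        applied_seq = seq
    return replica, applied_seq
```

Tracking `applied_seq` is what lets a sync process report how far behind the source it is and resume cleanly after a restart.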

Rockset manages data integrity and consistency between the DynamoDB table and the Rockset collection automatically by monitoring the state of the stream and providing visibility into the streaming changes from DynamoDB.

Without requiring a schema definition, a Rockset collection can automatically adapt when fields are added or removed, or when the structure or type of the data itself changes in DynamoDB. This is made possible by strong dynamic typing and smart schemas that obviate the need for any additional ETL.

The Rockset collection we sourced from DynamoDB supports SQL for querying and can easily be used by developers without having to learn a domain-specific language. It can also serve queries to applications over a REST API or through client libraries in several programming languages. The superset of ANSI SQL that Rockset supports can work natively on deeply nested JSON arrays and objects, and it leverages indexes that are automatically built over all fields to get millisecond latencies on even complex analytical queries.

Rockset has pioneered compute-compute separation, which allows workloads to be isolated in separate compute units while sharing the same underlying real-time data. This gives users greater resource efficiency when supporting simultaneous ingestion and queries, or multiple applications on the same data set.

In addition, Rockset takes care of security, data encryption, and role-based access control for managing access to the data. Rockset users can avoid the need for ETL by leveraging ingest transformations, set up in Rockset, that modify the data as it arrives into a collection. Users can also optionally manage the lifecycle of the data by setting up retention policies that automatically purge older data. Both data ingestion and query serving are automatically managed, which lets us focus on building and deploying live dashboards and applications while removing the need for infrastructure management and operations.

Especially relevant when syncing with DynamoDB, Rockset supports in-place field-level updates, which avoid costly reindexing. Compare Rockset and Elasticsearch in terms of ingestion, querying and efficiency to choose the right tool for the job.

Summary

- Built to deliver high QPS and serve real-time applications

- Completely serverless. No operations or provisioning of infrastructure or database required

- Compute-compute separation for predictable performance and efficient resource utilization

- Live sync between DynamoDB and the Rockset collection, so that they are never more than a few seconds apart

- Monitoring to ensure consistency between DynamoDB and Rockset

- Automatic indexes built over the data, enabling low-latency queries

- In-place updates that avoid expensive reindexing and lower data latency

- Joins with data from other sources such as Amazon Kinesis, Apache Kafka, Amazon S3, etc.

We can use Rockset to implement real-time analytics over the data in DynamoDB without any operational, scaling, or maintenance concerns. This can significantly speed up the development of real-time applications. If you would like to build your application on DynamoDB data using Rockset, you can get started for free here.