Though the deepfaking of private individuals has become a growing public concern and is increasingly being outlawed in various regions, actually proving that a user-created model, such as one enabling revenge porn, was specifically trained on a particular person’s images remains extremely challenging.

To put the problem in context: a key element of a deepfake attack is falsely claiming that an image or video depicts a specific person. Simply stating that someone in a video is identity #A, rather than just a lookalike, is enough to create harm, and no AI is necessary in this scenario.

However, if an attacker generates AI images or videos using models trained on a real person’s data, social media and search engine face recognition systems will automatically link the faked content to the victim, without requiring names in posts or metadata. The AI-generated visuals alone ensure the association.

The more distinctive the person’s appearance, the more inevitable this becomes, until the fabricated content appears in image searches and ultimately reaches the victim.

Face to Face

The most common means of disseminating identity-focused models is currently through Low-Rank Adaptation (LoRA), wherein the user trains on a small number of images for a few hours against the weights of a far larger foundation model such as Stable Diffusion (for static images, mostly) or Hunyuan Video, for video deepfakes.

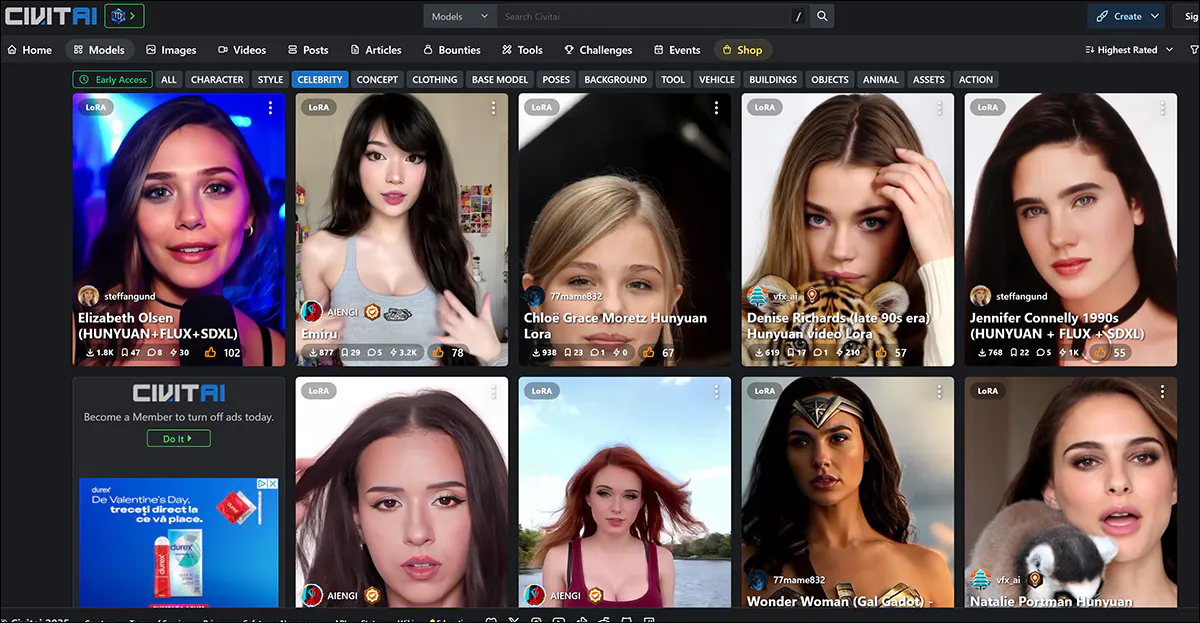

The most common targets of LoRAs, including the new breed of video-based LoRAs, are female celebrities, whose fame exposes them to this kind of treatment with less public criticism than in the case of ‘unknown’ victims, due to the assumption that such derivative works are covered under ‘fair use’ (at least in the USA and Europe).

Female celebrities dominate the LoRA and Dreambooth listings on the civit.ai portal. The most popular such LoRA currently has more than 66,000 downloads, which is considerable, given that this use of AI is still seen as a ‘fringe’ activity.

There is no such public forum for the non-celebrity victims of deepfaking, who only surface in the media when prosecution cases arise, or when the victims speak out in popular outlets.

However, in both scenarios, the models used to fake the target identities have ‘distilled’ their training data so completely into the latent space of the model that it is difficult to identify the source images that were used.

If it were possible to do so within an acceptable margin of error, this would enable the prosecution of those who share LoRAs, since it not only proves the intent to deepfake a particular identity (i.e., that of a specific ‘unknown’ person, even if the malefactor never names them during the defamation process), but also exposes the uploader to copyright infringement charges, where applicable.

The latter would be useful in jurisdictions where legal regulation of deepfaking technologies is lacking or lagging behind.

Over-Exposed

The objective of training a foundation model, such as the multi-gigabyte base model that a user might download from Hugging Face, is that the model should become well-generalized and ductile. This involves training on an adequate number of diverse images, with appropriate settings, and ending training before the model ‘overfits’ to the data.

An overfitted model has seen the data so many (excessive) times during the training process that it will tend to reproduce images that are very similar to it, thereby exposing the source of the training data.

The identity ‘Ann Graham Lotz’ can be almost perfectly reproduced in the Stable Diffusion V1.5 model. The reconstruction is nearly identical to the training data (on the left in the image above). Source: https://arxiv.org/pdf/2301.13188

However, overfitted models are generally discarded by their creators rather than distributed, since they are in any case unfit for purpose. Therefore this is an unlikely forensic ‘windfall’. In any case, the principle applies more to the expensive and high-volume training of foundation models, where multiple versions of the same image that have crept into a huge source dataset may make certain training images easy to invoke (see image and example above).

Things are a little different in the case of LoRA and Dreambooth models (though Dreambooth has fallen out of fashion due to its large file sizes). Here, the user selects a very limited number of diverse images of a subject, and uses these to train a LoRA.

On the left, output from a Hunyuan Video LoRA. On the right, the data that made the resemblance possible (images used with permission of the person depicted).

Frequently the LoRA will have a trained-in trigger-word, such as [nameofcelebrity]. However, very often the specifically-trained subject will appear in generated output even without such prompts, because even a well-balanced (i.e., not overfitted) LoRA is somewhat ‘fixated’ on the material it was trained on, and will tend to include it in any output.

This predisposition, combined with the limited image numbers that are optimal for a LoRA dataset, exposes the model to forensic analysis, as we shall see.

Unmasking the Data

These matters are addressed in a new paper from Denmark, which offers a methodology to identify source images (or groups of source images) in a black-box Membership Inference Attack (MIA). The technique at least in part involves the use of custom-trained models that are designed to help expose source data by generating their own ‘deepfakes’:

Examples of ‘fake’ images generated by the new approach, at ever-increasing levels of Classifier-Free Guidance (CFG), up to the point of destruction. Source: https://arxiv.org/pdf/2502.11619

Though the work, titled Membership Inference Attacks for Face Images Against Fine-Tuned Latent Diffusion Models, is a most interesting contribution to the literature around this particular topic, it is also an inaccessible and tersely-written paper that needs considerable decoding. Therefore we’ll cover at least the basic principles behind the project here, and a selection of the results obtained.

In effect, if someone fine-tunes an AI model on your face, the authors’ method can help prove it by looking for telltale signs of memorization in the model’s generated images.

In the first instance, a target AI model is fine-tuned on a dataset of face images, making it more likely to reproduce details from those images in its outputs. Subsequently, a classifier attack model is trained using AI-generated images from the target model as ‘positives’ (suspected members of the training set) and other images from a different dataset as ‘negatives’ (non-members).

By learning the subtle differences between these groups, the attack model can predict whether a given image was part of the original fine-tuning dataset.

The attack is most effective in cases where the AI model has been fine-tuned extensively, meaning that the more a model is specialized, the easier it is to detect whether certain images were used. This generally applies to LoRAs designed to recreate celebrities or private individuals.

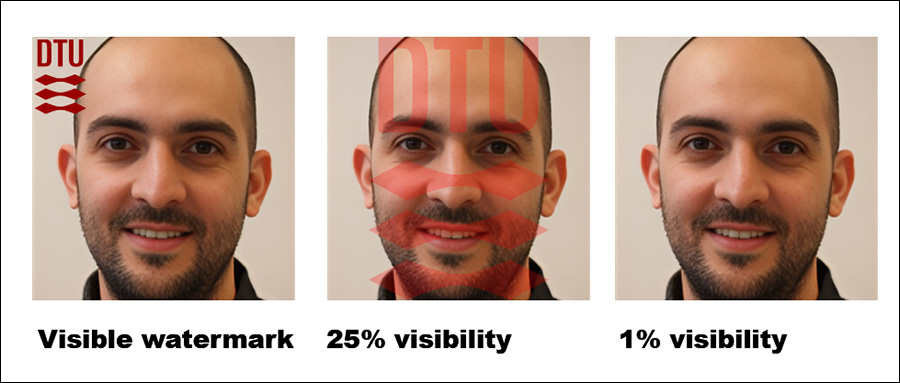

The authors also found that adding visible watermarks to training images makes detection easier still, though hidden watermarks do not help as much.

Impressively, the approach is tested in a black-box setting, meaning it works without access to the model’s internal details, only its outputs.

The method arrived at is computationally intensive, as the authors concede; however, the value of this work lies in indicating the direction for further research, and in showing that data can be realistically extracted to an acceptable tolerance. Given its seminal nature, it need not run on a smartphone at this stage.

Method/Data

Several datasets from the Technical University of Denmark (DTU, the host institution for the paper’s three researchers) were used in the study, for fine-tuning the target model and for training and testing the attack model.

The datasets used were derived from DTU Orbit:

DseenDTU The base image set.

DDTU Images scraped from DTU Orbit.

DseenDTU A partition of DDTU used to fine-tune the target model.

DunseenDTU A partition of DDTU that was not used to fine-tune any image generation model and was instead used to test or train the attack model.

wmDseenDTU A partition of DDTU with visible watermarks used to fine-tune the target model.

hwmDseenDTU A partition of DDTU with hidden watermarks used to fine-tune the target model.

DgenDTU Images generated by a Latent Diffusion Model (LDM) which has been fine-tuned on the DseenDTU image set.

The datasets used to fine-tune the target model consist of image-text pairs captioned by the BLIP captioning model (perhaps not by coincidence one of the most popular uncensored models in the casual AI community).

BLIP was set to prepend the phrase ‘a dtu headshot of a’ to each description.

Additionally, several datasets from Aalborg University (AAU) were employed in the tests, all derived from the AAU VBN corpus:

DAAU Images scraped from AAU VBN.

DseenAAU A partition of DAAU used to fine-tune the target model.

DunseenAAU A partition of DAAU that is not used to fine-tune any image generation model, but rather is used to test or train the attack model.

DgenAAU Images generated by an LDM fine-tuned on the DseenAAU image set.

As with the earlier sets, a fixed phrase was prepended, in this case ‘a aau headshot of a’. This mirrors the DTU dataset, where all labels followed the format ‘a dtu headshot of a (…)’, reinforcing the dataset’s core characteristics during fine-tuning.

Tests

Multiple experiments were conducted to evaluate how well the membership inference attacks performed against the target model. Each test aimed to determine whether it was possible to carry out a successful attack within the schema shown below, where the target model is fine-tuned on an image dataset that was obtained without authorization.

Schema for the approach.

With the fine-tuned model queried to generate output images, these images are then used as positive examples for training the attack model, while additional unrelated images are included as negative examples.
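As a rough illustration of this labeling step, the following Python sketch assembles a labeled training set for an attack model. The `LabeledImage` type, the `build_attack_training_set` helper and the file names are hypothetical, introduced only for this example; the paper’s own pipeline may be organized differently.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class LabeledImage:
    path: str
    label: int  # 1 = positive (generated by the target model), 0 = negative


def build_attack_training_set(generated_paths, unrelated_paths, seed=0):
    """Label generated images as positives and unrelated images as negatives."""
    samples = [LabeledImage(p, 1) for p in generated_paths]
    samples += [LabeledImage(p, 0) for p in unrelated_paths]
    # Shuffle so that positives and negatives are interleaved during training.
    random.Random(seed).shuffle(samples)
    return samples


# Hypothetical file names, for illustration only.
training_set = build_attack_training_set(
    ["gen_001.png", "gen_002.png"],
    ["other_001.png"],
)
```

Keeping the label next to the path makes it straightforward to audit which source each example came from.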

The attack model is trained using supervised learning and is then tested on new images to determine whether they were originally part of the dataset used to fine-tune the target model. To evaluate the accuracy of the attack, 15% of the test data is set aside for validation.
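The hold-out step might look something like the sketch below, which reserves 15% of a list of samples for validation. The function name, the shuffling and the fixed seed are assumptions made for illustration, not details taken from the paper.

```python
import random


def split_holdout(samples, fraction=0.15, seed=0):
    """Shuffle a copy of `samples` and reserve `fraction` of it for validation."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * fraction)
    # The first n_val items become the validation set; the rest are kept.
    return shuffled[n_val:], shuffled[:n_val]


# Stand-in sample IDs; in practice these would be labeled images.
remaining, validation = split_holdout(range(100))
```

With 100 samples this yields 85 retained items and 15 validation items, with no overlap between the two.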

Because the target model is fine-tuned on a known dataset, the actual membership status of each image is already established when creating the training data for the attack model. This controlled setup allows for a clear assessment of how effectively the attack model can distinguish between images that were part of the fine-tuning dataset and those that were not.

For these tests, Stable Diffusion V1.5 was used. Though this rather old model crops up a lot in research due to the need for consistent testing, and the extensive corpus of prior work that uses it, this is an appropriate use case; V1.5 remained popular for LoRA creation in the Stable Diffusion hobbyist community for a long time, despite multiple subsequent version releases, and even in spite of the advent of Flux, because the model is completely uncensored.

The researchers’ attack model was based on ResNet-18, with the model’s pretrained weights retained. ResNet-18’s 1000-neuron last layer was substituted with a fully-connected layer with two neurons. Training loss was categorical cross-entropy, and the Adam optimizer was used.

For each test, the attack model was trained five times using different random seeds to compute 95% confidence intervals for the key metrics. Zero-shot classification with the CLIP model was used as the baseline.
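To make the five-seed procedure concrete, here is one common way to turn a handful of per-seed scores into a 95% confidence interval, using Student’s t-distribution. Whether the authors used a t-interval or another method is not stated here, so treat this as an assumption; the scores in the example are made-up placeholders, not results from the paper.

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided 95% critical values of Student's t, keyed by degrees of freedom.
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571, 9: 2.262}


def confidence_interval_95(scores):
    """Return (mean, half_width) of a 95% t-interval over per-seed scores."""
    n = len(scores)
    t_crit = T_CRIT_95[n - 1]  # five seeds give four degrees of freedom
    half_width = t_crit * stdev(scores) / sqrt(n)
    return mean(scores), half_width


# Placeholder scores for five seeds (illustrative only, not from the paper).
centre, half_width = confidence_interval_95([0.90, 0.92, 0.91, 0.89, 0.93])
print(f"{centre:.3f} +/- {half_width:.3f}")
```

With so few runs, the t critical value (2.776 for five seeds) is noticeably larger than the 1.96 of a normal approximation, which widens the interval accordingly.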

(Please note that the original primary results table in the paper is terse and unusually difficult to understand. Therefore I have reformulated it below in a more user-friendly fashion.)

The researchers’ attack method proved most effective when targeting fine-tuned models, particularly those trained on a specific set of images, such as an individual’s face. However, while the attack can determine whether a dataset was used, it struggles to identify individual images within that dataset.

In practical terms, the latter is not necessarily a hindrance to using an approach such as this forensically; while there is relatively little value in establishing that a famous dataset such as ImageNet was used in a model, an attacker targeting a private individual (not a celebrity) will tend to have far less choice of source data, and will need to fully exploit available data groups such as social media albums and other online collections. These effectively create a ‘hash’ which can be uncovered by the methods outlined.

The paper notes that another way to improve accuracy is to use AI-generated images as ‘non-members’, rather than relying solely on real images. This prevents artificially high success rates that could otherwise mislead the results.

An additional factor that significantly influences detection, the authors note, is watermarking. When training images contain visible watermarks, the attack becomes highly effective, while hidden watermarks offer little to no advantage.

The right-most figure shows the actual ‘hidden’ watermark used in the tests.

Finally, the level of guidance in text-to-image generation also plays a role, with the ideal balance found at a guidance scale of around 8. Even when no direct prompt is used, a fine-tuned model still tends to produce outputs that resemble its training data, reinforcing the effectiveness of the attack.
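For readers unfamiliar with the guidance scale: in classifier-free guidance as it is commonly formulated, the sampler blends an unconditional and a prompt-conditioned noise prediction at each denoising step. The sketch below shows that standard blend on plain Python lists; the function name and the toy numbers are illustrative only, and real pipelines apply the same arithmetic to tensors.

```python
def cfg_combine(uncond, cond, scale):
    """Blend two noise predictions: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy two-element "predictions" at a guidance scale of 8.
guided = cfg_combine([0.0, 1.0], [1.0, 3.0], 8.0)
```

A scale of 1 returns the conditioned prediction unchanged, while larger values push the output further in the direction of the prompt, which is why very high settings eventually degrade the image.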

Conclusion

It is a shame that this interesting paper has been written in such an inaccessible manner, as it should be of some interest to privacy advocates and casual AI researchers alike.

Though membership inference attacks may turn out to be an interesting and fruitful forensic tool, it is more important, perhaps, for this research strand to develop applicable broad principles, to prevent it ending up in the same game of whack-a-mole that has occurred for deepfake detection in general, when the release of a newer model adversely affects detection and similar forensic systems.

Since there is some evidence of a higher-level guiding principle gleaned in this new research, we can hope to see more work in this direction.

First published Friday, February 21, 2025