I spent the spring of my junior year interning at Rockset, and it couldn't have been a better choice. When I first arrived at the office on a sunny day in San Mateo, I had no idea that I was about to meet so many systems engineering gurus, or that I was about to eat immensely good food from the festive neighboring streets. Working with my talented and resourceful mentor, Ben (Software Engineer, Systems), I've been able to learn more than I ever thought I could in three months! I now see myself as fairly well seasoned at C++ development, more knowledgeable about different database architectures, and slightly better at Super Smash. Only slightly.

One thing I really appreciated was that even on the first day of the internship, I was able to push meaningful code by implementing the SUFFIXES SQL function, something that was requested by and immediately impactful to Rockset's customers.

Over the course of my internship at Rockset, I got to dive deeper into many aspects of our systems backend, two of which I'll go into more detail about. I got myself into far more segfaults and long hours spent debugging in GDB than I bargained for, which I can now say I came out the better for. :D

Query Sort Optimization

One of my favorite projects this internship was optimizing our sort process for queries with the ORDER BY keyword in SQL. For example, queries like:

SELECT a FROM b ORDER BY c OFFSET 1000

can run up to 45% faster with the offset-based optimization added, which is a big performance improvement, especially for queries over large amounts of data.

We use operators in Rockset to separate responsibilities in the execution of a query, based on different processes such as scans, sorts and joins. One such operator is the SortOperator, which facilitates ordered queries and handles sorting. The SortOperator used a standard library sort to power ordered queries, which cannot respond to timeouts during query execution since it has no framework for interrupt handling. This means that when using standard sorts, the query deadline is not enforced, and CPU is wasted on queries that should have already timed out.

The sorting algorithms used by common standard library implementations are a strategic combination of quicksort, heapsort and insertion sort, called introsort. Using a strategic loop and tail recursion, we can reduce the number of recursive calls made in the sort, thereby shaving a significant amount of time off the sort. Recursion also stops once a partition shrinks below a small size threshold, at which point insertion sort finishes it, or once a depth limit is reached, at which point heapsort takes over. The number of comparisons and recursive calls made in a sort are very important for performance, and my mission was to reduce both in order to optimize larger sorts.
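For readers unfamiliar with introsort, here is a minimal, self-contained sketch of the textbook algorithm described above. It is not Rockset's SortOperator code: the names (`sketch::introsort`, `kInsertionThreshold`), the size threshold of 16, the median-of-three Lomuto partition, and the `2 * log2(n)` depth budget are illustrative assumptions modeled on common standard library implementations. Note the loop-plus-recursion structure: only the right partition gets a recursive call, while the left partition is handled by the next iteration of the `while` loop.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

namespace sketch {

// Partitions at or below this size are finished with insertion sort (illustrative value).
constexpr std::ptrdiff_t kInsertionThreshold = 16;

template <typename T>
void insertionSort(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi) {
  for (std::ptrdiff_t i = lo + 1; i < hi; ++i) {
    T key = std::move(v[i]);
    std::ptrdiff_t j = i - 1;
    while (j >= lo && key < v[j]) {  // shift larger elements one slot right
      v[j + 1] = std::move(v[j]);
      --j;
    }
    v[j + 1] = std::move(key);
  }
}

// Median-of-three Lomuto partition; returns the pivot's final (sorted) index.
template <typename T>
std::ptrdiff_t partition(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi) {
  std::ptrdiff_t mid = lo + (hi - lo) / 2;
  // Order v[lo] <= v[mid] <= v[hi - 1] so v[mid] holds the median of the three.
  if (v[mid] < v[lo]) std::swap(v[mid], v[lo]);
  if (v[hi - 1] < v[lo]) std::swap(v[hi - 1], v[lo]);
  if (v[hi - 1] < v[mid]) std::swap(v[hi - 1], v[mid]);
  std::swap(v[mid], v[hi - 1]);  // move the median into the pivot slot
  std::ptrdiff_t store = lo;
  for (std::ptrdiff_t j = lo; j < hi - 1; ++j) {
    if (v[j] < v[hi - 1]) std::swap(v[store++], v[j]);
  }
  std::swap(v[store], v[hi - 1]);
  return store;
}

template <typename T>
void introsortLoop(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi, int depthLimit) {
  while (hi - lo > kInsertionThreshold) {
    if (depthLimit == 0) {
      // Too many unbalanced partitions: heapsort guarantees O(n log n) for this range.
      std::make_heap(v.begin() + lo, v.begin() + hi);
      std::sort_heap(v.begin() + lo, v.begin() + hi);
      return;
    }
    --depthLimit;
    std::ptrdiff_t p = partition(v, lo, hi);
    introsortLoop(v, p + 1, hi, depthLimit);  // recurse on the right side only
    hi = p;                                   // loop on the left side instead of recursing
  }
  insertionSort(v, lo, hi);
}

template <typename T>
void introsort(std::vector<T>& v) {
  if (v.size() < 2) return;
  int depthLimit = 2 * static_cast<int>(std::log2(v.size()));
  introsortLoop(v, 0, static_cast<std::ptrdiff_t>(v.size()), depthLimit);
}

}  // namespace sketch
```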

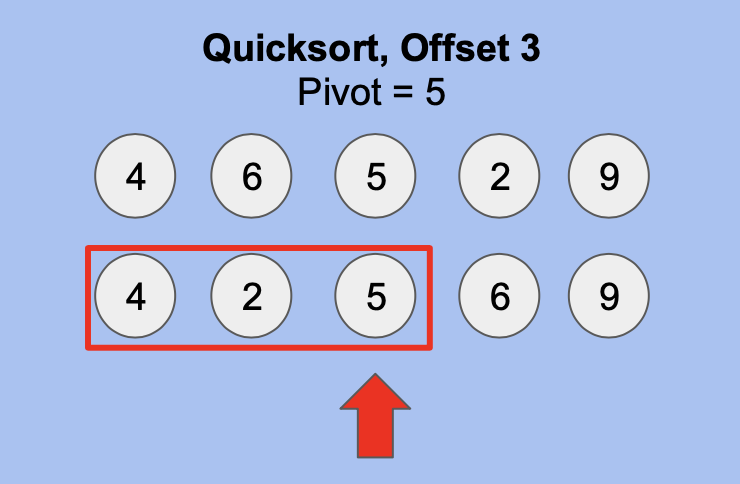

For the offset optimization, I was able to reduce recursive calls by an amount proportional to the offset by keeping track of the pivots used by previous recursive calls. Based on my modifications to introsort, we know that after a single partitioning, the pivot is in its correct position. Using this position, we can eliminate the recursive calls on the elements before the pivot if its position is less than or equal to the requested offset.

For example, in the image above, we are able to halt recursion on the values before and including the pivot, 5, since its position is <= offset.
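The pruning rule can be illustrated with a simplified quicksort. This is a hedged sketch rather than the actual Rockset change: it omits introsort's heapsort and insertion sort fallbacks for brevity, uses a middle-element Lomuto partition, and the names `offsetSort` and `sortForOffset` are hypothetical. Because each partition step leaves the pivot at its final index `p`, whenever `p <= offset` the pivot is already in place and everything to its left belongs to rows that OFFSET discards, so that side is never sorted.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

namespace sketch {

// Lomuto partition around the middle element; returns the pivot's final sorted index.
template <typename T>
std::ptrdiff_t partition(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi) {
  std::swap(v[lo + (hi - lo) / 2], v[hi - 1]);
  std::ptrdiff_t store = lo;
  for (std::ptrdiff_t j = lo; j < hi - 1; ++j) {
    if (v[j] < v[hi - 1]) std::swap(v[store++], v[j]);
  }
  std::swap(v[store], v[hi - 1]);
  return store;
}

// Sorts v[lo, hi), except that slices lying entirely before `offset` are left
// unsorted, since OFFSET drops those rows anyway.
template <typename T>
void offsetSort(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi, std::ptrdiff_t offset) {
  while (hi - lo > 1) {
    std::ptrdiff_t p = partition(v, lo, hi);
    if (p <= offset) {
      lo = p + 1;  // pivot is placed and its left side is discarded: skip that recursion
    } else {
      offsetSort(v, p + 1, hi, offset);  // right side is always needed
      hi = p;                            // left side still overlaps the returned rows
    }
  }
}

// Hypothetical entry point: afterwards v[offset..] is in sorted order.
template <typename T>
void sortForOffset(std::vector<T>& v, std::size_t offset) {
  offsetSort(v, 0, static_cast<std::ptrdiff_t>(v.size()), static_cast<std::ptrdiff_t>(offset));
}

}  // namespace sketch
```

After `sortForOffset(v, k)`, only `v[k..]` is guaranteed to be ordered, which is all a query like `SELECT a FROM b ORDER BY c OFFSET 1000` returns; the skipped prefix still holds exactly the k smallest values, just unordered.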

In order to serve cancellation requests, I had to make sure that these checks were both timely and performed at regular intervals, in a way that didn't increase the latency of sorts. This meant that tying cancellation checks 1:1 to the number of comparisons or recursive calls would be very damaging to latency. The solution was to tie cancellation checks to recursion depth instead; through subsequent benchmarking, I found that a recursion depth of >28 generally corresponded to roughly one second of execution time between levels. For example, between recursion depths of 29 and 28, there is ~1 second of execution. Similar benchmarks were used to determine when to check for cancellations in the heapsort.
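A sketch of depth-correlated cancellation follows. Rockset's actual deadline and cancellation plumbing isn't described in this post, so a `std::atomic<bool>` flag stands in for it and a hypothetical `SortCancelled` exception unwinds the recursion. The intervals `kCheckEveryLevels` and `kHeapCheckInterval` are placeholders; the point above is that the real values came from benchmarking. The flag is read once every few recursion levels, and once every few thousand pops in the heapsort fallback, rather than on every comparison.

```cpp
#include <algorithm>
#include <atomic>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

namespace sketch {

// Hypothetical error type thrown when the query is cancelled or times out mid-sort.
struct SortCancelled : std::runtime_error {
  SortCancelled() : std::runtime_error("sort cancelled") {}
};

constexpr int kCheckEveryLevels = 4;                 // placeholder: check once per 4 levels
constexpr std::ptrdiff_t kHeapCheckInterval = 4096;  // placeholder: check once per 4096 pops
constexpr std::ptrdiff_t kSmall = 16;                // small ranges are insertion-sorted

template <typename T>
std::ptrdiff_t partition(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi) {
  std::swap(v[lo + (hi - lo) / 2], v[hi - 1]);
  std::ptrdiff_t store = lo;
  for (std::ptrdiff_t j = lo; j < hi - 1; ++j) {
    if (v[j] < v[hi - 1]) std::swap(v[store++], v[j]);
  }
  std::swap(v[store], v[hi - 1]);
  return store;
}

// Heapsort driven one pop at a time so it can poll the flag periodically.
template <typename T>
void cancellableHeapsort(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi,
                         const std::atomic<bool>& cancelled) {
  auto first = v.begin() + lo;
  std::make_heap(first, v.begin() + hi);
  for (std::ptrdiff_t end = hi, pops = 0; end - lo > 1; --end, ++pops) {
    if (pops % kHeapCheckInterval == 0 && cancelled.load(std::memory_order_relaxed)) {
      throw SortCancelled();
    }
    std::pop_heap(first, v.begin() + end);
  }
}

template <typename T>
void sortLoop(std::vector<T>& v, std::ptrdiff_t lo, std::ptrdiff_t hi, int depth,
              int depthLimit, const std::atomic<bool>& cancelled) {
  while (hi - lo > kSmall) {
    // Poll at regular depth intervals instead of on every comparison.
    if (depth % kCheckEveryLevels == 0 && cancelled.load(std::memory_order_relaxed)) {
      throw SortCancelled();
    }
    if (depth >= depthLimit) {
      cancellableHeapsort(v, lo, hi, cancelled);
      return;
    }
    std::ptrdiff_t p = partition(v, lo, hi);
    sortLoop(v, p + 1, hi, depth + 1, depthLimit, cancelled);
    hi = p;   // continue with the left side as the next level down
    ++depth;
  }
  // Insertion sort for the small tail, via the rotate/upper_bound idiom.
  auto first = v.begin() + lo;
  for (auto it = first; it != v.begin() + hi; ++it) {
    std::rotate(std::upper_bound(first, it, *it), it, it + 1);
  }
}

template <typename T>
void cancellableSort(std::vector<T>& v, const std::atomic<bool>& cancelled) {
  if (v.size() < 2) return;
  int depthLimit = 2 * static_cast<int>(std::log2(v.size()));
  sortLoop(v, 0, static_cast<std::ptrdiff_t>(v.size()), 0, depthLimit, cancelled);
}

}  // namespace sketch
```

Throwing mid-sort leaves the vector partially ordered but still holding the same elements, which is acceptable for a query that is being abandoned. A relaxed atomic load keeps each check cheap on common hardware.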

This portion of my internship was heavily focused on performance and involved meticulous benchmarking of query execution times, which helped me understand how to weigh tradeoffs in engineering. Query performance is crucial, since it is likely a deciding factor in whether to use Rockset: it determines how fast we can process data.

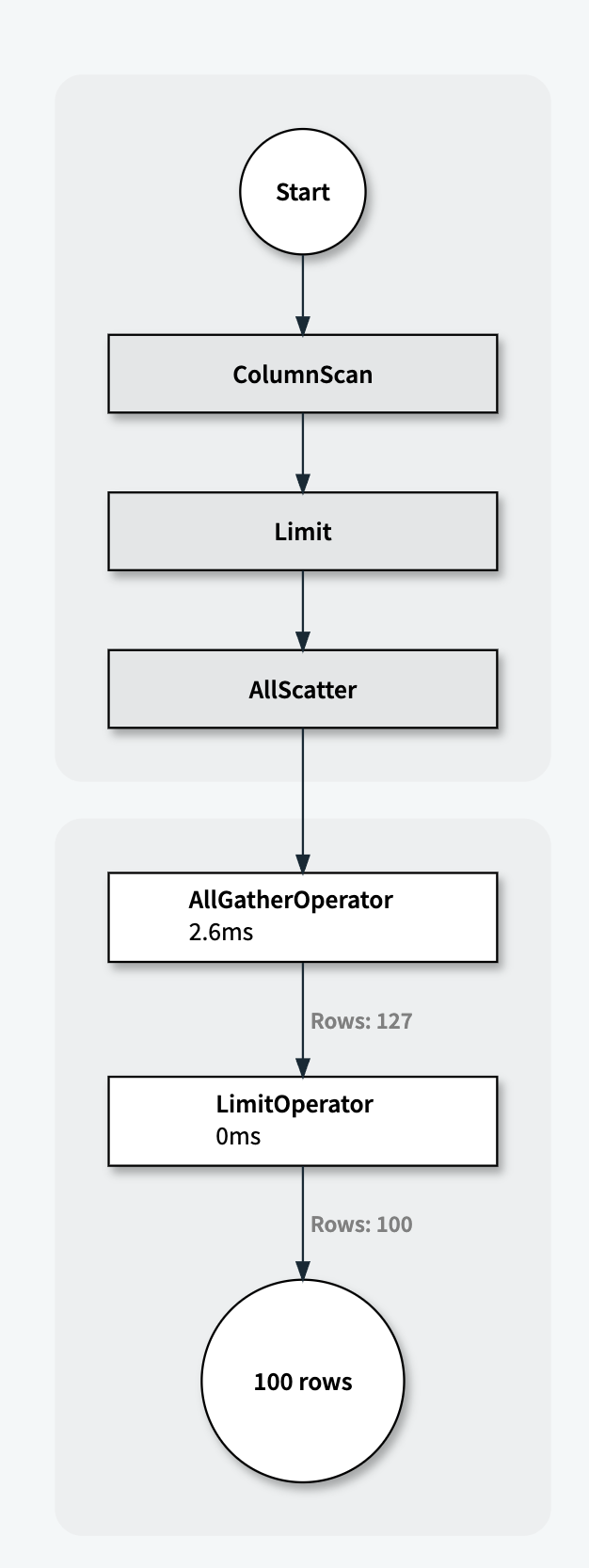

Batching QueryStats to Redis

Another interesting issue I worked on was reducing the latency of Rockset's Query Stats publisher after a query is run. Query Stats are important because they provide visibility into where resources like CPU time and memory are used in query execution. These stats help our backend team improve query execution performance. There are many different kinds of stats, especially for different operators, which show how long their processes take and how much CPU they use. In the future, we plan to share a visual representation of these stats with our users so that they better understand resource utilization in Rockset's distributed query engine.

We currently send the stats from the operators used in query execution to Redis for intermediate storage, from where our API server pulls them into an internal tool. For complicated or larger queries, these stats are slow to populate, mostly because of the latency of tens of thousands of round trips to Redis.

My task was to decrease the number of trips to Redis by batching them by queryID, and to ensure that query stats are populated while preventing spikes in the number of query stats waiting to be pushed. This efficiency improvement would help us scale our query stats system to handle larger, more complex queries. This problem was particularly interesting to me because it deals with the exchange of data between two different systems in a batched and ordered fashion.

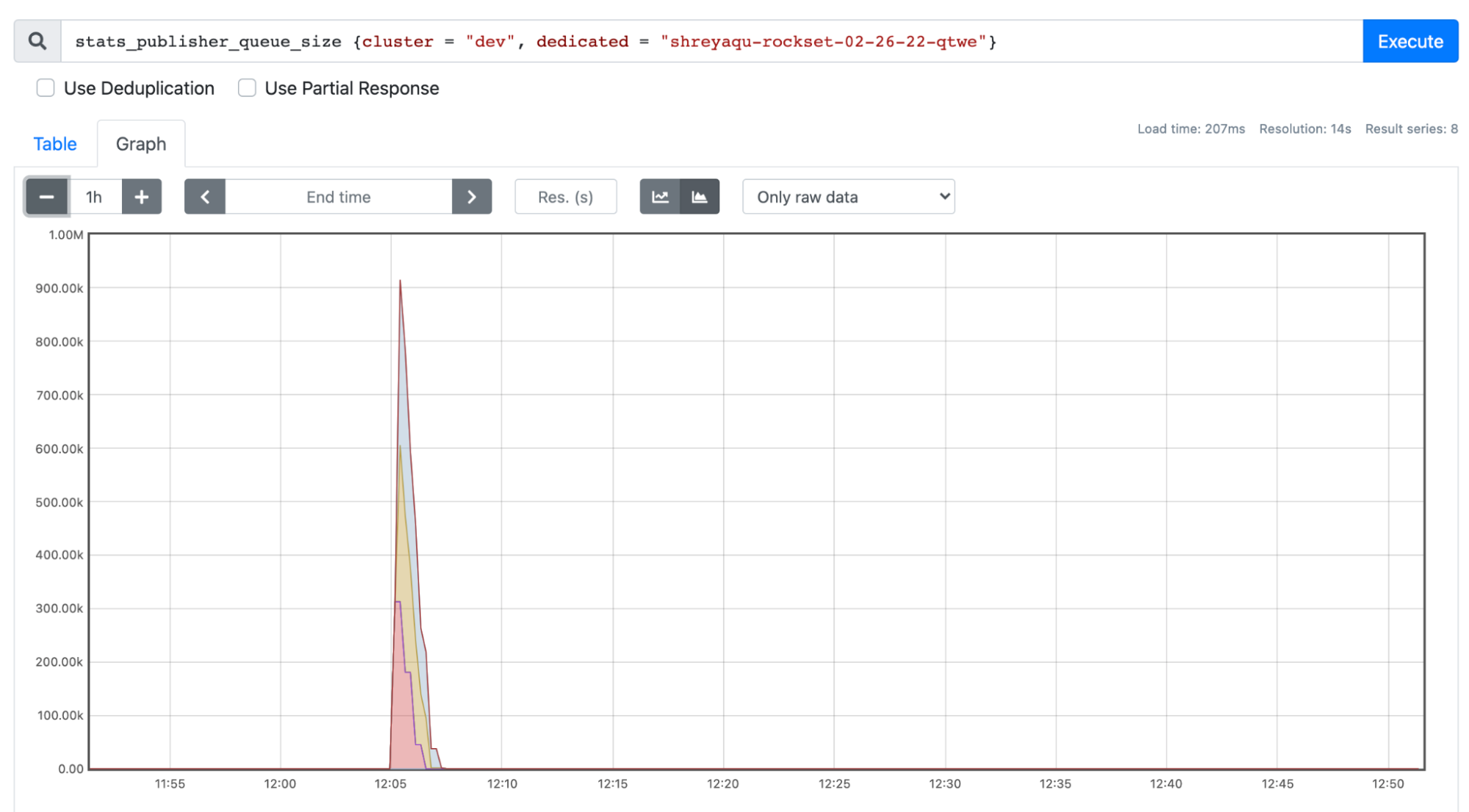

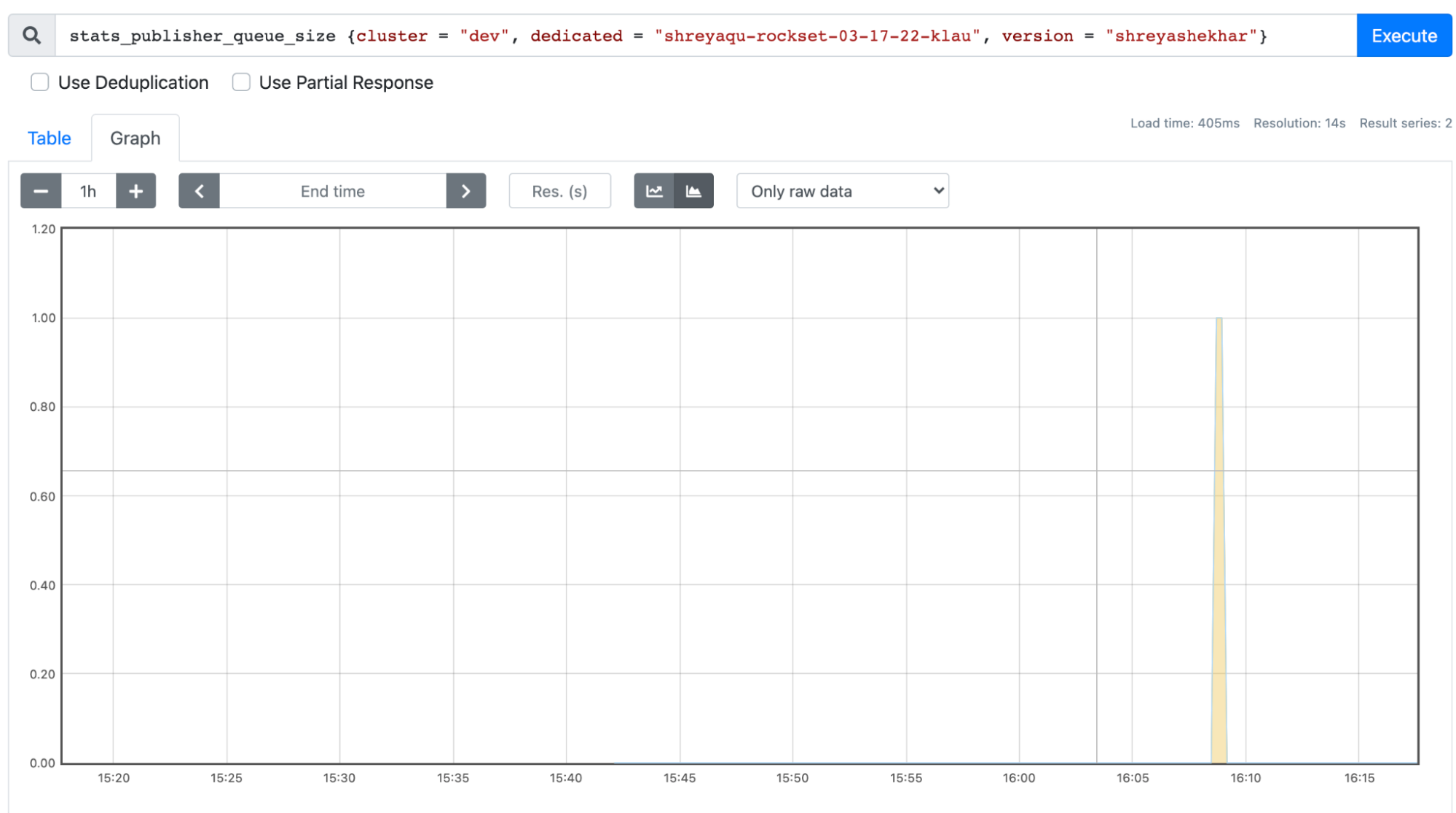

The solution to this issue involved a thread-safe map structure of queryID -> queue, which was used to store and offload query stats specific to a queryID. These stats were sent to Redis in as few trips as possible by eagerly draining a queryID's queue whenever it had been populated, and pushing all of the stats present to Redis at once. I also refactored the Redis API code we were using to send query stats, creating a function that can send multiple stats at once instead of just one at a time. As shown in the images above, this dramatically reduced the spikes in query stats waiting to be sent to Redis, never letting multiple query stats from the same queryID fill up the queue.

As shown in the screenshots above, the stats publisher queue size was drastically reduced, from over 900k to a maximum of 1!
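The batching scheme can be sketched as a mutex-guarded map from queryID to a queue of pending stats. This is an illustrative sketch under stated assumptions, not Rockset's publisher: stats are modeled as serialized strings, and the Redis write is replaced by a hypothetical `BatchSink` callback where one call stands for one round trip. `flush()` swaps out the whole map under the lock, then sends each queryID's entire queue in a single call outside the lock, so operators producing stats are never blocked on network I/O.

```cpp
#include <cstddef>
#include <functional>
#include <mutex>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

namespace sketch {

// Hypothetical stand-in for a batched Redis write: one call == one round trip.
using BatchSink = std::function<void(const std::string& queryId, std::vector<std::string> stats)>;

class QueryStatsBatcher {
 public:
  explicit QueryStatsBatcher(BatchSink sink) : sink_(std::move(sink)) {}

  // Called by operators as stats are produced; safe to call from many threads.
  void enqueue(const std::string& queryId, std::string stat) {
    std::lock_guard<std::mutex> lock(mu_);
    pending_[queryId].push_back(std::move(stat));  // FIFO order per queryID
  }

  // Drains every queryID's queue and ships each one in a single batched call.
  // Returns the number of round trips made.
  std::size_t flush() {
    std::unordered_map<std::string, std::vector<std::string>> drained;
    {
      std::lock_guard<std::mutex> lock(mu_);
      drained.swap(pending_);  // producers continue on a fresh, empty map
    }
    for (auto& [queryId, stats] : drained) {
      sink_(queryId, std::move(stats));  // network I/O happens outside the lock
    }
    return drained.size();
  }

 private:
  std::mutex mu_;
  std::unordered_map<std::string, std::vector<std::string>> pending_;
  BatchSink sink_;
};

}  // namespace sketch
```

In a real publisher, a background thread would call `flush()` as soon as new stats arrive; with this shape, 1,000 stats spread across two queries cost two round trips instead of 1,000.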

More About the Culture & The Experience

What I really appreciated about my internship experience at Rockset was the amount of autonomy I had over the work I was doing, and the high-quality mentorship I received. My daily work felt similar to that of a full-time engineer on the systems team, since I was able to choose and work on tasks I found interesting while connecting with different engineers to learn more about the code I was working on. I was even able to reach out to other teams such as Sales and Marketing to learn more about their work and help out with issues I found interesting.

Another aspect I loved was the close-knit group of engineers at Rockset, something I got a lot of exposure to at Hack Week, a week-long company hackathon held in Lake Tahoe earlier this year. It was a valuable chance for me to meet other engineers at the company, and for all of us to hack away at features we felt should be integrated into Rockset's product, free of our normal daily tasks and obligations. I thought this was an amazing idea, since it encouraged engineers to work on product ideas they were personally invested in and increased ownership for everyone. Not to mention, everyone from engineers to executives was present and working together at this hackathon, which made for an open and endearing company environment. We also had countless opportunities for bonding within the engineering teams on this trip, one of which was a huge loss for me in poker. And of course, the high-stakes games of Super Smash.

Overall, my experience working as an intern at Rockset was truly everything I had hoped for, and more.

Shreya Shekhar is studying Electrical Engineering & Computer Science and Business Administration at U.C. Berkeley.

Rockset is the leading Real-time Analytics Platform Built for the Cloud, delivering fast analytics on real-time data with surprising efficiency. Learn more at rockset.com.