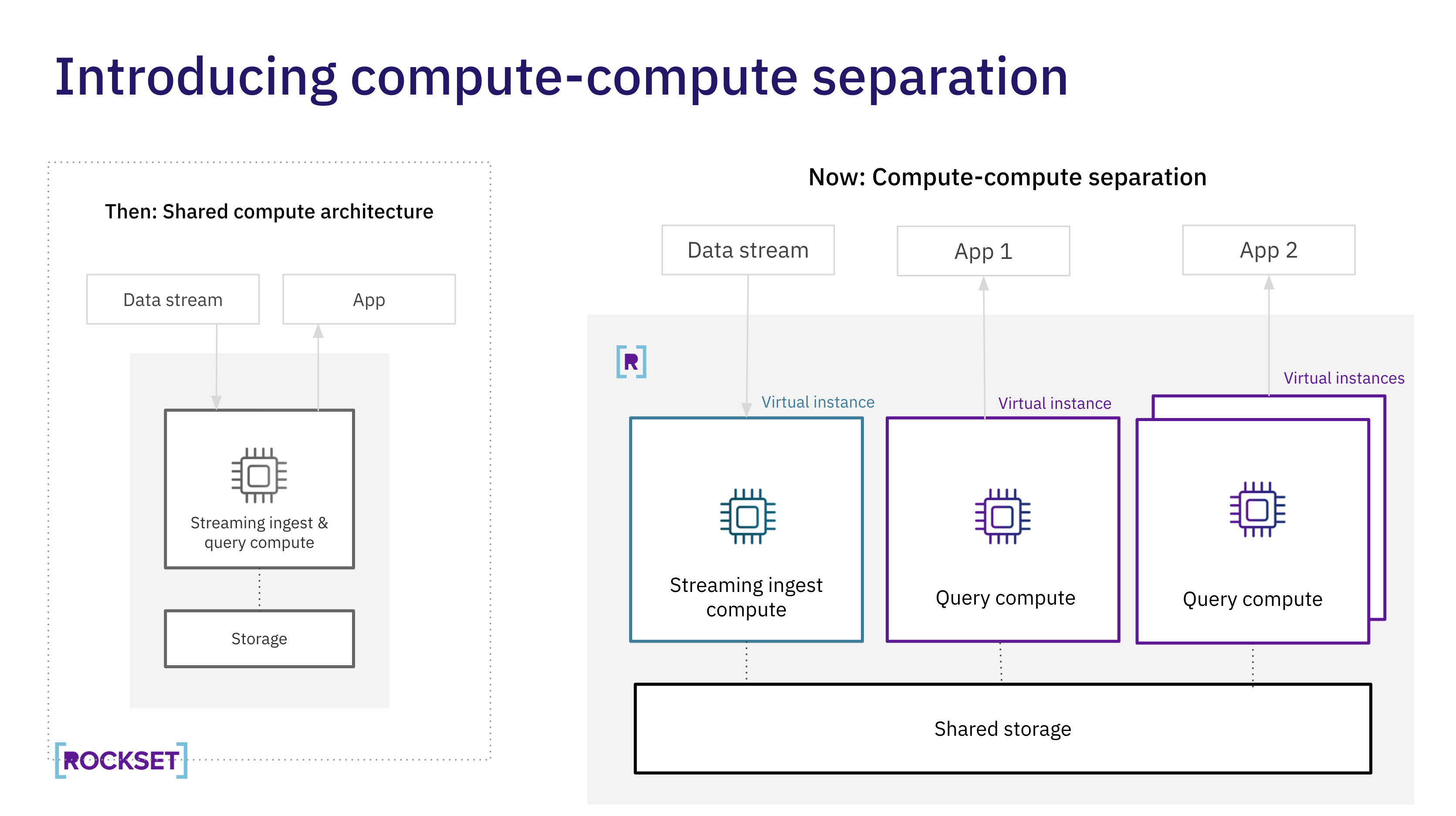

Rockset hosted a tech talk on its new cloud architecture that separates storage-compute and compute-compute for real-time analytics. With compute-compute separation in the cloud, users can allocate multiple, isolated clusters for ingest compute or query compute while sharing the same real-time data.

The talk was led by Rockset co-founder and CEO Venkat Venkataramani and principal architect Nathan Bronson as they shared how Rockset solves the challenge of compute contention by:

- Isolating streaming ingest and query compute for predictable performance even in the face of high-volume writes or reads. This enables users to avoid overprovisioning to handle bursty workloads.

- Supporting multiple applications on shared real-time data. Rockset separates compute from hot storage and allows multiple compute clusters to operate on the shared data.

- Scaling out across multiple clusters for high-concurrency applications.

Below, I cover the high-level implementation shared in the talk and recommend checking out the recording for more details on compute-compute separation.

Embedded content: https://youtu.be/jUDDokvuDLw

What’s the problem?

There’s a fundamental challenge with real-time analytics database design: streaming ingest and low-latency queries use the same compute unit. Shared compute architectures have the advantage of making recently generated data immediately available for querying. The downside is that shared compute architectures also experience contention between ingest and query workloads, leading to unpredictable performance for real-time analytics at scale.

There are three common but insufficient techniques used to address the challenge of compute contention:

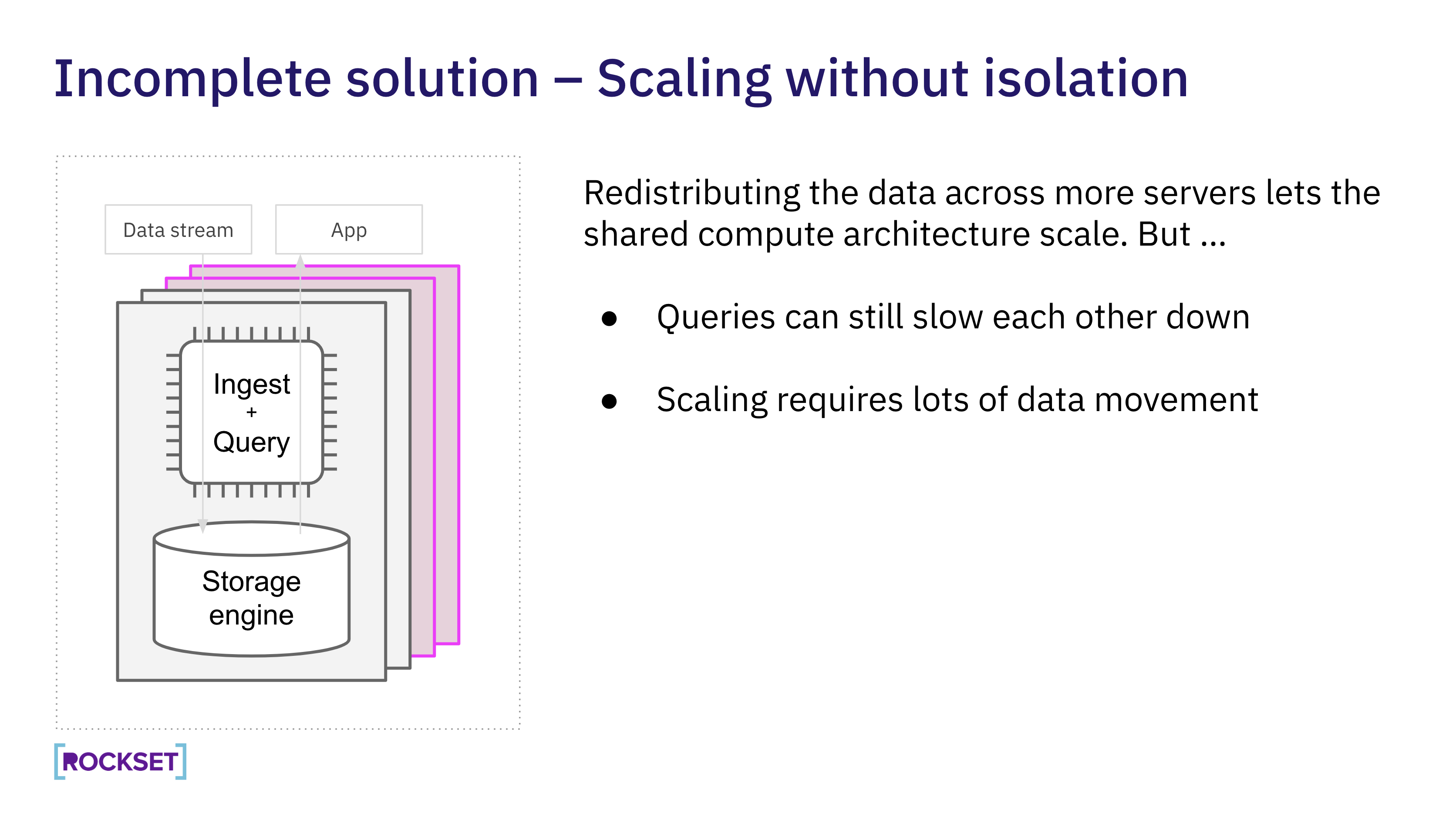

Sharding: Scale out the database across multiple nodes. Sharding misdiagnoses the problem as running out of compute rather than a lack of workload isolation. With database sharding, queries can still step on one another, and queries for one application can step on another application.

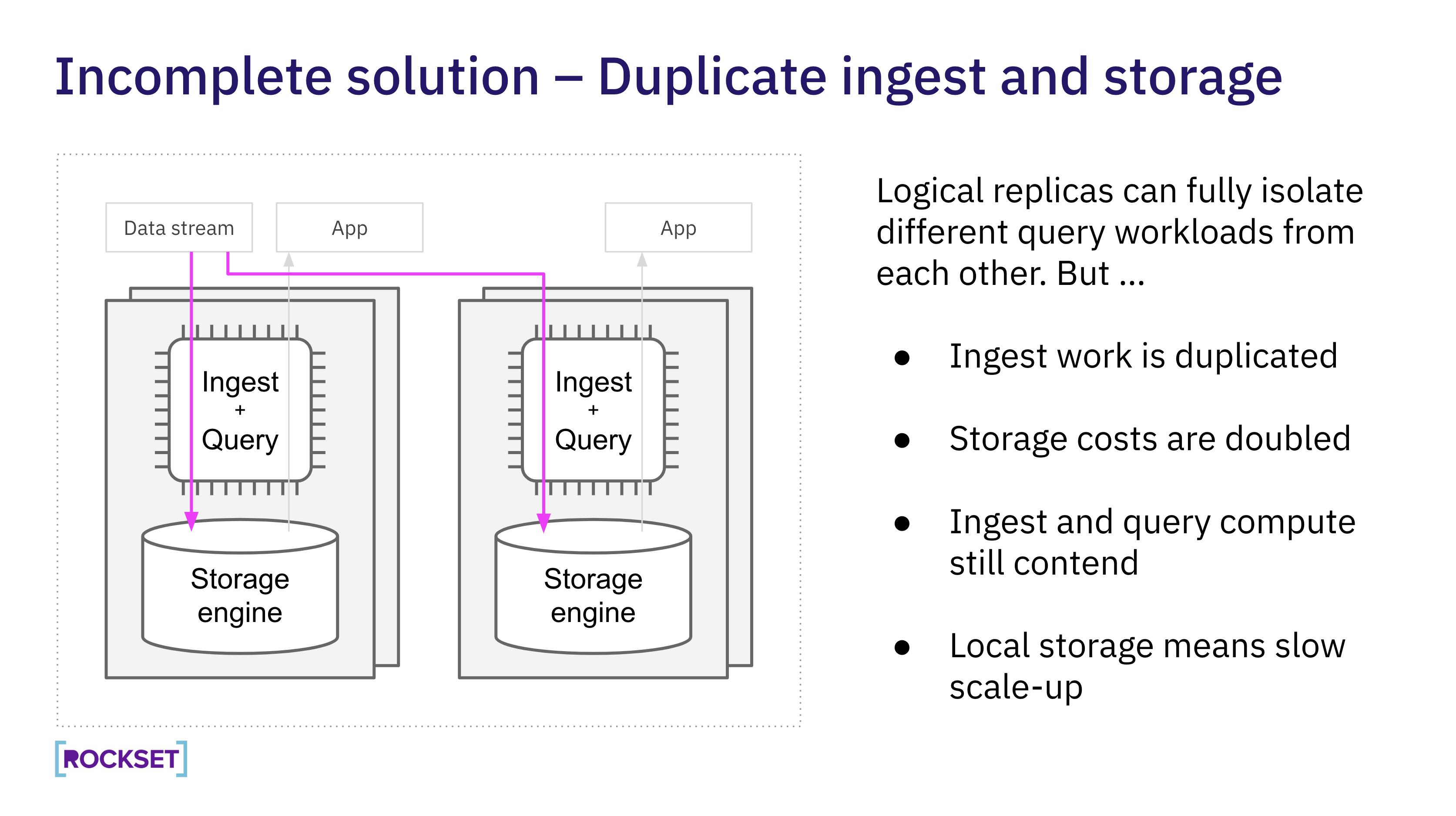

Replicas: Many users attempt to create database replicas for isolation by designating the primary replica for ingestion and secondary replicas for querying. The issue is that each replica requires a lot of duplicate work: every replica needs to process incoming data, store the data and index the data. And the more replicas you have, the more data movement there is, which leads to slow scaling up or down. Replicas work at small scale, but this approach quickly falls apart under the weight of frequent ingestion.

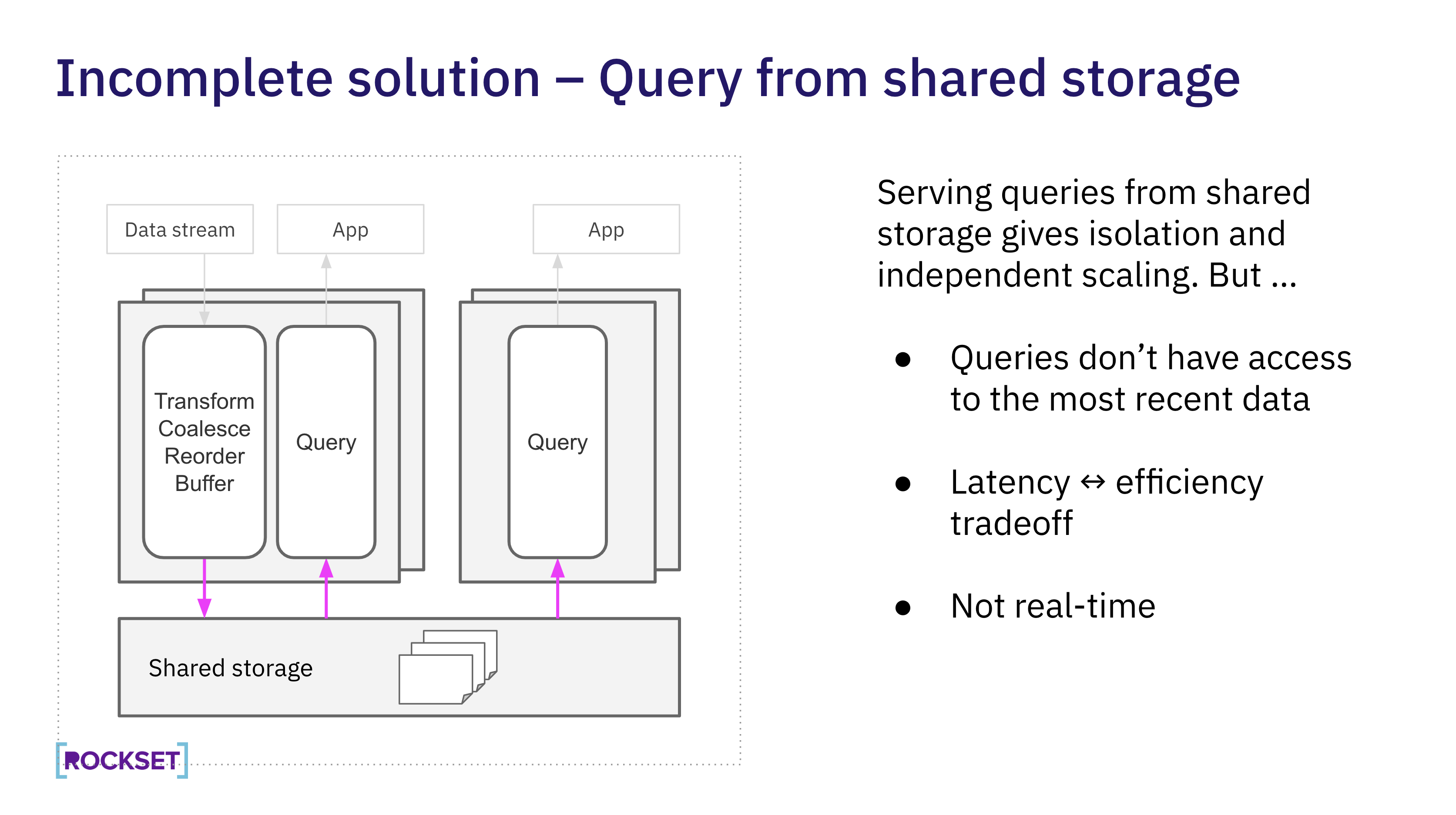

Query directly from shared storage: Cloud data warehouses have separate compute clusters on shared data, solving the problem of query and storage contention. That architecture doesn’t go far enough, as it doesn’t make newly generated data immediately available for querying. In this architecture, newly generated data must be flushed to storage before it is made available for querying, adding latency.

How does Rockset solve the problem?

Rockset introduces compute-compute separation for real-time analytics. Now, you can have a virtual instance, a compute and memory cluster, for streaming ingestion, for queries and for each of multiple applications.

Let’s look under the hood at how Rockset built this new cloud architecture by first separating compute from hot storage and then separating compute from compute.

Separating compute from hot storage

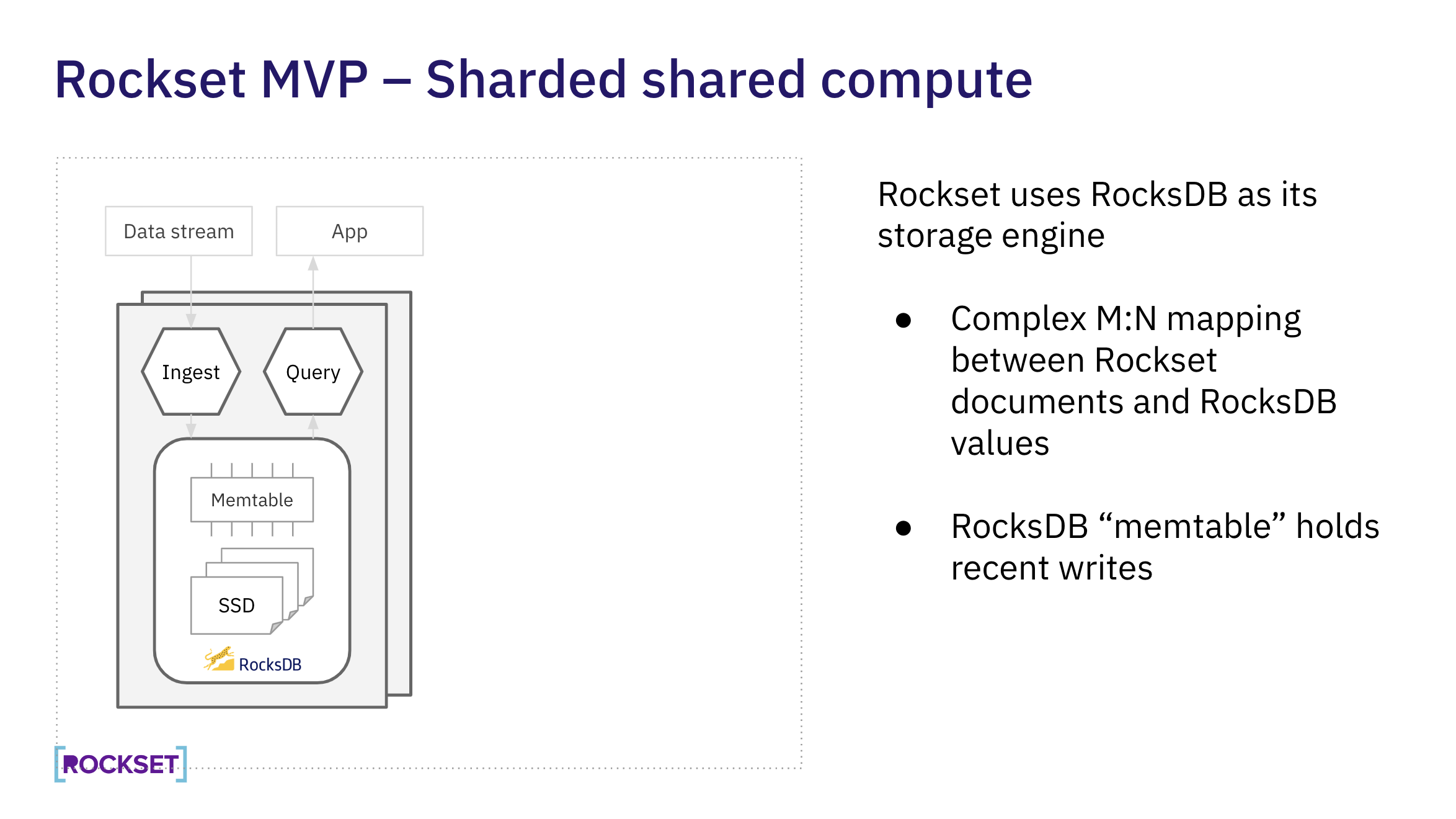

First Generation: Sharded shared compute

Rockset uses RocksDB as its storage engine under the hood. RocksDB is a key-value store developed by Meta and used at Airbnb, LinkedIn, Microsoft, Pinterest, Yahoo and more.

Each RocksDB instance represents a shard of the overall dataset, meaning that the data is distributed among numerous RocksDB instances. There’s a complex M:N mapping between Rockset documents and RocksDB key-values. That’s because Rockset has a Converged Index with a columnar store, row store and search index under the hood. For example, Rockset stores many values from a single column in the same RocksDB key to support fast aggregations.
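To make the M:N mapping concrete, here is a minimal Python sketch under stated assumptions: documents are routed to shards by hashing their id, each shard is modeled as a plain dict standing in for a RocksDB instance, and the key layout (the `"row"`, `"search"` and `"col"` prefixes, `NUM_SHARDS`, `COLUMN_CHUNK_SIZE`) is hypothetical rather than Rockset’s actual encoding.

```python
import hashlib
from dataclasses import dataclass, field

NUM_SHARDS = 4          # hypothetical shard count
COLUMN_CHUNK_SIZE = 3   # hypothetical number of column values packed into one key


@dataclass
class Shard:
    """Stands in for one RocksDB instance (a sorted key-value store; a dict here)."""
    kv: dict = field(default_factory=dict)
    next_seq: int = 0  # shard-local position of the next document


def shard_for(doc_id: str) -> int:
    # Hash the document id so documents spread across shards.
    digest = hashlib.sha256(doc_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


def index_document(shards: list, doc_id: str, doc: dict) -> None:
    """Fan one document out into row-store, search-index and column-store keys."""
    shard = shards[shard_for(doc_id)]
    seq = shard.next_seq
    shard.next_seq += 1
    for name, value in doc.items():
        # Row store: one key per (document, field).
        shard.kv[("row", doc_id, name)] = value
        # Search index: one key per (field, value, document); the stored value is unused.
        shard.kv[("search", name, value, doc_id)] = None
        # Column store: values of one field from several documents share one key.
        chunk_key = ("col", name, seq // COLUMN_CHUNK_SIZE)
        shard.kv.setdefault(chunk_key, []).append((seq, value))


shards = [Shard() for _ in range(NUM_SHARDS)]
for i in range(10):
    index_document(shards, f"doc{i}", {"city": "Paris", "temp": 20 + i})
```

One document fans out into several keys, while a single column key holds values from several documents, so neither direction of the mapping is one-to-one.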

RocksDB memtables are an in-memory cache that stores the latest writes. In this architecture, the query execution path accesses the memtable, ensuring that the most recently generated data is available for querying. Rockset also stores a complete copy of the data on SSD for fast data access.

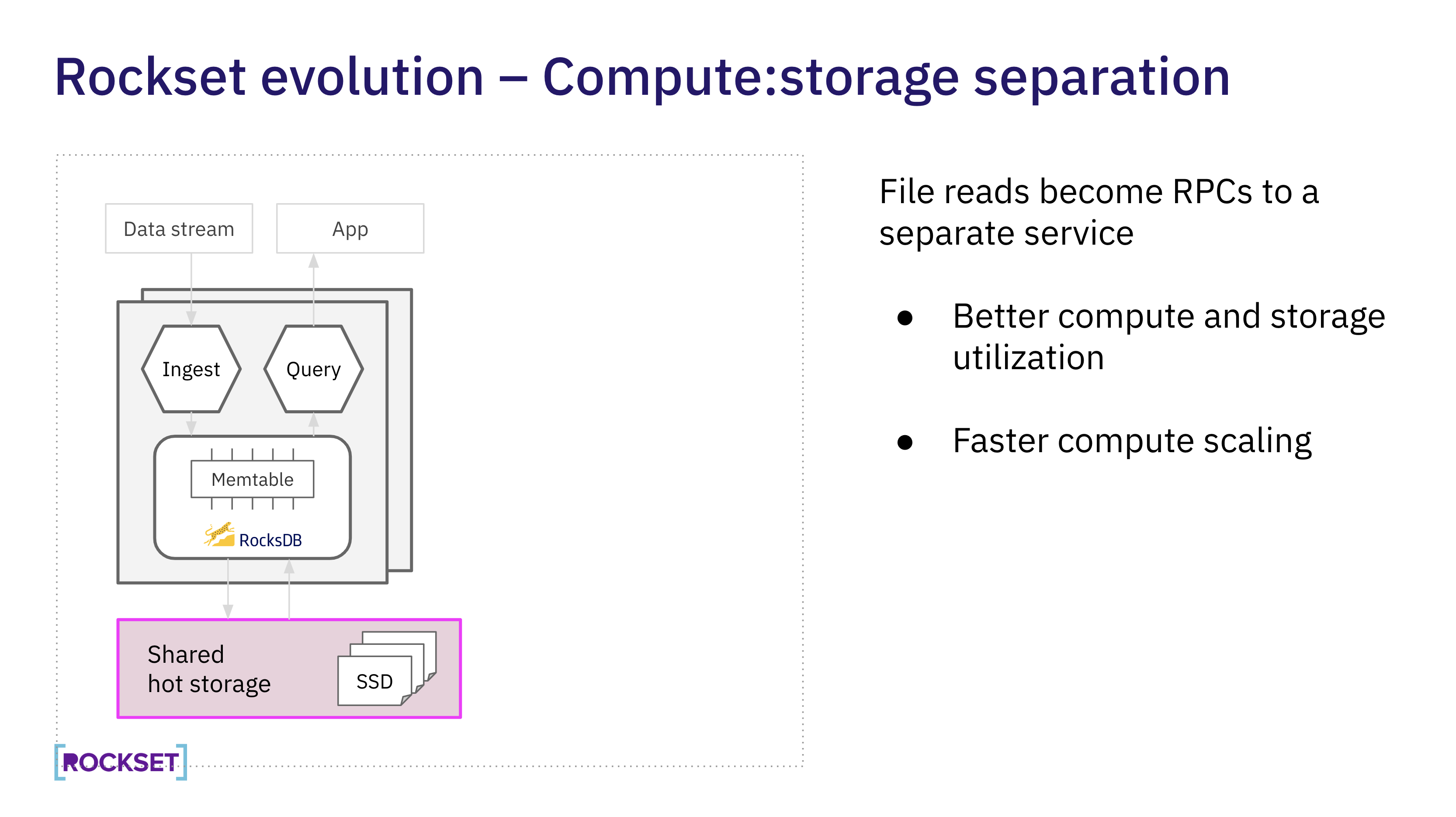

Second Generation: Compute-storage separation

In the second-generation architecture, Rockset separates compute and hot storage for faster scaling up and down of virtual instances. Rockset uses RocksDB’s pluggable file system to create a disaggregated storage layer. The storage layer is a shared hot storage service that is flash-heavy with a direct-attached SSD tier.

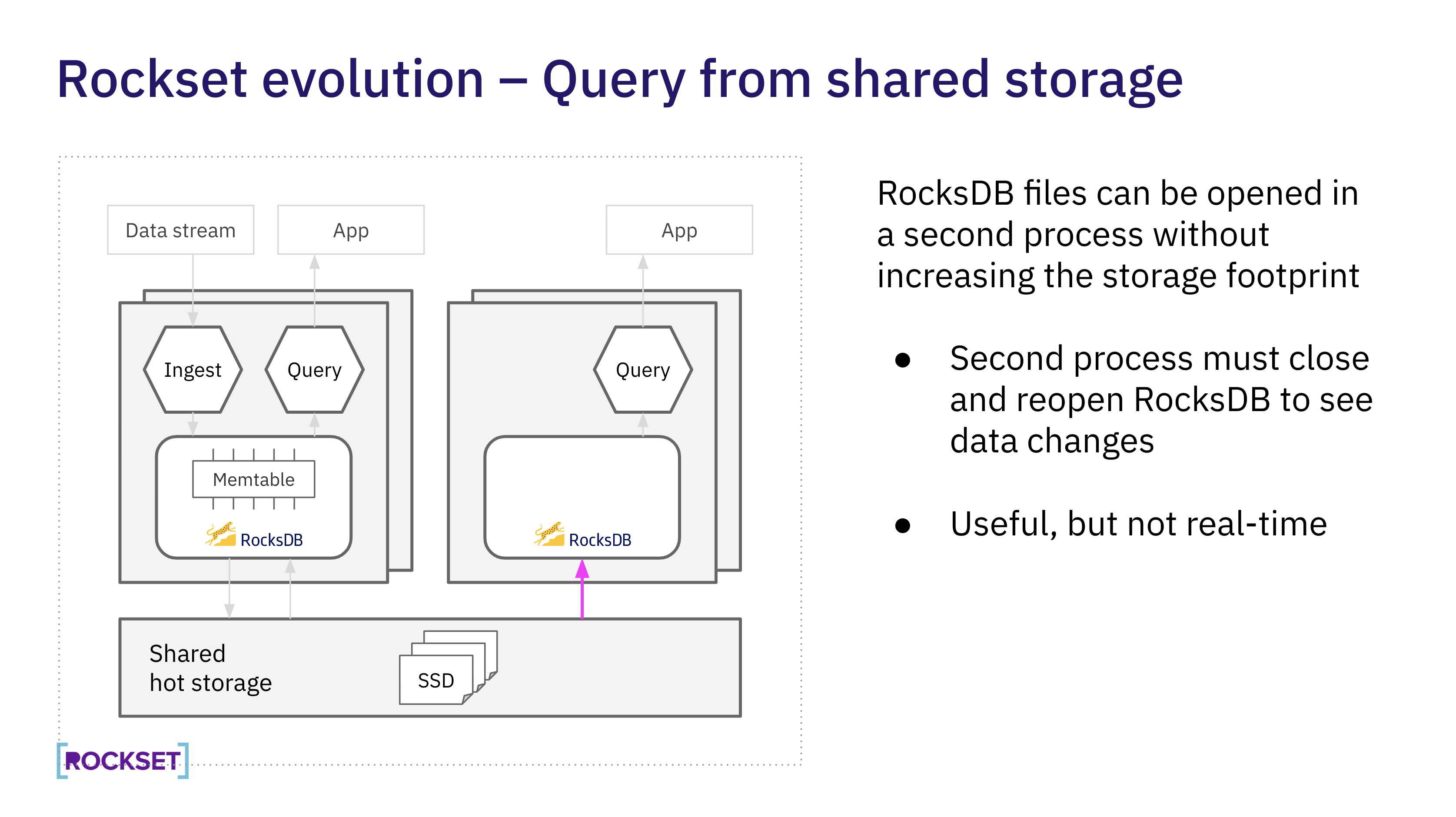

Third Generation: Query from shared storage

In the third generation, Rockset enables the shared hot storage layer to be accessed by multiple virtual instances.

The primary virtual instance is real time, and the secondary instances have a periodic refresh of data. Secondary instances access snapshots from shared hot storage without having access to fine-grained updates from the memtables. This architecture isolates virtual instances for multiple applications, making it possible for Rockset to support both real-time and batch workloads efficiently.

Separating ingest compute from query compute

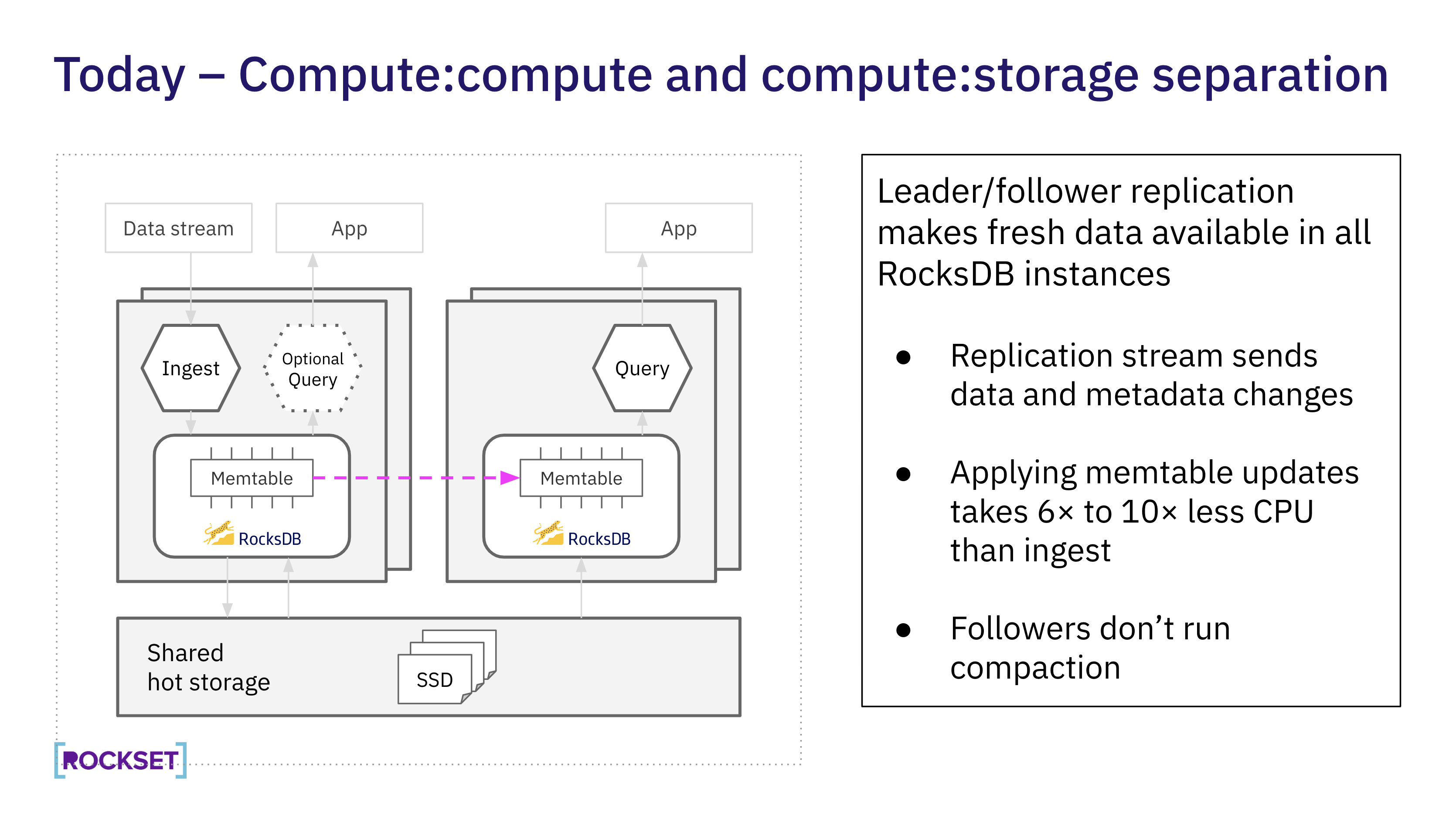

Fourth Generation: Compute-compute separation

In the fourth generation of the Rockset architecture, Rockset separates ingest compute from query compute.

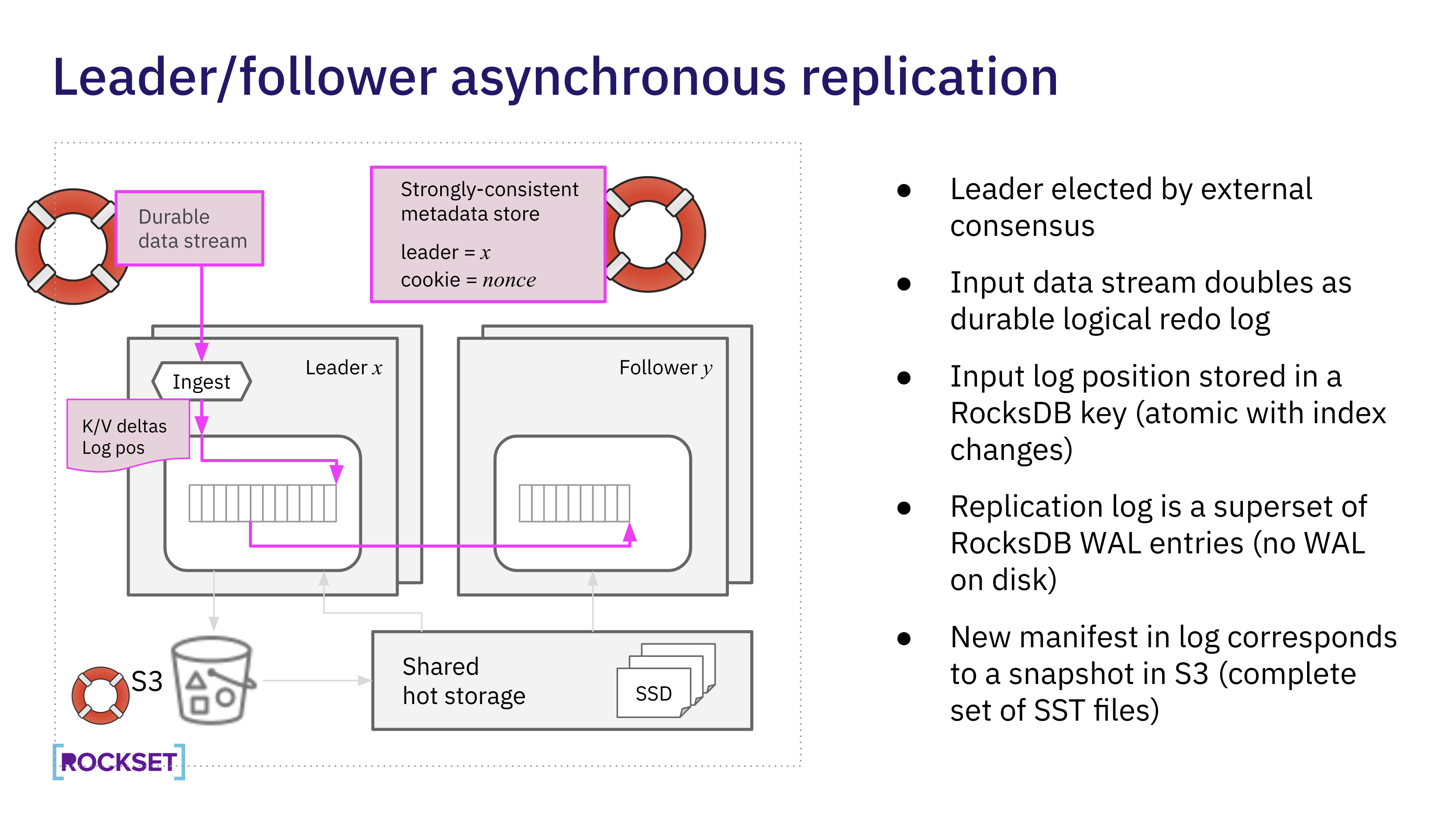

Rockset has built upon previous generations of its architecture to add fine-grained replication of RocksDB memtables between multiple virtual instances. In this leader-follower architecture, the leader is responsible for translating ingested data into index updates and performing RocksDB compaction. This frees the follower from almost all of the compute load of ingest.

The leader creates a replication stream and sends updates and metadata changes to follower virtual instances. Since follower virtual instances no longer need to perform the brunt of the ingestion work, they use 6-10x less compute to process data from the replication stream. The implementation comes with a data delay of less than 100 milliseconds between the leader and follower virtual instances.

Key Design Decisions

Primer on LSM Trees

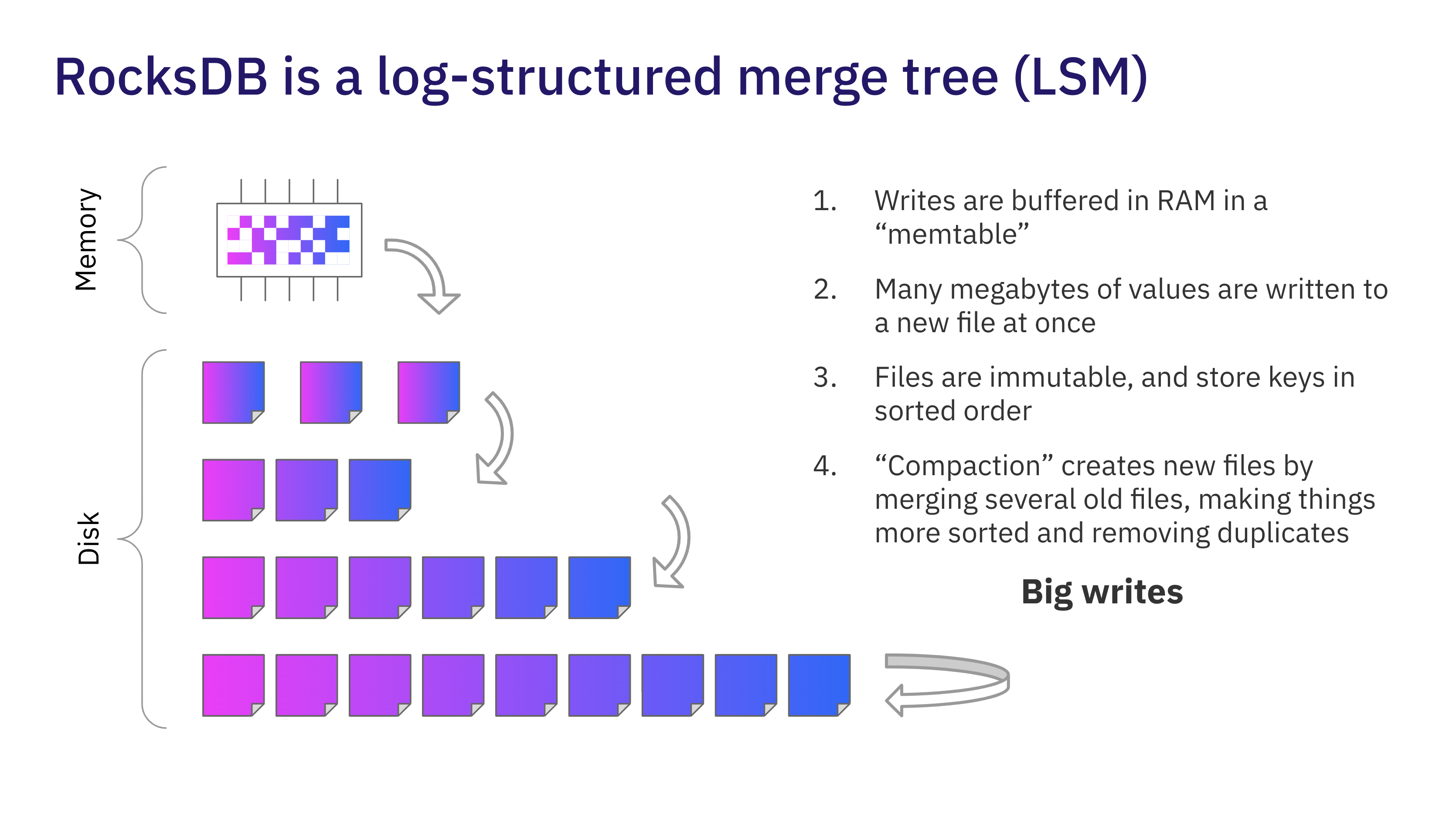

Understanding the key design decisions of compute-compute separation first requires a basic knowledge of the Log-Structured Merge Tree (LSM) architecture in RocksDB. In this architecture, writes are buffered in memory in a memtable. Megabytes of writes accumulate before being flushed to disk. Each file is immutable; rather than updating files in place, new files are created when data is changed. A background compaction process periodically merges files to make storage more efficient. It merges old files into new files, sorting data and removing overwritten values. The benefit of compaction, in addition to minimizing the storage footprint, is that it reduces the number of locations from which data needs to be read.
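The LSM behavior described above can be sketched with a toy class. This is an illustration only, not RocksDB: `TinyLSM`, its `memtable_limit` flush trigger and its single-level `compact()` are simplifications assumed for the example, and deletes are omitted.

```python
import bisect


class TinyLSM:
    """Toy LSM tree: a mutable memtable plus immutable sorted files."""

    def __init__(self, memtable_limit: int = 4):
        self.memtable = {}              # in-memory buffer of the latest writes
        self.memtable_limit = memtable_limit
        self.files = []                 # immutable (sorted keys, values) pairs, oldest first

    def put(self, key, value) -> None:
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self) -> None:
        # Write buffered entries out as a new immutable, sorted file.
        if not self.memtable:
            return
        items = sorted(self.memtable.items())
        self.files.append((tuple(k for k, _ in items), tuple(v for _, v in items)))
        self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        # Newer files shadow older ones; each file is searched by binary search.
        for keys, values in reversed(self.files):
            i = bisect.bisect_left(keys, key)
            if i < len(keys) and keys[i] == key:
                return values[i]
        return None

    def compact(self) -> None:
        # Merge all files into one, keeping only the newest value for each key.
        merged = {}
        for keys, values in self.files:  # oldest to newest, so newer values win
            merged.update(zip(keys, values))
        items = sorted(merged.items())
        self.files = [(tuple(k for k, _ in items), tuple(v for _, v in items))] if items else []
```

With `memtable_limit=2`, writing `a=1, b=2, a=3, c=4` produces two files; `get("a")` must check the newer file first, while after `compact()` every key lives in a single file with the overwritten `a=1` removed.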

The important characteristic of LSM writes is that they are large and latency-insensitive. This gives us a lot of options for making them durable in a cost-effective way.

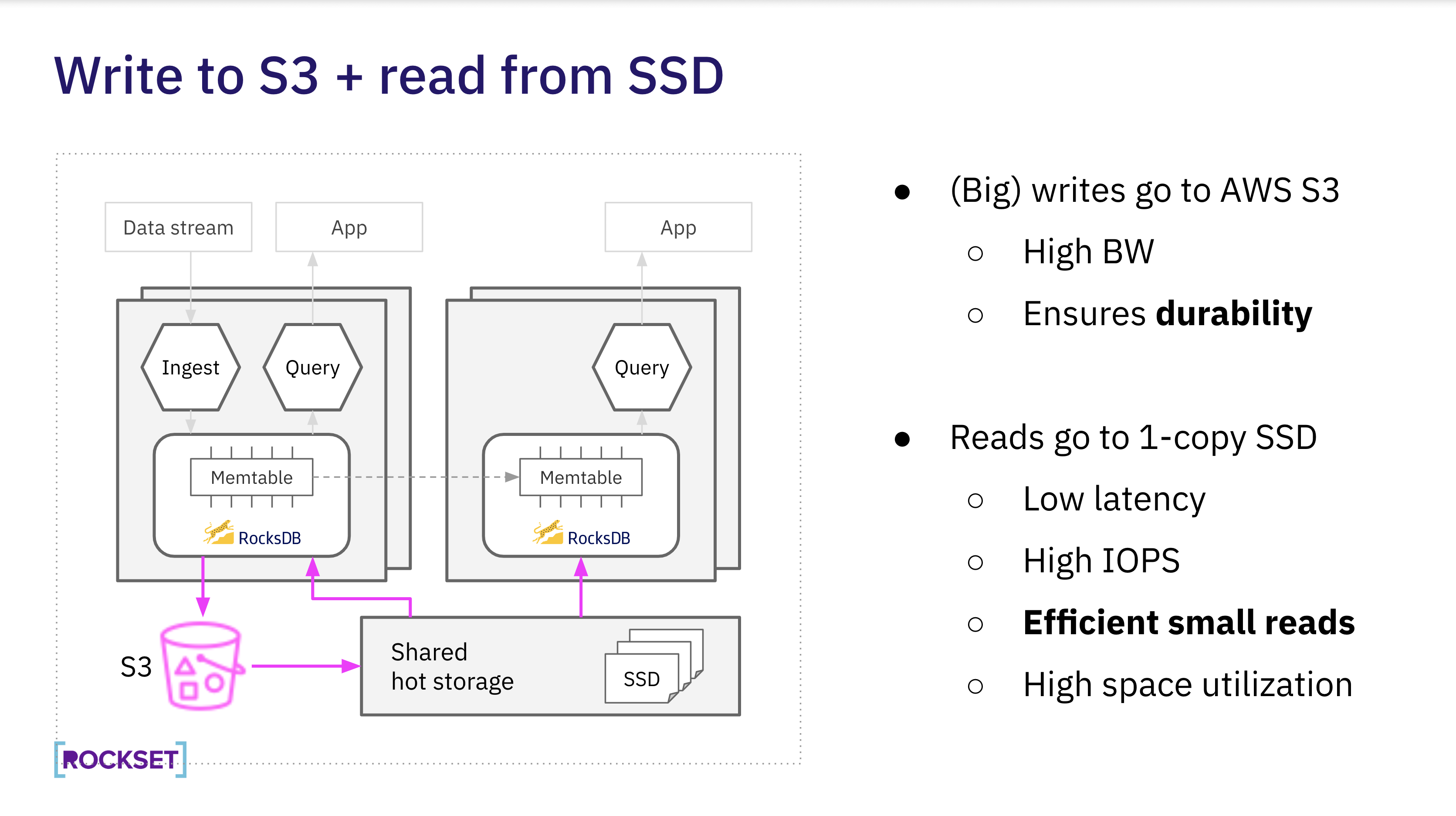

Point reads are important for queries that use Rockset’s inverted indexes. Unlike the large, latency-insensitive writes performed by an LSM, point reads result in small reads that are latency-critical. The core insight of Rockset’s disaggregated storage architecture is that we can simultaneously use two storage systems, one to get durability and one to get efficient small reads.

Large Writes, Small Reads

Rockset stores copies of data in S3 for durability and a single copy in hot storage on SSDs for fast data access. Queries are up to 1000x faster on shared hot storage than on S3.
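A minimal sketch of the two-tier idea, assuming plain dicts as stand-ins for S3 and the SSD hot tier (`TieredFileStore` and its methods are hypothetical): whole immutable files are written to the durable tier, and small point reads are served from the hot tier, falling back to the durable tier only on a miss.

```python
class TieredFileStore:
    """Hypothetical two-tier store: a durable object store plus an SSD hot tier."""

    def __init__(self):
        self.durable = {}       # stand-in for S3: large, latency-insensitive writes
        self.hot = {}           # stand-in for shared hot storage on SSD
        self.durable_reads = 0  # counts slow-path reads (cache misses)

    def write_file(self, name: str, data: bytes) -> None:
        # Whole immutable files go to the durable tier...
        self.durable[name] = data
        # ...and are placed in the hot tier at creation time to avoid cold misses.
        self.hot[name] = data

    def read(self, name: str, offset: int, length: int) -> bytes:
        data = self.hot.get(name)
        if data is None:
            # Miss: fetch from the durable tier and repopulate the hot tier.
            self.durable_reads += 1
            data = self.durable[name]
            self.hot[name] = data
        return data[offset:offset + length]
```

The write path only needs throughput and durability, so it can tolerate object-store latency; the read path touches a few bytes at a time and is served from the hot tier in the common case.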

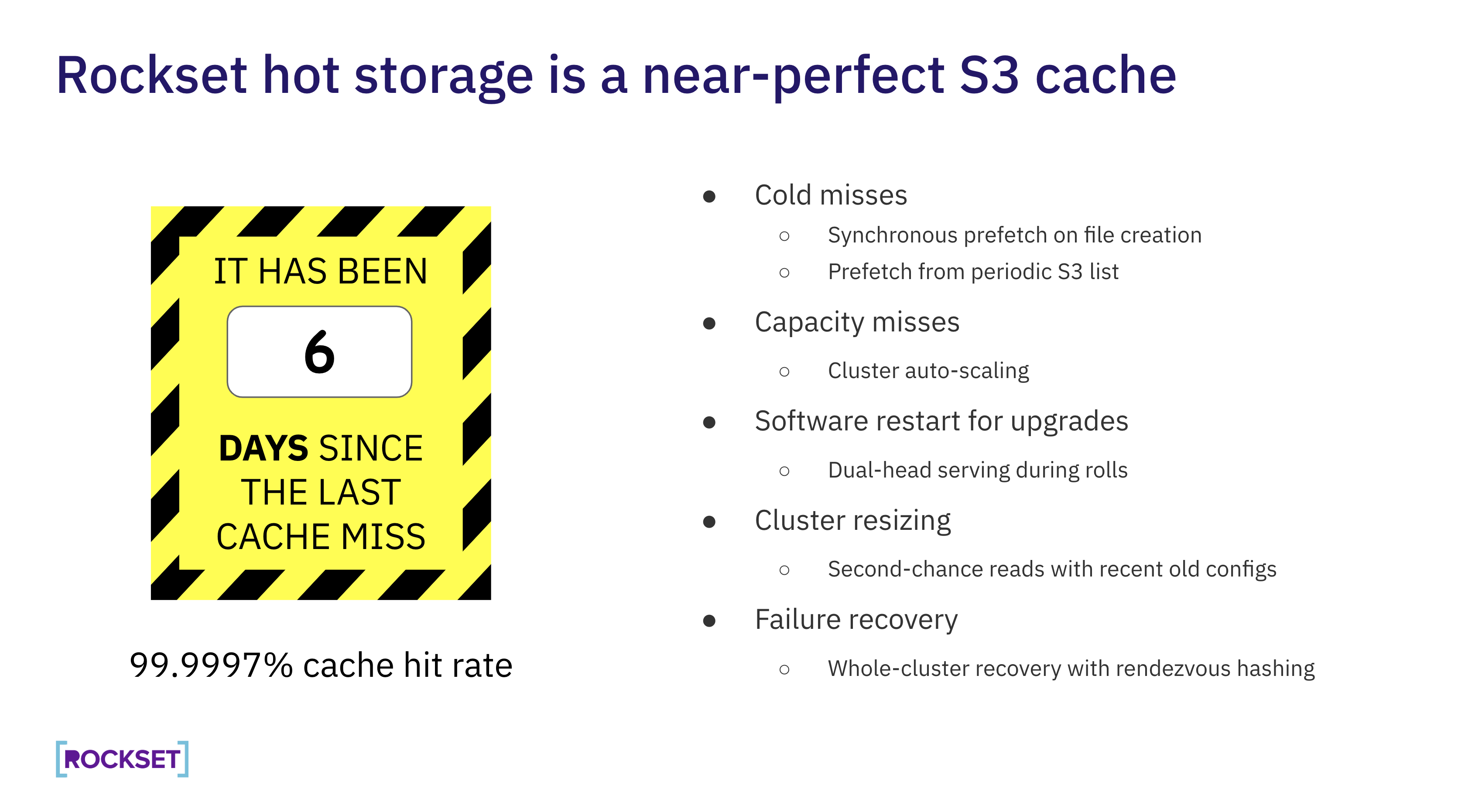

Near-Perfect Hot Storage Cache

As Rockset is a real-time database, latency matters to our customers, and we cannot afford to miss when accessing data from shared hot storage. Rockset hot storage is a near-perfect S3 cache. Most days there are no cache misses anywhere in our production infrastructure.

Here’s how Rockset handles potential cache misses:

- Cold misses: To ensure data is always available in the cache, Rockset does a synchronous prefetch on file creation and scans S3 periodically.

- Capacity misses: Rockset has auto-scaling to ensure that the cluster doesn’t run out of space. As a belt-and-suspenders strategy, if we do run out of disk space, we evict the least-recently accessed data first.

- Software restarts for upgrades: Rockset uses dual-head serving for the rollout of new software. It brings up the new process and makes sure that it’s online before shutting down the old version of the service.

- Cluster resizing: If Rockset can’t find the data it needs during resizing, it performs a second-chance read using the old cluster configuration.

- Failure recovery: If a single machine fails, we distribute the recovery across all the machines in the cluster using rendezvous hashing.
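Rendezvous (highest-random-weight) hashing is a standard technique; the sketch below shows it in Python together with a hypothetical second-chance read during a resize. `owner`, `read_with_second_chance` and the SHA-256 scoring are assumptions for illustration, not Rockset code.

```python
import hashlib


def _score(node: str, key: str) -> int:
    # Deterministic pseudo-random weight for a (node, key) pair.
    digest = hashlib.sha256(f"{node}|{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def owner(key: str, nodes: list) -> str:
    """Rendezvous hashing: the node with the highest score for this key owns it."""
    return max(nodes, key=lambda node: _score(node, key))


def read_with_second_chance(key, new_nodes, old_nodes, node_data):
    """Hypothetical read path during a resize: try the new owner, then the old one."""
    value = node_data[owner(key, new_nodes)].get(key)
    if value is None:
        # Second-chance read using the previous cluster configuration.
        value = node_data[owner(key, old_nodes)].get(key)
    return value
```

The property that matters for failure recovery is that removing a node only moves the keys that node owned (every other key keeps its highest-scoring node), and the moved keys spread across all survivors, so no single machine absorbs the whole recovery.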

Consistency and Durability

Rockset’s leader-follower architecture is designed to be consistent and durable even when there are multiple copies of the data. One way Rockset sidesteps some of the challenges of building a consistent and durable distributed database is by using persistent and durable infrastructure under the hood.

Leader-Follower Architecture

In the leader-follower architecture, the data stream feeding into the ingest process is consistent and durable. It is effectively a durable logical redo log, enabling Rockset to go back to the log to retrieve newly generated data in the case of a failure.

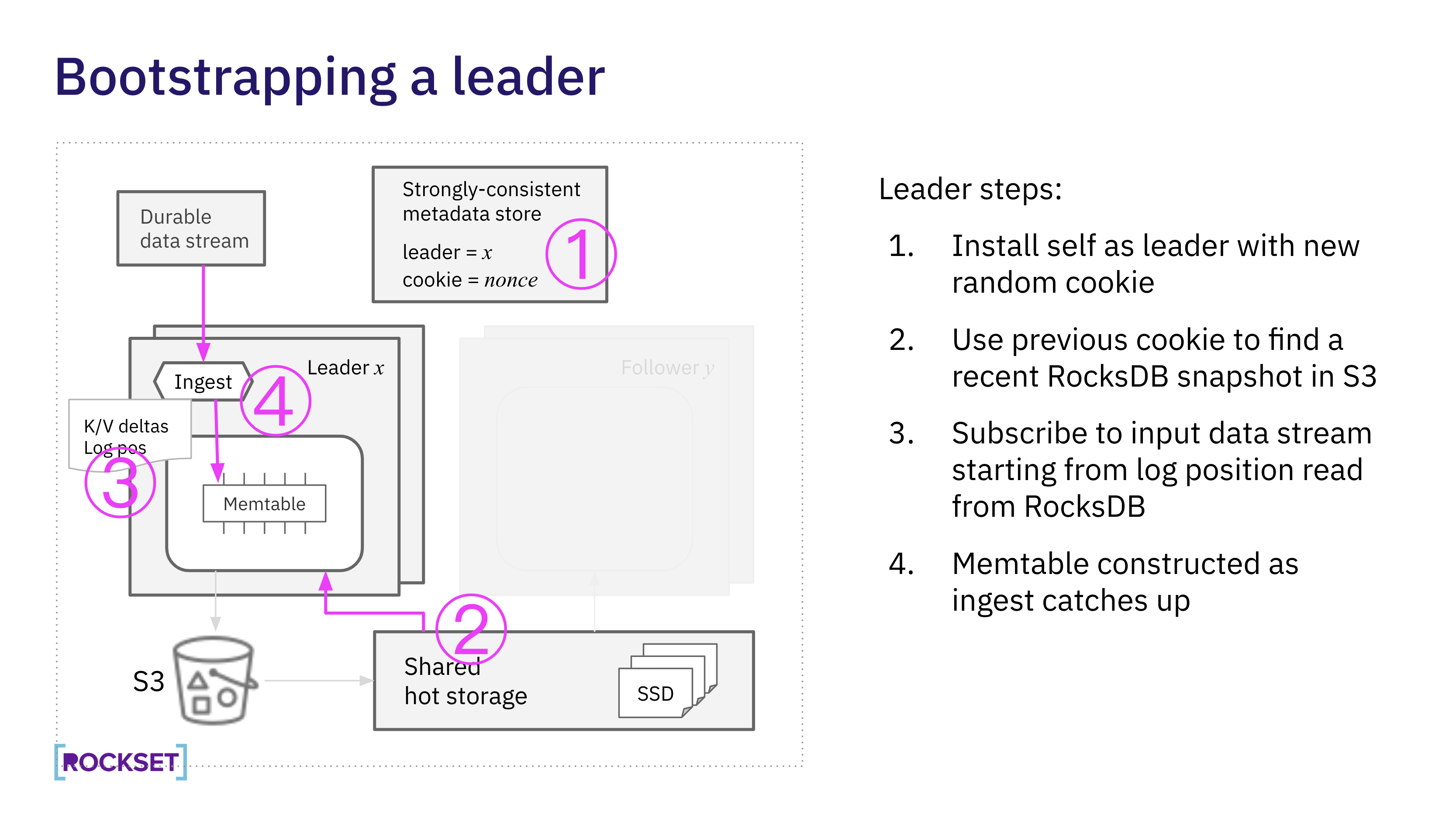

The replication log is a superset of the RocksDB write-ahead logs, augmenting WAL entries with additional events such as leader election. Key/value changes from the replication log are inserted directly into the memtable of the follower. When the log indicates that the leader has written the memtable to disk, however, the follower can simply start reading the file created by the leader, because the leader has already created the file on disaggregated storage. Similarly, when the follower is notified that a compaction has finished, it can start using the new compaction results directly without doing any of the compaction work.

In this architecture, the shared hot storage accomplishes just-in-time physical replication of the bytes of RocksDB’s SST files, including the physical file changes that result from compaction, while the leader/follower replication log carries only logical changes. Together with the durable input data stream, this lets the leader/follower log be lightweight and non-durable.
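The follower side of the replication log can be sketched as an event handler. The event tuples (`"leader_elected"`, `"put"`, `"flush"`, `"compaction_done"`) and the `Follower` class are hypothetical; shared hot storage is modeled as a dict from file name to contents that only the leader writes.

```python
from dataclasses import dataclass, field


@dataclass
class Follower:
    shared_storage: dict                            # file name -> {key: value}, written by the leader
    memtable: dict = field(default_factory=dict)    # key -> (seq, value)
    live_files: list = field(default_factory=list)  # file names, oldest first
    leader_cookie: str = ""

    def apply(self, event: tuple) -> None:
        kind = event[0]
        if kind == "leader_elected":
            self.leader_cookie = event[1]
        elif kind == "put":
            # Logical key/value change: insert straight into the memtable.
            _, seq, key, value = event
            self.memtable[key] = (seq, value)
        elif kind == "flush":
            # The leader already wrote this file to shared storage: start reading it
            # and drop the memtable entries it now covers.
            _, upto_seq, file_name = event
            self.live_files.append(file_name)
            self.memtable = {k: sv for k, sv in self.memtable.items() if sv[0] > upto_seq}
        elif kind == "compaction_done":
            # Swap file references; the merge work was done by the leader.
            # Assumes `inputs` are contiguous in live_files and listed oldest first.
            _, inputs, output = event
            pos = self.live_files.index(inputs[0])
            remaining = [f for f in self.live_files if f not in inputs]
            remaining.insert(pos, output)
            self.live_files = remaining

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key][1]
        for name in reversed(self.live_files):  # newer files shadow older ones
            data = self.shared_storage[name]
            if key in data:
                return data[key]
        return None
```

The follower never merges or rewrites data: flushes and compactions only change which shared files it reads, which is why the replication log can stay small and purely logical.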

Bootstrapping a leader

Rockset uses an external strongly consistent metadata store to perform leader election. Each time a leader is elected, it picks a cookie, a random nonce that is included in the S3 object path for all the actions taken by that leader. The cookie ensures that even if an old leader is still running, its S3 writes won’t interfere with the new leader, and its actions will be ignored by followers.
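A minimal sketch of cookie-based fencing, assuming a dict as the object store and a toy `MetadataStore` in place of the external strongly consistent store (all names here are hypothetical). Each leader writes under a path prefixed by its random cookie, so a stale leader’s writes land in a different namespace, and followers only read the namespace of the currently elected cookie. How a new leader adopts files from its predecessor’s state is not modeled.

```python
import secrets


class MetadataStore:
    """Toy stand-in for the external strongly consistent metadata store."""

    def __init__(self):
        self.term = 0
        self.cookie = None

    def elect_leader(self) -> str:
        # Each election bumps the term and records a fresh random nonce (the cookie).
        self.term += 1
        self.cookie = secrets.token_hex(8)
        return self.cookie


class Leader:
    def __init__(self, cookie: str, object_store: dict):
        self.cookie = cookie
        self.object_store = object_store

    def write_file(self, name: str, data: bytes) -> None:
        # Every object this leader writes lives under its own cookie.
        self.object_store[f"{self.cookie}/{name}"] = data


def visible_files(object_store: dict, metadata: MetadataStore) -> list:
    """Followers only read objects written under the current leader's cookie."""
    prefix = f"{metadata.cookie}/"
    return sorted(key[len(prefix):] for key in object_store if key.startswith(prefix))
```

If an old leader keeps running after a new election and writes `000002.sst`, its object sits under the old cookie, so it neither overwrites the new leader’s `000002.sst` nor shows up in `visible_files`.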

The input log position from the durable logical redo log is stored in a RocksDB key to ensure exactly-once processing of the input stream. This means it is safe to bootstrap a leader from any recent valid RocksDB state.
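Why storing the input position makes bootstrapping safe can be shown with a toy sketch. It assumes the data updates and the offset are committed in one atomic batch (modeled here by a single `dict.update`; in RocksDB an atomic write batch would play this role). `Shard`, `OFFSET_KEY`, `ingest` and `bootstrap_leader` are hypothetical names, and the counter increments are deliberately non-idempotent, so any double processing would show up.

```python
import copy

OFFSET_KEY = "__input_offset__"  # hypothetical reserved key holding the input log position


class Shard:
    """Toy shard state: counters plus the committed input log position."""

    def __init__(self):
        self.kv = {}

    def write_batch(self, updates: dict) -> None:
        # Models an atomic batch: data and offset become visible together.
        self.kv.update(updates)


def ingest(shard: Shard, log: list, batch_size: int = 2) -> None:
    """Apply (key, delta) records from the durable input log, resuming at the stored offset."""
    start = shard.kv.get(OFFSET_KEY, 0)
    for i in range(start, len(log), batch_size):
        batch = log[i:i + batch_size]
        updates = {}
        for key, delta in batch:
            updates[key] = updates.get(key, shard.kv.get(key, 0)) + delta
        updates[OFFSET_KEY] = i + len(batch)
        shard.write_batch(updates)


def bootstrap_leader(snapshot: Shard) -> Shard:
    # A new leader starts from a recent valid state; the stored offset says where to resume.
    return copy.deepcopy(snapshot)
```

Because the offset travels with the data it describes, a leader bootstrapped from an older snapshot replays exactly the records that snapshot has not yet seen, and re-running `ingest` on an up-to-date shard is a no-op.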

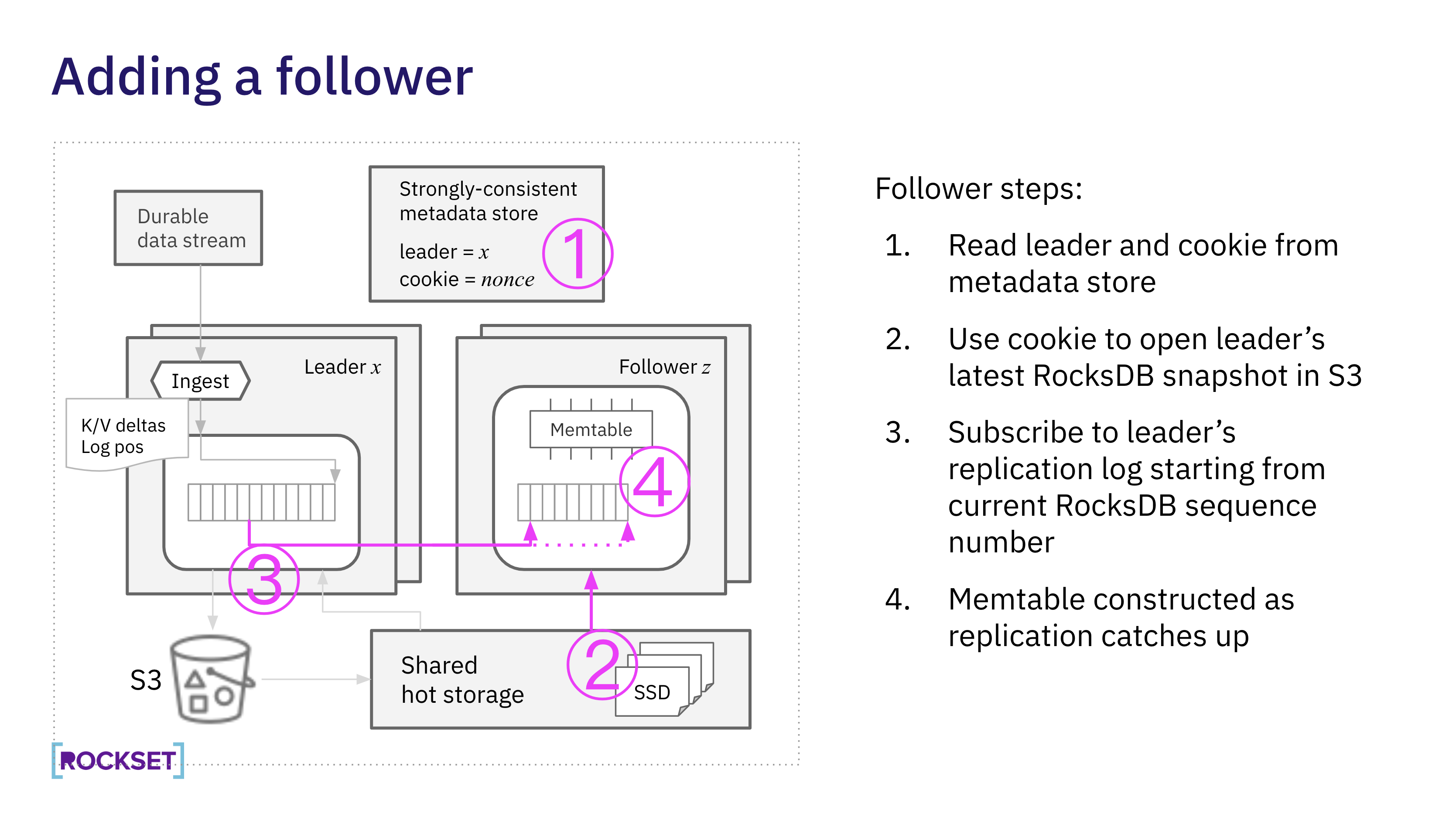

Adding a follower

Followers use the leader’s cookie to find the most recent RocksDB snapshot in shared hot storage and subscribe to the leader’s replication log. The follower then constructs a memtable with the most recently generated data from the leader’s replication log.
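A hypothetical sketch of the follower startup sequence: the snapshot naming scheme `<cookie>/snapshot-<seq>`, the log entry shape `(seq, key, value)` and `add_follower` are assumptions made for illustration. The follower picks the newest snapshot under the leader’s cookie and then replays only log entries newer than that snapshot into its memtable.

```python
def add_follower(object_store: dict, cookie: str, replication_log: list):
    """Return (snapshot file list, memtable) for a new follower of the leader with `cookie`."""
    prefix = f"{cookie}/snapshot-"
    seqs = [int(key[len(prefix):]) for key in object_store if key.startswith(prefix)]
    if not seqs:
        raise LookupError(f"no snapshot found for cookie {cookie!r}")
    base_seq = max(seqs)
    # Newest snapshot: the list of SST files the follower reads from shared hot storage.
    files = object_store[f"{prefix}{base_seq}"]
    # Rebuild the memtable from log entries newer than the snapshot.
    memtable = {}
    for seq, key, value in replication_log:
        if seq > base_seq:
            memtable[key] = value
    return files, memtable
```

Snapshots written under a previous leader’s cookie are simply never matched by the prefix, which mirrors how followers ignore a stale leader.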

Real-World Implications

We’ve walked through the implementation of compute-compute separation and how it solves for:

Streaming ingest and query compute isolation: Isolation solves the problem of a flash flood of data monopolizing your compute and jeopardizing your queries. The same applies on the query side if your application sees a burst of users. Scale each independently so you can right-size the virtual instance for your ingest or query workload.

Multiple applications on shared real-time data: You can spin up or down any number of virtual instances to segregate application workloads. Multiple production applications can share the same dataset, eliminating the need for replicas.

Linear concurrency scaling: You right-size the virtual instance based on single-query latency. Then you can autoscale for concurrency, spinning up additional virtual instances of the same size for linear scaling.
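As a back-of-the-envelope illustration of linear concurrency scaling, assume Little’s law for a single instance (throughput = concurrent queries / latency) and that identical instances add throughput linearly. The numbers and function names below are hypothetical.

```python
import math


def per_instance_qps(concurrent_queries: int, latency_ms: float) -> float:
    # Little's law: throughput = concurrency / latency (latency converted to seconds).
    return concurrent_queries * 1000 / latency_ms


def instances_needed(target_qps: float, qps_per_instance: float) -> int:
    # Identical instances are assumed to add throughput linearly.
    if qps_per_instance <= 0:
        raise ValueError("qps_per_instance must be positive")
    return max(1, math.ceil(target_qps / qps_per_instance))


qps = per_instance_qps(concurrent_queries=4, latency_ms=80)
print(qps, instances_needed(1200, qps))
```

For example, an instance that runs 4 queries concurrently at 80 ms each sustains 50 QPS, so a 1,200 QPS target calls for 24 such instances.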

We just scratched the surface of Rockset’s compute-compute architecture for real-time analytics. You can learn more by watching the tech talk or seeing how the architecture works in a step-by-step product demo.