Imagine if you could automate the tedious job of analyzing earnings reports, extracting key insights, and making informed recommendations, all without lifting a finger. In this article, we’ll walk you through how to create a multi-agent system using OpenAI’s Swarm framework, designed to handle these exact tasks. You’ll learn how to set up and orchestrate three specialized agents: one to summarize earnings reports, another to analyze sentiment, and a third to generate actionable recommendations. By the end of this tutorial, you’ll have a scalable, modular solution to streamline financial analysis, with potential applications beyond earnings reports.

Learning Outcomes

- Understand the fundamentals of OpenAI’s Swarm framework for multi-agent systems.

- Learn how to create agents for summarization, sentiment analysis, and recommendations.

- Explore the use of modular agents for earnings report analysis.

- Securely manage API keys using a .env file.

- Implement a multi-agent system to automate earnings report processing.

- Gain insights into real-world applications of multi-agent systems in finance.

- Set up and execute a multi-agent workflow using OpenAI’s Swarm framework.

This article was published as a part of the Data Science Blogathon.

What is OpenAI’s Swarm?

Swarm is a lightweight, experimental framework from OpenAI that focuses on multi-agent orchestration. It lets us coordinate multiple agents, each handling a specific task such as summarizing content, performing sentiment analysis, or recommending actions. Each agent has a name, instructions that act as its system prompt, and a list of Python functions the model can call as tools. In our case, we’ll design three agents:

- Summary Agent: Provides a concise summary of the earnings report.

- Sentiment Agent: Analyzes the sentiment of the report.

- Recommendation Agent: Recommends actions based on the sentiment analysis.

Use Cases and Benefits of Multi-Agent Systems

You can extend the multi-agent system built here to various use cases.

- Portfolio Management: Automate the monitoring of multiple company reports and suggest portfolio changes based on sentiment trends.

- News Summarization for Finance: Integrate real-time news feeds with these agents to detect potential market movements early.

- Sentiment Tracking: Use sentiment analysis to help anticipate stock movements or crypto trends based on positive or negative market news.

By splitting tasks into modular agents, you can reuse individual components across different projects, which keeps the system flexible and scalable.
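To make the portfolio-monitoring use case concrete, here is a minimal sketch. It applies the same kind of keyword check that the Sentiment Agent uses later to several reports at once, then tallies the labels. The tickers, report texts, and the `keyword_sentiment` and `portfolio_sentiment` helpers are hypothetical. A real system would run the Swarm agents instead of a local function.

```python
from collections import Counter


def keyword_sentiment(report_text: str, keyword: str = "revenue") -> str:
    # Keyword rule in the spirit of the Sentiment Agent's tool (case-insensitive here)
    return "positive" if keyword in report_text.lower() else "negative"


def portfolio_sentiment(reports: dict[str, str]) -> tuple[dict[str, str], Counter]:
    # Hypothetical helper: label every company's report, then tally the labels
    labels = {ticker: keyword_sentiment(text) for ticker, text in reports.items()}
    return labels, Counter(labels.values())


reports = {  # made-up example texts, not real filings
    "XYZ": "Company XYZ reported a 20% increase in revenue.",
    "ABC": "Company ABC reported lower sales this quarter.",
}
labels, tally = portfolio_sentiment(reports)
print(labels)  # {'XYZ': 'positive', 'ABC': 'negative'}
```

Because each label comes from a standalone function, you could later swap in an agent call per report without changing the tallying logic.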

Step 1: Setting Up Your Project Environment

Before we dive into coding, it’s essential to lay a solid foundation for the project. In this step, you’ll create the necessary folders and files and install the required dependencies so everything works smoothly.
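The shell commands in this step assume a Unix-like shell, because `touch` is not available in Windows cmd. As a cross-platform alternative, the following minimal pathlib sketch creates the same folders and empty files. The `scaffold` function is our own illustration, not part of the tutorial.

```python
from pathlib import Path


def scaffold(root: str = "earnings_report") -> list[Path]:
    """Create the tutorial's folders and empty files, returning the file paths."""
    base = Path(root)
    # Same layout as the mkdir commands: agents/ and utils/ under the project root
    for folder in ("agents", "utils"):
        (base / folder).mkdir(parents=True, exist_ok=True)
    files = [
        base / "main.py",
        base / "agents" / "__init__.py",
        base / "utils" / "__init__.py",
        base / ".gitignore",
    ]
    for path in files:
        path.touch(exist_ok=True)  # like `touch`: creates if missing, never truncates
    return files
```

Call `scaffold()` once from the folder that should contain the project. Running it again is harmless because existing folders and files are left in place.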

```bash
mkdir earnings_report
cd earnings_report
mkdir agents utils
touch main.py agents/__init__.py utils/__init__.py .gitignore
```

Install Dependencies

Swarm’s README lists Python 3.10+ as a requirement.

```bash
pip install git+https://github.com/openai/swarm.git openai python-dotenv
```

Step 2: Store Your API Key Securely

Security is key, especially when working with sensitive data like API keys. This step shows you how to store your OpenAI API key securely in a .env file so your credentials stay safe. Create a file named .env in the project root containing:

```
OPENAI_API_KEY=your-openai-api-key-here
```

This keeps your API key out of your code. Also add .env to your .gitignore so the key is never committed to version control.
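To build intuition for what `load_dotenv()` does later, here is a deliberately simplified standard-library parser for `KEY=VALUE` lines. It is only a sketch: python-dotenv also handles `export` prefixes, escapes, variable expansion, and more, so use the real package in the project. The `parse_env_file` and `load_env` names are hypothetical. Like `load_dotenv()` with its default settings, `load_env` does not overwrite variables that are already set.

```python
import os
from pathlib import Path


def parse_env_file(path: str) -> dict[str, str]:
    """Simplified .env reader: KEY=VALUE per line; blank lines and '#' comments skipped."""
    values: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes, e.g. KEY="abc" -> abc
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_env(path: str = ".env") -> None:
    # Keep variables that are already set, as load_dotenv does by default
    for key, value in parse_env_file(path).items():
        os.environ.setdefault(key, value)
```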

Step 3: Implement the Agents

Now it’s time to bring your agents to life! In this step, you’ll create three separate agents: one for summarizing the earnings report, another for sentiment analysis, and a third for generating actionable recommendations based on the sentiment.
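Each tool function in this step takes a parameter named `context_variables`. Based on our reading of Swarm’s source, the framework checks each tool’s parameters. When one is named `context_variables`, Swarm passes in the dict given to `run()` and hides that parameter from the model, so the model never has to supply the report text itself. The sketch below imitates that idea with `inspect.signature`. `call_tool` is a hypothetical helper, not Swarm’s actual code.

```python
import inspect
from typing import Any, Callable

CTX_NAME = "context_variables"


def call_tool(func: Callable[..., Any], model_args: dict[str, Any],
              context_variables: dict[str, Any]) -> Any:
    """Call a tool with the model's arguments, injecting shared context if requested."""
    args = dict(model_args)
    if CTX_NAME in inspect.signature(func).parameters:
        args[CTX_NAME] = context_variables  # supplied by the framework, not the model
    return func(**args)


def summarize_report(context_variables):
    return f"Summary: {context_variables['report_text'][:100]}..."


print(call_tool(summarize_report, {}, {"report_text": "Company XYZ grew."}))
# Summary: Company XYZ grew....
```

Tools that don’t declare the parameter are called with the model’s arguments only, which is why the agent functions below can stay plain Python.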

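Summary Agent

The Summary Agent’s tool function returns the first 100 characters of the earnings report as a simple summary. Swarm passes that return value back to the model, which writes the agent’s final reply.

Create agents/summary_agent.py: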

```python
from swarm import Agent


def summarize_report(context_variables):
    # Swarm injects context_variables; return the first 100 characters as a summary
    report_text = context_variables["report_text"]
    return f"Summary: {report_text[:100]}..."


summary_agent = Agent(
    name="Summary Agent",
    instructions="Summarize the key points of the earnings report.",
    functions=[summarize_report],
)
```
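Slicing at a fixed 100 characters can cut a word in half. If that matters, a small variation trims back to the last space before the limit. This is our own variation, not part of the original agent. `summarize_report_words` and `SUMMARY_LIMIT` are hypothetical names. Because the function still takes only `context_variables`, it should be usable in `functions=[...]` in place of `summarize_report`.

```python
SUMMARY_LIMIT = 100  # same cut-off as summarize_report


def summarize_report_words(context_variables):
    """Variant of summarize_report that avoids cutting a word in half."""
    report_text = context_variables["report_text"].strip()
    if len(report_text) <= SUMMARY_LIMIT:
        return f"Summary: {report_text}"  # short enough, nothing to trim
    cut = report_text[:SUMMARY_LIMIT]
    # Back up to the last space; keep the hard cut if the text has no spaces
    if " " in cut:
        cut = cut[: cut.rindex(" ")]
    return f"Summary: {cut.rstrip()}..."
```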

Sentiment Agent

This agent checks whether the word “revenue” appears in the report to decide whether the sentiment is positive.

Create agents/sentiment_agent.py:

```python
from swarm import Agent


def analyze_sentiment(context_variables):
    # Naive rule: the report counts as "positive" if it mentions revenue at all
    report_text = context_variables["report_text"]
    sentiment = "positive" if "revenue" in report_text.lower() else "negative"
    return f"The sentiment of the report is: {sentiment}"


sentiment_agent = Agent(
    name="Sentiment Agent",
    instructions="Analyze the sentiment of the report.",
    functions=[analyze_sentiment],
)
```
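The single-keyword rule is intentionally simple. A sentence such as “revenue fell sharply” would still be labeled positive. A slightly sturdier sketch counts matches from small positive and negative word lists in lower-cased text. The word lists and the `score_sentiment` name are illustrative assumptions, not a validated financial lexicon. A production system would more likely let the model or a trained classifier judge the tone.

```python
import re

# Illustrative word lists only; tune or replace them for real use
POSITIVE = {"increase", "growth", "grew", "profit", "beat", "record"}
NEGATIVE = {"decrease", "decline", "loss", "fell", "miss", "weak"}


def score_sentiment(context_variables):
    words = re.findall(r"[a-z]+", context_variables["report_text"].lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        sentiment = "positive"
    elif score < 0:
        sentiment = "negative"
    else:
        sentiment = "neutral"
    # Keep the original output format so downstream parsing still works
    return f"The sentiment of the report is: {sentiment}"
```

With the Recommendation Agent as written, a “neutral” result falls through to “Hold”.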

Recommendation Agent

Based on the sentiment, this agent suggests “Buy” or “Hold”.

Create agents/recommendation_agent.py:

```python
from swarm import Agent


def generate_recommendation(context_variables):
    # "Buy" on positive sentiment, otherwise "Hold"
    sentiment = context_variables["sentiment"]
    recommendation = "Buy" if sentiment == "positive" else "Hold"
    return f"My recommendation is: {recommendation}"


recommendation_agent = Agent(
    name="Recommendation Agent",
    instructions="Recommend actions based on the sentiment analysis.",
    functions=[generate_recommendation],
)
```
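The `sentiment` value is parsed from a model reply, so it may arrive as `"Positive."` or with stray whitespace. In that case the strict `== "positive"` comparison would fall through to “Hold”. A defensive sketch normalizes the value first. `normalize_sentiment` is a hypothetical helper you could call at the top of `generate_recommendation`.

```python
import string


def normalize_sentiment(raw: str) -> str:
    # Trim whitespace and surrounding punctuation, then lower-case: " Positive. " -> "positive"
    return raw.strip().strip(string.punctuation + string.whitespace).lower()


def generate_recommendation(context_variables):
    sentiment = normalize_sentiment(context_variables["sentiment"])
    recommendation = "Buy" if sentiment == "positive" else "Hold"
    return f"My recommendation is: {recommendation}"
```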

Step 4: Add a Helper Function for File Loading

Loading data reliably is a critical part of any project. Here, you’ll create a helper function that reads the earnings report file, making it easier for your agents to access the data. Create utils/helpers.py:

```python
def load_earnings_report(filepath):
    # Read the whole report into a single string
    with open(filepath, "r") as file:
        return file.read()
```
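A slightly more defensive variant of the helper is shown below as our own suggestion. It reads UTF-8 explicitly, reports the full path when the file is missing, and rejects an empty report, since the agents would have nothing to analyze. The function keeps the same name and signature, so `main.py` would not need to change.

```python
from pathlib import Path


def load_earnings_report(filepath):
    """Return the report text, failing early with a clear message if it is unusable."""
    path = Path(filepath)
    if not path.is_file():
        raise FileNotFoundError(f"Earnings report not found: {path.resolve()}")
    text = path.read_text(encoding="utf-8").strip()
    if not text:
        raise ValueError(f"Earnings report is empty: {path}")
    return text
```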

Step 5: Tie Everything Together in main.py

With your agents ready, it’s time to tie everything together. In this step, you’ll write the main script that orchestrates the agents, letting them work together to analyze the earnings report and provide insights. Put the following in main.py:

```python
import os

from dotenv import load_dotenv
from swarm import Swarm

from agents.summary_agent import summary_agent
from agents.sentiment_agent import sentiment_agent
from agents.recommendation_agent import recommendation_agent
from utils.helpers import load_earnings_report

# Load environment variables (including OPENAI_API_KEY) from the .env file
load_dotenv()

# The OpenAI client reads OPENAI_API_KEY from the environment; fail early if it is missing
if not os.getenv("OPENAI_API_KEY"):
    raise RuntimeError("OPENAI_API_KEY is not set; add it to your .env file")

# Initialize the Swarm client
client = Swarm()

# Load the earnings report
report_text = load_earnings_report("sample_earnings.txt")

# Run the summary agent
response = client.run(
    agent=summary_agent,
    messages=[{"role": "user", "content": "Summarize the report"}],
    context_variables={"report_text": report_text},
)
print(response.messages[-1]["content"])

# Run the sentiment agent on the full report text
response = client.run(
    agent=sentiment_agent,
    messages=[{"role": "user", "content": "Analyze the sentiment"}],
    context_variables={"report_text": report_text},
)
print(response.messages[-1]["content"])

# The final reply is free-form model text, so look for the keyword
# instead of relying on an exact "...: positive" format
last_reply = response.messages[-1]["content"].lower()
sentiment = "positive" if "positive" in last_reply else "negative"

# Run the recommendation agent with the extracted sentiment
response = client.run(
    agent=recommendation_agent,
    messages=[{"role": "user", "content": "Give a recommendation"}],
    context_variables={"sentiment": sentiment},
)
print(response.messages[-1]["content"])
```
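Here the sentiment is read from the last message, which is free-form text written by the model. Based on our reading of the Swarm source, each tool call’s return value is also stored in the response history as a message with role "tool". Check this against the version you install. The hypothetical helpers below read the latest tool result and fall back to the final reply. The `messages` list is hand-written to mimic that shape and was not captured from a real run.

```python
def last_tool_output(messages):
    """Return the newest tool result in a Swarm-style message list, else the final reply."""
    for message in reversed(messages):
        if message.get("role") == "tool":
            return message.get("content") or ""
    return messages[-1].get("content") or ""


def extract_sentiment(messages):
    text = last_tool_output(messages).lower()
    return "positive" if "positive" in text else "negative"


# Hand-written history in the assumed shape; not captured from a real run
messages = [
    {"role": "user", "content": "Analyze the sentiment"},
    {"role": "assistant", "content": None},  # the turn that requested the tool call
    {"role": "tool", "tool_name": "analyze_sentiment",
     "content": "The sentiment of the report is: positive"},
    {"role": "assistant", "content": "The report reads as upbeat overall."},
]
print(extract_sentiment(messages))  # positive
```

In this example the final reply never uses the word “positive”, so keyword-matching that reply alone would return "negative". Reading the tool message avoids that mismatch.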

Step 6: Create a Sample Earnings Report

To test your system, you need data! In this step, you create a sample earnings report for your agents to process. Save the following text as sample_earnings.txt in the directory you run main.py from, since that is the path the script loads.

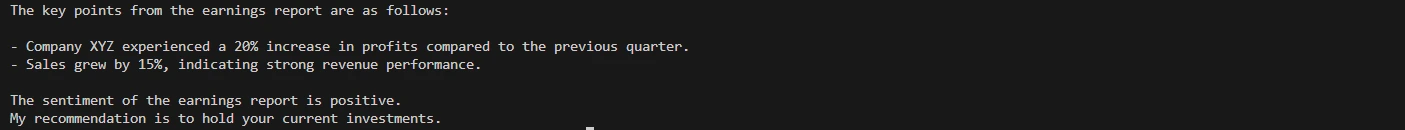

```
Company XYZ reported a 20% increase in revenue compared to the previous quarter.
Sales grew by 15%, and the company expects continued growth in the next fiscal year.
```

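Step 7: Run the Program

Now that everything is set up, it’s time to run the program and watch your multi-agent system in action as it analyzes the earnings report, performs sentiment analysis, and offers recommendations.

```bash
python main.py
```

Expected Output:

The script prints three replies in order: the summary, the sentiment, and the recommendation. Because each reply is written by the model from the tool function’s return value, the exact wording can vary between runs.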

Conclusion

We’ve built a multi-agent solution using OpenAI’s Swarm framework to automate the analysis of earnings reports. With just a few agents, we can process financial information and provide actionable recommendations. You can extend this solution by adding new agents for deeper analysis or by integrating real-time financial APIs.

Try it yourself and see how you can enhance it with additional data sources or agents for more advanced analysis!

Key Takeaways

- Modular Architecture: Breaking the system into multiple agents and utilities keeps the code maintainable and scalable.

- Swarm Framework Power: Swarm supports handoffs between agents, which makes it straightforward to build more complex multi-agent workflows. This tutorial keeps things simple by calling each agent in turn from main.py.

- Security via .env: Managing API keys with python-dotenv keeps sensitive data out of the source code, provided the .env file itself is excluded from version control.

- Extensibility: This project can be extended to handle live financial data by integrating APIs, enabling it to provide real-time recommendations for investors.

Frequently Asked Questions

A. OpenAI’s Swarm is an experimental framework for coordinating multiple agents that each perform specific tasks. It is well suited to building modular systems where every agent has a defined role, such as summarizing content, performing sentiment analysis, or generating recommendations.

A. In this tutorial, the multi-agent system consists of three key agents: the Summary Agent, the Sentiment Agent, and the Recommendation Agent. Each agent performs a specific function: summarizing an earnings report, analyzing its sentiment, or recommending actions based on that sentiment.

A. You can store your API key securely in a .env file. This way, the API key is not exposed directly in your code. The .env file can be loaded using the python-dotenv package.

A. Yes, the project can be extended to handle live data by integrating financial APIs. You can create additional agents to fetch real-time earnings reports and analyze trends to provide up-to-date recommendations.

A. Yes, the agents are designed to be modular, so you can reuse them in other projects. You can adapt them to different tasks such as summarizing news articles, performing text sentiment analysis, or making recommendations based on any kind of structured data.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.