Many organizations that we’ve spoken to are within the exploration section of utilizing vector search for AI-powered personalization, suggestions, semantic search and anomaly detection. The current and astronomical enhancements in accuracy and accessibility of huge language fashions (LLMs) together with BERT and OpenAI have made corporations rethink the way to construct related search and analytics experiences.

On this weblog, we seize engineering tales from 5 early adopters of vector search- Pinterest, Spotify, eBay, Airbnb and Doordash- who’ve built-in AI into their purposes. We hope these tales shall be useful to engineering groups who’re considering by way of the total lifecycle of vector search all the best way from producing embeddings to manufacturing deployments.

What’s vector search?

Vector search is a technique for effectively discovering and retrieving comparable objects from a big dataset primarily based on representations of the info in a high-dimensional house. On this context, objects will be something, reminiscent of paperwork, pictures, or sounds, and are represented as vector embeddings. The similarity between objects is computed utilizing distance metrics, reminiscent of cosine similarity or Euclidean distance, which quantify the closeness of two vector embeddings.

The vector search course of often entails:

- Producing embeddings: The place related options are extracted from the uncooked knowledge to create vector representations utilizing fashions reminiscent of word2vec, BERT or Common Sentence Encoder

- Indexing: The vector embeddings are organized into a knowledge construction that permits environment friendly search utilizing algorithms reminiscent of FAISS or HNSW

- Vector search: The place probably the most comparable objects to a given question vector are retrieved primarily based on a selected distance metric like cosine similarity or Euclidean distance

To raised visualize vector search, we are able to think about a 3D house the place every axis corresponds to a function. The time and the place of a degree within the house is set by the values of those options. On this house, comparable objects are positioned nearer collectively and dissimilar objects are farther aside.

Embedded content material: https://gist.github.com/julie-mills/b3aefe62996c4b969b18e8abd658ce84

Given a question, we are able to then discover probably the most comparable objects within the dataset. The question is represented as a vector embedding in the identical house because the merchandise embeddings, and the gap between the question embedding and every merchandise embedding is computed. The merchandise embeddings with the shortest distance to the question embedding are thought-about probably the most comparable.

Embedded content material: https://gist.github.com/julie-mills/d5833ea9c692edb6750e5e94749e36bf

That is clearly a simplified visualization as vector search operates in high-dimensional areas.

Within the subsequent sections, we’ll summarize 5 engineering blogs on vector search and spotlight key implementation issues. The complete engineering blogs will be discovered under:

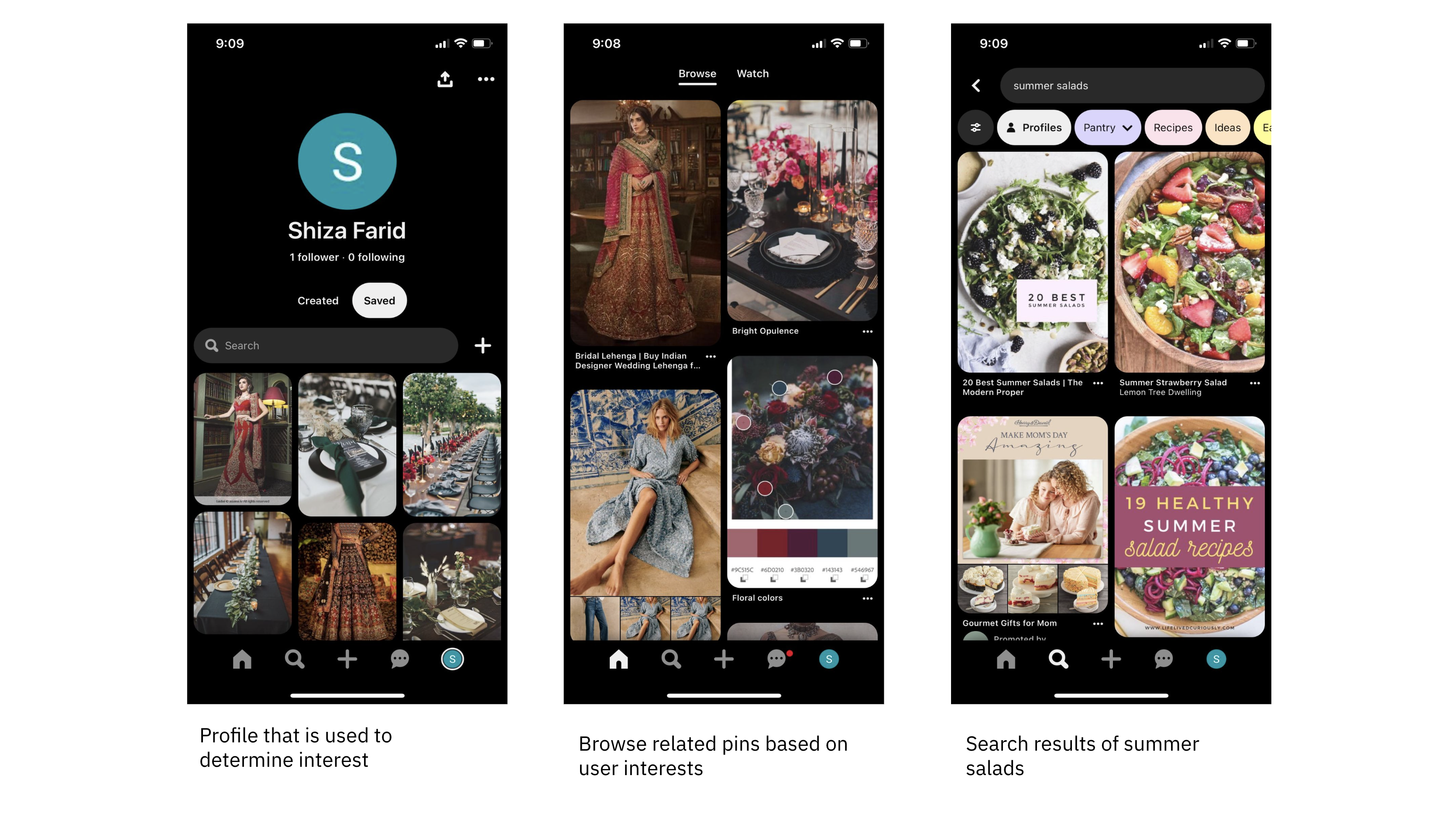

Pinterest: Curiosity search and discovery

Pinterest makes use of vector search for picture search and discovery throughout a number of areas of its platform, together with really helpful content material on the house feed, associated pins and search utilizing a multitask studying mannequin.

A multi-task mannequin is educated to carry out a number of duties concurrently, usually sharing underlying representations or options, which may enhance generalization and effectivity throughout associated duties. Within the case of Pinterest, the group educated and used the identical mannequin to drive really helpful content material on the homefeed, associated pins and search.

Pinterest trains the mannequin by pairing a customers search question (q) with the content material they clicked on or pins they saved (p). Right here is how Pinterest created the (q,p) pairs for every activity:

- Associated Pins: Phrase embeddings are derived from the chosen topic (q) and the pin clicked on or saved by the consumer (p).

- Search: Phrase embeddings are created from the search question textual content (q) and the pin clicked on or saved by the consumer (p).

- Homefeed: Phrase embeddings are generated primarily based on the curiosity of the consumer (q) and the pin clicked on or saved by the consumer (p).

To acquire an total entity embedding, Pinterest averages the related phrase embeddings for associated pins, search and the homefeed.

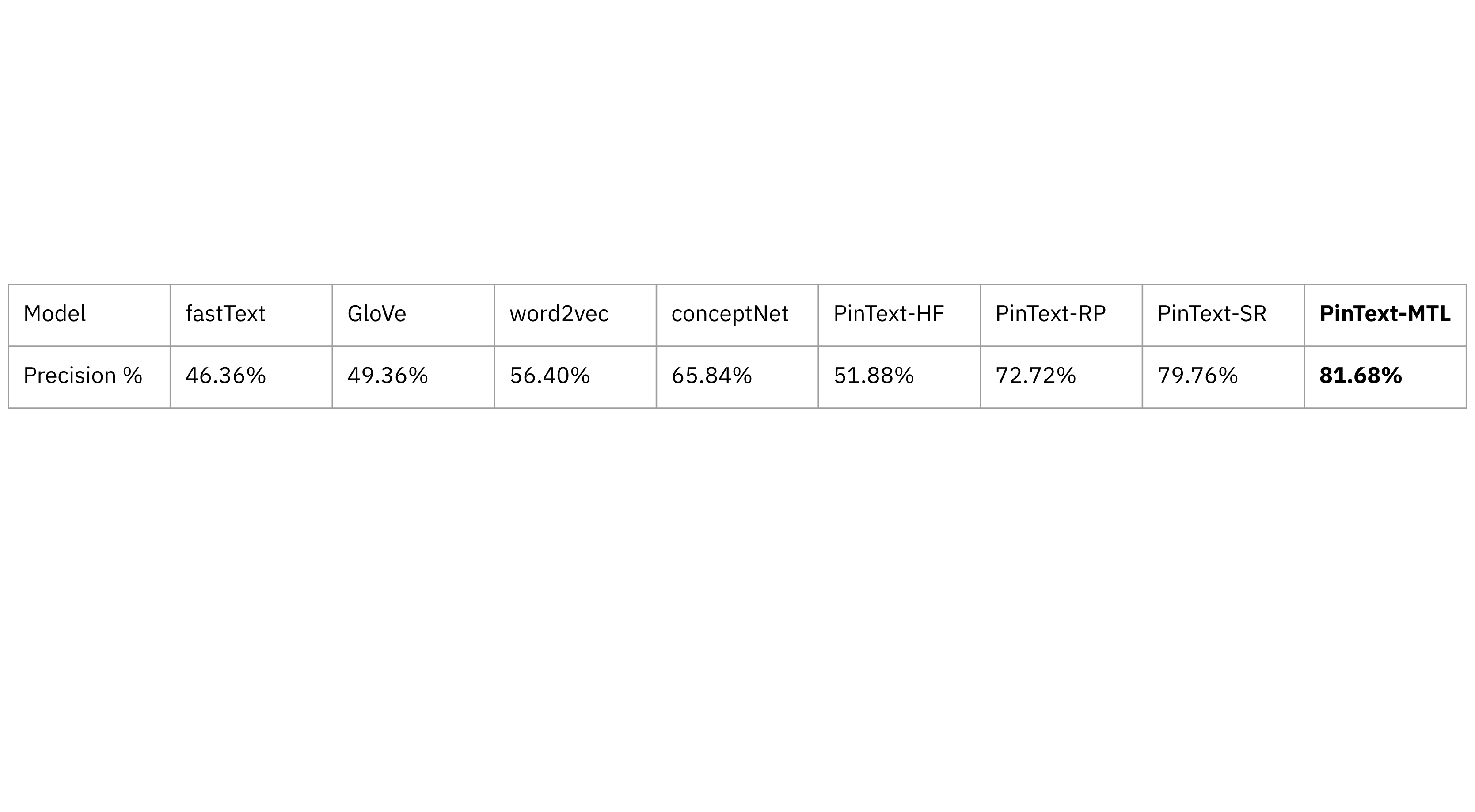

Pinterest created and evaluated its personal supervised Pintext-MTL (multi-task studying) in opposition to unsupervised studying fashions together with GloVe, word2vec in addition to a single-task studying mannequin, PinText-SR on precision. PinText-MTL had greater precision than the opposite embedding fashions, that means that it had a better proportion of true constructive predictions amongst all constructive predictions.

Pinterest additionally discovered that multi-task studying fashions had a better recall, or a better proportion of related cases accurately recognized by the mannequin, making them a greater match for search and discovery.

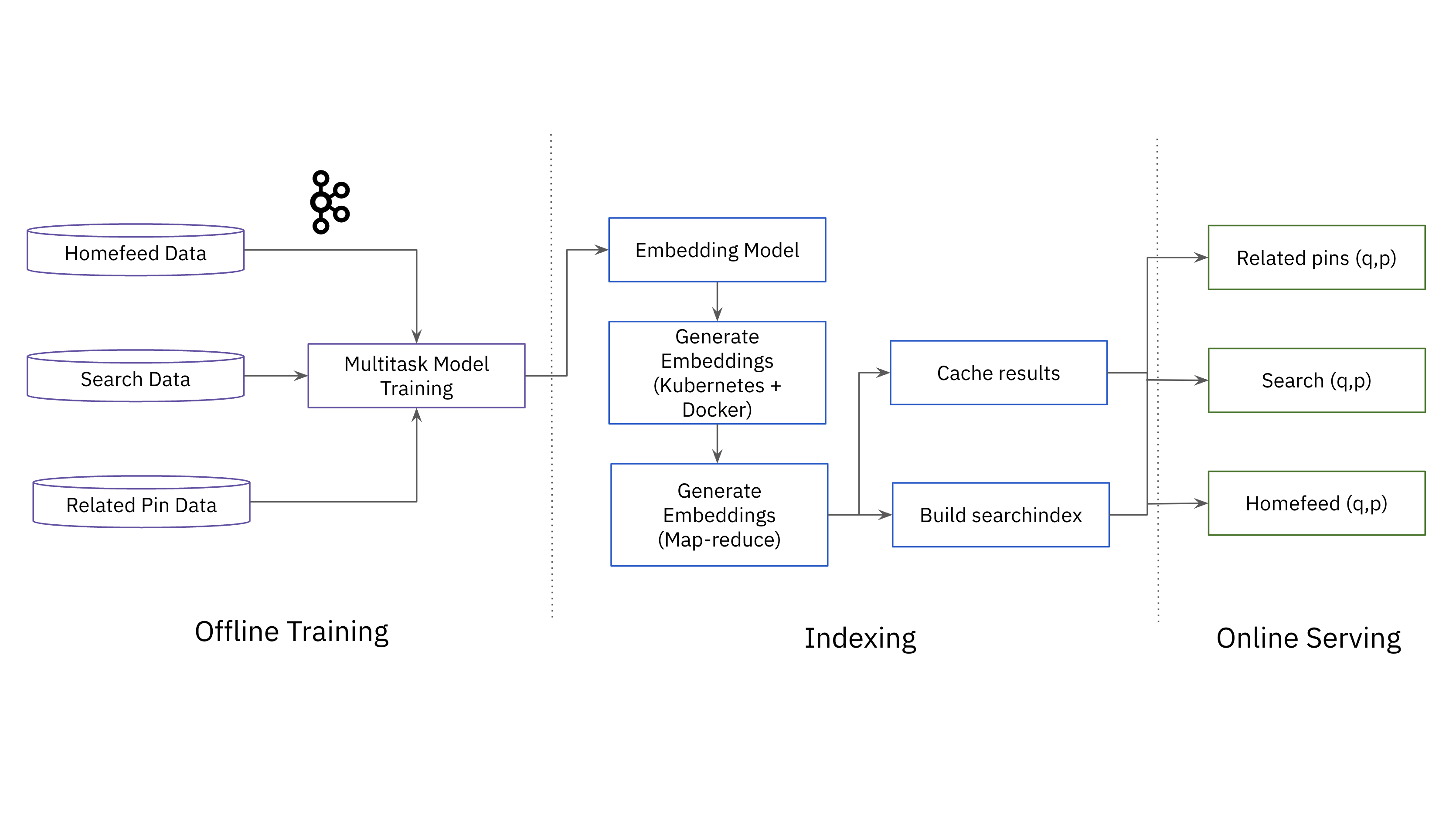

To place this all collectively in manufacturing, Pinterest has a multitask mannequin educated on streaming knowledge from the homefeed, search and associated pins. As soon as that mannequin is educated, vector embeddings are created in a big batch job utilizing both Kubernetes+Docker or a map-reduce system. The platform builds a search index of vector embeddings and runs a Okay-nearest neighbors (KNN) search to search out probably the most related content material for customers. Outcomes are cached to satisfy the efficiency necessities of the Pinterest platform.

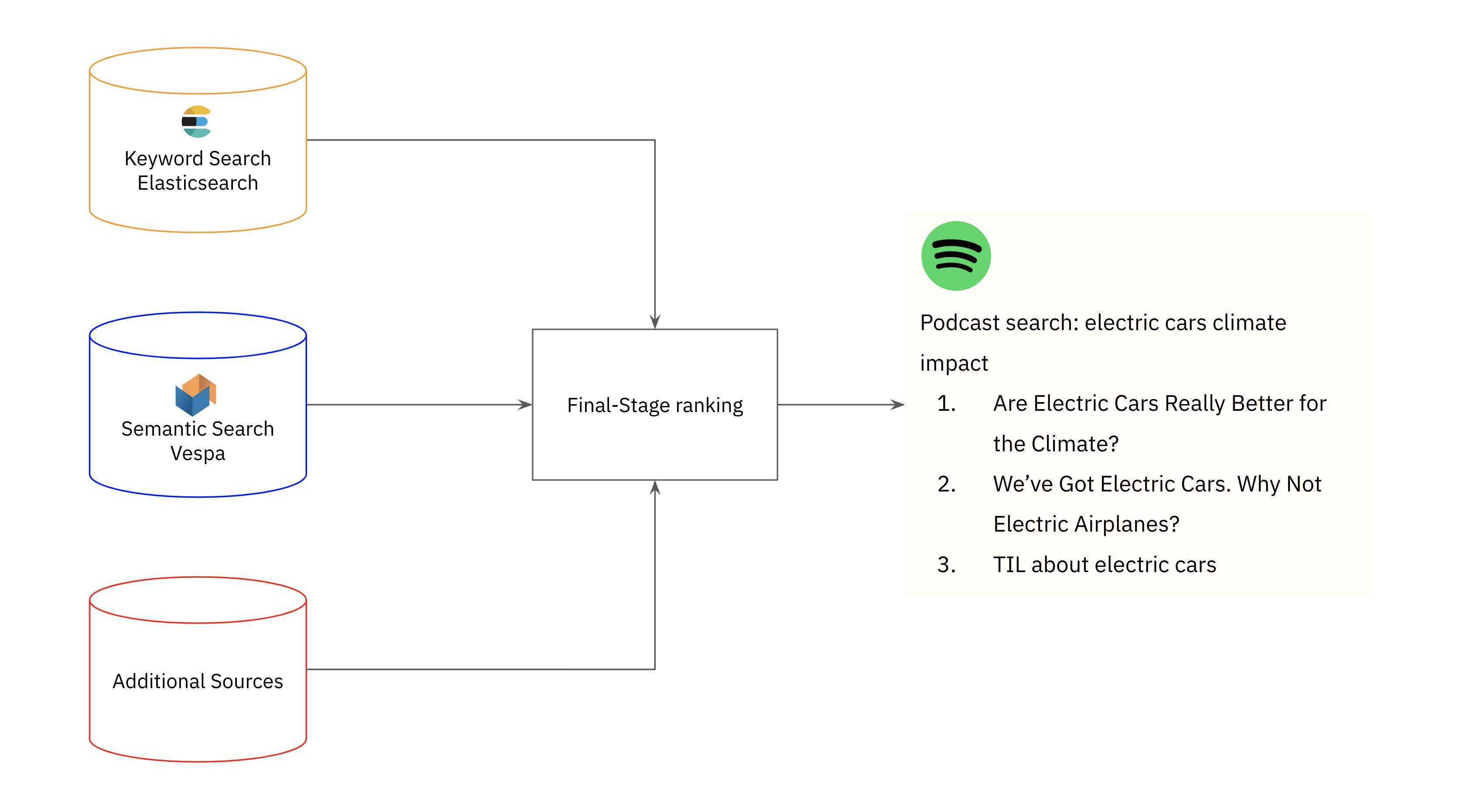

Spotify: Podcast search

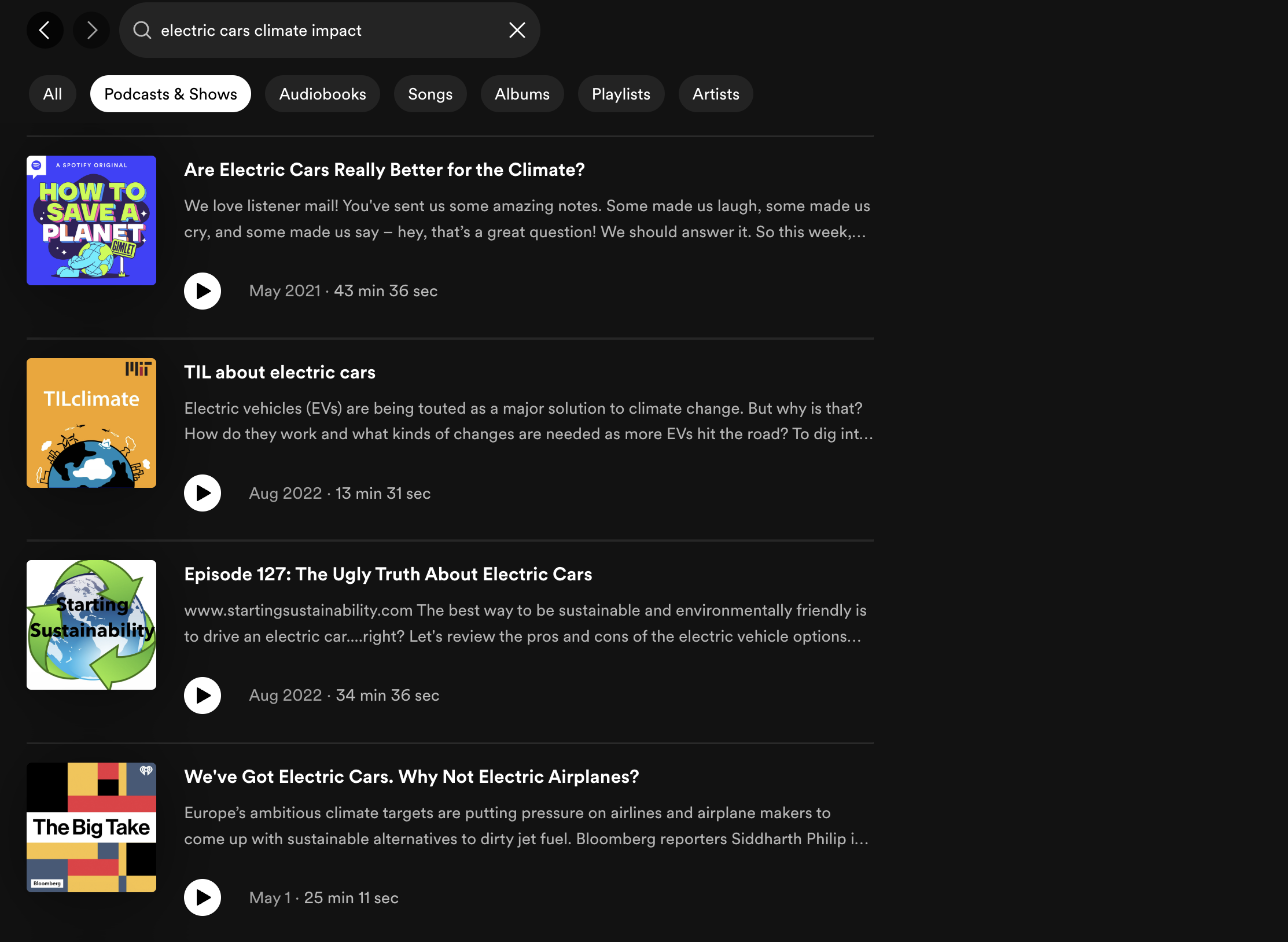

Spotify combines key phrase and semantic search to retrieve related podcast episode outcomes for customers. For example, the group highlighted the constraints of key phrase seek for the question “electrical vehicles local weather affect”, a question which yielded 0 outcomes despite the fact that related podcast episodes exist within the Spotify library. To enhance recall, the Spotify group used Approximate Nearest Neighbor (ANN) for quick, related podcast search.

The group generates vector embeddings utilizing the Common Sentence Encoder CMLM mannequin as it’s multilingual, supporting a world library of podcasts, and produces high-quality vector embeddings. Different fashions had been additionally evaluated together with BERT, a mannequin educated on a giant corpus of textual content knowledge, however discovered that BERT was higher suited to phrase embeddings than sentence embeddings and was pre-trained solely in English.

Spotify builds the vector embeddings with the question textual content being the enter embedding and a concatenation of textual metadata fields together with title and outline for the podcast episode embeddings. To find out the similarity, Spotify measured the cosine distance between the question and episode embeddings.

To coach the bottom Common Sentence Encoder CMLM mannequin, Spotify used constructive pairs of profitable podcast searches and episodes. They included in-batch negatives, a way highlighted in papers together with Dense Passage Retrieval for Open-Area Query Answering (DPR) and Que2Search: Quick and Correct Question and Doc Understanding for Search at Fb, to generate random detrimental pairings. Testing was additionally carried out utilizing artificial queries and manually written queries.

To include vector search into serving podcast suggestions in manufacturing, Spotify used the next steps and applied sciences:

- Index episode vectors: Spotify indexes the episode vectors offline in batch utilizing Vespa, a search engine with native help for ANN. One of many causes that Vespa was chosen is that it could possibly additionally incorporate metadata filtering post-search on options like episode reputation.

- On-line inference: Spotify makes use of Google Cloud Vertex AI to generate a question vector. Vertex AI was chosen for its help for GPU inference, which is more economical when utilizing massive transformer fashions to generate embeddings, and for its question cache. After the question vector embedding is generated, it’s used to retrieve the highest 30 podcast episodes from Vespa.

Semantic search contributes to the identification of pertinent podcast episodes, but it’s unable to completely supplant key phrase search. This is because of the truth that semantic search falls in need of actual time period matching when customers search a precise episode or podcast identify. Spotify employs a hybrid search strategy, merging semantic search in Vespa with key phrase search in Elasticsearch, adopted by a conclusive re-ranking stage to determine the episodes exhibited to customers.

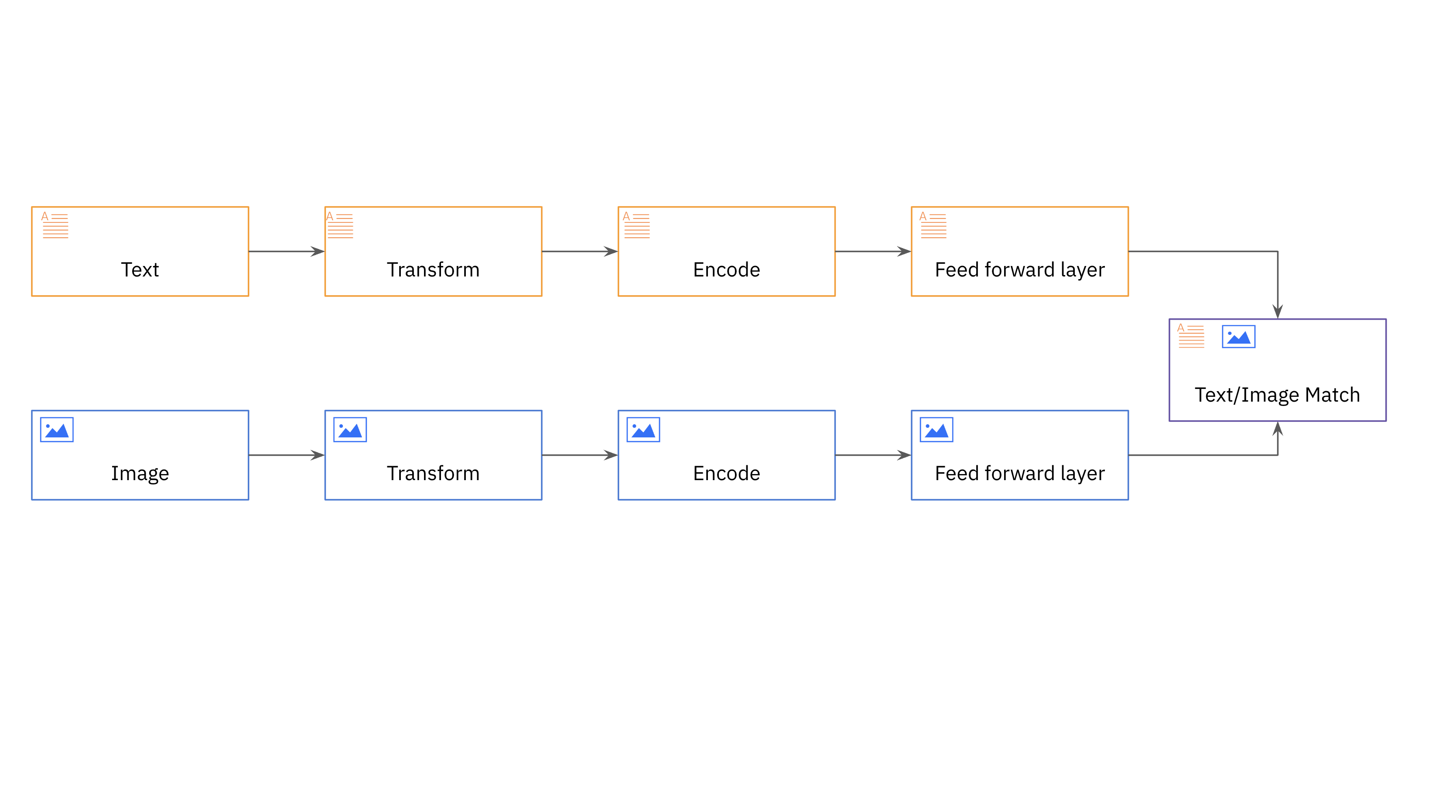

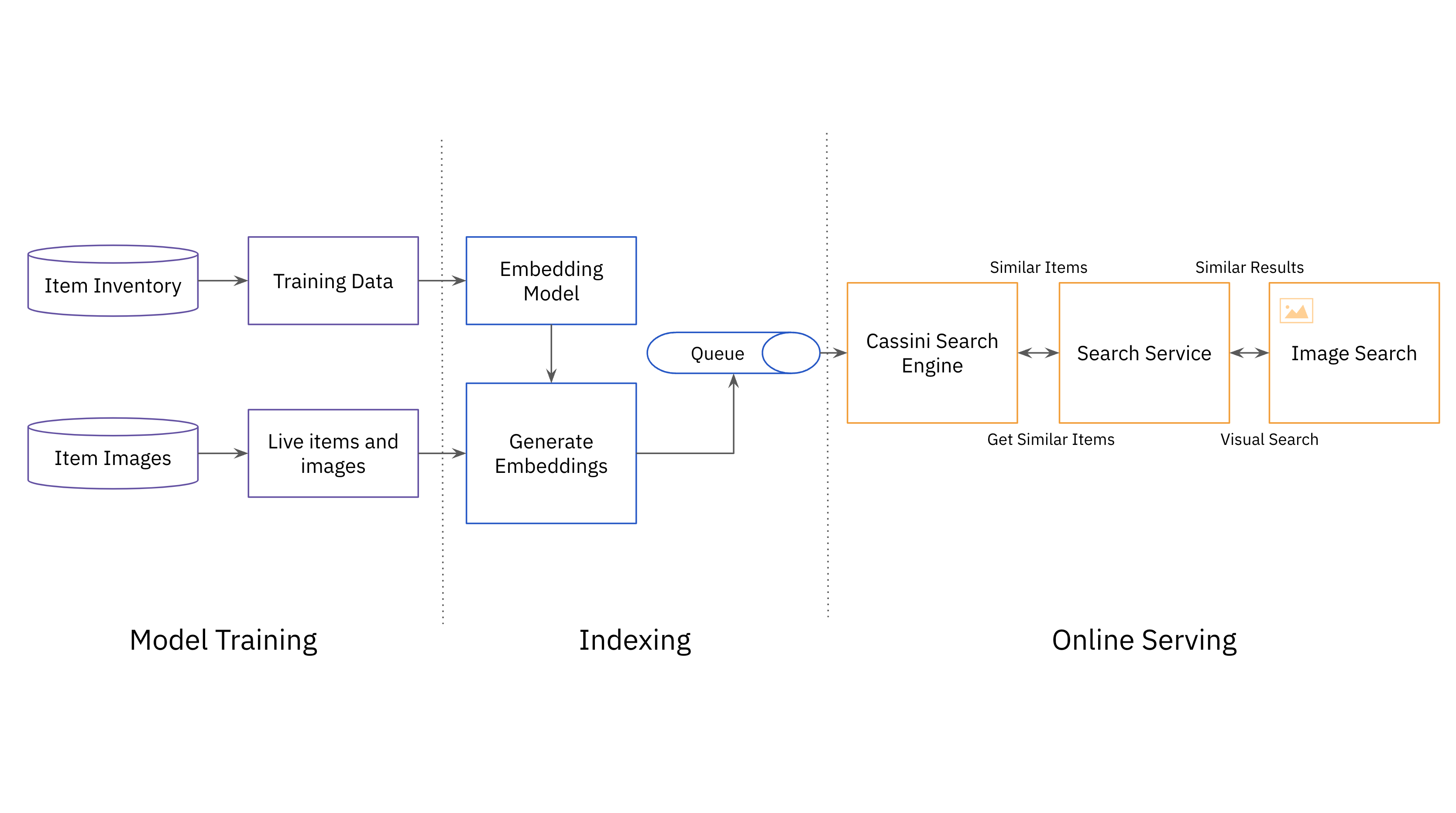

eBay: Picture search

Historically, search engines like google and yahoo have displayed outcomes by aligning the search question textual content with textual descriptions of things or paperwork. This technique depends extensively on language to deduce preferences and isn’t as efficient in capturing parts of fashion or aesthetics. eBay introduces picture search to assist customers discover related, comparable objects that meet the fashion they’re in search of.

eBay makes use of a multi-modal mannequin which is designed to course of and combine knowledge from a number of modalities or enter sorts, reminiscent of textual content, pictures, audio, or video, to make predictions or carry out duties. eBay incorporates each textual content and pictures into its mannequin, producing picture embeddings using a Convolutional Neural Community (CNN) mannequin, particularly Resnet-50, and title embeddings utilizing a text-based mannequin reminiscent of BERT. Each itemizing is represented by a vector embedding that mixes each the picture and title embeddings.

As soon as the multi-modal mannequin is educated utilizing a big dataset of image-title itemizing pairs and not too long ago bought listings, it’s time to put it into manufacturing within the web site search expertise. Because of the massive variety of listings at eBay, the info is loaded in batches to HDFS, eBay’s knowledge warehouse. eBay makes use of Apache Spark to retrieve and retailer the picture and related fields required for additional processing of listings, together with producing itemizing embeddings. The itemizing embeddings are printed to a columnar retailer reminiscent of HBase which is nice at aggregating large-scale knowledge. From HBase, the itemizing embedding is listed and served in Cassini, a search engine created at eBay.

The pipeline is managed utilizing Apache Airflow, which is able to scaling even when there’s a excessive amount and complexity of duties. It additionally supplies help for Spark, Hadoop, and Python, making it handy for the machine studying group to undertake and make the most of.

Visible search permits customers to search out comparable types and preferences within the classes of furnishings and residential decor, the place fashion and aesthetics are key to buy choices. Sooner or later, eBay plans to increase visible search throughout all classes and likewise assist customers uncover associated objects to allow them to set up the identical appear and feel throughout their dwelling.

AirBnb: Actual-time customized listings

Search and comparable listings options drive 99% of bookings on the AirBnb web site. AirBnb constructed a itemizing embedding method to enhance comparable itemizing suggestions and supply real-time personalization in search rankings.

AirBnb realized early on that they may increase the applying of embeddings past simply phrase representations, encompassing consumer behaviors together with clicks and bookings as nicely.

To coach the embedding fashions, AirBnb included over 4.5M lively listings and 800 million search classes to find out the similarity primarily based on what listings a consumer clicks and skips in a session. Listings that had been clicked by the identical consumer in a session are pushed nearer collectively; listings that had been skipped by the consumer are pushed additional away. The group settled on the dimensionality of a list embedding of d=32 given the tradeoff between offline efficiency and reminiscence wanted for on-line serving.

Embedded content material: https://youtu.be/aWjsUEX7B1I

AirBnb discovered that sure listings traits don’t require studying, as they are often straight obtained from metadata, reminiscent of value. Nevertheless, attributes like structure, fashion, and ambiance are significantly tougher to derive from metadata.

Earlier than transferring to manufacturing, AirBnb validated their mannequin by testing how nicely the mannequin really helpful listings {that a} consumer really booked. The group additionally ran an A/B check evaluating the prevailing listings algorithm in opposition to the vector embedding-based algorithm. They discovered that the algorithm with vector embeddings resulted in a 21% uptick in CTR and 4.9% enhance in customers discovering a list that they booked.

The group additionally realized that vector embeddings might be used as a part of the mannequin for real-time personalization in search. For every consumer, they collected and maintained in actual time, utilizing Kafka, a short-term historical past of consumer clicks and skips within the final two weeks. For each search carried out by the consumer, they ran two similarity searches:

- primarily based on the geographic markets that had been not too long ago searched after which

- the similarity between the candidate listings and those the consumer has clicked/skipped

Embeddings had been evaluated in offline and on-line experiments and have become a part of the real-time personalization options.

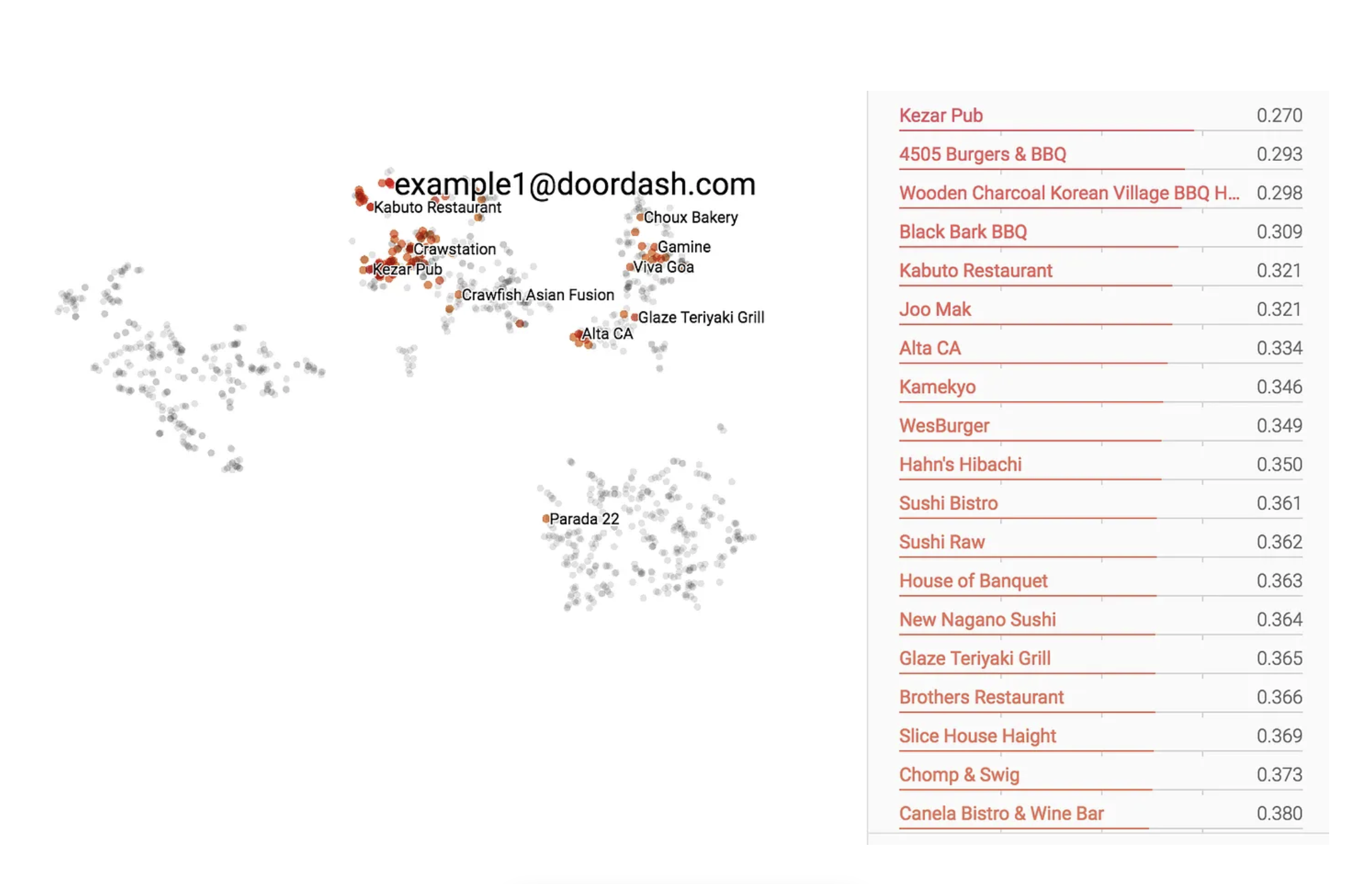

Doordash: Customized retailer feeds

Doordash has all kinds of shops that customers can select to order from and with the ability to floor probably the most related shops utilizing customized preferences improves search and discovery.

Doordash wished to use latent data to its retailer feed algorithms utilizing vector embeddings. This may allow Doordash to uncover similarities between shops that weren’t well-documented together with if a retailer has candy objects, is taken into account fashionable or options vegetarian choices.

Doordash used a by-product of word2vec, an embedding mannequin utilized in pure language processing, known as store2vec that it tailored primarily based on current knowledge. The group handled every retailer as a phrase and fashioned sentences utilizing the listing of shops seen throughout a single consumer session, with a most restrict of 5 shops per sentence. To create consumer vector embeddings, Doordash summed the vectors of the shops from which customers positioned orders up to now 6 months or as much as 100 orders.

For example, Doordash used vector search to search out comparable eating places for a consumer primarily based on their current purchases at in style, fashionable joints 4505 Burgers and New Nagano Sushi in San Francisco. Doordash generated a listing of comparable eating places measuring the cosine distance from the consumer embedding to retailer embeddings within the space. You’ll be able to see that the shops that had been closest in cosine distance embody Kezar Pub and Wood Charcoal Korean Village BBQ.

Doordash included store2vec distance function as one of many options in its bigger suggestion and personalization mannequin. With vector search, Doordash was in a position to see a 5% enhance in click-through-rate. The group can be experimenting with new fashions like seq2seq, mannequin optimizations and incorporating real-time onsite exercise knowledge from customers.

Key issues for vector search

Pinterest, Spotify, eBay, Airbnb and Doordash create higher search and discovery experiences with vector search. Many of those groups began out utilizing textual content search and located limitations with fuzzy search or searches of particular types or aesthetics. In these eventualities, including vector search to the expertise made it simpler to search out related, and infrequently customized, podcasts, pillows, leases, pins and eateries.

There are a number of choices that these corporations made which can be price calling out when implementing vector search:

- Embedding fashions: Many began out utilizing an off-the-shelf mannequin after which educated it on their very own knowledge. In addition they acknowledged that language fashions like word2vec might be utilized by swapping phrases and their descriptions with objects and comparable objects that had been not too long ago clicked. Groups like AirBnb discovered that utilizing derivatives of language fashions, moderately than picture fashions, might nonetheless work nicely for capturing visible similarities and variations.

- Coaching: Many of those corporations opted to coach their fashions on previous buy and click on by way of knowledge, making use of current large-scale datasets.

- Indexing: Whereas many corporations adopted ANN search, we noticed that Pinterest was in a position to mix metadata filtering with KNN seek for effectivity at scale.

- Hybrid search: Vector search not often replaces textual content search. Many instances, like in Spotify’s instance, a ultimate rating algorithm is used to find out whether or not vector search or textual content search generated probably the most related outcome.

- Productionizing: We’re seeing many groups use batch-based techniques to create the vector embeddings, provided that these embeddings are not often up to date. They make use of a special system, incessantly Elasticsearch, to compute the question vector embedding dwell and incorporate real-time metadata of their search.

Rockset, a real-time search and analytics database, not too long ago added help for vector search. Give vector search on Rockset a attempt for real-time personalization, suggestions, anomaly detection and extra by beginning a free trial with $300 in credit as we speak.