Less than two years after the general launch of ChatGPT, most software developers have adopted AI assistants for programming. That is boosting efficiency, but at the same time, it has led to a faster cadence of software development that has made maintaining security more difficult.

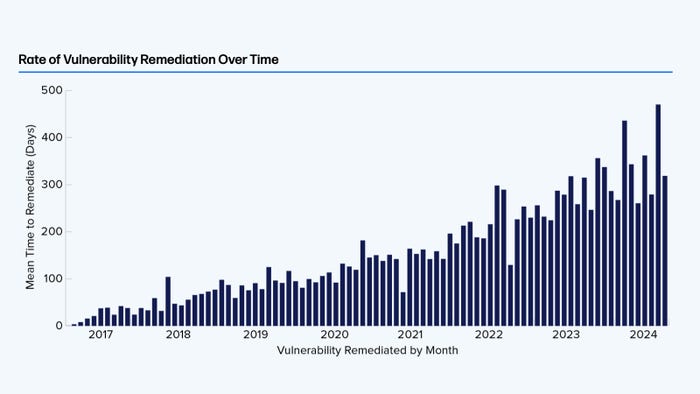

Developers are on track to download more than 6.6 trillion software components in 2024, which includes a 70% increase in downloads of JavaScript components and an 87% increase in Python modules, according to the annual “State of the Software Supply Chain” report from Sonatype. At the same time, the mean time to remediate vulnerabilities in these open source projects has grown significantly over the past seven years, from about 25 days in 2017 to more than 300 days in 2024.

One likely reason: The arrival of AI is driving speedier development cycles, making security more difficult, says Brian Fox, chief technology officer of Sonatype. The majority of developers now use AI tools in their development process, according to a recent Stack Overflow survey, with 62% of coders saying they used an AI assistant, up from 44% last year.

“AI has quickly become a powerful tool for speeding up the coding process, but the pace of security has not progressed as quickly, and it’s creating a gap that’s leading to lower-quality, less-secure code,” he says. “We’re headed in the right direction, but the true benefit of AI will come when developers don’t have to sacrifice quality or security for speed.”

Security researchers have warned that AI code generation could result in more vulnerabilities and novel attacks. For example, at the USENIX Security Symposium in August, a group of researchers demonstrated the ability to poison the large language models (LLMs) used for code generation with maliciously exploitable code. In March, researchers with an LLM security vendor showed that attackers could use AI hallucinations as a way to direct developers and their applications to malicious packages.

Developers also have growing concerns over the potential for AI assistants to suggest or propagate vulnerable code. While the majority of developers (56%) expect AI assistants to provide usable code, only 23% expect the code to be secure, and a larger group (40%) do not believe AI assistants provide secure code at all, according to research by software development firm JetBrains and the University of California at Irvine, published in June.

Open source projects take longer to remediate vulnerabilities. Source: Sonatype

Many developers remain nonplussed by the speed of change wrought by AI coding tools, and there’s likely more to come, says Jimmy Rabon, senior product manager with Black Duck Software, a software-integrity tools provider.

“We’ve not seen the long-term effects of adding something that can code at the level of a junior- or intermediate-level developer and at massive scale,” he says. “My expectation is that we will see more intermediate mistakes, the basic mistakes that you would make as a junior or intermediate level developer, and [issues with] understanding the context of where some of the data flows.”

2024: The Year of the Developer’s AI Assistant

While AI assistants are now being used by the majority of developers, in enterprise environments, adoption of AI tools is much higher: more than 90% of developers used AI assistants, according to Black Duck’s 2024 Global State of DevSecOps survey. AI as a tool for developers is well-entrenched and “will never go away,” Rabon says.

Yet many developers do not have the experience to assess whether code provided by an AI assistant is secure. Entry-level developers, for example, are more trusting of AI-produced code than their experienced counterparts, with 49% trusting the accuracy of AI-generated code versus 42% for more experienced developers, according to Stack Overflow’s annual developer survey.

In addition, AI tools will affect the education of developers and may make it harder for those entry-level developers to gain the skills needed to advance in their careers, experts say. The reliance on AI to complete simple programming projects could reduce the need for new or entry-level developers who typically tackle simpler coding tasks, removing a training path, Sonatype’s Fox says.

“The development community is getting older, and the introduction of AI poses potential risks to younger generations,” he says. “If AI can handle the tasks previously assigned to budding developers, how will they gain the experience needed to replace older developers exiting the industry?”

Automated Generation of Secure Code

Until the companies behind AI assistants create training datasets that contain secure code suggestions, or put in place guardrails to protect against vulnerable and malicious code generation, companies must deploy automated software security tools to check the work of any coding assistant.
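As a rough illustration of what such an automated check might look like at its simplest, the sketch below scans a piece of Python source for calls to a handful of functions that are commonly flagged as risky. The deny-list `INSECURE_CALLS` and the helper names `call_name` and `find_insecure_calls` are assumptions made for this example, not part of any vendor's product; commercial scanners apply far larger rule sets and track how data flows through a program.

```python
import ast

# Hypothetical deny-list for illustration only; real scanners use much larger rule sets.
INSECURE_CALLS = {"eval", "exec", "os.system", "pickle.loads"}


def call_name(node: ast.Call) -> str:
    """Return a dotted name such as 'os.system' for a call, or '' if it is not a plain name."""
    parts = []
    target = node.func
    # Walk attribute chains like os.system from right to left.
    while isinstance(target, ast.Attribute):
        parts.append(target.attr)
        target = target.value
    if isinstance(target, ast.Name):
        parts.append(target.id)
        return ".".join(reversed(parts))
    return ""


def find_insecure_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, call name) pairs for each deny-listed call found in the source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = call_name(node)
            if name in INSECURE_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)


if __name__ == "__main__":
    # Example input standing in for code suggested by an assistant.
    suggested = "import os\nos.system('ls')\nvalue = eval('1 + 1')\n"
    for line, name in find_insecure_calls(suggested):
        print(f"line {line}: call to {name}")
```

A check like this only matches names, so it would miss aliased imports and would not judge whether a flagged call is actually exploitable in context.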

The good news is, between the additional security checks and the fast evolution of code-generation assistants, the security of software and applications could eventually become much stronger, says Black Duck’s Rabon.

“There are certain basic security flaws that I think will disappear,” he says. “If you asked an AI system to generate code, why should it ever [suggest an insecure function?] … I don’t think that we’ve had enough time to really see the dramatic effects of [such capabilities] or prove them out.”