batchLLM

As the name implies, batchLLM is designed to run prompts over multiple targets. More specifically, you can run a prompt over a column in a data frame and get a data frame in return with a new column of responses. This can be a handy way of incorporating LLMs in an R workflow for tasks such as sentiment analysis, classification, and labeling or tagging.

It also logs batches and metadata, lets you compare results from different LLMs side by side, and has built-in delays for API rate limiting.
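To make the column-in, column-out pattern concrete, here is a minimal base-R sketch of what such a batch run does conceptually. It is not batchLLM's code or API: `fake_llm()` and `batch_prompt()` are hypothetical names, and the stub replaces a real API call with a keyword rule so the example runs offline. The `delay` argument mimics a pause between calls for rate limiting.

```r
# Conceptual sketch only; NOT batchLLM's API.
# fake_llm() is a hypothetical stand-in for a real LLM API call.
fake_llm <- function(prompt, text) {
  # Keyword rule so the example runs offline
  if (grepl("great|love|excellent", text, ignore.case = TRUE)) "positive" else "negative"
}

# Run one prompt over every row of a column and add the replies as a new column
batch_prompt <- function(df, col, prompt, llm = fake_llm, delay = 1) {
  responses <- character(nrow(df))
  for (i in seq_len(nrow(df))) {
    responses[i] <- llm(prompt, df[[col]][i])
    # Pause between calls to stay under API rate limits
    if (i < nrow(df)) Sys.sleep(delay)
  }
  df[[paste0(col, "_response")]] <- responses
  df
}

reviews <- data.frame(
  review = c("I love this product", "Terrible support", "Excellent value"),
  stringsAsFactors = FALSE
)
result <- batch_prompt(reviews, "review",
                       "Classify the sentiment as positive or negative:",
                       delay = 0)
print(result)
```

A real tool would also handle API errors and log each batch; the sketch keeps only the loop, the pause, and the new response column.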

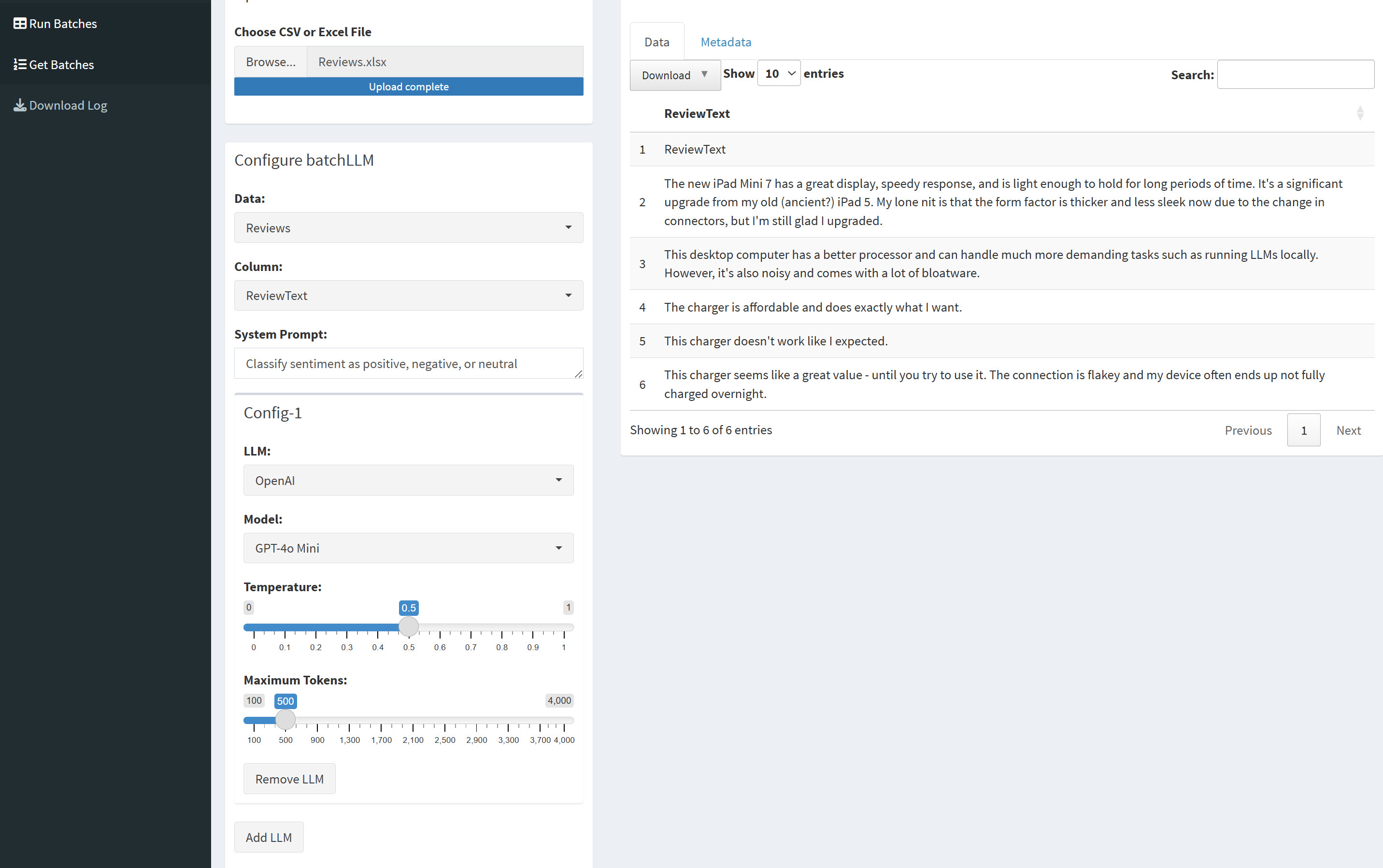

batchLLM’s Shiny app offers a handy graphical user interface for running LLM queries and commands on a column of data.

batchLLM also includes a built-in Shiny app that gives you a handy web interface for doing all this work. You can launch the web app with batchLLM_shiny() or as an RStudio add-in, if you use RStudio. There’s also a web demo of the app.

batchLLM’s creator, Dylan Pieper, said he created the package because he needed to categorize “thousands of unique offense descriptions in court data.” Note, however, that this “batch processing” tool does not use the cheaper, time-delayed LLM calls offered by some model providers. Pieper explained on GitHub that “most of the services didn’t offer it or the API packages didn’t support it” at the time he wrote batchLLM. He also noted that he had preferred real-time responses to asynchronous ones.

We’ve looked at three top tools for integrating large language models into R scripts and programs. Now let’s look at a couple more tools that focus on specific tasks when using LLMs within R: retrieving information from large amounts of data, and scripting common prompting tasks.

ragnar (RAG for R)

RAG, or retrieval augmented generation, is one of the most useful applications for LLMs. Instead of relying on an LLM’s internal knowledge or directing it to search the web, the LLM generates its response based only on specific information you’ve given it. InfoWorld’s Smart Answers feature is an example of a RAG application, answering tech questions based solely on articles published by InfoWorld and its sister sites.

A RAG process typically involves splitting documents into chunks, using models to generate embeddings for each chunk, embedding a user’s query, and then finding the most relevant text chunks for that query by calculating which chunks’ embeddings are closest to the query’s. The relevant text chunks are then sent to an LLM along with the original question, and the model answers based on that provided context. This makes it practical to answer questions using many documents as potential sources without having to stuff all the content of those documents into the query.
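Those retrieval steps can be sketched in a few lines of base R. This is a toy under stated assumptions: real systems use an embedding model, whereas here a hypothetical `embed()` helper just counts words over a shared vocabulary, and each short document counts as one chunk. The cosine-similarity ranking and the final prompt assembly follow the process described above; the prompt is only printed, not sent to any LLM.

```r
# Toy RAG retrieval in base R. Word-count vectors stand in for a real
# embedding model (an assumption made so the example runs offline).
docs <- c(
  "DuckDB is an in-process analytical database.",
  "Shiny builds interactive web apps in R.",
  "Embeddings map text to numeric vectors for similarity search."
)

# 1. Chunking: split documents into sentences (here each document is one sentence)
chunks <- unlist(strsplit(docs, "(?<=\\.)\\s+", perl = TRUE))

tokenize <- function(x) {
  words <- tolower(unlist(strsplit(x, "[^A-Za-z]+")))
  words[nzchar(words)]
}
vocab <- sort(unique(tokenize(chunks)))

# 2. "Embed" text as word counts over the shared vocabulary (hypothetical helper)
embed <- function(x) {
  as.numeric(table(factor(tokenize(x), levels = vocab)))
}
chunk_vecs <- lapply(chunks, embed)

cosine <- function(a, b) {
  denom <- sqrt(sum(a^2)) * sqrt(sum(b^2))
  if (denom == 0) 0 else sum(a * b) / denom
}

# 3. Embed the query and return the k chunks closest to it
retrieve <- function(query, k = 1) {
  q <- embed(query)
  scores <- vapply(chunk_vecs, cosine, numeric(1), b = q)
  chunks[order(scores, decreasing = TRUE)][seq_len(k)]
}

# 4. Build the prompt: retrieved context plus the original question
query <- "How do I build a web app in R?"
context <- retrieve(query, k = 1)
rag_prompt <- paste0(
  "Answer using only this context:\n",
  paste(context, collapse = "\n"),
  "\n\nQuestion: ", query
)
cat(rag_prompt, "\n")
```

Only the Shiny sentence shares words ("web", "in", "r") with the query, so it is the chunk passed along as context.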

There are numerous RAG packages and tools for Python and JavaScript, but not many in R beyond generating embeddings. However, the ragnar package, currently very much under development, aims to offer “a complete solution with sensible defaults, while still giving the knowledgeable user precise control over all the steps.”

Those steps either do or will include document processing, chunking, embedding, storage (defaulting to DuckDB), retrieval (based on both embedding similarity search and text search), a technique called re-ranking to improve search results, and prompt generation.
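Combining embedding similarity search with text search raises the question of how to merge two ranked lists. One widely used approach is reciprocal rank fusion, sketched below in base R. This is an illustrative assumption, not necessarily what ragnar does, and the chunk IDs are made up. Each chunk scores `1 / (k + rank)` in every list it appears in, so chunks ranked well by both searches rise to the top.

```r
# Reciprocal rank fusion (RRF): one common way to merge ranked result lists.
# Illustrative only; not necessarily how ragnar combines its searches.
rrf <- function(..., k = 60) {
  rankings <- list(...)
  ids <- unique(unlist(rankings))
  scores <- setNames(numeric(length(ids)), ids)
  for (r in rankings) {
    for (pos in seq_along(r)) {
      # Higher-ranked positions contribute larger scores
      scores[r[pos]] <- scores[r[pos]] + 1 / (k + pos)
    }
  }
  names(sort(scores, decreasing = TRUE))
}

embedding_hits <- c("chunk_3", "chunk_1", "chunk_7")  # from vector similarity search
text_hits      <- c("chunk_1", "chunk_9", "chunk_3")  # from keyword / full-text search

fused <- rrf(embedding_hits, text_hits)
print(fused)
```

chunk_1 and chunk_3 appear in both lists, so they outrank chunks found by only one search; a separate re-ranking model could then reorder this fused list.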

If you’re an R user and interested in RAG, keep an eye on ragnar.

tidyprompt

Serious LLM users will likely want to code certain tasks more than once. Examples include generating structured output, calling functions, or forcing the LLM to respond in a specific way (such as chain-of-thought).

The idea behind the tidyprompt package is to offer “building blocks” to construct prompts and handle LLM output, and then chain those blocks together using conventional R pipes.
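The building-block idea can be illustrated without the package itself. In this base-R sketch, every function name (`new_prompt()`, `add_role()`, `add_boolean_answer()`, `fake_send()`) is hypothetical and is not tidyprompt's API. Each block takes a prompt object, adds instructions or a parser for the reply, and returns the object, so blocks chain with the native `|>` pipe (R 4.1 or later). A stub stands in for the LLM.

```r
# Hypothetical "building blocks" in base R; NOT tidyprompt's API.
# A prompt object holds the text plus any functions that parse the reply.
new_prompt <- function(text) {
  list(text = text, extractors = list())
}

# Block: prepend a role instruction to the prompt text
add_role <- function(p, role) {
  p$text <- paste0("You are ", role, ". ", p$text)
  p
}

# Block: ask for TRUE/FALSE and register a parser that converts the reply to logical
add_boolean_answer <- function(p) {
  p$text <- paste(p$text, "Answer only TRUE or FALSE.")
  p$extractors[[length(p$extractors) + 1]] <- function(reply) {
    r <- toupper(trimws(reply))
    if (r %in% c("TRUE", "FALSE")) r == "TRUE" else NA
  }
  p
}

# Stub sender: a fake LLM that always replies "FALSE", then runs the parsers in order
fake_send <- function(p, llm = function(txt) "FALSE") {
  reply <- llm(p$text)
  for (f in p$extractors) reply <- f(reply)
  reply
}

p <- new_prompt("Is London the capital of France?") |>
  add_role("a geography expert") |>
  add_boolean_answer()

cat(p$text, "\n")
answer <- fake_send(p)
print(answer)
```

Because each block both edits the prompt and registers how to read the reply, the same blocks can be reused across many prompts.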

tidyprompt “should be seen as a tool which can be used to enhance the functionality of LLMs beyond what APIs natively offer,” according to the package documentation, with functions such as answer_as_json(), answer_as_text(), and answer_using_tools().

A prompt can be as simple as

```r
library(tidyprompt)

"Is London the capital of France?" |>
  answer_as_boolean() |>
  send_prompt(llm_provider_groq(parameters = list(model = "llama3-70b-8192")))
```

which in this case returns FALSE. (Note that I had first stored my Groq API key in an R environment variable, as would be the case for any cloud LLM provider.) For a more detailed example, check out the Sentiment analysis in R with a LLM and ‘tidyprompt’ vignette on GitHub.

There are also more complex pipelines using functions such as llm_feedback() to check whether an LLM response meets certain conditions and user_verify() to let a human check an LLM response.
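Feedback-driven pipelines generally follow a validate-and-retry loop: check the reply, and if it fails, send the model an explanation and ask again. The sketch below shows that loop in base R. It is written in the spirit of llm_feedback() but is not tidyprompt's implementation; `send_with_retries()`, `validate_integer()`, and the scripted stub LLM are hypothetical. Here a validator returns `NULL` on success or a feedback message on failure.

```r
# Hypothetical validate-and-retry loop; NOT tidyprompt's implementation.
send_with_retries <- function(prompt, llm, validate, max_tries = 3) {
  messages <- prompt
  for (attempt in seq_len(max_tries)) {
    reply <- llm(messages)
    feedback <- validate(reply)
    # NULL feedback means the reply passed validation
    if (is.null(feedback)) return(reply)
    # Otherwise append the reply and the feedback, then ask again
    messages <- c(messages, reply, feedback)
  }
  stop("No valid reply after ", max_tries, " attempts")
}

# Validator: the reply must be a single whole number
validate_integer <- function(reply) {
  if (grepl("^-?[0-9]+$", trimws(reply))) {
    NULL
  } else {
    "Please reply with a single whole number only."
  }
}

# Scripted stub LLM: rambles on the first try, complies once it sees feedback
scripted_llm <- function(messages) {
  if (length(messages) == 1) "Well, it is roughly forty-two." else "42"
}

answer <- send_with_retries("What is 6 times 7?", scripted_llm, validate_integer)
print(answer)
```

Capping attempts with `max_tries` keeps a model that never complies from looping, and API costs, unbounded.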

You can create your own tidyprompt prompt wraps with the prompt_wrap() function.

The tidyprompt package supports OpenAI, Google Gemini, Ollama, Groq, Grok, XAI, and OpenRouter (not Anthropic directly, but Claude models are available on OpenRouter). It was created by Luka Koning and Tjark Van de Merwe.

The bottom line

The generative AI ecosystem for R is not as robust as Python’s, and that’s unlikely to change. However, in the past year, there’s been a lot of progress in developing tools for key tasks programmers might want to do with LLMs in R. If R is your language of choice and you’re interested in working with large language models either locally or via APIs, it’s worth giving some of these options a try.