We’re excited to announce Phi-4-multimodal and Phi-4-mini, the newest models in Microsoft’s Phi family of small language models (SLMs). These models are designed to empower developers with advanced AI capabilities. Phi-4-multimodal can process speech, vision, and text simultaneously, which opens new possibilities for creating innovative, context-aware applications. Phi-4-mini, on the other hand, excels at text-based tasks, providing high accuracy and scalability in a compact form. Both models are now available in Azure AI Foundry, Hugging Face, and the NVIDIA API Catalog. In the NVIDIA API Catalog, developers can explore the full potential of Phi-4-multimodal and experiment and innovate with ease.

What is Phi-4-multimodal?

Phi-4-multimodal marks a new milestone in Microsoft’s AI development as our first multimodal language model. Continuous improvement lies at the core of innovation, and that starts with listening to our customers. In direct response to customer feedback, we’ve developed Phi-4-multimodal, a 5.6B parameter model that seamlessly integrates speech, vision, and text processing into a single, unified architecture.

By leveraging advanced cross-modal learning techniques, this model enables more natural and context-aware interactions, allowing devices to understand and reason across multiple input modalities at once. Whether interpreting spoken language, analyzing images, or processing textual information, it delivers highly efficient, low-latency inference, all while optimizing for on-device execution and reduced computational overhead.

Natively built for multimodal experiences

Phi-4-multimodal is a single model with a mixture of LoRAs that covers speech, vision, and language, all processed simultaneously within the same representation space. The result is a single, unified model that handles text, audio, and visual inputs, with no need for complex pipelines or separate models for different modalities.
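To make the "mixture of LoRAs" idea concrete, the sketch below shows one frozen base weight matrix shared by all inputs, plus a small low-rank adapter (LoRA, y = Wx + B(Ax)) selected by the input's modality. It is a toy illustration, not Microsoft's implementation. Several things are assumptions made for illustration: the class name `MixtureOfLoRALinear`, the modality tags, the tiny dimensions, and the choice that text uses the base weights alone.

```python
import random

# Toy sizes for illustration only; not Phi-4-multimodal's real dimensions.
D_MODEL = 8
RANK = 2


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class MixtureOfLoRALinear:
    """Hypothetical layer: a shared, frozen base weight W plus one low-rank
    adapter (A: d_model -> rank, B: rank -> d_model) per non-text modality."""

    def __init__(self, d_model, rank, modalities, seed=0):
        rng = random.Random(seed)
        self.base = rand_matrix(d_model, d_model, rng)  # shared by every modality
        self.adapters = {
            m: (rand_matrix(rank, d_model, rng),  # A projects down to `rank`
                rand_matrix(d_model, rank, rng))  # B projects back up
            for m in modalities
        }

    def forward(self, x, modality=None):
        y = matvec(self.base, x)  # W x
        if modality is None:      # assumed text path: base weights only
            return y
        a, b = self.adapters[modality]
        return add(y, matvec(b, matvec(a, x)))  # W x + B (A x)
```

Each adapter adds only 2 × rank × d_model parameters (32 here), compared with d_model² (64) for the base matrix. This is why per-modality adapters are cheap relative to separate per-modality models.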

Phi-4-multimodal is built on a new architecture that enhances efficiency and scalability. It incorporates a larger vocabulary for improved processing, supports multilingual capabilities, and integrates language reasoning with multimodal inputs. All of this is achieved within a powerful, compact, highly efficient model that is suited for deployment on devices and edge computing platforms.

This model represents a step forward for the Phi family of models, offering enhanced performance in a small package. Whether you’re looking for advanced AI capabilities on mobile devices or edge systems, Phi-4-multimodal provides a high-capability option that is both efficient and versatile.

Unlocking new capabilities

With its broader range of capabilities and flexibility, Phi-4-multimodal opens exciting new possibilities for app developers, businesses, and industries looking to harness the power of AI in innovative ways. The future of multimodal AI is here, and it’s ready to transform your applications.

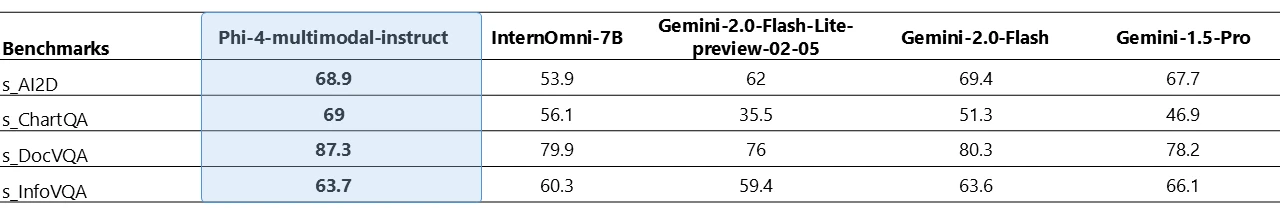

Phi-4-multimodal can process visual and audio input together. The following table shows model quality on chart/table understanding and document reasoning tasks when the query about the visual content is given as synthetic speech. Compared with other state-of-the-art omni models that accept both audio and visual signals as input, Phi-4-multimodal achieves much stronger performance on multiple benchmarks.

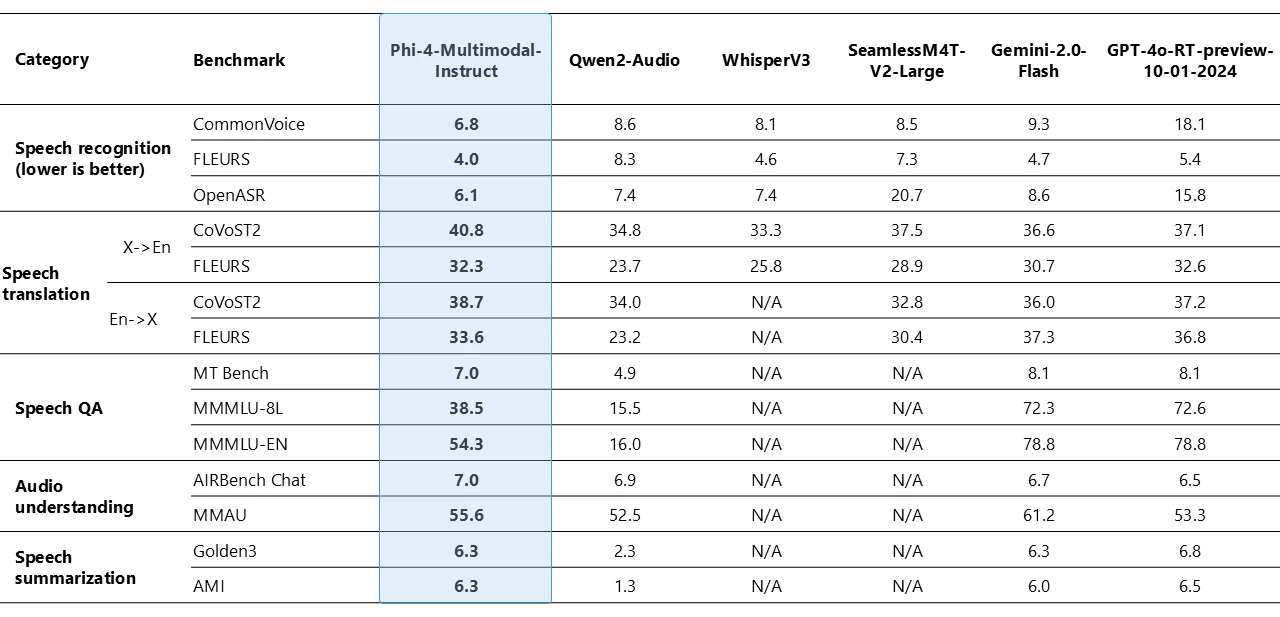

Phi-4-multimodal has demonstrated remarkable capabilities in speech-related tasks, emerging as a leading open model in multiple areas. It outperforms specialized models like WhisperV3 and SeamlessM4T-v2-Large in both automatic speech recognition (ASR) and speech translation (ST). The model has claimed the top position on the Hugging Face OpenASR leaderboard with a word error rate of 6.14%, surpassing the previous best of 6.5% as of February 2025. It is also among the few open models to successfully implement speech summarization, reaching performance comparable to GPT-4o.

On speech question answering (QA), the model still trails closed models such as Gemini-2.0-Flash and GPT-4o-realtime-preview. Its smaller size leaves less capacity to retain factual knowledge for QA. Work is underway to improve this capability in future iterations.
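Word error rate (WER), the ASR metric quoted above, counts the minimum number of word substitutions, deletions, and insertions needed to turn the model's transcript into the reference, divided by the number of reference words. The minimal Python sketch below computes it with a word-level Levenshtein distance. It omits the text normalization and multi-dataset averaging that leaderboards typically apply before reporting a single figure, so its numbers are not directly comparable to the 6.14% above.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / len(reference words),
    computed with a word-level Levenshtein (edit) distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dist[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost == 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# "the" is deleted and "lights" becomes "light": 2 edits over 5 reference words.
print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))  # 0.4
```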

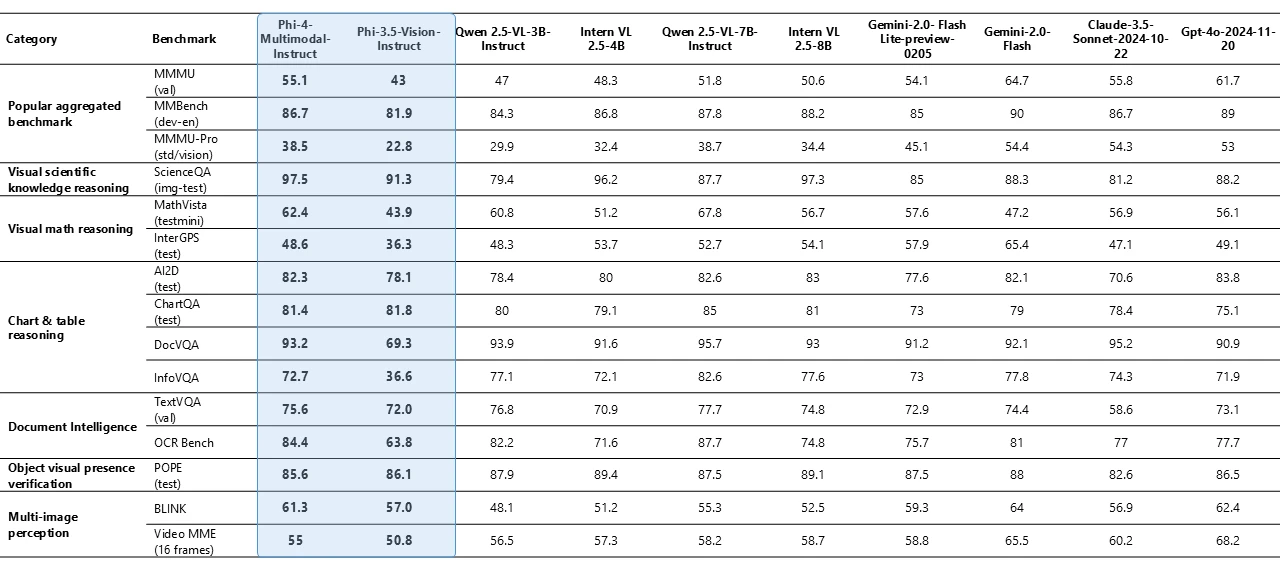

With only 5.6B parameters, Phi-4-multimodal demonstrates remarkable vision capabilities across various benchmarks, most notably strong performance on mathematical and science reasoning. Despite its smaller size, the model remains competitive on general multimodal tasks such as document and chart understanding, Optical Character Recognition (OCR), and visual science reasoning. On these tasks it matches or exceeds closed models like Gemini-2-Flash-lite-preview and Claude-3.5-Sonnet.

What is Phi-4-mini?

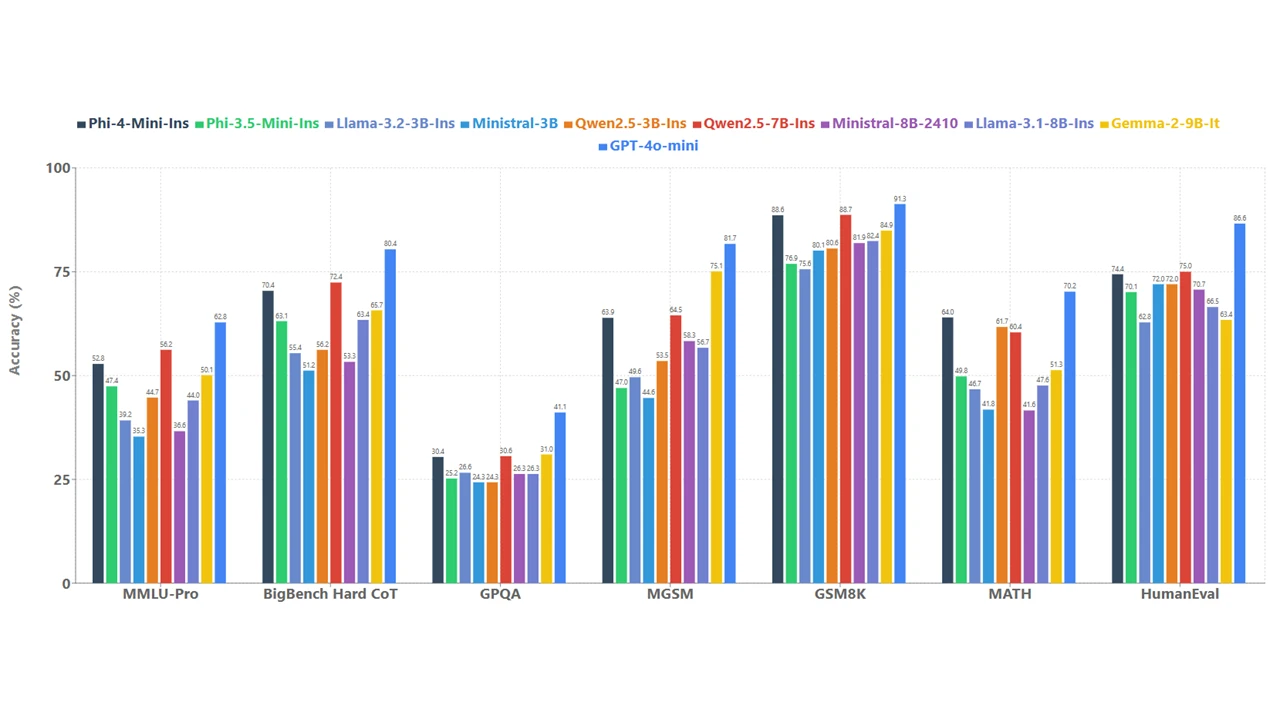

Phi-4-mini is a 3.8B parameter dense, decoder-only transformer designed for speed and efficiency. It features grouped-query attention, a 200,000-token vocabulary, and shared input-output embeddings. Despite its compact size, it continues to outperform larger models on text-based tasks, including reasoning, math, coding, instruction following, and function calling. It supports sequences of up to 128,000 tokens and delivers high accuracy and scalability, making it a powerful solution for advanced AI applications.
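Grouped-query attention (GQA) is one reason a model like this can stay fast at long context lengths. Several query heads share a single key/value head, so fewer K/V tensors have to be stored in the KV cache. The minimal, dependency-free Python sketch below shows the head-to-group mapping with causal masking. The function name and the toy head counts in the example are illustrative assumptions, not Phi-4-mini's actual configuration.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def grouped_query_attention(queries, keys, values):
    """queries: [n_q_heads][seq_len][head_dim]
    keys, values: [n_kv_heads][seq_len][head_dim]
    Query heads are split into n_kv_heads groups; every head in a group reads
    the same K/V head. A causal mask keeps position t from seeing t+1 onward."""
    n_q, n_kv = len(queries), len(keys)
    assert n_q % n_kv == 0, "query heads must divide evenly into KV groups"
    group_size = n_q // n_kv

    outputs = []
    for h in range(n_q):
        kv = h // group_size  # index of the shared K/V head for query head h
        k_head, v_head = keys[kv], values[kv]
        scale = 1.0 / math.sqrt(len(k_head[0]))
        head_out = []
        for t, q in enumerate(queries[h]):
            # Causal attention: scores only over positions 0..t.
            weights = softmax([dot(q, k_head[s]) * scale for s in range(t + 1)])
            head_out.append([
                sum(w * v_head[s][d] for s, w in enumerate(weights))
                for d in range(len(v_head[0]))
            ])
        outputs.append(head_out)
    return outputs
```

With 4 query heads and 2 K/V heads, only 2 K/V heads per layer need to be cached instead of 4, halving the KV cache compared with standard multi-head attention of the same width.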

To assess model quality, we compare Phi-4-mini with a set of models across a variety of benchmarks, as shown in Figure 4.

Function calling, instruction following, long context, and reasoning are powerful capabilities. Together they enable small language models like Phi-4-mini to access external knowledge and functionality despite their limited capacity. Through a standardized protocol, function calling lets the model integrate seamlessly with structured programming interfaces. When a user makes a request, Phi-4-mini can:

- reason through the query,
- identify and call relevant functions with appropriate parameters,
- receive the function outputs, and
- incorporate those results into its response.

This creates an extensible agentic system, where the model’s capabilities can be extended by connecting it to external tools, application programming interfaces (APIs), and data sources through well-defined function interfaces. The following example simulates a smart home control agent with Phi-4-mini.
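The application side of that request, call, and result loop can be sketched in a few lines of Python. This is a minimal illustration, not the smart home demo itself and not Phi-4-mini's official tool-calling format. The tool names, the in-memory `HOME` state, and the JSON shape of the model's tool calls (`{"name": ..., "arguments": {...}}`) are all assumptions, and the model's output is hard-coded rather than generated.

```python
import json

# Hypothetical in-memory smart-home state; not a real device API.
HOME = {"living_room": {"lights": "off", "temperature_c": 20.0}}


def set_lights(room: str, state: str) -> dict:
    """Hypothetical tool: switch a room's lights on or off."""
    if state not in ("on", "off"):
        raise ValueError(f"unsupported light state: {state}")
    HOME[room]["lights"] = state
    return {"room": room, "lights": state}


def set_temperature(room: str, celsius: float) -> dict:
    """Hypothetical tool: set a room's thermostat target."""
    HOME[room]["temperature_c"] = float(celsius)
    return {"room": room, "temperature_c": float(celsius)}


# Registry: "description" and "parameters" are what an app would describe to the
# model in its prompt; "fn" is the Python function the app actually runs.
TOOLS = {
    "set_lights": {
        "fn": set_lights,
        "description": "Turn the lights in a room on or off.",
        "parameters": {"room": "string", "state": "'on' or 'off'"},
    },
    "set_temperature": {
        "fn": set_temperature,
        "description": "Set the target temperature of a room in Celsius.",
        "parameters": {"room": "string", "celsius": "number"},
    },
}


def dispatch(tool_calls_json: str) -> list:
    """Execute each {"name": ..., "arguments": {...}} call the model emitted and
    collect results to send back to the model for its final answer."""
    results = []
    for call in json.loads(tool_calls_json):
        tool = TOOLS.get(call["name"])
        if tool is None:
            results.append({"name": call["name"], "error": "unknown tool"})
            continue
        try:
            output = tool["fn"](**call.get("arguments", {}))
            results.append({"name": call["name"], "output": output})
        except (KeyError, TypeError, ValueError) as exc:
            # Unknown room, wrong argument names, or invalid value: report it
            # back to the model instead of crashing the agent loop.
            results.append({"name": call["name"], "error": str(exc)})
    return results


# Hard-coded stand-in for what a model might emit for
# "It's dark and chilly in the living room."
model_output = json.dumps([
    {"name": "set_lights", "arguments": {"room": "living_room", "state": "on"}},
    {"name": "set_temperature", "arguments": {"room": "living_room", "celsius": 22}},
])
results = dispatch(model_output)
```

Returning errors as data rather than raising lets the model see what went wrong and retry or explain, which keeps a multi-step agent loop from stopping on the first bad call.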

At Headwaters, we’re leveraging fine-tuned SLMs like Phi-4-mini at the edge to enhance operational efficiency and deliver innovative solutions. Edge AI performs well even in environments with unstable network connections or in fields where confidentiality is paramount. This makes it highly promising for driving innovation across industries, including anomaly detection in manufacturing, rapid diagnostic support in healthcare, and better customer experiences in retail. We look forward to delivering new solutions in the AI agent era with Phi-4-mini.

– Masaya Nishimaki, Company Director, Headwaters Co., Ltd.

Customization and cross-platform

Because of their small size, Phi-4-mini and Phi-4-multimodal can be used in compute-constrained inference environments. They can run on-device, especially when further optimized with ONNX Runtime for cross-platform availability. Their lower computational needs make them a lower-cost option with much better latency. The longer context window lets them take in and reason over large text content such as documents, web pages, and code. Phi-4-mini and Phi-4-multimodal demonstrate strong reasoning and logic capabilities, making them good candidates for analytical tasks. Their small size also makes fine-tuning and customization easier and more affordable. The table below shows examples of fine-tuning scenarios with Phi-4-multimodal.

| Task | Base model | Fine-tuned model | Compute |
|---|---|---|---|
| Speech translation from English to Indonesian | 17.4 | 35.5 | 3 hours, 16 A100 GPUs |
| Medical visual question answering | 47.6 | 56.7 | 5 hours, 8 A100 GPUs |

For more information about customization, or to learn more about the models, take a look at the Phi Cookbook on GitHub.

How can these models be used in action?

These models are designed to handle complex tasks efficiently, making them ideal for edge scenarios and compute-constrained environments. With the new capabilities that Phi-4-multimodal and Phi-4-mini bring, the uses of Phi continue to expand. Phi models are being embedded into AI ecosystems and used to explore a range of use cases across industries.

Language models are powerful reasoning engines. Integrating small language models like Phi into Windows allows us to maintain efficient compute capabilities and opens the door to a future of continuous intelligence built into all your apps and experiences. Copilot+ PCs will build on Phi-4-multimodal’s capabilities, delivering the power of Microsoft’s advanced SLMs without the energy drain. This integration will enhance productivity, creativity, and education-focused experiences, and it will become a standard part of our developer platform.

– Vivek Pradeep, Vice President, Distinguished Engineer, Windows Applied Sciences

- Embedded directly into your smart device: Phone manufacturers that integrate Phi-4-multimodal directly into a smartphone could enable it to process and understand voice commands, recognize images, and interpret text seamlessly. Users could benefit from advanced features like real-time language translation, enhanced image and video analysis, and intelligent personal assistants that understand and respond to complex queries. This would elevate the user experience by providing powerful AI capabilities directly on the device, with low latency and high efficiency.

- On the road: Imagine an automotive company integrating Phi-4-multimodal into its in-car assistant systems. The model could enable vehicles to understand and respond to voice commands, recognize driver gestures, and analyze visual input from cameras. For instance, it could enhance driver safety by detecting drowsiness through facial recognition and issuing real-time alerts. It could also offer seamless navigation assistance, interpret road signs, and provide contextual information. The result would be a more intuitive and safer driving experience, both when connected to the cloud and offline when connectivity isn’t available.

- Multilingual financial services: Imagine a financial services company integrating Phi-4-mini to automate complex financial calculations, generate detailed reports, and translate financial documents into multiple languages. For instance, the model could assist analysts by performing the intricate mathematical computations required for risk assessment, portfolio management, and financial forecasting. It could also translate financial statements, regulatory documents, and client communications into various languages, improving client relations globally.

Microsoft’s commitment to security and safety

Azure AI Foundry provides users with a robust set of capabilities to help organizations measure, mitigate, and manage AI risks across the AI development lifecycle, for both traditional machine learning and generative AI applications. Azure AI evaluations in AI Foundry enable developers to iteratively assess the quality and safety of models and applications, using built-in and custom metrics to inform mitigations.

Both models underwent security and safety testing by our internal and external security experts, using strategies crafted by the Microsoft AI Red Team (AIRT). These methods, developed over previous Phi models, incorporate global perspectives and native speakers of all supported languages. They span areas such as cybersecurity, national security, fairness, and violence, and they address current trends through multilingual probing. Using AIRT’s open-source Python Risk Identification Toolkit (PyRIT) and manual probing, red teamers conducted single-turn and multi-turn attacks. AIRT operated independently from the development teams and continuously shared insights with the model team. This approach assessed the new AI security and safety landscape introduced by our latest Phi models, ensuring the delivery of high-quality capabilities.

Check out the model cards for Phi-4-multimodal and Phi-4-mini, and the technical paper, for an outline of recommended uses and limitations for these models.

Learn more about Phi-4

We invite you to explore the possibilities of Phi-4-multimodal and Phi-4-mini in Azure AI Foundry, Hugging Face, and the NVIDIA API Catalog, with a full multimodal experience. We can’t wait to hear your feedback and see the incredible things you’ll accomplish with our new models.