GenAI hit the scene fast and furious when ChatGPT was launched on November 30, 2022. The quest for bigger and better models has changed the hardware, data center, and power landscape, and foundational models are still under rapid development. One of the challenges in HPC and technical computing is finding where GenAI “fits in” and, more importantly, “what it all means” in terms of future discoveries.

Indeed, the resource-straining market effects have mostly been due to creating and training large AI models. The expected inference market (deploying the models) may require different hardware and is expected to be much larger than the training market.

What about HPC?

Aside from making GPUs scarce and expensive (even in the cloud), these rapid changes have raised many questions in the HPC community. For instance:

- How can HPC leverage GenAI? (Can it?)

- How does it fit with traditional HPC tools and applications?

- Can GenAI write code for HPC applications?

- Can GenAI reason about science and technology?

Answers to these and other questions are forthcoming. Many organizations are working on these issues, including the Trillion Parameter Consortium (TPC): Generative AI for Science and Engineering.

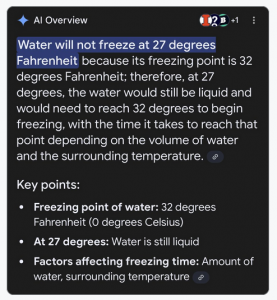

What has been reported, however, is that even with all the improvements in LLMs, they continue, on occasion, to provide inaccurate or wrong answers (euphemistically called “hallucinations”). Consider the following search prompt and subsequent AI-generated answer. Someone asked an elementary-school-level chemistry question, “Will water freeze at 27 degrees F?” and the answer is comically wrong and seems subject to faulty reasoning. If GenAI is to work in science and technology, the models must be improved.

Maybe more data will help

The “intelligence” of the initial LLMs was improved by including more data. As a result, models became bigger, requiring more resources and computation time. As measured by some emerging benchmarks, the “smartness” of the models did improve, but there is an issue with this approach. Scaling models means finding more data, and in a simple sense, the model makers have already scraped a large amount of the internet into their models. The success of LLMs has also created more internet content in the form of automated news articles, summaries, social media posts, creative writing, etc.

There are no exact figures; estimates are that 10–15% of the internet’s textual content today has been created by AI. Predictions indicate that by 2030, AI-generated content might comprise over 50% of the internet’s textual data.

However, there are concerns about LLMs eating their own data. It is generally known that training LLMs on data generated by other AI models leads to a degradation in performance over successive generations, a condition called model collapse. Indeed, models can hallucinate web content (“No, water will not freeze at 27F”), which may become input to a new model, and so on.
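The loss of information across generations can be illustrated with a toy resampling experiment. This is only a loose analogy and not a model of how LLMs are trained: each "generation" here simply draws its training set, with replacement, from the previous generation's output. The function name `resample_generations` and the `fact-N` items are hypothetical, chosen for illustration.

```python
import random

def resample_generations(corpus, generations, rng):
    """Track how many distinct items survive when each generation
    is built only from samples of the previous generation."""
    distinct_counts = [len(set(corpus))]
    current = list(corpus)
    for _ in range(generations):
        # A new generation can only reproduce items it actually saw,
        # so anything not sampled is lost for good.
        current = [rng.choice(current) for _ in range(len(current))]
        distinct_counts.append(len(set(current)))
    return distinct_counts

rng = random.Random(0)  # fixed seed so the run is repeatable
original = [f"fact-{i}" for i in range(200)]  # hypothetical "human-written" items
counts = resample_generations(original, generations=10, rng=rng)
print(counts)
```

The number of distinct items can never increase from one generation to the next, which is the toy version of a model gradually forgetting the rare parts of its original data.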

In addition, the recent release of report-generating tools like OpenAI Deep Research and Google’s Gemini Deep Research makes it easy for researchers to create papers and documents by suggesting topics to research tools. Agents such as Deep Research are designed to conduct extensive research, synthesize information from various web sources, and generate comprehensive reports that inevitably will find their way into training data for the next generation of LLMs.

Wait, don’t we create our own data?

HPC creates piles of data. Traditional HPC crunches numbers to evaluate mathematical models using input data and parameters. In one sense, the resulting data are unique and original and offer the following advantages:

- Clean and complete – no hallucinations, no missing data

- Tunable – we can determine the shape of the data

- Accurate – often tested against experiment

- Almost limitless – generate many scenarios

There seems to be no tail to eat with science and technical data. A good example is the Microsoft Aurora (not to be confused with Argonne’s Aurora exascale system) data-based weather model results (covered on HPCwire).

Using this model, Microsoft asserts that Aurora’s training on more than a million hours of meteorological and climatic data has resulted in a 5,000-fold increase in computational speed compared to numerical forecasting. The AI methods are agnostic about which data sources are used to train them. Scientists can train them on traditional simulation data, on real observation data, or on a combination of both. According to the researchers, the Aurora results indicate that increasing the data set diversity and also the model size can improve accuracy. Data sizes vary from a few hundred terabytes up to a petabyte.

Large Quantitative Models: LQMs

The key to creating LLMs is converting words or tokens to vectors and training using lots of matrix math (GPUs) to create models representing relationships between tokens. Using inference, the models predict the next token while answering questions.
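Next-token prediction can be sketched with a toy bigram counter. This is a deliberate simplification and an assumption for illustration only: it uses plain counts instead of the vectors and matrix math an LLM relies on, and the three-sentence corpus and the names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each token follows another (a stand-in for training)."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, token):
    """Return the most frequently observed next token, or None if unseen."""
    candidates = follows.get(token.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical toy corpus; tokens are lowercased during training.
corpus = [
    "water freezes at 32 F",
    "water freezes below 32 F",
    "water boils at 212 F",
]
model = train_bigram(corpus)
print(predict_next(model, "water"))
```

The sketch captures only the idea that the prediction reflects relationships between tokens seen in training; a token the model never saw yields no prediction at all.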

We already have numbers, vectors, and matrices in science and engineering! We don’t want to predict the next word like Large Language Models; we want to predict numbers using Large Quantitative Models, or LQMs.

Building an LQM is more difficult than building an LLM and requires a deep understanding of the system being modeled (AI), access to large amounts of data (Big Data), and sophisticated computational tools (HPC). LQMs are built by interdisciplinary teams of scientists, engineers, and data analysts who work together on models. Once complete, LQMs can be used in various ways. They can be run on supercomputers to simulate different scenarios (i.e., HPC acceleration) and allow users to explore “what if” questions and predict outcomes under various conditions faster than with traditional numeric-based models.
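The idea of answering "what if" questions from a data model instead of rerunning a numeric model can be sketched at toy scale. The example below is an assumption for illustration, not how any production LQM is built: the "numeric model" is an Euler integration of dy/dt = -k*y, and the "data model" is just piecewise-linear interpolation over tabulated numeric results. The names `numeric_model` and `build_data_model` are hypothetical.

```python
from bisect import bisect_right

def numeric_model(k, steps=10_000):
    """Traditional numeric model: Euler integration of dy/dt = -k*y from t=0 to t=1."""
    y, dt = 1.0, 1.0 / steps
    for _ in range(steps):
        y += dt * (-k * y)
    return y

def build_data_model(ks):
    """'Train' a data model by tabulating numeric-model output at sample inputs."""
    table = [(k, numeric_model(k)) for k in sorted(ks)]
    xs = [k for k, _ in table]
    ys = [y for _, y in table]

    def predict(k):
        # Piecewise-linear interpolation between the two nearest training points.
        if not xs[0] <= k <= xs[-1]:
            raise ValueError("input outside the training range")
        i = min(max(bisect_right(xs, k), 1), len(xs) - 1)
        x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
        return y0 + (y1 - y0) * (k - x0) / (x1 - x0)

    return predict

training_inputs = [i / 10 for i in range(21)]  # k = 0.0, 0.1, ..., 2.0
data_model = build_data_model(training_inputs)

k = 0.75  # a "what if" input that was not in the training set
print(numeric_model(k), data_model(k))
```

Each call to `data_model` is a table lookup and one interpolation, while each call to `numeric_model` repeats the full time-stepping loop. The data model is also only trusted inside the range it was trained on, which is why out-of-range inputs raise an error here.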

An example of an LQM-based company is SandboxAQ (covered in AIwire), which was spun out of Google in March 2022.

Their total funding is reported as $800 million, and they plan to focus on cryptography, quantum sensors, and LQMs. Their LQM efforts focus on life sciences, energy, chemicals, and financial services.

But …, data management

Remember BIG DATA? It never went away and is getting bigger. And it can be one of the biggest challenges to AI model generation. As reported in BigDATAwire, a recent S&P Global Market Intelligence report found that “The most frequently cited technological inhibitors to AI/ML deployments are storage and data management (35%), significantly greater than computing (26%).”

In addition, it is computationally feasible to perform AI and ML processing without GPUs; however, it is nearly impossible to do so without proper high-performance and scalable storage. A little-known fact about data science is that 70%–80% of the time spent on data science projects goes to what is commonly known as Data Engineering or Data Analytics (the time not spent running models).

For a fuller understanding of model storage needs, Glen Lockwood provides an excellent description of the AI model storage and data management process in a recent blog post.

Andrew Ng’s AI Virtuous Cycle

If one considers Andrew Ng’s Virtuous Cycle of AI, which describes how companies use AI to build better products, the advantage of using AI becomes clear.

The cycle, as illustrated in the figure, has the following steps:

- Starts with user activity, which generates data on user behavior

- Data must be managed: curated, tagged, archived, stored, moved

- Data is run through AI, which defines user habits and propensities

- Allows organizations to build better products

- Attracts more users, which generates more data

- and the cycle continues.

The framework of the AI Virtuous Cycle illustrates the self-reinforcing loop in artificial intelligence where improved algorithms lead to better data, which in turn enhances the algorithms further. This cycle explains how advancements in one area of AI can accelerate progress in others, creating a Virtuous Cycle of continuous improvement.

The Virtuous Cycle for scientific and technical computing

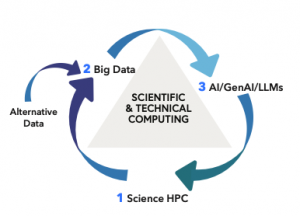

Similar to the Virtuous Cycle for product creation, a Virtuous Cycle for scientific and technical computing has developed across many domains. As described in the image, the virtuous cycle includes HPC, Big Data, and AI in a positive feedback loop. The cycle can be described as follows:

- Scientific Research and HPC: Grand-challenge science requires HPC capability and has the capacity to generate a very high volume of data.

- Data Feeds AI Models: Data management is critical. High volumes of data must be managed, cleaned, curated, archived, sourced, and stored.

- “Data” Models Improve Research: Armed with insights from the data, AI models/LLMs/LQMs analyze patterns, learn from examples, and make predictions. HPC systems are required for training, inferencing, and predicting new data for Step 1.

- Lather, Rinse, Repeat

Using this Virtuous Cycle, users benefit from these key indicators:

- Positive Feedback Loops: Just like viral growth, positive feedback loops drive AI success.

- Improvements lead to more usage, which in turn fuels further improvements.

- Network Effects: The more users, the better the AI models become. A strong user base reinforces the cycle.

- Strategic Asset: AI-driven insights become a strategic asset. Scientific research that harnesses this cycle delivers a competitive edge.

The AI Virtuous Cycle is not merely a conceptual framework; in practice, it is actively reshaping the digital research environment. As research organizations embrace and understand AI, they start to realize the benefits of a continuous cycle of discovery, innovation, and improvement, perpetually propelling themselves forward.

The new HPC accelerator

HPC is constantly looking for ways to accelerate performance. While not a specific piece of hardware or software, the Virtuous AI Cycle viewed as a whole is a massive acceleration leap for science and technology. And we are at the beginning of adoption.

This new era of HPC will be built on LLMs and LQMs (and other AI tools) that provide acceleration using “data models” derived from numerical data and real data. Traditional, verified, tested HPC “numeric models” will be able to provide raw training data and possibly help validate the results of data models. As the cycle accelerates, creating more data and using Big Data tools will become essential for training the next generation of models. Finally, Quantum Computing, as covered by QCwire, will continue to mature and further accelerate this cycle.

The approach is not without questions and challenges. The accelerating cycle will create further pressure on resources and sustainability solutions. Most importantly, will the Virtuous Cycle for scientific and technical computing eat its tail?

Keeping you in the virtuous loop

Tabor Communications offers publications that provide industry-leading coverage in HPC, Quantum Computing, Big Data, and AI. It is no coincidence that these are components of the Virtuous Cycle for scientific and technical computing. Our coverage has been converging on the Virtuous Cycle for many years. We plan to deliver HPC, Quantum, Big Data, and AI in the context of the Virtuous Cycle and help our readers benefit from these rapid changes that are accelerating science and technology.