Understanding and evaluating your artificial intelligence (AI) system’s predictions can be difficult. AI and machine learning (ML) classifiers are subject to limitations caused by a variety of factors, including concept or data drift, edge cases, the inherent uncertainty of ML training outcomes, and emerging phenomena unaccounted for in training data. These types of factors can lead to bias in a classifier’s predictions, compromising decisions made based on those predictions.

The SEI has developed a new AI Robustness (AIR) tool to help programs better understand and improve their AI classifier performance. In this blog post, we explain how the AIR tool works, provide an example of its use, and invite you to work with us if you want to use the AIR tool in your organization.

Challenges in Measuring Classifier Accuracy

There is little doubt that AI and ML tools are some of the most powerful tools developed in the last several decades. They are revolutionizing modern science and technology in the fields of prediction, automation, cybersecurity, intelligence gathering, training and simulation, and object detection, to name just a few. This great power comes with responsibility, however. As a community, we need to be mindful of the idiosyncrasies and weaknesses associated with these tools and ensure we are taking them into account.

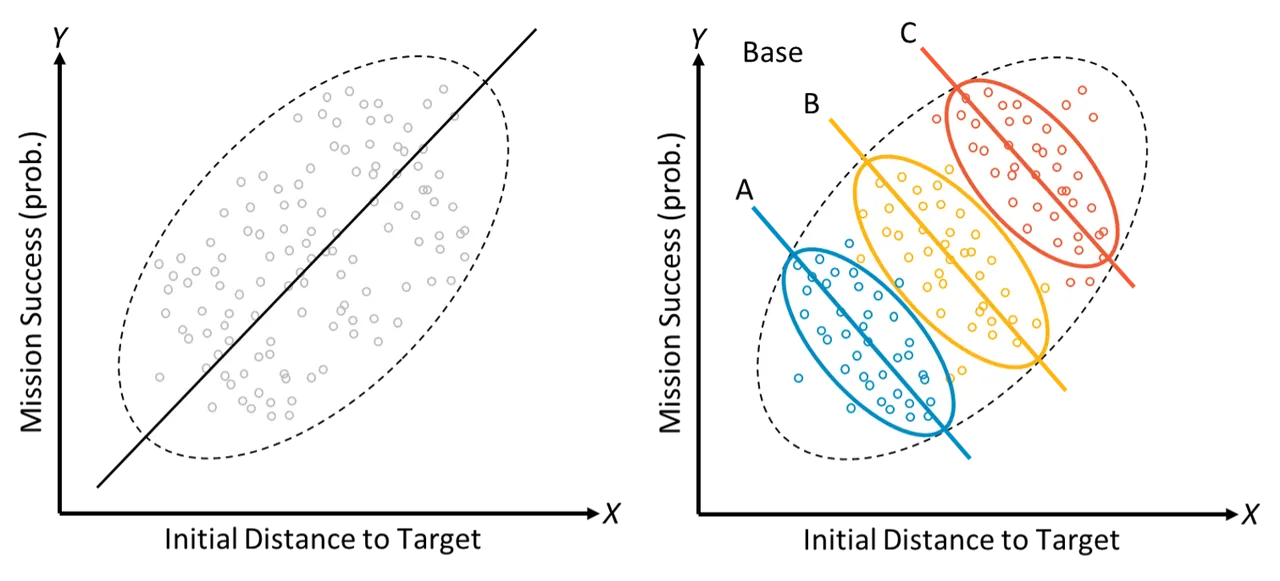

One of the greatest strengths of AI and ML is the ability to effectively recognize and model correlations (real or imagined) within the data, leading to modeling capabilities that in many areas excel at prediction beyond the techniques of classical statistics. Such heavy reliance on correlations within the data, however, can easily be undermined by data or concept drift, evolving edge cases, and emerging phenomena. This can lead to models that leave other explanations unexplored, fail to account for key drivers, or even attribute causes to the wrong factors. Figure 1 illustrates this: at first glance (left) one might reasonably conclude that the probability of mission success increases as initial distance to the target grows. However, if one adds a third variable for base location (the colored ovals on the right of Figure 1), the relationship reverses because base location is a common cause of both success and distance. This is an example of a statistical phenomenon known as Simpson’s Paradox, where a trend that appears in groups of data reverses or disappears after the groups are combined. This example is just one illustration of why it is critical to understand sources of bias in one’s data.

Figure 1: An illustration of Simpson’s Paradox
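The reversal in Figure 1 can be reproduced with a few lines of arithmetic. The sketch below uses made-up mission counts (not data from the SEI study) for two hypothetical bases, A and B. Within each base, success drops as distance grows, but because base B launches mostly long-distance missions and also succeeds more often, the pooled rates show the opposite trend.

```python
# Hypothetical mission counts: (base, distance) -> (successes, missions).
COUNTS = {
    ("A", "near"): (40, 80),
    ("A", "far"): (8, 20),
    ("B", "near"): (18, 20),
    ("B", "far"): (64, 80),
}


def success_rate(base, distance):
    """Success rate within a single base and distance group."""
    successes, missions = COUNTS[(base, distance)]
    return successes / missions


def pooled_rate(distance):
    """Success rate for one distance group after ignoring base location."""
    successes = sum(s for (_, d), (s, _) in COUNTS.items() if d == distance)
    missions = sum(n for (_, d), (_, n) in COUNTS.items() if d == distance)
    return successes / missions


for base in ("A", "B"):
    print(f"base {base}: near {success_rate(base, 'near'):.2f}, "
          f"far {success_rate(base, 'far'):.2f}")
print(f"pooled: near {pooled_rate('near'):.2f}, far {pooled_rate('far'):.2f}")
```

With these counts, base A drops from 0.50 to 0.40 and base B from 0.90 to 0.80 as distance grows, yet the pooled rate rises from 0.58 to 0.72.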

To be effective in critical problem areas, classifiers also need to be robust: they need to be able to produce accurate results over time across a range of scenarios. When classifiers become untrustworthy due to emerging data (new patterns or distributions in the data that were not present in the original training set) or concept drift (when the statistical properties of the outcome variable change over time in unforeseen ways), they may become less likely to be used, or worse, may misguide a critical operational decision. Typically, to evaluate a classifier, one compares its predictions on a set of data to its expected behavior (ground truth). For AI and ML classifiers, the data originally used to train a classifier may be inadequate to yield reliable future predictions due to changes in context, threats, the deployed system itself, and the scenarios under consideration. Thus, there is no source of reliable ground truth over time.

Further, classifiers are often unable to extrapolate reliably to data they have not yet seen when they encounter unexpected or unfamiliar contexts that were not aligned with the training data. As a simple example, if you are planning a flight mission from a base in a warm environment but your training data only includes cold-weather flights, predictions about fuel requirements and system health might not be accurate. For these reasons, it is critical to take causation into account. Understanding the causal structure of the data can help identify the various complexities associated with traditional AI and ML classifiers.

Causal Learning at the SEI

Causal learning is a field of statistics and ML that focuses on defining and estimating cause and effect in a systematic, data-driven way, aiming to uncover the underlying mechanisms that generate the observed outcomes. While ML produces a model that can be used for prediction from new data, causal learning differs in its focus on modeling, or discovering, the cause-effect relationships inferable from a dataset. It answers questions such as:

- How did the data come to be the way it is?

- What system or context attributes are driving which outcomes?

Causal learning helps us formally answer the question “does X cause Y, or is there some other reason why they always seem to occur together?” For example, let’s say we have two variables, X and Y, that are clearly correlated. Humans historically tend to look at time-correlated events and assign causation. We might reason: first X happens, then Y happens, so clearly X causes Y. But how do we test this formally? Until recently, there was no formal methodology for testing causal questions like this. Causal learning allows us to build causal diagrams, account for bias and confounders, and estimate the magnitude of an effect even in unexplored scenarios.
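A small worked example makes the question concrete. The sketch below assumes a hypothetical model in which a third variable Z drives both X and Y, and X has no effect on Y at all (EFFECT = 0). Exact probabilities are computed with Python’s fractions module, so no sampling noise is involved. The naive comparison P(Y=1 | X=1) - P(Y=1 | X=0) shows a clear association, while adjusting for Z (averaging the within-stratum differences over P(Z)) recovers the true effect of zero.

```python
from fractions import Fraction as F

# Hypothetical model: Z -> X, Z -> Y, and X has no direct effect on Y.
P_Z1 = F(1, 2)                            # P(Z=1)
P_X1_GIVEN_Z = {0: F(1, 5), 1: F(4, 5)}   # P(X=1 | Z=z)
P_Y1_BASE = {0: F(3, 10), 1: F(7, 10)}    # P(Y=1 | X=0, Z=z)
EFFECT = F(0)                             # true causal effect of X on Y


def p_z(z):
    return P_Z1 if z == 1 else 1 - P_Z1


def p_x(x, z):
    return P_X1_GIVEN_Z[z] if x == 1 else 1 - P_X1_GIVEN_Z[z]


def p_y1(x, z):
    """P(Y=1 | X=x, Z=z) under the model above."""
    return P_Y1_BASE[z] + EFFECT * x


def naive_difference():
    """P(Y=1 | X=1) - P(Y=1 | X=0): the purely correlational view."""
    def p_y1_given_x(x):
        joint = sum(p_z(z) * p_x(x, z) * p_y1(x, z) for z in (0, 1))
        marginal = sum(p_z(z) * p_x(x, z) for z in (0, 1))
        return joint / marginal
    return p_y1_given_x(1) - p_y1_given_x(0)


def adjusted_difference():
    """Adjust for Z: sum_z P(z) * [P(Y=1 | X=1, z) - P(Y=1 | X=0, z)]."""
    return sum(p_z(z) * (p_y1(1, z) - p_y1(0, z)) for z in (0, 1))


print("naive:", naive_difference())        # 6/25
print("adjusted:", adjusted_difference())  # 0
```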

Recent SEI research has applied causal learning to determining how robust AI and ML system predictions are in the face of conditions and other edge cases that are extreme relative to the training data. The AIR tool, built on the SEI’s body of work in causal learning, provides a new capability to evaluate and improve classifier performance that, with the help of our partners, will be ready to be transitioned to the DoD community.

How the AIR Tool Works

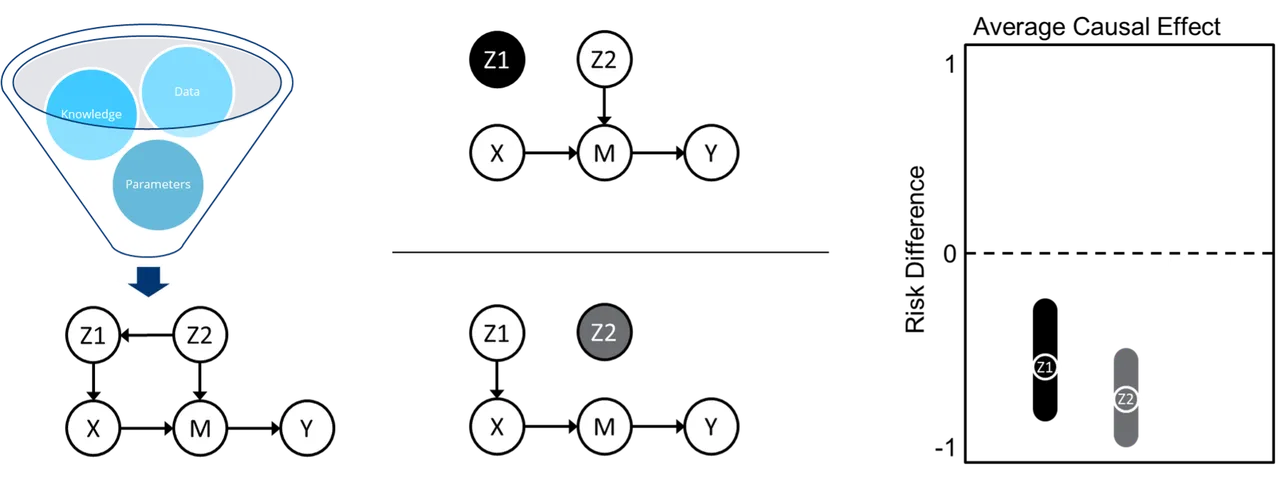

AIR is an end-to-end causal inference tool that builds a causal graph of the data, performs graph manipulations to identify key sources of potential bias, and uses state-of-the-art ML algorithms to estimate the average causal effect of a scenario on an outcome, as illustrated in Figure 2. It does this by combining three disparate, and often siloed, fields from across the causal learning landscape: causal discovery for building causal graphs from data, causal identification for identifying potential sources of bias in a graph, and causal estimation for calculating causal effects given a graph. Running the AIR tool requires minimal manual effort: a user uploads their data, defines some rough causal knowledge and assumptions (with some guidance), and selects appropriate variable definitions from a dropdown list.

Figure 2: Steps in the AIR tool

Causal discovery, on the left of Figure 2, takes as inputs data, rough causal knowledge and assumptions, and model parameters, and outputs a causal graph. For this, we use a state-of-the-art causal discovery algorithm called Best Order Score Search (BOSS). The resulting graph consists of a scenario variable (X), an outcome variable (Y), any intermediate variables (M), parents of either X (Z1) or M (Z2), and the direction of their causal relationships in the form of arrows.

Causal identification, in the middle of Figure 2, splits the graph into two separate adjustment sets aimed at blocking backdoor paths through which bias can be introduced. This aims to avoid any spurious correlation between X and Y that is due to common causes of either X or M that can affect Y. For example, Z2 is shown here to affect both X (through Z1) and Y (through M). To account for this bias, we need to break the spurious correlations that such paths create between these variables.
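The sketch below encodes a hypothetical graph with the structure described for Figure 2 (Z2 -> Z1 -> X -> M -> Y, plus Z2 -> M) and derives two adjustment sets the way the text describes them: the parents of X, and the parents of the intermediate variables excluding X and other intermediates. It then checks, using the standard path-blocking rules (a non-collider blocks a path when it is conditioned on; a collider blocks unless it or one of its descendants is conditioned on), that each set blocks every backdoor path. All function names are illustrative and are not AIR’s API.

```python
# Hypothetical graph with the structure described for Figure 2.
EDGES = [("Z2", "Z1"), ("Z1", "X"), ("X", "M"), ("M", "Y"), ("Z2", "M")]


def parents(node, edges):
    return {a for a, b in edges if b == node}


def descendants(node, edges):
    """All nodes reachable from node by following arrows forward."""
    seen, stack = set(), [node]
    while stack:
        current = stack.pop()
        for a, b in edges:
            if a == current and b not in seen:
                seen.add(b)
                stack.append(b)
    return seen


def ancestors(node, edges):
    return descendants(node, [(b, a) for a, b in edges])


def adjustment_sets(treatment, outcome, edges):
    """Set 1: parents of the treatment. Set 2: parents of the intermediates."""
    intermediates = descendants(treatment, edges) & ancestors(outcome, edges)
    set_1 = parents(treatment, edges)
    set_2 = set().union(*(parents(m, edges) for m in intermediates))
    set_2 -= intermediates | {treatment}
    return set_1, set_2


def backdoor_paths(treatment, outcome, edges):
    """Simple paths from treatment to outcome that begin with an arrow into treatment."""
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    paths = []

    def extend(path):
        if path[-1] == outcome:
            paths.append(path)
            return
        for nxt in neighbors[path[-1]]:
            if nxt not in path:
                extend(path + [nxt])

    for parent in parents(treatment, edges):
        extend([treatment, parent])
    return paths


def is_blocked(path, conditioned, edges):
    """Apply the path-blocking rules to every interior node of the path."""
    edge_set = set(edges)
    for prev, node, nxt in zip(path, path[1:], path[2:]):
        if (prev, node) in edge_set and (nxt, node) in edge_set:  # collider
            if node not in conditioned and not descendants(node, edges) & conditioned:
                return True
        elif node in conditioned:
            return True
    return False


set_1, set_2 = adjustment_sets("X", "Y", EDGES)
paths = backdoor_paths("X", "Y", EDGES)
for name, adj in (("set 1", set_1), ("set 2", set_2), ("empty", set())):
    print(name, sorted(adj), all(is_blocked(p, adj, EDGES) for p in paths))
```

In this graph the only backdoor path is X <- Z1 <- Z2 -> M -> Y; conditioning on either {Z1} or {Z2} blocks it, while conditioning on nothing leaves it open.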

Finally, causal estimation, illustrated on the right of Figure 2, uses an ML ensemble of doubly-robust estimators to calculate the effect of the scenario variable on the outcome and to produce 95% confidence intervals associated with each adjustment set from the causal identification step. Doubly-robust estimators allow us to produce consistent results even if either the outcome model (what is the probability of an outcome?) or the treatment model (what is the probability of a given scenario value, given the adjustment variables?) is specified incorrectly, as long as the other model is specified correctly.
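One widely used doubly-robust estimator is augmented inverse probability weighting (AIPW). The sketch below is a minimal AIPW implementation under simplifying assumptions: a single binary adjustment variable z, made-up counts, and simple stratum-mean outcome and treatment models in place of the ML ensemble AIR uses. Each record contributes a score that combines the outcome model’s prediction with an inverse-probability-weighted correction, and the 95% confidence interval uses a normal approximation based on the spread of those scores.

```python
import math
import statistics

# Hypothetical counts: (z, x, successes, total), where z is an adjustment
# variable, x is the binary scenario variable, and successes count y = 1.
CELLS = [(0, 0, 12, 40), (0, 1, 5, 10), (1, 0, 5, 10), (1, 1, 28, 40)]


def expand(cells):
    """Turn aggregated counts into individual (z, x, y) records."""
    rows = []
    for z, x, successes, total in cells:
        rows += [(z, x, 1)] * successes + [(z, x, 0)] * (total - successes)
    return rows


def fit_models(rows):
    """Outcome model E[y | z, x] and treatment model P(x = 1 | z), by stratum means."""
    outcome, propensity = {}, {}
    for z in {r[0] for r in rows}:
        stratum = [r for r in rows if r[0] == z]
        propensity[z] = statistics.fmean(r[1] for r in stratum)
        for x in (0, 1):
            outcome[(z, x)] = statistics.fmean(r[2] for r in stratum if r[1] == x)
    return outcome, propensity


def aipw(rows, z_crit=1.96):
    """AIPW estimate of the risk difference with a normal-approximation CI."""
    outcome, propensity = fit_models(rows)
    scores = []
    for z, x, y in rows:
        mu1, mu0, e = outcome[(z, 1)], outcome[(z, 0)], propensity[z]
        scores.append(mu1 - mu0
                      + x * (y - mu1) / e
                      - (1 - x) * (y - mu0) / (1 - e))
    estimate = statistics.fmean(scores)
    se = statistics.stdev(scores) / math.sqrt(len(scores))
    return estimate, (estimate - z_crit * se, estimate + z_crit * se)


rows = expand(CELLS)
naive = (statistics.fmean(y for _, x, y in rows if x == 1)
         - statistics.fmean(y for _, x, y in rows if x == 0))
estimate, (low, high) = aipw(rows)
print(f"naive: {naive:.3f}  AIPW: {estimate:.3f}  95% CI: ({low:.3f}, {high:.3f})")
```

With these counts, the naive difference in success rates is 0.32, while the AIPW estimate recovers the within-stratum effect of 0.2 built into the hypothetical data. With flexible ML models in place of stratum means, the same score formula applies; the correction terms are what protect the estimate when one of the two models is misspecified.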

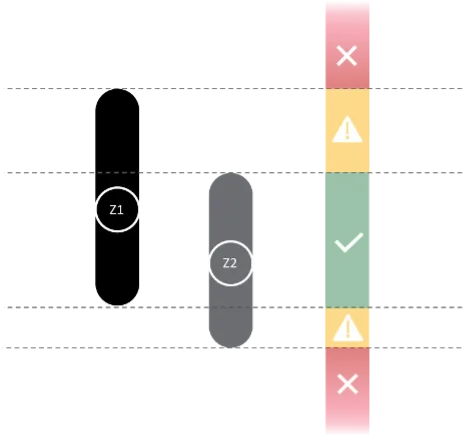

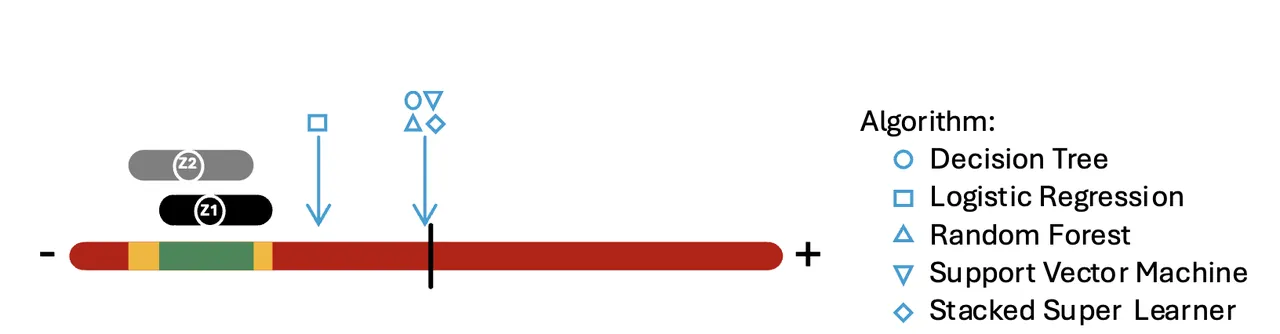

Figure 3: Interpreting the AIR tool’s results

The 95% confidence intervals calculated by AIR provide two independent checks on the behavior, or predicted outcome, of the classifier on a scenario of interest. While a violation of only one of the two bands may be an aberration, it may also be a warning to monitor classifier performance for that scenario regularly in the future. If both bands are violated, a user should be wary of classifier predictions for that scenario. Figure 3 illustrates an example of two confidence interval bands.
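The decision rule in this paragraph can be written down directly. The sketch below is a hypothetical helper (not part of AIR) that takes a classifier’s estimated effect for a scenario and the two causal confidence intervals, counts how many intervals exclude the estimate, and maps zero, one, or two violations to the three responses described above. The interval values in the usage lines are invented.

```python
def assess(classifier_estimate, band_1, band_2):
    """Map the number of violated causal bands to a recommended response."""
    violations = sum(
        1 for low, high in (band_1, band_2)
        if not low <= classifier_estimate <= high
    )
    if violations == 0:
        return "consistent with both causal estimates"
    if violations == 1:
        return "possible aberration: monitor this scenario"
    return "be wary of predictions for this scenario"


BAND_1 = (0.05, 0.25)  # hypothetical 95% CI from adjustment set 1
BAND_2 = (0.08, 0.30)  # hypothetical 95% CI from adjustment set 2
for estimate in (0.15, 0.28, 0.45):
    print(estimate, "->", assess(estimate, BAND_1, BAND_2))
```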

The two adjustment sets output by AIR provide recommendations on which variables or features to focus on for subsequent classifier retraining. In the future, we’d like to use the causal graph together with the learned relationships to generate synthetic training data for improving classifier predictions.

The AIR Tool in Action

To demonstrate how the AIR tool might be used in a real-world scenario, consider the following example. A notional DoD program is using unmanned aerial vehicles (UAVs) to collect imagery, and the UAVs can start the mission from two different base locations. Each location has different environmental conditions associated with it, such as wind speed and humidity. The program seeks to predict mission success, defined as the UAV successfully acquiring images, based on the starting location, and it has built a classifier to aid in these predictions. Here, the scenario variable, or X, is the base location.

The program may want to understand not just what mission success looks like based on which base is used, but why. Unrelated events may end up changing the value or impact of environmental variables enough that classifier performance starts to degrade.

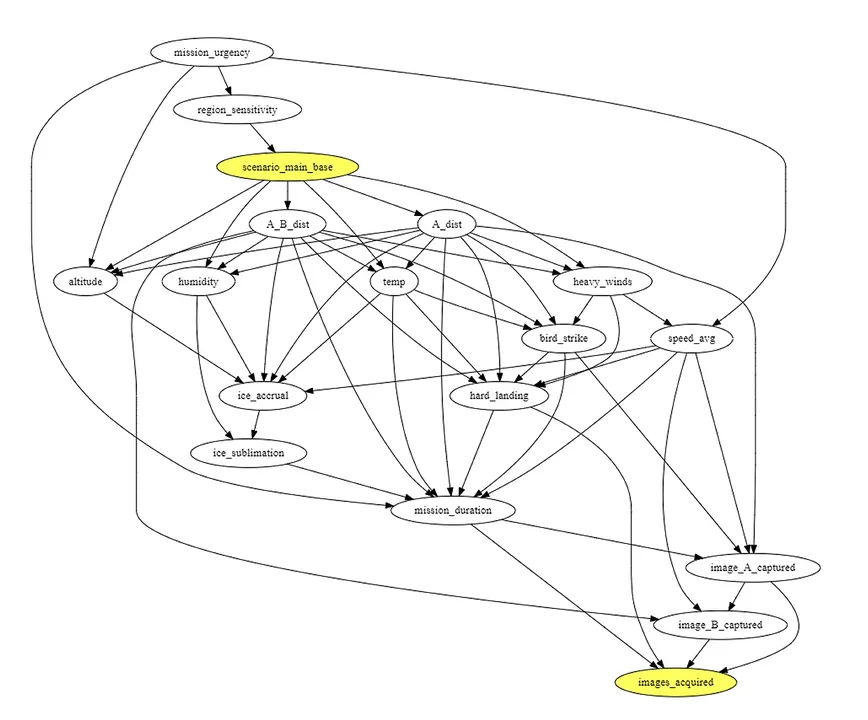

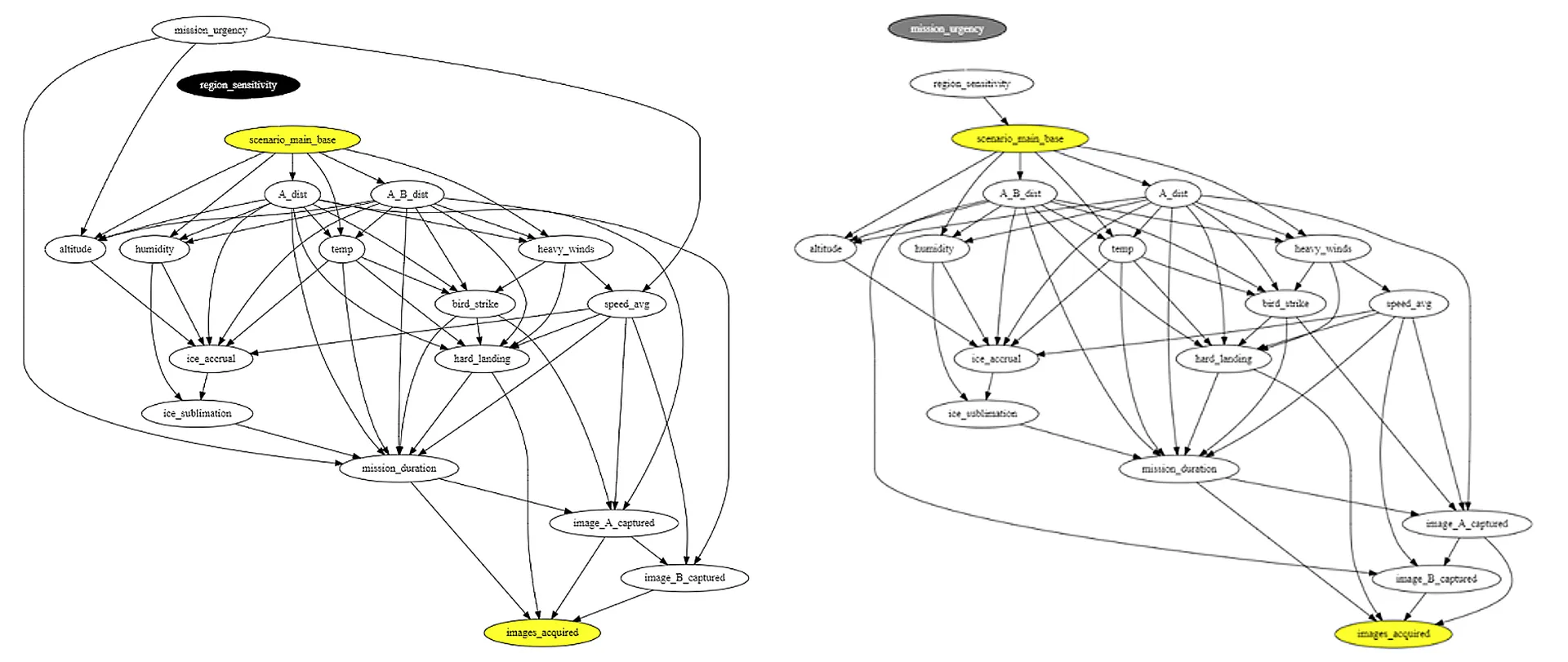

Figure 4: Causal graph of direct cause-effect relationships in the UAV example scenario.

The first step of the AIR tool applies causal discovery tools to generate a causal graph (Figure 4) of the most likely cause-and-effect relationships among variables. For example, ambient temperature affects the amount of ice accumulation a UAV might experience, which can affect whether the UAV is able to successfully fulfill its mission of obtaining images.

In step 2, AIR infers two adjustment sets to help detect bias in a classifier’s predictions (Figure 5). The graph on the left is the result of controlling for the parents of the main base treatment variable. The graph on the right is the result of controlling for the parents of the intermediate variables (aside from other intermediate variables), such as environmental conditions. Removing the edges out of these adjustment sets removes potential confounding effects, allowing AIR to characterize the impact that choosing the main base has on mission success.

Figure 5: Causal graphs corresponding to the two adjustment sets.

Finally, in step 3, AIR calculates the risk difference that the main base choice has on mission success. This risk difference is calculated by applying non-parametric, doubly-robust estimators to the task of estimating the impact that X has on Y, adjusting for each set separately. The result is a point estimate and a confidence range, shown here in Figure 6. As the plot shows, the ranges for each set are similar, and analysts can now compare these ranges to the classifier prediction.

Figure 6: Risk difference plot showing the average causal effect (ACE) of each adjustment set (i.e., Z1 and Z2) alongside AI/ML classifiers. The continuum ranges from -1 to 1 (left to right) and is colored based on level of agreement with ACE intervals.
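To see why the estimates from the two adjustment sets should agree when the graph is right, the sketch below computes exact population quantities for a hypothetical binary version of the graph structure in Figure 2 (Z2 -> Z1 -> X -> M -> Y and Z2 -> M), with made-up conditional probabilities. It applies the backdoor adjustment formula, the sum over z of P(z) [P(Y=1 | X=1, z) - P(Y=1 | X=0, z)], once with Z1 and once with Z2. This is a population-level illustration of adjusting for each set separately, not the doubly-robust estimation AIR performs on data.

```python
from fractions import Fraction as F
from itertools import product

NAMES = ("Z2", "Z1", "X", "M", "Y")


def bern(p, value):
    """P(V = value) for a binary V with P(V = 1) = p."""
    return p if value == 1 else 1 - p


def build_joint():
    """Exact joint distribution of the hypothetical model, keyed by state tuple."""
    table = {}
    for z2, z1, x, m, y in product((0, 1), repeat=5):
        table[(z2, z1, x, m, y)] = (
            bern(F(1, 2), z2)
            * bern(F(4, 5) if z2 else F(1, 5), z1)
            * bern(F(3, 4) if z1 else F(1, 4), x)
            * bern(F(1, 5) + F(2, 5) * x + F(1, 5) * z2, m)
            * bern(F(1, 5) + F(3, 5) * m, y)
        )
    return table


def prob(table, **fixed):
    """Marginal probability that the named variables take the given values."""
    index = {name: i for i, name in enumerate(NAMES)}
    return sum(p for state, p in table.items()
               if all(state[index[k]] == v for k, v in fixed.items()))


def risk_difference(table, adjust=None):
    """Backdoor-adjusted risk difference of X on Y (unadjusted if adjust is None)."""
    strata = [{}] if adjust is None else [{adjust: 0}, {adjust: 1}]
    total = F(0)
    for s in strata:
        p1 = prob(table, X=1, Y=1, **s) / prob(table, X=1, **s)
        p0 = prob(table, X=0, Y=1, **s) / prob(table, X=0, **s)
        total += prob(table, **s) * (p1 - p0)
    return total


joint = build_joint()
for adjust in ("Z1", "Z2", None):
    print(adjust or "unadjusted", risk_difference(joint, adjust))
```

Both adjusted risk differences equal 6/25 (the effect of X through M built into the model, 2/5 times 3/5), while the unadjusted difference is 69/250 because Z2 makes both X = 1 and M = 1 more likely.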

Figure 6 represents the risk difference associated with a change in the variable, i.e., scenario_main_base. The x-axis ranges from negative to positive effect (left to right), where the scenario either decreases the likelihood of the outcome or increases it, respectively; the midpoint corresponds to no effect. Alongside the causally derived confidence intervals, we also include point estimates of the risk difference as learned by five popular ML algorithms: decision tree, logistic regression, random forest, stacked super learner, and support vector machine. These inclusions illustrate that these problems are not particular to any specific ML algorithm. ML algorithms are designed to learn from correlation, not the deeper causal relationships implied by the same data. The classifiers’ prediction risk differences, represented by various light blue shapes, fall outside the AIR-calculated causal bands. This result indicates that these classifiers are likely not accounting for confounding due to some variables, and that the AI classifier(s) should be retrained with more data: in this case, data representing launch from the main base versus launch from another base, with a variety of values for the variables appearing in the two adjustment sets. In the future, the SEI plans to add a health report to help the AI classifier maintainer identify additional ways to improve AI classifier performance.
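The post does not spell out how each classifier’s risk difference is derived. One common approach, assumed here, is to feed every record to the trained model twice, once with the scenario variable set to 1 and once with it set to 0, and average the difference in predicted success probability. The sketch below applies that idea to a hypothetical logistic model with hand-picked weights (standing in for any of the five algorithms) and checks the result against two invented causal bands.

```python
import math

# Hypothetical stand-in for a trained classifier: P(success | x, z).
WEIGHTS = {"bias": -1.0, "x": 1.5, "z": 0.5}


def predict_success(x, z):
    logit = WEIGHTS["bias"] + WEIGHTS["x"] * x + WEIGHTS["z"] * z
    return 1 / (1 + math.exp(-logit))


def implied_risk_difference(model, z_values):
    """Average change in predicted success when x is switched from 0 to 1."""
    diffs = [model(1, z) - model(0, z) for z in z_values]
    return sum(diffs) / len(diffs)


def bands_violated(estimate, bands):
    """Report, for each causal band, whether the estimate falls outside it."""
    return [not low <= estimate <= high for low, high in bands]


Z_VALUES = [0, 0, 1, 1, 1]                   # hypothetical z values in the data
CAUSAL_BANDS = [(0.10, 0.30), (0.12, 0.28)]  # hypothetical AIR intervals
rd = implied_risk_difference(predict_success, Z_VALUES)
print(f"classifier risk difference: {rd:.3f}", bands_violated(rd, CAUSAL_BANDS))
```

Here the toy classifier implies a risk difference of about 0.35, which falls outside both invented bands, the same pattern the light blue shapes show in Figure 6.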

Using the AIR tool, the program team in this scenario now has a better understanding of the data and more explainable AI.

How Generalizable Is the AIR Tool?

The AIR tool can be used across a broad range of contexts and scenarios. For example, organizations with classifiers employed to help make business decisions about prognostic health maintenance, automation, object detection, cybersecurity, intelligence gathering, simulation, and many other applications may find value in implementing AIR.

While the AIR tool is generalizable to scenarios of interest from many fields, it does require a representative data set that meets current tool requirements. If the underlying data set is of reasonable quality and completeness (i.e., the data includes significant causes of both treatment and outcome), the tool can be applied widely.

Opportunities to Partner

The AIR team is currently seeking collaborators to contribute to and influence the continued maturation of the AIR tool. If your organization has AI or ML classifiers and subject-matter experts to help us understand your data, our team can help you build a tailored implementation of the AIR tool. You’ll work closely with the SEI AIR team, experimenting with the tool to learn about your classifiers’ performance and to support our ongoing research into evolution and adoption. Some of the roles that could benefit from, and help us improve, the AIR tool include:

- ML engineers: helping identify test cases and validate the data

- data engineers: creating data models to drive the causal discovery and inference stages

- quality engineers: ensuring the AIR tool is using appropriate verification and validation methods

- program leaders: interpreting the information from the AIR tool

With SEI adoption support, partnering organizations gain in-house expertise, innovative insight into causal learning, and knowledge to improve AI and ML classifiers.