I first met the Rockset team when they were just four people in a small office in San Francisco. I was blown away by their experience and friendliness, but most importantly, by their willingness to spend a lot of time mentoring me. I knew very little about Rockset’s technologies and didn’t know what to expect from such an agile early-stage startup, but decided to join the team for a summer internship anyway.

I Was Rockset’s First Ever Intern

Since I didn’t have much experience with software engineering, I wanted to touch as many different pieces as I could to get a feel for what I might be interested in. The team was very accommodating of this: since I was the first and only intern, I had a lot of freedom to explore different areas of the Rockset stack. I spent a week working on the Python client, a week working on the Java ingestion code, and a week working on the C++ SQL backend.

There’s always a lot of work to be done at a startup, so I had the opportunity to work on whatever was needed and interesting to me. I decided to delve into the SQL backend, and started working on the query compiler and execution system. A lot of the work I did over the summer ended up being focused on aggregation queries, and in this blog post I’ll dive deeper into how aggregation queries are executed in Rockset. We’ll first talk about serial execution of simple and complex aggregation queries, and then explore ways to distribute the workload to improve time and space efficiency.

Serial Execution of Aggregation Queries

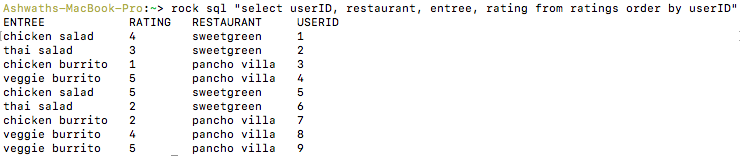

Let’s say we have a table `ratings`, where each row consists of a user, a restaurant, an entree and that user’s rating of that entree at that restaurant.

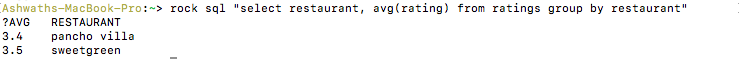

The aggregation query `select restaurant, avg(rating) from ratings group by restaurant` computes the average rating of each restaurant. (See here for more information on the GROUP BY notation.)

A straightforward way to execute this computation would be to traverse the rows in the table and build a hash map from restaurant to a (sum, count) pair, representing the sum and count of all the ratings seen so far. Then, we can traverse each entry of the map and add (restaurant, sum/count) to the set of returned results. Indeed, for simple and low-memory aggregations, this single computation stage suffices. However, with more complex queries, we’ll need multiple computation stages.
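
Here is a minimal Python sketch of that single-stage approach. The sample rows and the function name are made up for illustration, and this is not Rockset’s actual implementation (the real backend is C++); it only shows the hash map of running (sum, count) pairs described above.

```python
from collections import defaultdict

# Hypothetical sample rows: (user, restaurant, entree, rating).
ratings = [
    (1, "Thai Palace", "pad thai", 4),
    (2, "Thai Palace", "green curry", 5),
    (3, "Burger Barn", "cheeseburger", 3),
    (4, "Burger Barn", "fries", 4),
]


def average_rating_by_restaurant(rows):
    # Map each restaurant to a running [sum, count] pair.
    totals = defaultdict(lambda: [0, 0])
    for _user, restaurant, _entree, rating in rows:
        pair = totals[restaurant]
        pair[0] += rating
        pair[1] += 1
    # Finalize: one (restaurant, sum / count) output row per map entry.
    return {restaurant: s / c for restaurant, (s, c) in totals.items()}


print(average_rating_by_restaurant(ratings))
```

Note that no output row can be produced until every input row has been seen, which is why the whole map has to stay in memory until the end.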

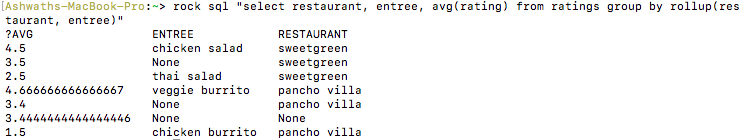

Suppose we wanted to compute not just the average rating of each restaurant, but also the breakdown of that average rating by entree. The SQL query for that would be `select restaurant, entree, avg(rating) from ratings group by rollup(restaurant, entree)`. (See our docs and this tutorial for more information on the ROLLUP notation.)

Executing this query is very similar to executing the previous one, except now we have to construct the key(s) for the hash map differently. The example query has three distinct groupings: (), (restaurant) and (restaurant, entree). For each row in the table, we create three hash keys, one for each grouping. A hash key is generated by hashing together an identifier for which grouping it corresponds to and the values of the columns in the grouping. We now have two computation stages: first, computing the hash keys, and second, using the hash keys to build a hash map that keeps track of the running sum and count (similar to the first query). Going forward, we’ll call them the hashing and aggregation stages, respectively.

So far, we’ve made the assumption that the whole table is stored on the same machine and all computation is done on the same machine. However, Rockset uses a distributed design where data is partitioned and stored on multiple leaf nodes and queries are executed on multiple aggregator nodes.
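
The two stages can be sketched in Python as follows. This is an illustration under my own assumptions, not Rockset’s code: the sample rows, `GROUPINGS`, `hashing_stage` and `aggregation_stage` are hypothetical names, and instead of computing a numeric hash I use a `(grouping_id, values)` tuple as the key and let Python’s dict do the hashing.

```python
from collections import defaultdict

# Hypothetical sample rows: (user, restaurant, entree, rating).
ratings = [
    (1, "Thai Palace", "pad thai", 4),
    (2, "Thai Palace", "green curry", 5),
    (3, "Burger Barn", "cheeseburger", 3),
    (4, "Burger Barn", "fries", 4),
]

# Groupings for rollup(restaurant, entree), written as column indices
# into a row: (), (restaurant), (restaurant, entree).
GROUPINGS = [(), (1,), (1, 2)]


def hashing_stage(rows):
    """Emit one (key, rating) pair per row per grouping."""
    for row in rows:
        for grouping_id, columns in enumerate(GROUPINGS):
            # The key combines the grouping identifier with the values
            # of that grouping's columns.
            key = (grouping_id, tuple(row[c] for c in columns))
            yield key, row[3]


def aggregation_stage(keyed_ratings):
    """Keep a running [sum, count] per key, then finalize the averages."""
    totals = defaultdict(lambda: [0, 0])
    for key, rating in keyed_ratings:
        totals[key][0] += rating
        totals[key][1] += 1
    return {key: s / c for key, (s, c) in totals.items()}


rollup = aggregation_stage(hashing_stage(ratings))
for key, average in sorted(rollup.items()):
    print(key, average)
```

Including the grouping identifier in the key keeps rows from different groupings apart even when their column values happen to coincide.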

Reducing Query Latency Using Partial Aggregations in Rockset

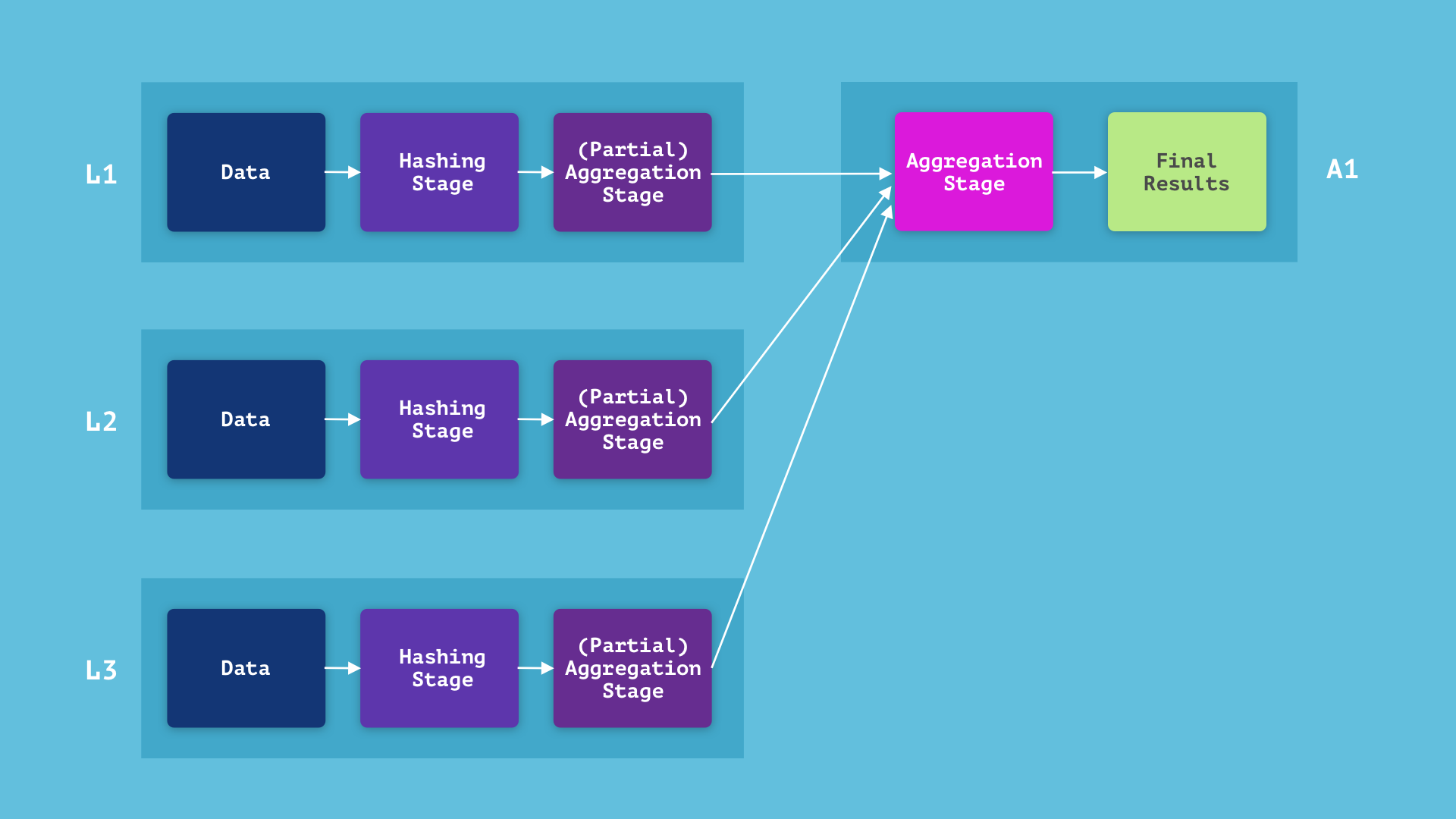

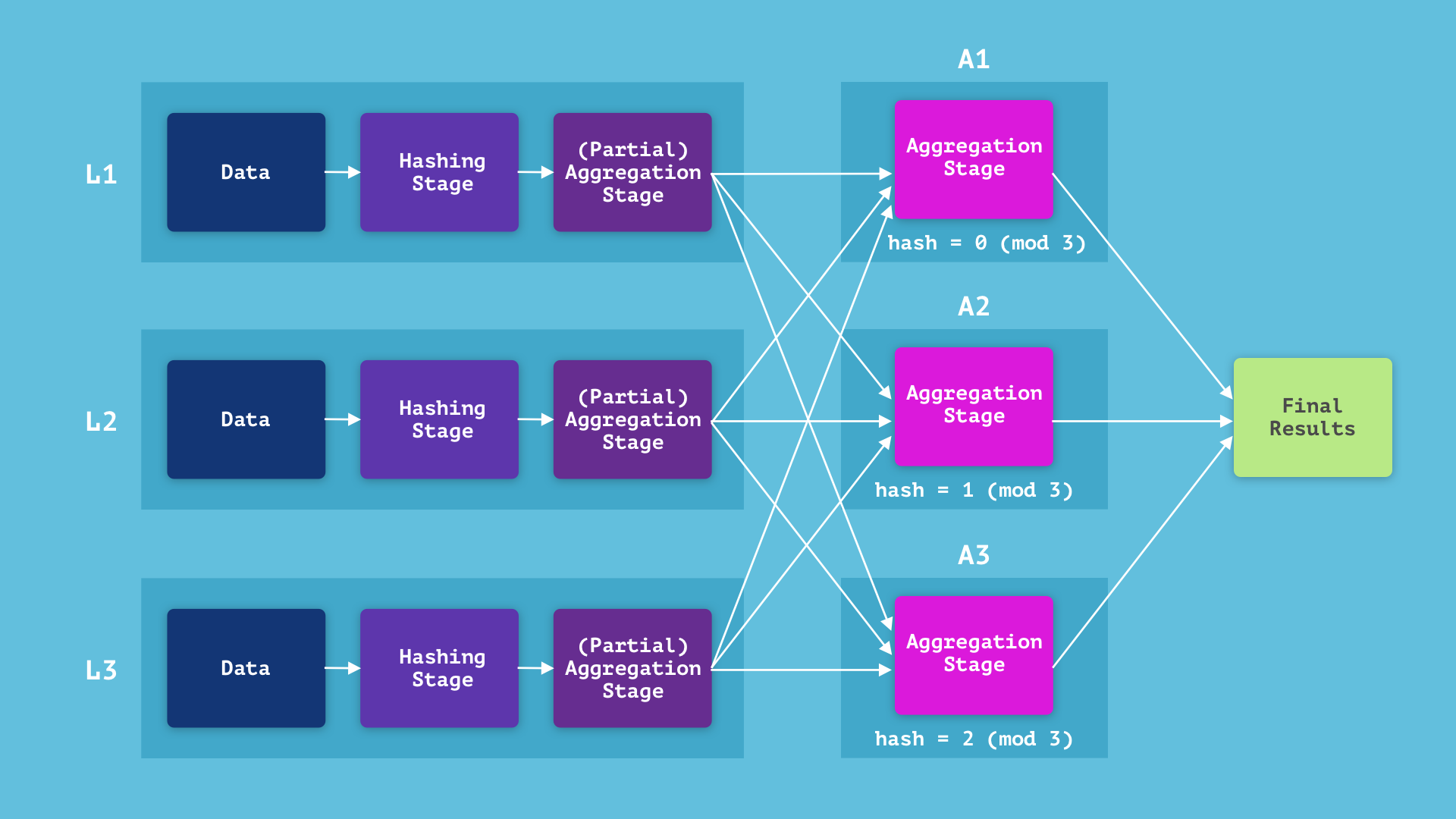

Let’s say there are three leaf machines (L1, L2, L3) and three aggregators (A1, A2, A3). (See this blog post for details on the Aggregator Leaf Tailer architecture.) The straightforward solution would be to have all three leaves send their data to a single aggregator, say A1, and have A1 execute the hashing and aggregation stages. Note that we can reduce the computation time by having the leaves run the hashing stages in parallel and send the results to the aggregator, which will then only have to run the aggregation stage.

We can further reduce the computation time by having each leaf node run a “partial” aggregation stage on the data it has and send that result to the aggregator, which can then finish the aggregation stage. In concrete terms, if a single leaf contains multiple rows with the same hash key, it doesn’t need to send all of them to an aggregator; it can compute the sum and count of those rows and only send that. In our example, if the rows corresponding to users 4 and 8 are both stored on the same leaf, that leaf doesn’t need to send both rows to the aggregator. This decreases the serialization and communication load and parallelizes some of the aggregation computation.
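
As a rough Python sketch of this idea (the leaf contents and the function names `partial_aggregate` and `final_aggregate` are hypothetical, and the leaves are simulated in one process rather than on separate machines):

```python
from collections import defaultdict


def partial_aggregate(rows):
    """Runs on a leaf: collapse local rows into one (sum, count) per key."""
    partial = defaultdict(lambda: [0, 0])
    for restaurant, rating in rows:
        partial[restaurant][0] += rating
        partial[restaurant][1] += 1
    return {key: tuple(pair) for key, pair in partial.items()}


def final_aggregate(partials):
    """Runs on the aggregator: merge the partial pairs, then average."""
    merged = defaultdict(lambda: [0, 0])
    for partial in partials:
        for key, (s, c) in partial.items():
            merged[key][0] += s
            merged[key][1] += c
    return {key: s / c for key, (s, c) in merged.items()}


# Hypothetical (restaurant, rating) rows stored on each leaf.
leaf_rows = {
    "L1": [("Thai Palace", 4), ("Thai Palace", 5), ("Burger Barn", 3)],
    "L2": [("Burger Barn", 4), ("Thai Palace", 3)],
    "L3": [("Burger Barn", 2)],
}

partials = [partial_aggregate(rows) for rows in leaf_rows.values()]
result = final_aggregate(partials)
print(result)
```

Sums and counts can be merged across leaves, whereas averages cannot, which is why each leaf sends the (sum, count) pair rather than a local average.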

A crude analysis tells us that for sufficiently large datasets, this will usually decrease the computation time, but it’s easy to see that partial aggregations improve some queries more than others. The performance of the query `select count(*) from ratings` will greatly improve, since instead of sending all the rows to the aggregator and counting them there, each leaf will count the number of rows it has and the aggregator will only need to sum them up. The crux of the query is run in parallel and the serialization load is greatly reduced. On the contrary, the performance of the query `select user, avg(rating) from ratings group by user` won’t improve at all (it’ll actually get worse due to overhead), since the users are all distinct so the partial aggregation stages won’t actually accomplish anything.
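
To make the contrast concrete, here is a small Python sketch that counts how many partial entries each leaf would have to send for the two queries. The leaf contents and the helper `entries_sent` are invented for illustration, and the count is only a crude proxy for serialization load.

```python
def entries_sent(leaf_rows, key_of):
    """Partial entries each leaf would send: one per distinct key it holds."""
    return [len({key_of(row) for row in rows}) for rows in leaf_rows]


# Hypothetical (user, restaurant, rating) rows on three leaves.
leaves = [
    [(1, "Thai Palace", 4), (2, "Thai Palace", 5)],
    [(3, "Burger Barn", 3), (4, "Burger Barn", 4)],
    [(5, "Thai Palace", 2)],
]

# count(*): every row falls into the single global group.
count_star = entries_sent(leaves, lambda row: ())
# group by user: every user is distinct, so nothing collapses.
by_user = entries_sent(leaves, lambda row: row[0])

print(count_star, by_user)
```

For `count(*)` each leaf sends a single entry no matter how many rows it holds, while for the per-user query each leaf still sends one entry per row.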

Reducing Memory Requirements Using Distributed Aggregations in Rockset

We’ve talked about reducing the execution time, but what about the memory usage? Aggregation queries are especially space-intensive, because the aggregation stage cannot run in a streaming fashion. It must see all the input data before being able to finalize any output row, and therefore must store the entire hash map (which takes as much space as the whole output) until the end. If the output is too large to be stored on a single machine, the machine will run out of memory and crash. Partial aggregations don’t help with this problem; however, running the aggregation stage in a distributed fashion does. Specifically, we can run the aggregation stage on multiple aggregators simultaneously, and distribute the data in a consistent manner.

To decide which aggregator to send a row of data to, the leaves could simply take the hash key modulo the number of available aggregators. Each aggregator would then execute the aggregation stage on the data it receives, and then we can merge the result from each aggregator to get the final result. This way, the hash map is distributed over all three aggregators, so we can compute aggregations that are roughly three times as large. The more machines we have, the larger the aggregation we can compute.
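
A minimal Python sketch of this routing scheme follows. The names `aggregator_for` and `distributed_average` and the sample rows are hypothetical, the aggregators are simulated as separate dicts in one process, and I use `zlib.crc32` as a stand-in hash function only because it gives the same value on every run; the source doesn’t say which hash Rockset uses.

```python
import zlib
from collections import defaultdict

NUM_AGGREGATORS = 3


def aggregator_for(key):
    # Stable hash of the key, modulo the number of aggregators.
    return zlib.crc32(key.encode("utf-8")) % NUM_AGGREGATORS


def distributed_average(rows):
    # One independent hash map per (simulated) aggregator.
    shards = [defaultdict(lambda: [0, 0]) for _ in range(NUM_AGGREGATORS)]
    for restaurant, rating in rows:
        pair = shards[aggregator_for(restaurant)][restaurant]
        pair[0] += rating
        pair[1] += 1
    # Every key lives on exactly one aggregator, so merging the
    # per-aggregator results is just a union of their output rows.
    result = {}
    for shard in shards:
        for key, (s, c) in shard.items():
            result[key] = s / c
    return result, shards


rows = [
    ("Thai Palace", 4),
    ("Burger Barn", 3),
    ("Thai Palace", 5),
    ("Taco Stand", 2),
    ("Burger Barn", 4),
]
result, shards = distributed_average(rows)
print(result)
```

Because the same key always maps to the same aggregator, no aggregator ever needs to see another’s entries, and each one only stores its own share of the hash map.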

My Rockset Internship – A Great Opportunity to Experience Startup Life

Interning at Rockset gave me the opportunity to design and implement a lot of the features we’ve talked about, and to learn (at a high level) how a SQL compiler and execution system is designed. With the mentorship of the Rockset team, I was able to push these features into production within a week of implementing them, and see how quickly and effectively aggregation queries ran.

Beyond the technical aspects, it was very interesting to see how an agile, early-stage startup like Rockset functions on a day-to-day and month-to-month basis. For someone like me who’d never been at such a small startup before, the experience taught me a lot of intangible skills that I’m sure will be incredibly useful wherever I end up. The size of the startup made for an open and collegial atmosphere, which allowed me to gain experiences beyond a traditional software engineering role. For instance, since the engineers at Rockset are also the ones responsible for customer service, I could listen in on any of those conversations and be included in discussions about how to serve customers more effectively. I was also exposed to a lot of the broader company strategy, so I could learn about how startups like Rockset plan and execute longer-term growth goals.

For someone who loves food like I do, there’s no shortage of options in San Mateo. Rockset caters lunch from a different local restaurant each day, and once a week the whole team goes out for lunch together. The office is just a ten-minute walk from the Caltrain station, which makes commuting to the office much easier. In addition to a bunch of fun people to work with, when I was at Rockset we had off-sites every month (my favorite was archery).

If you’re interested in challenges similar to the ones discussed in this blog post, I hope you’ll consider applying to join the team at Rockset!