Live dashboards can help organizations make sense of their event data and understand what’s happening in their businesses in real time. Marketing managers constantly want to know how many signups there were in the last hour, day, or week. Product managers are always looking to understand which product features are working well and are most heavily used. In many situations, it is important to be able to take immediate action based on real-time event data, as in the case of limited-time sales in e-commerce or managing contact center service levels. Having recognized the value they can extract from real-time data, many organizations that have standardized on Tableau for BI are seeking to implement live Tableau dashboards on their event streams as well.

Getting Started

In this blog, I will step through implementing a live dashboard on event data using Tableau. The events we will monitor are recent changes to various Wikimedia projects, including Wikipedia.

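For this project, we will need a Rockset account with an API key, Tableau Desktop, and a Python environment with the sseclient and rockset packages installed.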

Ingesting Data from Wikimedia Event Stream

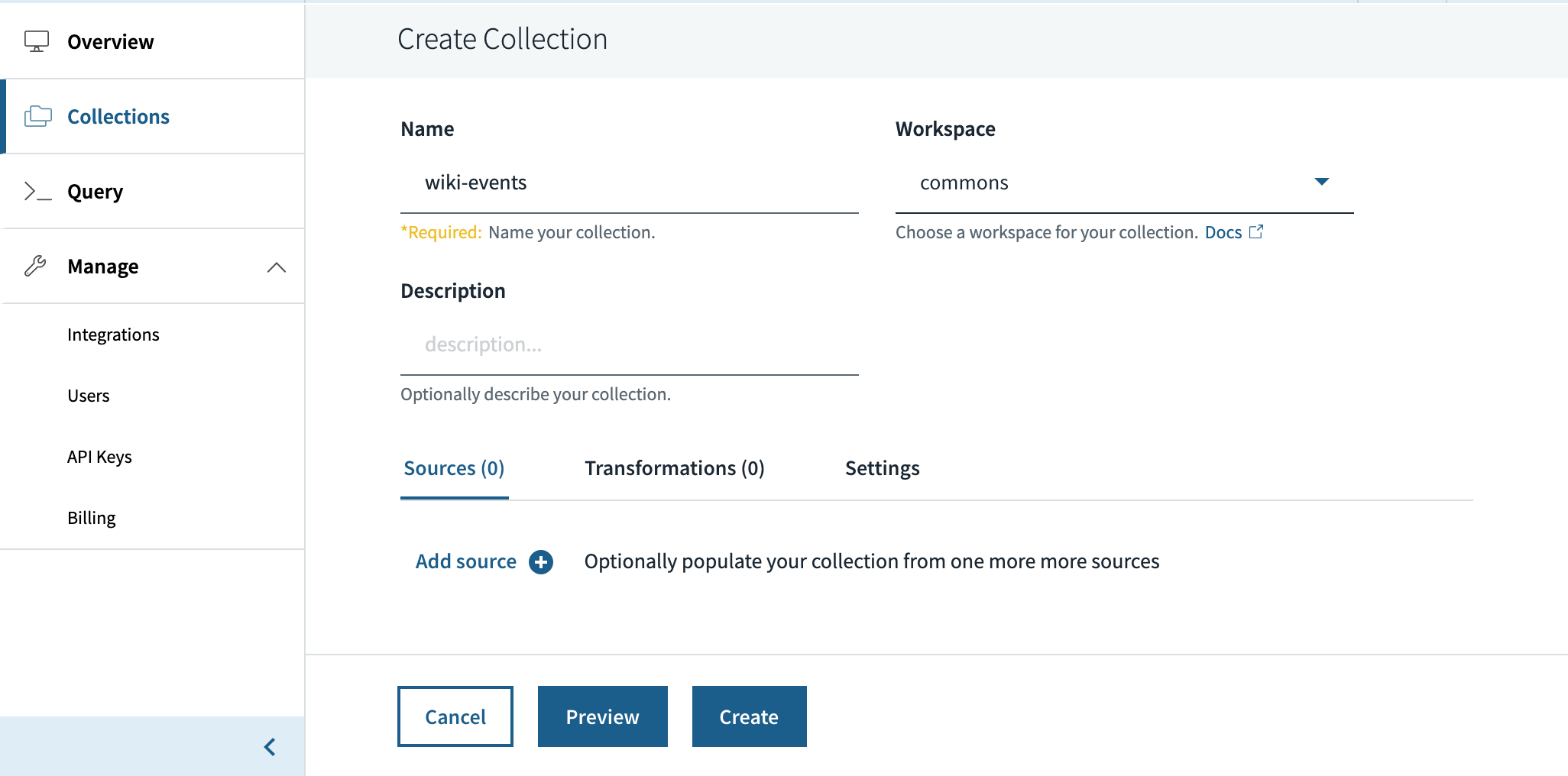

I will first create a collection, to which we will write the events originating from the Wikimedia stream.

Once we have the collection set up in Rockset, I can run a script that subscribes to events from the Wikimedia stream and writes them to the wiki-events collection in Rockset.

    import json
    from sseclient import SSEClient as EventSource
    from rockset import Client

    # ROCKSET_API_KEY must be set to your Rockset API key before running.
    rs = Client(api_key=ROCKSET_API_KEY)
    events = rs.Collection.retrieve("wiki-events")

    # Comma-separated list of Wikimedia streams to subscribe to.
    streams = "recentchange,page-links-change,page-create,page-move,page-properties-change,page-delete,test,recentchange,revision-create,page-undelete"
    url = "https://stream.wikimedia.org/v2/stream/{}".format(streams)

    for event in EventSource(url):
        try:
            if event.event == 'message':
                change = json.loads(event.data)
                events.add_docs([change])
        except Exception:
            # Skip events that fail to parse or write; Ctrl-C still stops the script.
            continue

While we are using Rockset’s Write API to ingest the Wikimedia event stream in this case, Rockset can also sync data from other sources, like Amazon DynamoDB, Amazon Kinesis, and Apache Kafka, to power live dashboards, if required.

Now that we are ingesting the event stream, the collection is growing steadily every second. Describing the collection shows us the shape of the data. The result is quite long, so I will just show an abbreviated version below to give you a sense of what the JSON data looks like, along with some of the fields we will be exploring in Tableau. The data is somewhat complex, containing sparse fields and nested objects and arrays.
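The body of the ingestion loop can be exercised offline, without a network connection or a Rockset account. The following is a minimal sketch under that assumption: `parse_change` is a hypothetical helper name introduced here, and the sample payload is made up for illustration rather than taken from the real stream.

```python
import json
from typing import Optional


def parse_change(event_type: str, data: str) -> Optional[dict]:
    """Return the decoded change for a 'message' event, or None to skip it."""
    if event_type != "message":
        return None
    try:
        change = json.loads(data)
    except json.JSONDecodeError:
        # Malformed or empty payloads are skipped, as in the ingestion loop.
        return None
    # Only JSON objects are treated as documents in this sketch.
    return change if isinstance(change, dict) else None


# Made-up sample payload, for illustration only.
sample = '{"type": "edit", "bot": false, "wiki": "enwiki"}'
print(parse_change("message", sample))
print(parse_change("message", "not json"))
print(parse_change("heartbeat", sample))
```

Separating parsing from writing in this way would make it possible to test the filtering logic on its own before pointing the script at the live stream.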

    rockset> DESCRIBE wiki-events;
    +--------------------------------------------------------+---------------+---------+-----------+
    | field                                                  | occurrences   | total   | type      |
    |--------------------------------------------------------+---------------+---------+-----------|
    | ['$schema']                                            | 12172         | 2619723 | string    |
    | ['_event_time']                                        | 2619723       | 2619723 | timestamp |
    | ['_id']                                                | 2619723       | 2619723 | string    |
    | ['added_links']                                        | 442942        | 2619723 | array     |
    | ['added_links', '*']                                   | 3375505       | 3375505 | object    |
    | ['added_links', '*', 'external']                       | 3375505       | 3375505 | bool      |
    | ['added_links', '*', 'link']                           | 3375505       | 3375505 | string    |
    ...
    | ['bot']                                                | 1040316       | 2619723 | bool      |
    | ['comment']                                            | 1729328       | 2619723 | string    |
    | ['database']                                           | 1561437       | 2619723 | string    |
    | ['id']                                                 | 1005932       | 2619723 | int       |
    | ['length']                                             | 679149        | 2619723 | object    |
    | ['length', 'new']                                      | 679149        | 679149  | int       |
    | ['length', 'old']                                      | 636124        | 679149  | int       |
    ...
    | ['removed_links']                                      | 312950        | 2619723 | array     |
    | ['removed_links', '*']                                 | 2225975       | 2225975 | object    |
    | ['removed_links', '*', 'external']                     | 2225975       | 2225975 | bool      |
    | ['removed_links', '*', 'link']                         | 2225975       | 2225975 | string    |
    ...
    | ['timestamp']                                          | 1040316       | 2619723 | int       |
    | ['title']                                              | 1040316       | 2619723 | string    |
    | ['type']                                               | 1040316       | 2619723 | string    |
    | ['user']                                               | 1040316       | 2619723 | string    |
    | ['wiki']                                               | 1040316       | 2619723 | string    |
    +--------------------------------------------------------+---------------+---------+-----------+
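To build some intuition for how sparse fields and nested arrays show up in a listing like this, here is a small sketch that counts field occurrences over a handful of documents, using `'*'` for array elements. It is an illustration only and not how Rockset computes its output; `field_paths`, `describe`, and the sample documents are hypothetical.

```python
from collections import Counter
from typing import Any, Iterator, Tuple


def field_paths(value: Any, prefix: Tuple[str, ...] = ()) -> Iterator[Tuple[str, ...]]:
    """Yield one path per field occurrence, using '*' for array elements."""
    if isinstance(value, dict):
        for key, child in value.items():
            path = prefix + (key,)
            yield path
            yield from field_paths(child, path)
    elif isinstance(value, list):
        for item in value:
            path = prefix + ("*",)
            yield path
            yield from field_paths(item, path)


def describe(docs) -> Counter:
    """Count how many times each field path occurs across all documents."""
    counts = Counter()
    for doc in docs:
        counts.update(field_paths(doc))
    return counts


# Made-up documents shaped loosely like the events above.
docs = [
    {"bot": True, "length": {"new": 120, "old": 100}},
    {"added_links": [{"external": False, "link": "/wiki/A"},
                     {"external": True, "link": "https://example.org"}]},
    {"bot": False, "length": {"new": 40}},
]

for path, occurrences in sorted(describe(docs).items()):
    print(list(path), occurrences)
```

In this toy data, `added_links` appears in one document but contributes two `'*'` elements, and `length.old` appears less often than `length.new`, which is the same pattern visible in the abbreviated output above.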

Connecting a Tableau Dashboard to Real-Time Event Data

Let us jump into building the dashboard. I will first need to connect to Rockset, as a new data source, from my Tableau Desktop application. Follow the steps here to set this up.

We can create a first chart showing the number of changes made by bots vs. non-bots for every minute in the last hour. I can use a custom SQL query within Tableau to specify the query for this, which gives us the resulting chart.

    select
        bot as is_bot,
        format_iso8601(timestamp_seconds(60 * (timestamp / 60))) as tb_time
    from
        "wiki-events" c
    where
        timestamp is not null
        and bot is not null

That is about 1,400 events per minute, with bots responsible for the majority of them.

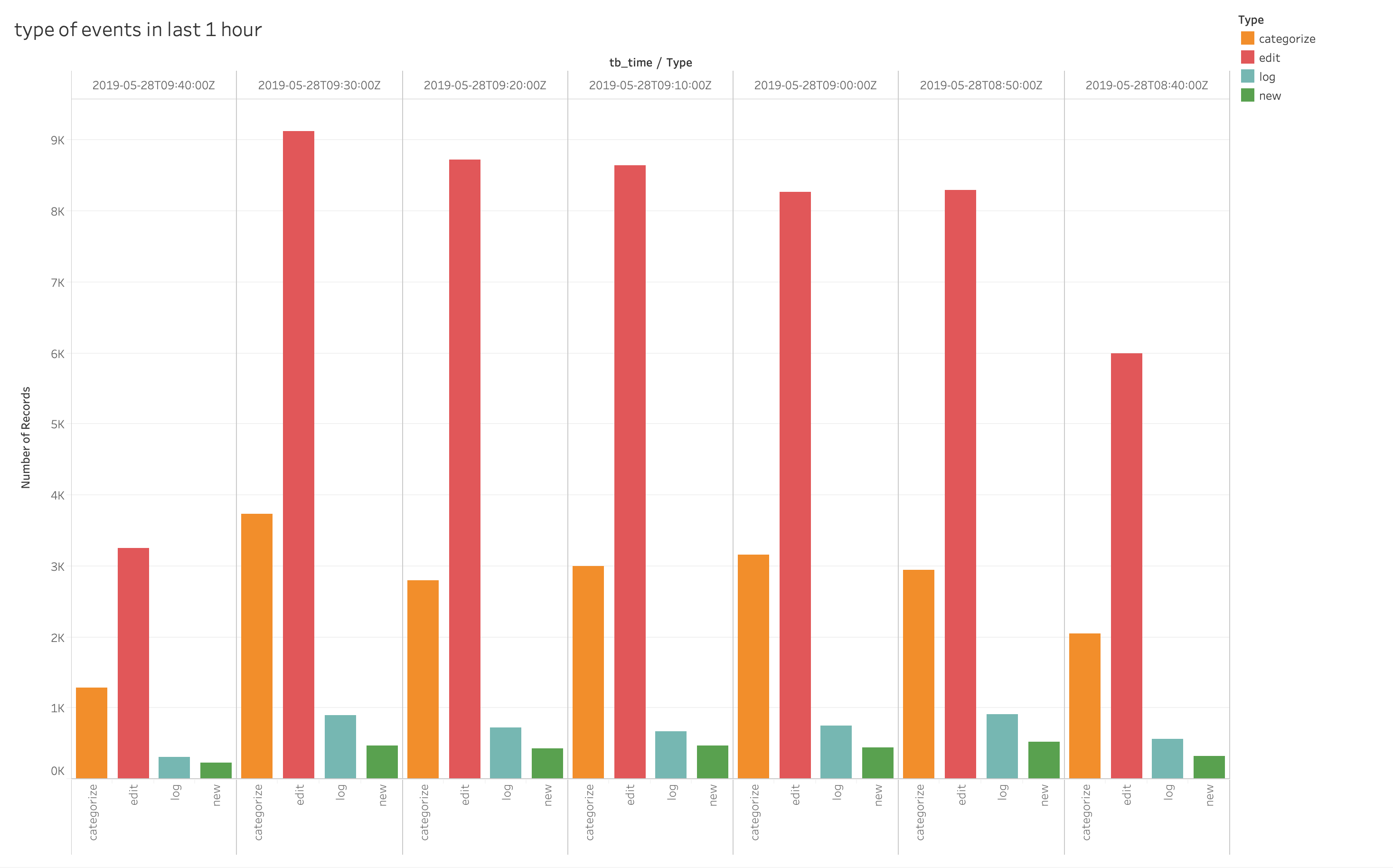
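The expression `60 * (timestamp / 60)` in the query rounds each event time down to the start of its minute. The sketch below reproduces that arithmetic in Python, assuming `timestamp` holds integer epoch seconds (the collection description lists it as an int) and that the division in the query is integer division. `bucket_start` is a hypothetical helper, and its output format is only an approximation of an ISO 8601 string, not necessarily what `format_iso8601` returns.

```python
from datetime import datetime, timezone


def bucket_start(epoch_seconds: int, width_seconds: int = 60) -> str:
    """Floor an epoch timestamp to the start of its bucket and format it in UTC."""
    floored = width_seconds * (epoch_seconds // width_seconds)
    return datetime.fromtimestamp(floored, tz=timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


# 1560000125 is 2019-06-08T13:22:05Z.
print(bucket_start(1560000125))       # 2019-06-08T13:22:00Z
print(bucket_start(1560000125, 600))  # 2019-06-08T13:20:00Z
```

Passing a width of 600 seconds gives 10-minute buckets, and every event in the same bucket maps to the same string, which is what lets Tableau group and count events by `tb_time`.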

Wikimedia also tracks several types of change events: edit, new, log, and categorize. We can get an up-to-date view of the various types of changes made, at 10-minute intervals, for the last hour.

    select
        type,
        format_iso8601(timestamp_seconds(600 * (timestamp / 600))) as tb_time
    from
        "wiki-events"
    where
        timestamp_seconds(timestamp) > current_timestamp() - hours(1)

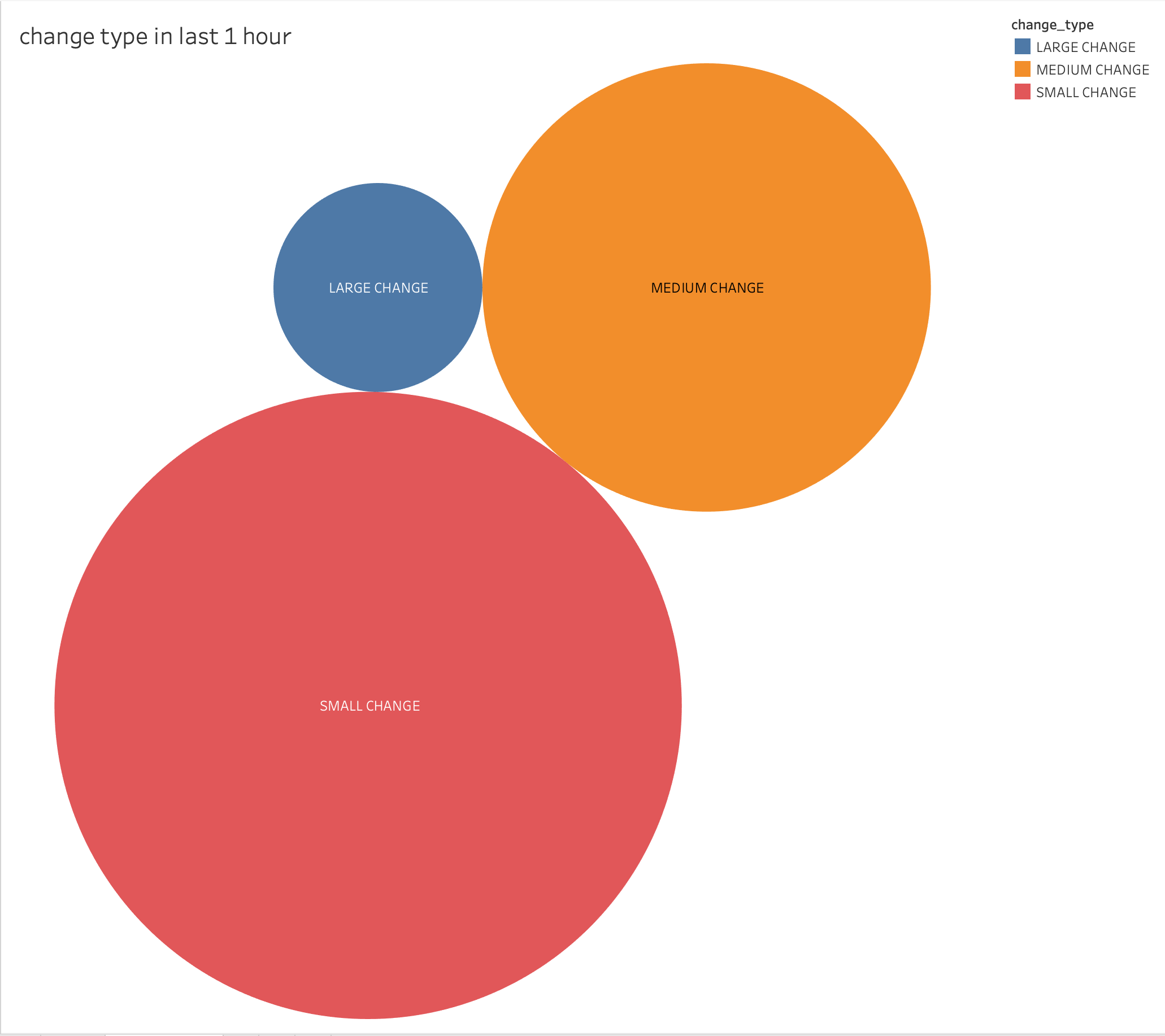

Finally, I plotted a chart to visualize the magnitude of the edits made during the last hour, whether they were small-, medium-, or large-scale edits.

    select
        CASE
            WHEN sq.change_in_length <= 100 THEN 'SMALL CHANGE'
            WHEN sq.change_in_length <= 1000 THEN 'MEDIUM CHANGE'
            ELSE 'LARGE CHANGE'
        END as change_type
    from (
        select
            abs(c.length.new - c.length.old) as change_in_length
        from
            "wiki-events" c
        where
            c.type = 'edit'
            and timestamp_seconds(c.timestamp) > current_timestamp() - hours(1)
    ) sq
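The CASE expression above can be mirrored in a few lines of Python to check the thresholds on sample values. This is a sketch that assumes both the new and old lengths are present for each edit; `change_type` is a hypothetical function name, and the sample edits are made up.

```python
from collections import Counter


def change_type(new_length: int, old_length: int) -> str:
    """Classify an edit by the absolute change in page length."""
    delta = abs(new_length - old_length)
    if delta <= 100:
        return "SMALL CHANGE"
    if delta <= 1000:
        return "MEDIUM CHANGE"
    return "LARGE CHANGE"


# Made-up (new, old) length pairs, for illustration only.
edits = [(1050, 1000), (900, 1000), (2500, 2000), (100, 5000)]
tally = Counter(change_type(new, old) for new, old in edits)
print(tally)
```

Because the comparison uses the absolute difference, removals and additions of the same size fall into the same category, and the boundaries of 100 and 1,000 are inclusive on the lower category.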

Recap

In a few steps, we ingested a stream of complex JSON event data, connected Tableau to the data in Rockset, and added some charts to our live dashboard. While it may normally take tens of minutes, if not longer, to process raw event data for use with a dashboarding tool, using Tableau on real-time data in Rockset allows users to perform live analysis on their data within seconds of the events occurring.

If you wish to adapt what we have done here to your use case, the source code for this exercise is available here.