Organizations converse of operational reporting and analytics as the following technical problem in bettering enterprise processes and effectivity. In a world the place everyone seems to be changing into an analyst, stay dashboards floor up-to-date insights and operationalize real-time information to supply in-time decision-making help throughout a number of areas of a company. We’ll take a look at what it takes to construct operational dashboards and reporting utilizing normal information visualization instruments, like Tableau, Grafana, Redash, and Apache Superset. Particularly, we’ll be specializing in utilizing these BI instruments on information saved in DynamoDB, as we have now discovered the trail from DynamoDB to information visualization instrument to be a typical sample amongst customers of operational dashboards.

Creating information visualizations with present BI instruments, like Tableau, might be a superb match for organizations with fewer sources, much less strict UI necessities, or a want to shortly get a dashboard up and operating. It has the additional advantage that many analysts on the firm are already aware of how you can use the instrument. If you’re curious about crafting your personal customized dashboard, examine Customized Reside Dashboards on DynamoDB as an alternative.

We take into account a number of approaches, all of which use DynamoDB Streams however differ in how the dashboards are served:

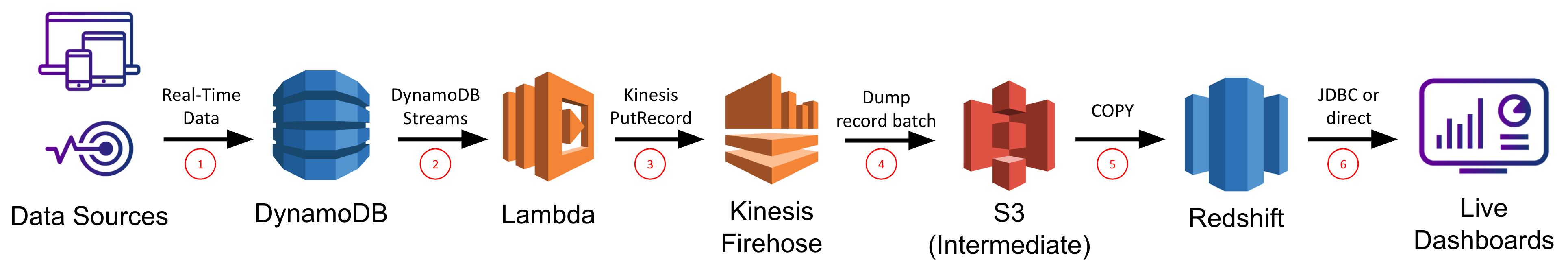

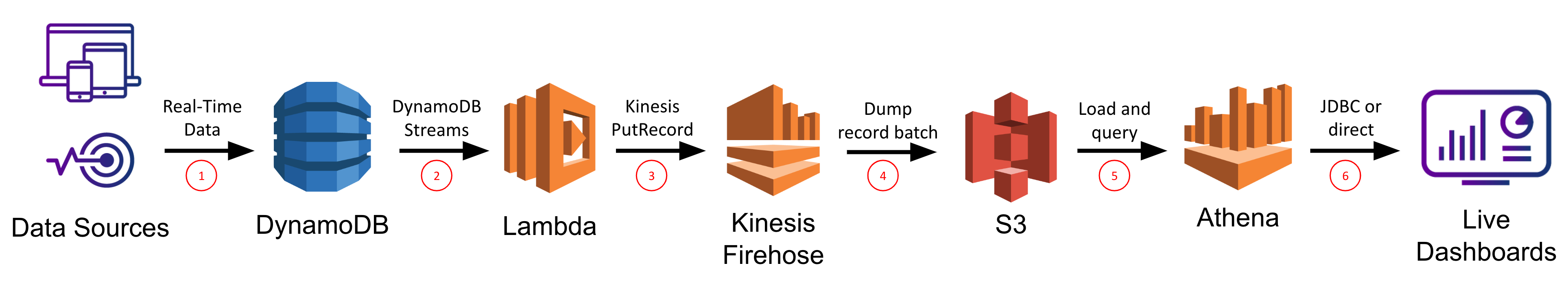

1. DynamoDB Streams + Lambda + Kinesis Firehose + Redshift

2. DynamoDB Streams + Lambda + Kinesis Firehose + S3 + Athena

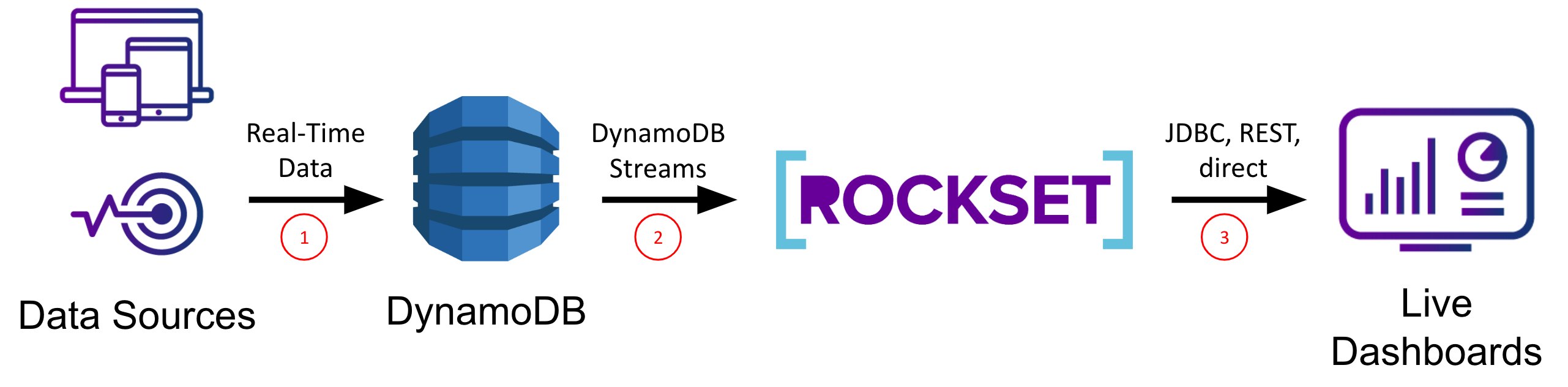

3. DynamoDB Streams + Rockset

We’ll consider every method on its ease of setup/upkeep, information latency, question latency/concurrency, and system scalability so you possibly can choose which method is finest for you primarily based on which of those standards are most vital in your use case.

Concerns for Constructing Operational Dashboards Utilizing Normal BI Instruments

Constructing stay dashboards is non-trivial as any resolution must help extremely concurrent, low latency queries for quick load occasions (or else drive down utilization/effectivity) and stay sync from the information sources for low information latency (or else drive up incorrect actions/missed alternatives). Low latency necessities rule out immediately working on information in OLTP databases, that are optimized for transactional, not analytical, queries. Low information latency necessities rule out ETL-based options which enhance your information latency above the real-time threshold and inevitably result in “ETL hell”.

DynamoDB is a completely managed NoSQL database supplied by AWS that’s optimized for level lookups and small vary scans utilizing a partition key. Although it’s extremely performant for these use instances, DynamoDB isn’t a sensible choice for analytical queries which generally contain giant vary scans and sophisticated operations reminiscent of grouping and aggregation. AWS is aware of this and has answered prospects requests by creating DynamoDB Streams, a change-data-capture system which can be utilized to inform different companies of recent/modified information in DynamoDB. In our case, we’ll make use of DynamoDB Streams to synchronize our DynamoDB desk with different storage programs which can be higher suited to serving analytical queries.

To construct your stay dashboard on high of an present BI instrument primarily means you might want to present a SQL API over a real-time information supply, after which you should use your BI instrument of alternative–Tableau, Superset, Redash, Grafana, and many others.–to plug into it and create your whole information visualizations on DynamoDB information. Due to this fact, right here we’ll deal with making a real-time information supply with SQL help and go away the specifics of every of these instruments for an additional put up.

Kinesis Firehose + Redshift

We’ll begin off this finish of the spectrum by contemplating utilizing Kinesis Firehose to synchronize your DynamoDB desk with a Redshift desk, on high of which you’ll be able to run your BI instrument of alternative. Redshift is AWS’s information warehouse providing that’s particularly tailor-made for OLAP workloads over very giant datasets. Most BI instruments have express Redshift integrations accessible, and there’s a regular JDBC connection to can be utilized as effectively.

The very first thing to do is create a brand new Redshift cluster, and inside it create a brand new database and desk that might be used to carry the information to be ingested from DynamoDB. You’ll be able to hook up with your Redshift database by way of a regular SQL shopper that helps a JDBC connection and the PostgreSQL dialect. You’ll have to explicitly outline your desk with all subject names, information varieties, and column compression varieties at this level earlier than you possibly can proceed.

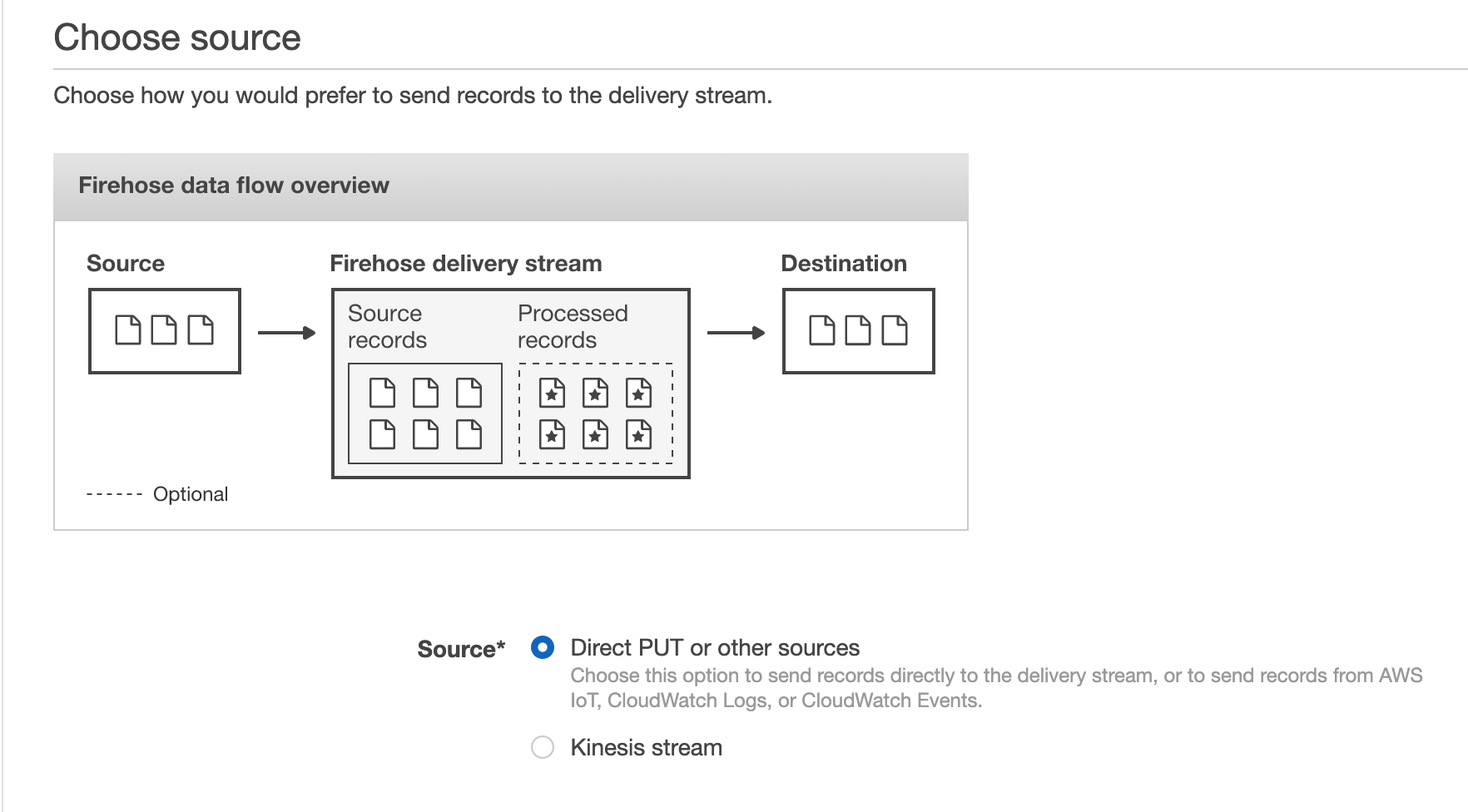

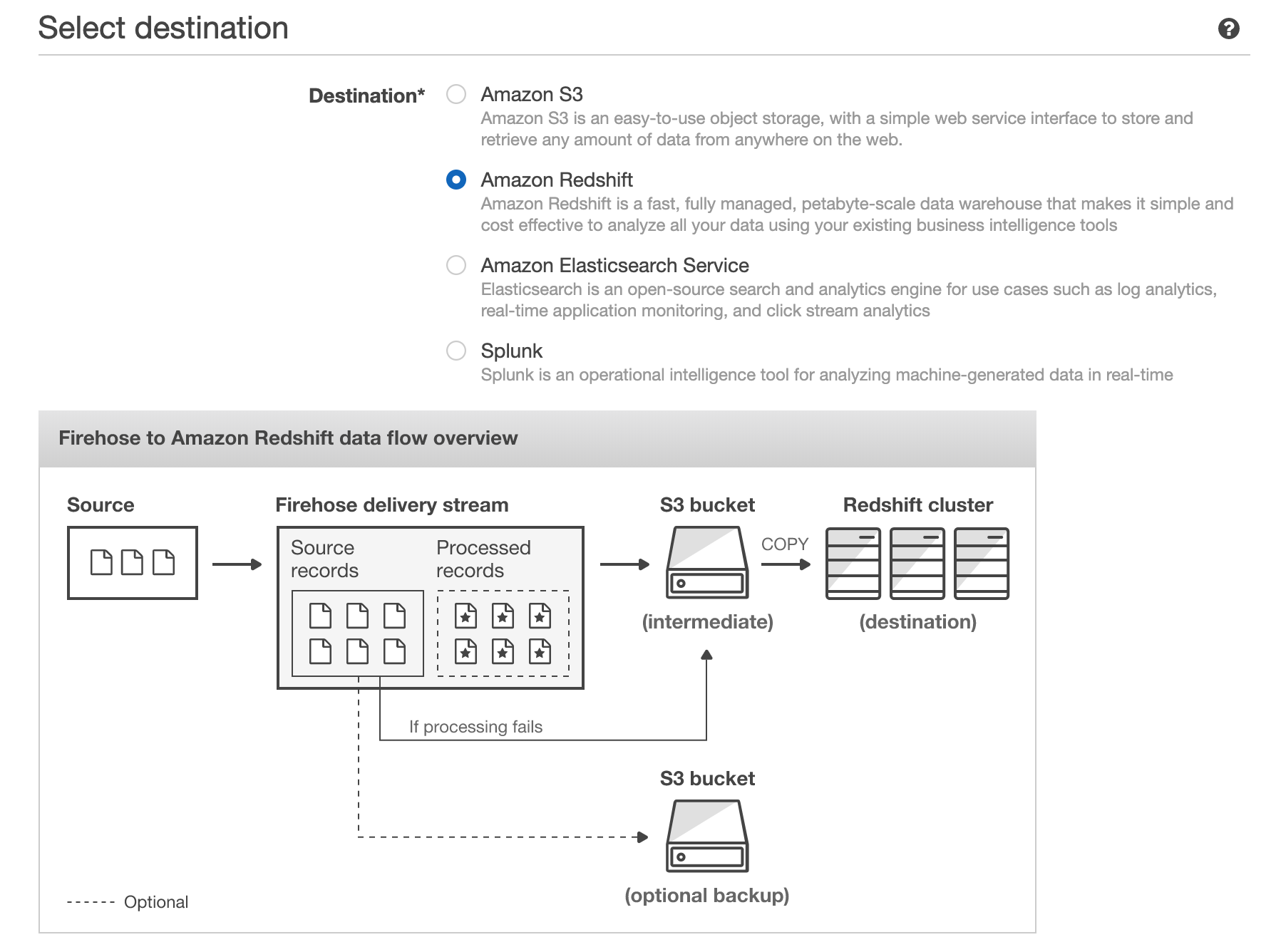

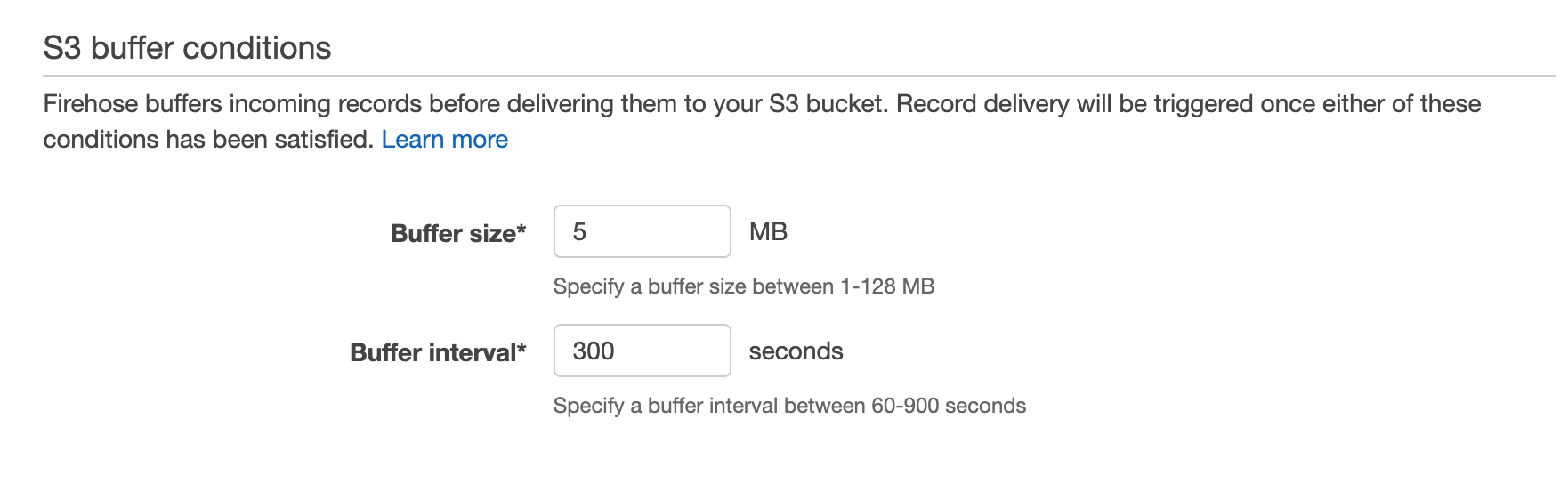

Subsequent, you’ll must go to the Kinesis dashboard and create a brand new Kinesis Firehose, which is the variant AWS offers to stream occasions to a vacation spot bucket in S3 or a vacation spot desk in Redshift. We’ll select the supply possibility Direct PUT or different sources, and we’ll choose our Redshift desk because the vacation spot. Right here it offers you some useful optimizations you possibly can allow like staging the information in S3 earlier than performing a COPY command into Redshift (which results in fewer, bigger writes to Redshift, thereby preserving valuable compute sources in your Redshift cluster and providing you with a backup in S3 in case there are any points through the COPY). We will configure the buffer dimension and buffer interval to manage how a lot/usually Kinesis writes in a single chunk. For instance, a 100MB buffer dimension and 60s buffer interval would inform Kinesis Firehose to write down as soon as it has acquired 100MB of knowledge, or 60s has handed, whichever comes first.

Lastly, you possibly can arrange a Lambda operate that makes use of the DynamoDB Streams API to retrieve current adjustments to the DynamoDB desk. This operate will buffer these adjustments and ship a batch of them to Kinesis Firehose utilizing its PutRecord or PutRecordBatch API. The operate would look one thing like

exports.handler = async (occasion, context) => {

for (const report of occasion.Information) {

let platform = report.dynamodb['NewImage']['platform']['S'];

let quantity = report.dynamodb['NewImage']['amount']['N'];

let information = ... // format in line with your Redshift schema

var params = {

Knowledge: information

StreamName: 'take a look at'

PartitionKey: '1234'

};

kinesis.putRecord(params, operate(err, information) {

if (err) console.log(err, err.stack); // an error occurred

else console.log(information); // profitable response

});

}

return `Efficiently processed ${occasion.Information.size} information.`;

};

Placing this all collectively we get the next chain response each time new information is put into the DynamoDB desk:

- The Lambda operate is triggered, and makes use of the DynamoDB Streams API to get the updates and writes them to Kinesis Firehose

- Kinesis Firehose buffers the updates it will get and periodically (primarily based on buffer dimension/interval) flushes them to an intermediate file in S3

- The file in S3 is loaded into the Redshift desk utilizing the Redshift COPY command

- Any queries towards the Redshift desk (e.g. from a BI instrument) mirror this new information as quickly because the COPY completes

On this means, any dashboard constructed by way of a BI instrument that’s built-in with Redshift will replace in response to adjustments in your DynamoDB desk.

Execs:

- Redshift can scale to petabytes

- Many BI instruments (e.g. Tableau, Redash) have devoted Redshift integrations

- Good for advanced, compute-heavy queries

- Based mostly on acquainted PostgreSQL; helps full-featured SQL, together with aggregations, sorting, and joins

Cons:

- Must provision/preserve/tune Redshift cluster which is dear, time consuming, and fairly difficult

- Knowledge latency on the order of a number of minutes (or extra relying on configurations)

- Because the DynamoDB schema evolves, tweaks might be required to the Redshift desk schema / the Lambda ETL

- Redshift pricing is by the hour for every node within the cluster, even when you’re not utilizing them or there’s little information on them

- Redshift struggles with extremely concurrent queries

TLDR:

- Contemplate this feature when you don’t have many lively customers in your dashboard, don’t have strict real-time necessities, and/or have already got a heavy funding in Redshift

- This method makes use of Lambdas and Kinesis Firehose to ETL your information and retailer it in Redshift

- You’ll get good question efficiency, particularly for advanced queries over very giant information

- Knowledge latency gained’t be nice although and Redshift struggles with excessive concurrency

- The ETL logic will most likely break down as your information adjustments and want fixing

- Administering a manufacturing Redshift cluster is a big endeavor

For extra data on this method, take a look at the AWS documentation for loading information from DynamoDB into Redshift.

S3 + Athena

Subsequent we’ll take into account Athena, Amazon’s service for operating SQL on information immediately in S3. That is primarily focused for rare or exploratory queries that may tolerate longer runtimes and save on value by not having the information copied right into a full-fledged database or cache like Redshift, Redis, and many others.

Very similar to the earlier part, we’ll use Kinesis Firehose right here, however this time it is going to be used to shuttle DynamoDB desk information into S3. The setup is identical as above with choices for buffer interval and buffer dimension. Right here this can be very vital to allow compression on the S3 recordsdata since that can result in each quicker and cheaper queries since Athena fees you primarily based on the information scanned. Then, just like the earlier part, you possibly can register a Lambda operate and use the DynamoDB streams API to make calls to the Kinesis Firehose API as adjustments are made to our DynamoDB desk. On this means you’ll have a bucket in S3 storing a duplicate of your DynamoDB information over a number of compressed recordsdata.

Notice: You’ll be able to moreover save on value and enhance efficiency by utilizing a extra optimized storage format and partitioning your information.

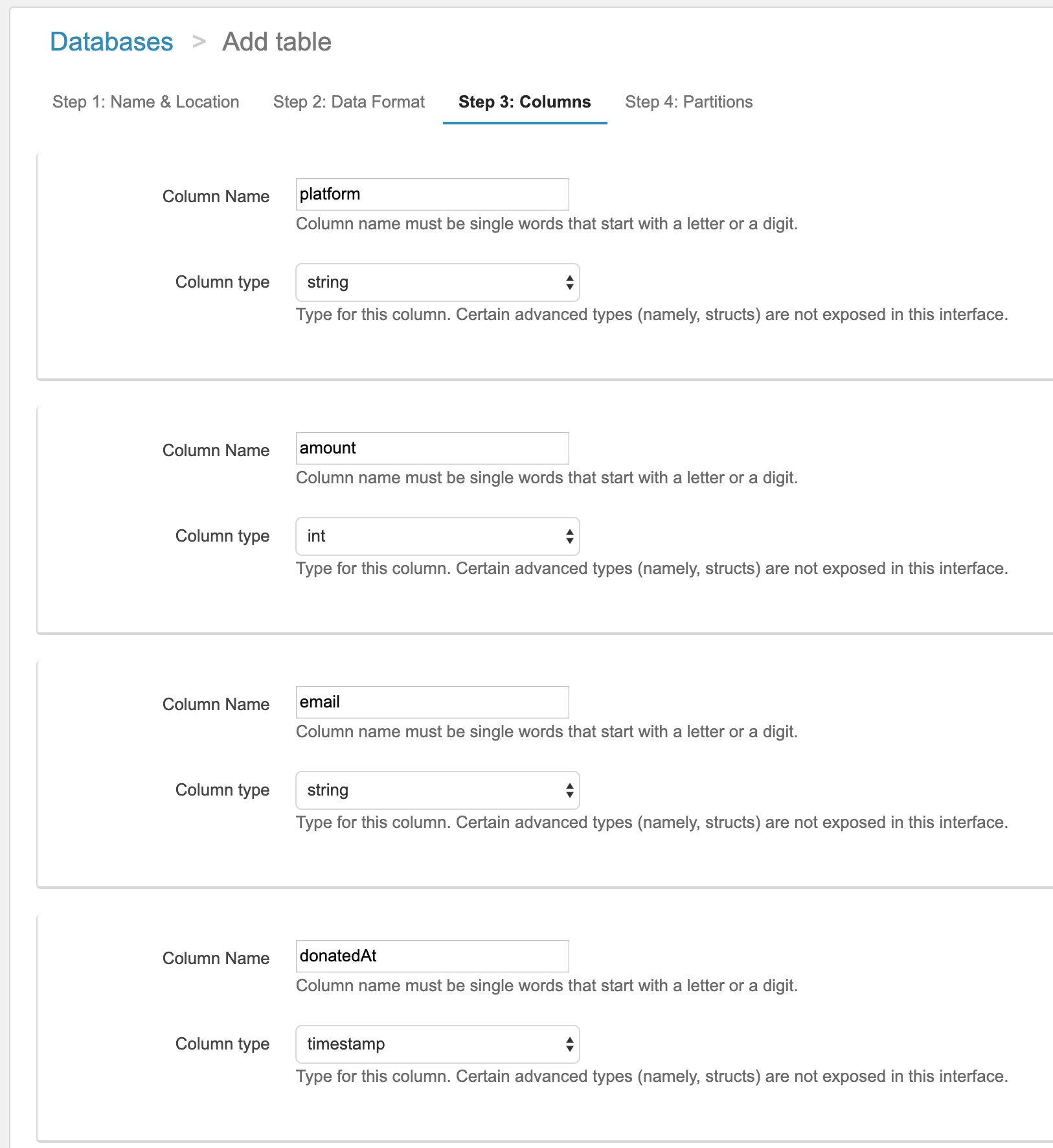

Subsequent within the Athena dashboard you possibly can create a brand new desk and outline the columns there both by way of the UI or utilizing Hive DDL statements. Like Hive, Athena has a schema on learn system, that means as every new report is learn in, the schema is utilized to it (vs. being utilized when the file is written).

As soon as your schema is outlined, you possibly can submit queries by way of the console, by way of their JDBC driver, or by way of BI instrument integrations like Tableau and Amazon Quicksight. Every of those queries will result in your recordsdata in S3 being learn, the schema being utilized to all of information, and the question end result being computed throughout the information. Because the information isn’t optimized in a database, there are not any indexes and studying every report is dearer because the bodily format isn’t optimized. Because of this your question will run, however it can tackle the order of minutes to probably hours.

Execs:

- Works at giant scales

- Low information storage prices since all the things is in S3

- No always-on compute engine; pay per question

Cons:

- Very excessive question latency– on the order of minutes to hours; can’t use with interactive dashboards

- Must explicitly outline your information format and format earlier than you possibly can start

- Blended varieties within the S3 recordsdata attributable to DynamoDB schema adjustments will result in Athena ignoring information that don’t match the schema you specified

- Except you place within the time/effort to compress your information, ETL your information into Parquet/ORC format, and partition your information recordsdata in S3, queries will successfully at all times scan your entire dataset, which might be very gradual and really costly

TLDR:

- Contemplate this method if value and information dimension are the driving elements in your design and provided that you possibly can tolerate very lengthy and unpredictable run occasions (minutes to hours)

- This method makes use of Lambda + Kinesis Firehose to ETL your information and retailer it in S3

- Finest for rare queries on tons of knowledge and DynamoDB reporting / dashboards that do not must be interactive

Check out this AWS weblog for extra particulars on how you can analyze information in S3 utilizing Athena.

Rockset

The final possibility we’ll take into account on this put up is Rockset, a serverless search and analytics service. Rockset’s information engine has robust dynamic typing and good schemas which infer subject varieties in addition to how they modify over time. These properties make working with NoSQL information, like that from DynamoDB, straight ahead. Rockset additionally integrates with each customized dashboards and BI instruments.

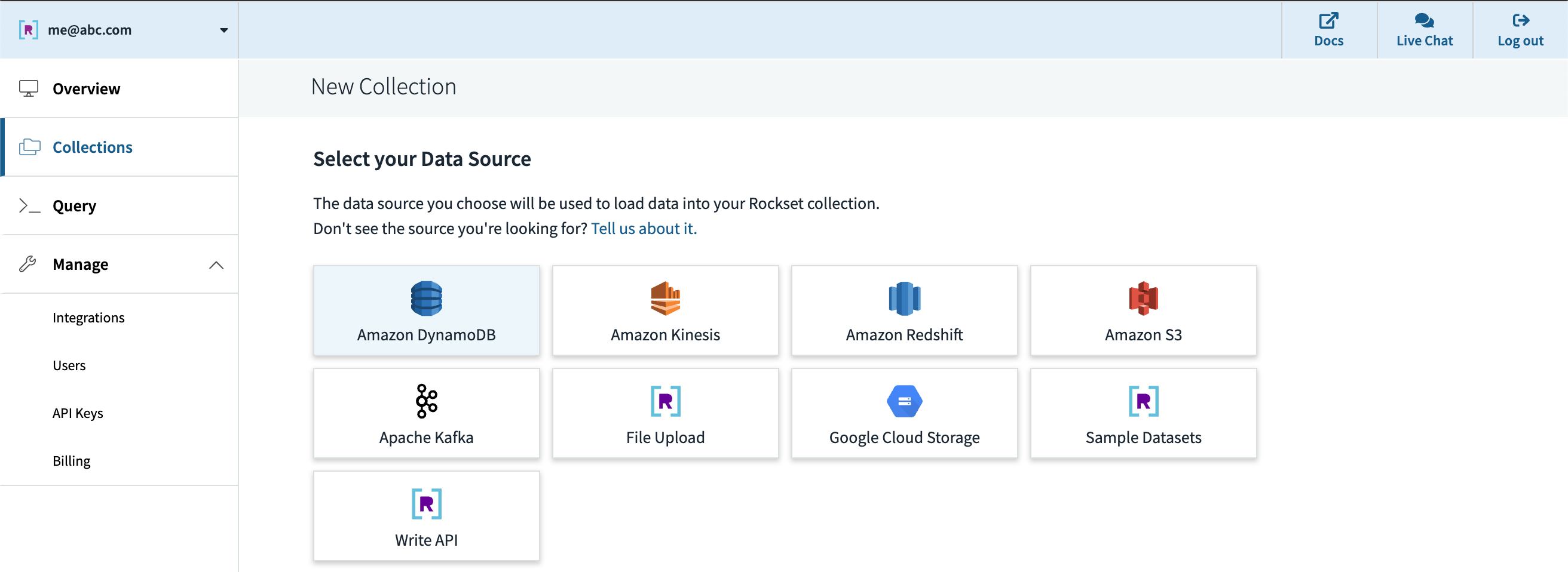

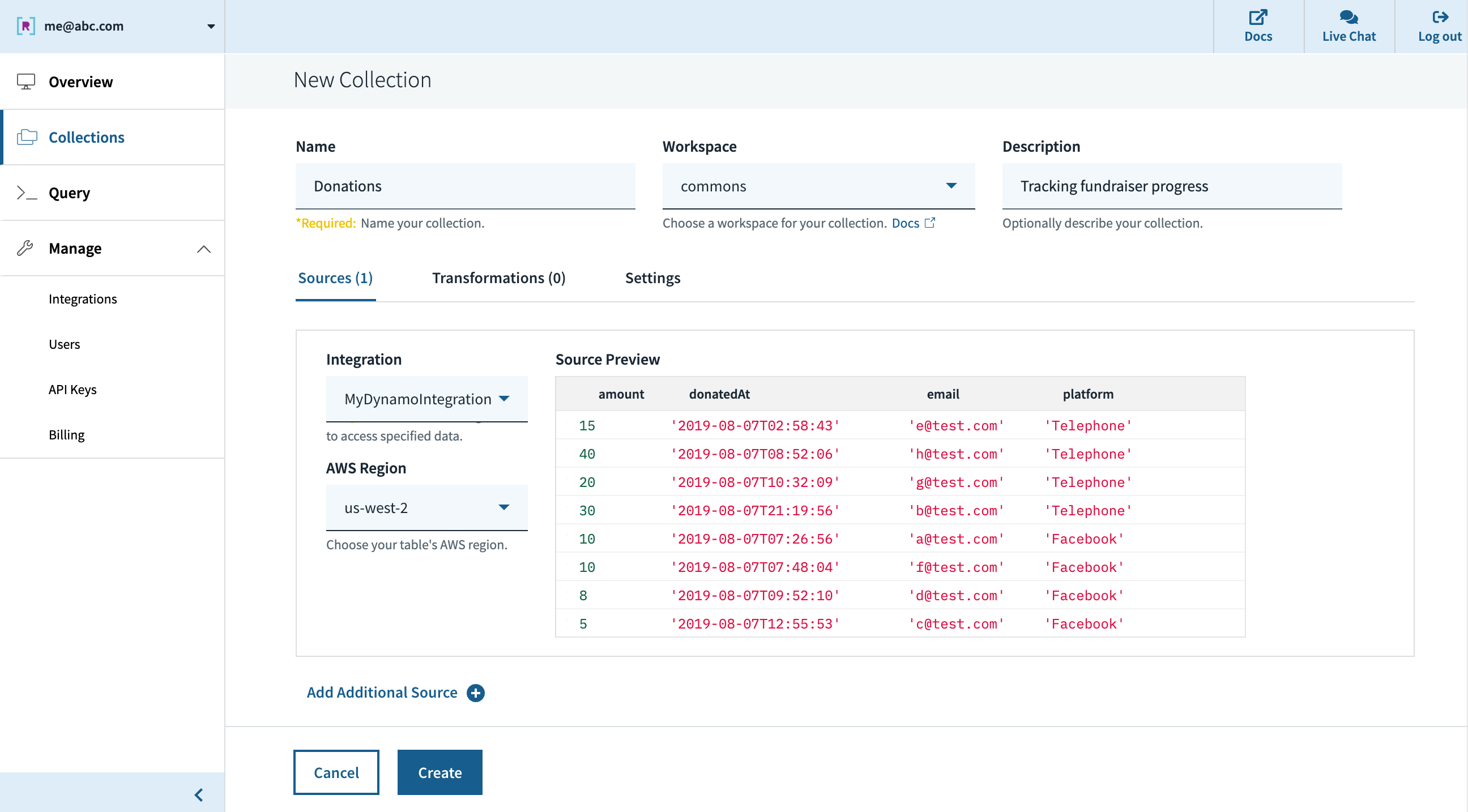

After creating an account at www.rockset.com, we’ll use the console to arrange our first integration– a set of credentials used to entry our information. Since we’re utilizing DynamoDB as our information supply, we’ll present Rockset with an AWS entry key and secret key pair that has correctly scoped permissions to learn from the DynamoDB desk we wish. Subsequent we’ll create a set– the equal of a DynamoDB/SQL desk– and specify that it ought to pull information from our DynamoDB desk and authenticate utilizing the combination we simply created. The preview window within the console will pull a couple of information from the DynamoDB desk and show them to ensure all the things labored appropriately, after which we’re good to press “Create”.

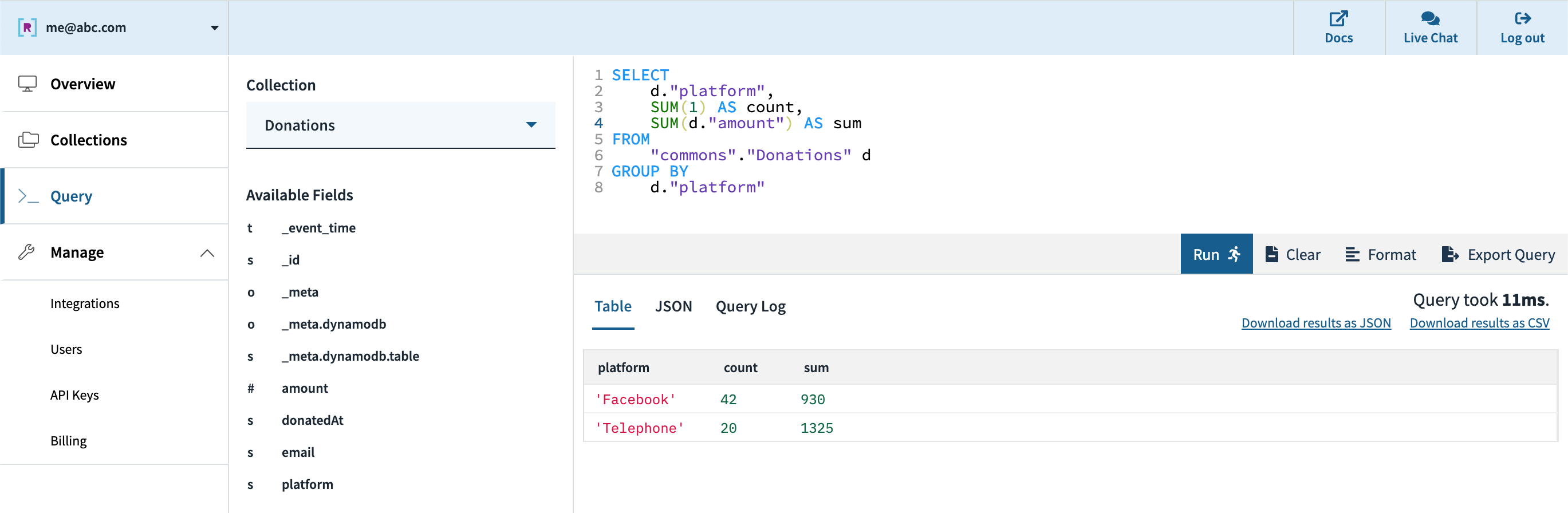

Quickly after, we are able to see within the console that the gathering is created and information is streaming in from DynamoDB. We will use the console’s question editor to experiment/tune the SQL queries that might be utilized in our stay dashboard. Since Rockset has its personal question compiler/execution engine, there’s first-class help for arrays, objects, and nested information buildings.

Subsequent, we are able to create an API key within the console which might be utilized by the dashboard for authentication to Rockset’s servers. Our choices for connecting to a BI instrument like Tableau, Redash, and many others. are the JDBC driver that Rockset offers or the native Rockset integration for those who have one.

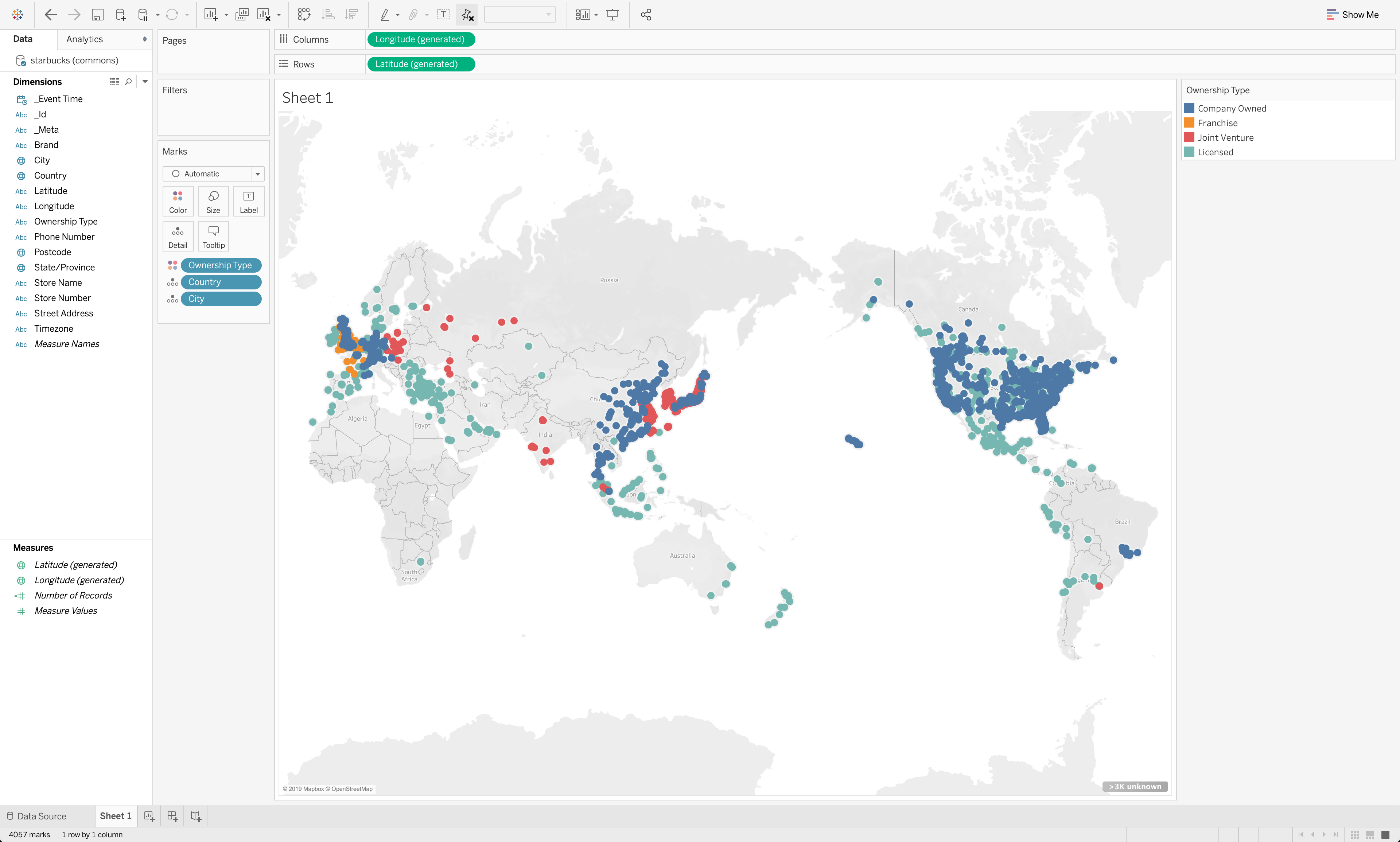

We have now efficiently gone from DynamoDB information to a quick, interactive dashboard on Tableau, or different BI instrument of alternative. Rockset’s cloud-native structure permits it to scale question efficiency and concurrency dynamically as wanted, enabling quick queries even on giant datasets with advanced, nested information with inconsistent varieties.

Execs:

- Serverless– quick setup, no-code DynamoDB integration, and 0 configuration/administration required

- Designed for low question latency and excessive concurrency out of the field

- Integrates with DynamoDB (and different sources) in real-time for low information latency with no pipeline to take care of

- Robust dynamic typing and good schemas deal with combined varieties and works effectively with NoSQL programs like DynamoDB

- Integrates with a wide range of BI instruments (Tableau, Redash, Grafana, Superset, and many others.) and customized dashboards (by way of shopper SDKs, if wanted)

Cons:

- Optimized for lively dataset, not archival information, with candy spot as much as 10s of TBs

- Not a transactional database

- It’s an exterior service

TLDR:

- Contemplate this method in case you have strict necessities on having the most recent information in your real-time dashboards, must help giant numbers of customers, or wish to keep away from managing advanced information pipelines

- Constructed-in integrations to shortly go from DynamoDB (and lots of different sources) to stay dashboards

- Can deal with combined varieties, syncing an present desk, and tons of quick queries

- Finest for information units from a couple of GBs to 10s of TBs

For extra sources on how you can combine Rockset with DynamoDB, take a look at this weblog put up that walks by way of a extra advanced instance.

Conclusion

On this put up, we thought of a couple of approaches to enabling normal BI instruments, like Tableau, Redash, Grafana, and Superset, for real-time dashboards on DynamoDB, highlighting the professionals and cons of every. With this background, it is best to have the ability to consider which possibility is correct in your use case, relying in your particular necessities for question and information latency, concurrency, and ease of use, as you implement operational reporting and analytics in your group.

Different DynamoDB sources: