The UK’s BBC has complained that Apple’s notification summarization feature in iOS 18 completely fabricated the gist of an article. Here is what happened, and why.

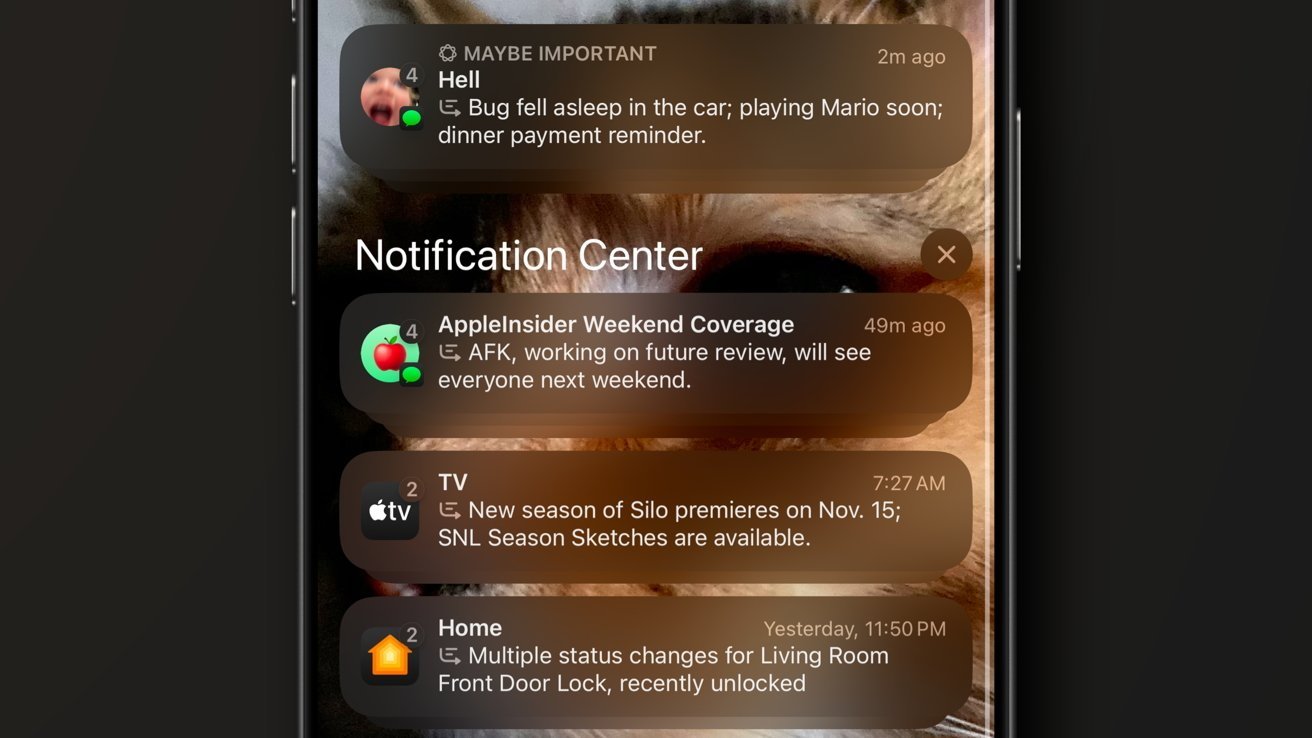

The introduction of Apple Intelligence included summarization features, saving users time by offering the key points of a document or a collection of notifications. On Friday, the summarization of notifications was a big problem for one major news outlet.

The BBC has complained to Apple about how the summarization misinterprets news headlines and comes up with the wrong conclusion when producing summaries. A spokesperson said Apple was contacted to “raise this concern and fix the problem.”

In an example offered in its public complaint, a notification summarizing BBC News states “Luigi Mangione shoots himself,” referring to the man arrested for the murder of UnitedHealthcare CEO Brian Thompson. Mangione, who is in custody, is very much alive.

“It is essential to us that our audiences can trust any information or journalism published in our name and that includes notifications,” said the spokesperson.

Incorrect summarizations aren’t just an issue for the BBC, as the New York Times has also fallen victim. According to a Bluesky post about a November 21 summary, the notification claimed “Netanyahu arrested,” but the story was actually about the International Criminal Court issuing an arrest warrant for the Israeli prime minister.

Apple declined to comment to the BBC.

Hallucinating the news

The instances of incorrect summaries are referred to as “hallucinations.” The term describes an AI model coming up with responses that are not quite factual, even in the face of extremely clear sets of data, such as a news story.

Hallucinations can be a big problem for AI services, especially in cases where consumers rely on getting a simple and straightforward answer to a query. It is also something that companies other than Apple have to deal with.

For example, early versions of Google’s Bard AI, now Gemini, somehow combined Malcolm Owen the AppleInsider writer with the dead singer of the same name from the band The Ruts.

Hallucinations can happen in models for a variety of reasons, such as issues with the training data or the training process itself, or a misapplication of learned patterns to new data. The model may also lack enough context in its data and prompt to offer a fully correct response, or it may make an incorrect assumption about the source data.

It is unknown what exactly is causing the headline summarization issues in this instance. The source article was clear about the shooter, and said nothing about an attack on the man.

This is a problem that Apple CEO Tim Cook understood was a potential issue at the time of announcing Apple Intelligence. In June, he acknowledged that it would be “short of 100%,” but that it would still be “very high quality.”

In August, it was revealed that Apple Intelligence had instructions specifically to counter hallucinations, including the phrases “Do not hallucinate. Do not make up factual information.”

It is also unclear whether Apple will want to, or be able to, do much about the hallucinations, because it chooses not to monitor what users are actively seeing on their devices. Apple Intelligence prioritizes on-device processing where possible, a security measure that also means Apple won’t get back much feedback on actual summarization results.