MongoDB.live took place last week, and Rockset had the opportunity to participate alongside members of the MongoDB community and share our work on making MongoDB data accessible via real-time external indexing. In our session, we discussed the need for modern data-driven applications to perform real-time aggregations and joins, and how Rockset uses MongoDB change streams and Converged Indexing to deliver fast queries on data from MongoDB.

Data-Driven Applications Need Real-Time Aggregations and Joins

Developers of data-driven applications face many challenges. Today's applications often operate on data from multiple sources: databases like MongoDB, streaming platforms, and data lakes. The data volumes these applications need to analyze typically scale into multiple terabytes. Above all, applications need fast queries on live data to personalize user experiences, provide real-time customer 360s, or detect anomalous situations, as the case may be.

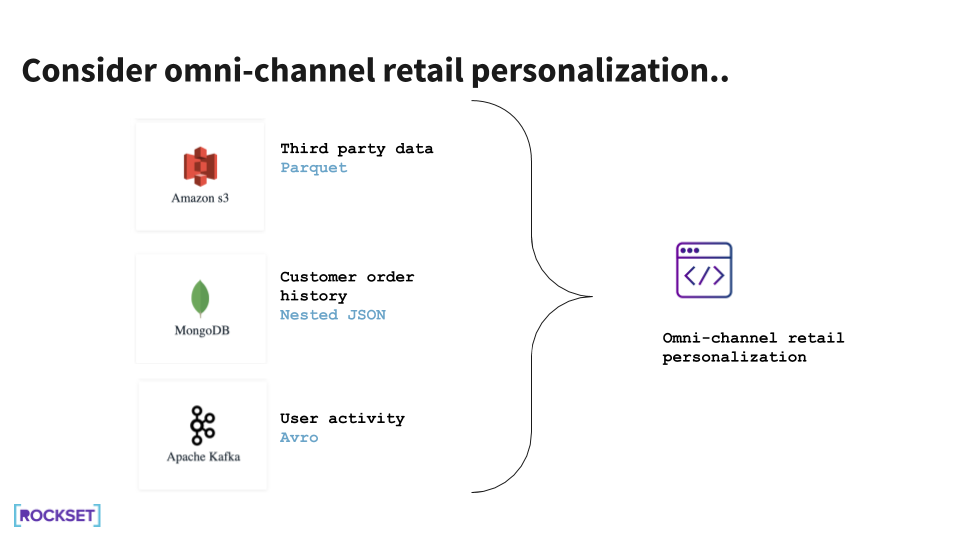

An omni-channel retail personalization application, for example, may require order data from MongoDB, user activity streams from Kafka, and third-party data from a data lake. The application has to determine what product recommendation or offer to deliver to customers in real time, while they are on the website.

Real-Time Architecture Today

One of two options is typically used to support these real-time data-driven applications today.

- We can continuously ETL all new data from multiple data sources, such as MongoDB, Kafka, and Amazon S3, into another system, like PostgreSQL, that can support aggregations and joins. However, it takes time and effort to build and maintain the ETL pipelines. Not only would we have to update our pipelines regularly to handle new data sets or changed schemas, but the pipelines would also add latency, so the data would be stale by the time it could be queried in the second system.

- We can load new data from other data sources (Kafka and Amazon S3) into our production MongoDB instance and run our queries there. We would be responsible for building and maintaining pipelines from these sources to MongoDB. This solution works well at smaller scale, but scaling data, queries, and performance can prove difficult. It would require managing multiple indexes in MongoDB and writing application-side logic to support complex queries like joins.
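To illustrate what "application-side logic" for a join can look like in the second option, here is a minimal sketch in Python. The collection shapes and the `revenue_by_city` helper are hypothetical; the point is only that the join and the aggregation have to be written and maintained in application code rather than expressed in a query.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable

Document = Dict[str, Any]


def revenue_by_city(orders: Iterable[Document], users: Iterable[Document]) -> Dict[str, float]:
    """Join orders to users on user_id, then sum order totals per city."""
    # Build a lookup table from the "users" side of the join.
    city_by_user = {user["_id"]: user["city"] for user in users}

    totals: Dict[str, float] = defaultdict(float)
    for order in orders:
        city = city_by_user.get(order["user_id"])
        if city is not None:  # inner join: skip orders with no matching user
            totals[city] += order["total"]
    return dict(totals)


# Hypothetical documents, as they might be fetched from two collections.
users = [
    {"_id": "u1", "city": "Austin"},
    {"_id": "u2", "city": "Boston"},
]
orders = [
    {"_id": "o1", "user_id": "u1", "total": 40.0},
    {"_id": "o2", "user_id": "u2", "total": 15.0},
    {"_id": "o3", "user_id": "u1", "total": 25.0},
    {"_id": "o4", "user_id": "u9", "total": 99.0},  # no matching user
]

result = revenue_by_city(orders, users)
```

Every new join or grouping needs another function like this one, which is the maintenance cost the bullet above refers to.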

A Real-Time External Indexing Approach

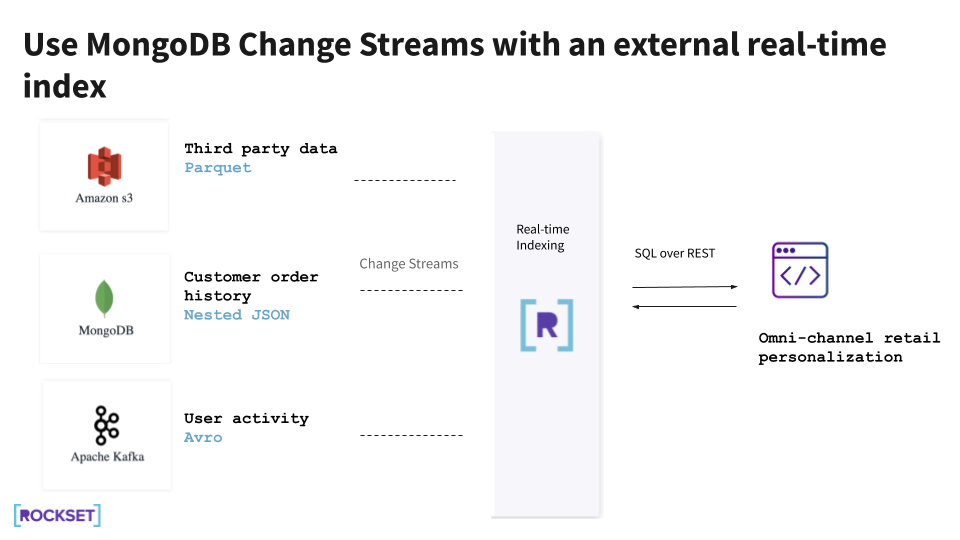

We can take a different approach to meeting the requirements of data-driven applications.

Using Rockset for real-time indexing allows us to create APIs simply using SQL for search, aggregations, and joins. This means no extra application-side logic is needed to support complex queries. Instead of our creating and managing indexes ourselves, Rockset automatically builds indexes on ingested data. And Rockset ingests data without requiring a pre-defined schema, so we can skip ETL pipelines and query the latest data.

Rockset provides built-in connectors to MongoDB and other common data sources, so we don't have to build our own. For MongoDB Atlas, the Rockset connector uses MongoDB change streams to continuously sync from MongoDB without affecting production MongoDB.
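To make the idea of syncing from change streams concrete, here is a minimal Python sketch of keeping a downstream copy current by applying change events one at a time. This is not Rockset's connector code. The event fields (`operationType`, `documentKey`, `fullDocument`, `updateDescription`) follow MongoDB's documented change event format, while `apply_change_event` and the in-memory `replica` dict are hypothetical, and updates are assumed to touch top-level fields only.

```python
from typing import Any, Dict

Document = Dict[str, Any]


def apply_change_event(replica: Dict[Any, Document], event: Document) -> None:
    """Apply one MongoDB-style change event to an in-memory copy keyed by _id."""
    op = event["operationType"]
    doc_id = event["documentKey"]["_id"]

    if op in ("insert", "replace"):
        # The event carries the whole document.
        replica[doc_id] = dict(event["fullDocument"])
    elif op == "update":
        # The event carries only the delta (assumption: top-level fields).
        doc = replica.setdefault(doc_id, {"_id": doc_id})
        changes = event["updateDescription"]
        doc.update(changes.get("updatedFields", {}))
        for field in changes.get("removedFields", []):
            doc.pop(field, None)
    elif op == "delete":
        replica.pop(doc_id, None)
    # Other event types (for example "drop" or "invalidate") are ignored here.


# Simulated events, in the order a change stream would deliver them.
events = [
    {"operationType": "insert", "documentKey": {"_id": 1},
     "fullDocument": {"_id": 1, "status": "new", "total": 40, "coupon": "X"}},
    {"operationType": "insert", "documentKey": {"_id": 2},
     "fullDocument": {"_id": 2, "status": "new", "total": 15}},
    {"operationType": "update", "documentKey": {"_id": 1},
     "updateDescription": {"updatedFields": {"status": "shipped"},
                           "removedFields": ["coupon"]}},
    {"operationType": "delete", "documentKey": {"_id": 2}},
]

replica: Dict[Any, Document] = {}
for event in events:
    apply_change_event(replica, event)
```

With PyMongo, events of this shape can be read by iterating over `collection.watch()`, which requires a replica set or an Atlas cluster. Because the stream is consumed separately from the application's own reads and writes, the sync can run continuously alongside the production workload.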

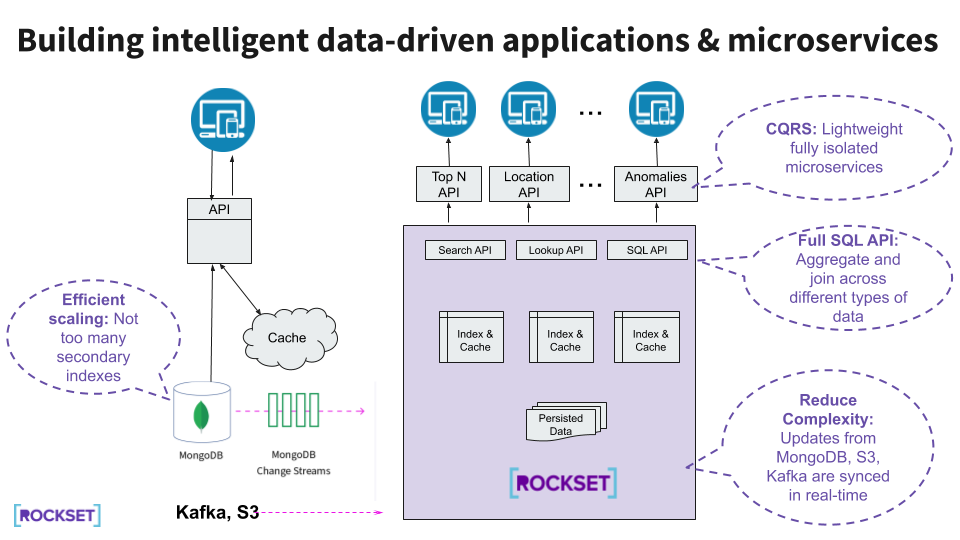

In this architecture, there is no need to modify MongoDB to support data-driven applications, as all the heavy reads from the applications are offloaded to Rockset. Using full-featured SQL, we can build different types of microservices on top of Rockset, such that they are isolated from the production MongoDB workload.

How Rockset Does Real-Time Indexing

Rockset was designed to be a fast indexing layer, synced to a primary database. Several aspects of Rockset make it well-suited for this role.

Converged Indexing

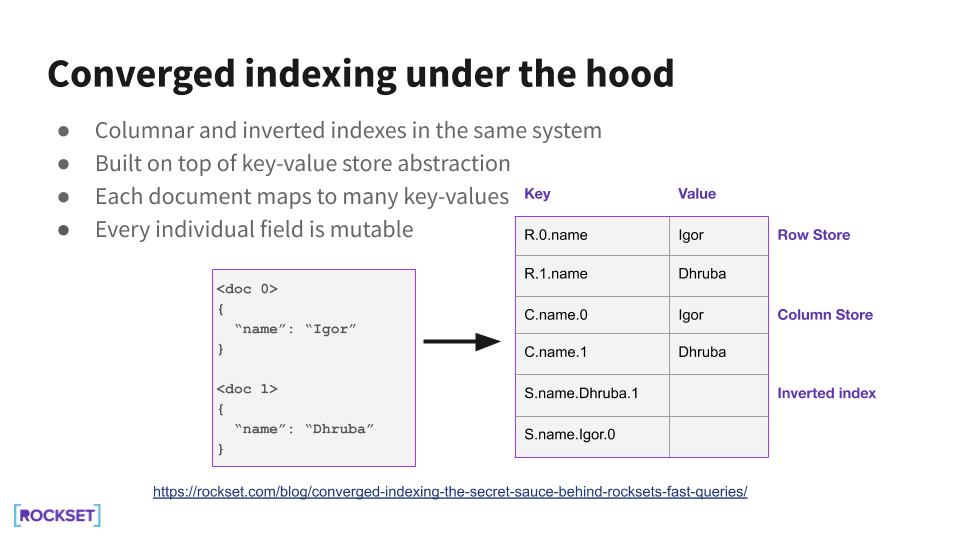

Rockset's Converged Index™ is a Rockset-specific feature in which all fields are indexed automatically. There is no need to create and maintain indexes or worry about which fields to index. Rockset indexes every single field, including nested fields. The Converged Index is designed to organize data so that it becomes queryable almost immediately after ingest and so that queries run fast.

Rockset stores every field of every document in an inverted index (like Elasticsearch does), a column-based index (like many data warehouses do), and a row-based index (like MongoDB or PostgreSQL). Each index is optimized for different types of queries.

Rockset is able to index everything efficiently by shredding documents into key-value pairs and storing them in RocksDB, a key-value store. Unlike other indexing solutions, like Elasticsearch, each field is mutable, meaning new fields can be added or individual fields updated without having to reindex the entire document.

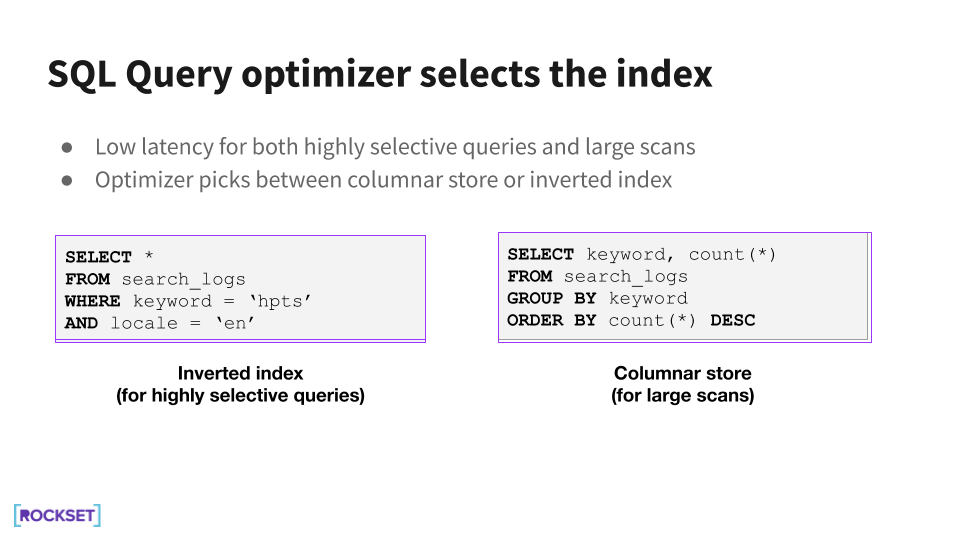

The inverted index helps with point lookups, while the column-based index makes it easy to scan through column values for aggregations. The query optimizer is able to select the most appropriate indexes to use when scheduling the query execution.
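The three index layouts can be illustrated with a toy Python sketch. This is not Rockset's implementation or its RocksDB key encoding: `ToyConvergedIndex`, its method names, and the plain dicts standing in for a key-value store are all hypothetical, and field values are assumed to be hashable scalars. The sketch shows a document being shredded into per-field entries, a single field being updated without touching the rest of the document, and the inverted and column layouts serving a point lookup and an aggregation respectively.

```python
from collections import defaultdict
from typing import Any, Dict, Iterator, Set, Tuple


def flatten(doc: Dict[str, Any], prefix: str = "") -> Iterator[Tuple[str, Any]]:
    """Yield (dotted.path, value) pairs, descending into nested dicts."""
    for key, value in doc.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            yield from flatten(value, path)
        else:
            yield path, value


class ToyConvergedIndex:
    def __init__(self) -> None:
        self.row: Dict[Tuple[str, str], Any] = {}                        # (doc_id, field) -> value
        self.column: Dict[str, Dict[str, Any]] = defaultdict(dict)       # field -> {doc_id: value}
        self.inverted: Dict[Tuple[str, Any], Set[str]] = defaultdict(set)  # (field, value) -> doc_ids

    def set_field(self, doc_id: str, field: str, value: Any) -> None:
        """Insert or update one field; other fields of the document are untouched."""
        if (doc_id, field) in self.row:
            old_value = self.row[(doc_id, field)]
            self.inverted[(field, old_value)].discard(doc_id)
        self.row[(doc_id, field)] = value
        self.column[field][doc_id] = value
        self.inverted[(field, value)].add(doc_id)

    def add_document(self, doc_id: str, doc: Dict[str, Any]) -> None:
        """Shred a document into one entry per field in each layout."""
        for field, value in flatten(doc):
            self.set_field(doc_id, field, value)

    def lookup(self, field: str, value: Any) -> Set[str]:
        """Point lookup served by the inverted layout."""
        return set(self.inverted.get((field, value), set()))

    def column_sum(self, field: str) -> Any:
        """Aggregation served by scanning one column."""
        return sum(self.column.get(field, {}).values())


index = ToyConvergedIndex()
index.add_document("o1", {"user": {"city": "Austin"}, "total": 40})
index.add_document("o2", {"user": {"city": "Boston"}, "total": 15})
index.add_document("o3", {"user": {"city": "Austin"}, "total": 25})

# Update a single nested field of one document.
index.set_field("o2", "user.city", "Austin")

austin_orders = index.lookup("user.city", "Austin")
total_revenue = index.column_sum("total")
```

A query optimizer choosing between layouts would, in this toy, amount to choosing between `lookup` for a selective filter and `column_sum` for a scan over one field.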

Schemaless Ingest

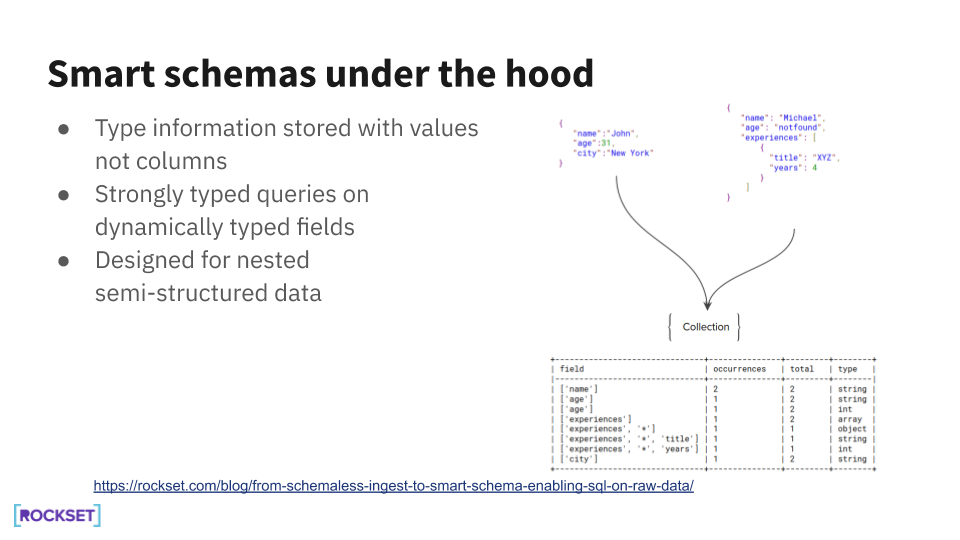

Another key requirement for real-time indexing is the ability to ingest data without a pre-defined schema. This makes it possible to avoid ETL processing steps when indexing data from MongoDB, which similarly has a flexible schema.

However, schemaless ingest alone is not particularly useful if we are not able to query the data being ingested. To solve this, Rockset automatically creates a schema on the ingested data so that it can be queried using SQL, a concept termed Smart Schema. In this way, Rockset enables SQL queries to be run on NoSQL data from MongoDB, data lakes, or data streams.
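As a rough illustration of deriving a schema from the data rather than declaring it up front, the following Python sketch records every field path and the value types observed for it. It is not how Smart Schema is implemented; `infer_schema` and the sample documents are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Set


def infer_schema(documents: Iterable[Dict[str, Any]]) -> Dict[str, Set[str]]:
    """Map each dotted field path to the set of type names seen for it."""
    schema: Dict[str, Set[str]] = defaultdict(set)

    def visit(value: Any, path: str) -> None:
        if isinstance(value, dict):
            for key, child in value.items():
                visit(child, f"{path}.{key}" if path else key)
        else:
            schema[path].add(type(value).__name__)

    for doc in documents:
        visit(doc, "")
    return dict(schema)


# Documents with differing fields and a field whose type varies.
documents = [
    {"_id": 1, "price": 10, "tags": ["sale"]},
    {"_id": 2, "price": "12.50", "address": {"zip": "78701"}},
]

schema = infer_schema(documents)
```

Here `price` ends up with two observed types, which is the kind of information a query layer needs in order to run typed SQL over documents that were never forced into a fixed schema.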

Disaggregated Aggregator-Leaf-Tailer Architecture

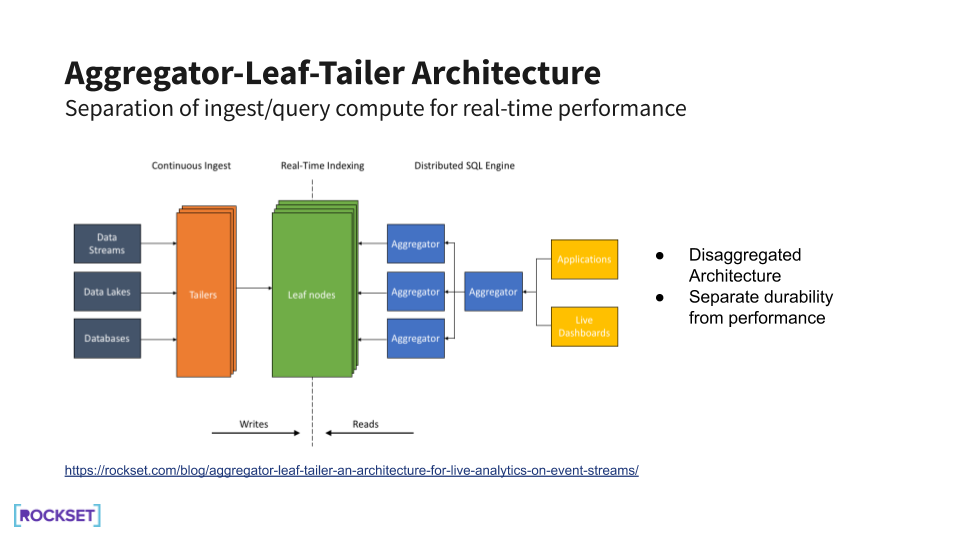

For real-time indexing, it is essential to deliver real-time performance for both ingest and query. To do so, Rockset uses a disaggregated Aggregator-Leaf-Tailer architecture that takes advantage of cloud elasticity.

Tailers ingest data continuously, leaves index and store the indexed data, and aggregators serve queries on the data. Each component of this architecture is decoupled from the others. Practically, this means that compute and storage can be scaled independently, depending on whether the application workload is compute- or storage-biased.

Further, within the compute portion, ingest compute can be scaled separately from query compute. On a bulk load, we can spin up more tailers to minimize the time required to ingest. Similarly, during spikes in application activity, we can spin up more aggregators to handle a higher rate of queries. Rockset is then able to make full use of cloud efficiencies to minimize latencies in the system.

Using MongoDB and Rockset Together

MongoDB and Rockset recently partnered to deliver a fully managed connector between MongoDB Atlas and Rockset. Using the two services together brings several benefits to users:

- Use any data in real time with schemaless ingest – Index continuously from MongoDB, other databases, data streams, and data lakes with built-in connectors.

- Create APIs in minutes using SQL – Create APIs using SQL for complex queries, like search, aggregations, and joins.

- Scale better by offloading heavy reads to a speed layer – Scale to millions of fast API calls without impacting production MongoDB performance.

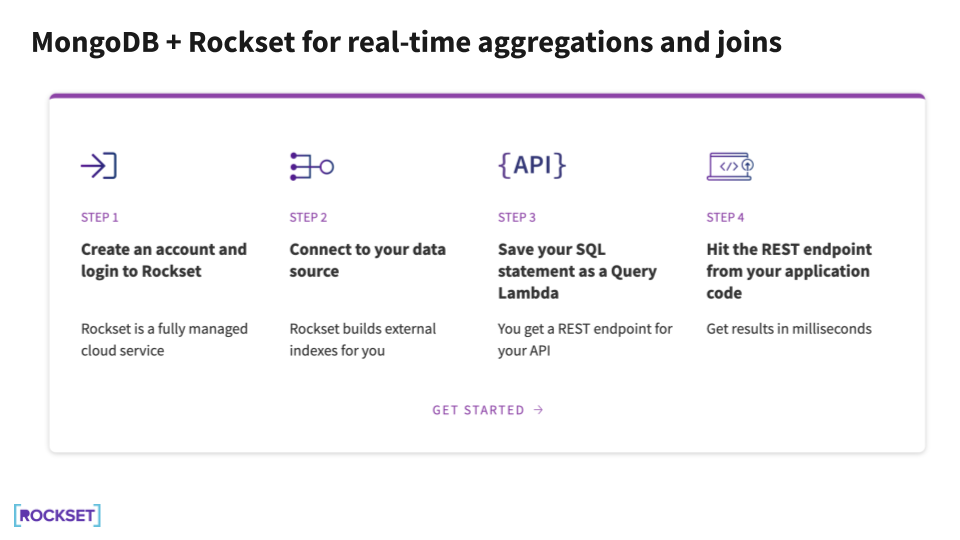

Putting MongoDB and Rockset together takes a few simple steps. We recorded a step-by-step walkthrough here to show how it's done. You can also check out our full MongoDB.live session here.

Ready to get started? Create your Rockset account now!

Other MongoDB resources: