MySQL and PostgreSQL are widely used as transactional databases. When it comes to supporting high-scale and analytical use cases, you often have to tune and configure these databases, which adds operational burden. Some challenges when doing analytics on MySQL and Postgres include:

- running a large number of concurrent queries/users

- working with large data sizes

- needing to define and manage tons of indexes.

There are workarounds for these problems, but they add even more operational burden:

- scaling to larger servers

- creating more read replicas

- moving to a NoSQL database

Rockset recently announced support for MySQL and PostgreSQL that lets you easily power real-time, complex analytical queries. This mitigates the need to tune these relational databases to handle heavy analytical workloads.

By integrating MySQL and PostgreSQL with Rockset, you can easily scale out to handle demanding analytics.

Preface

In the Twitch stream 👇, we did an integration with RDS MySQL on Rockset. This means all of the setup will be related to Amazon Relational Database Service (RDS) and AWS Database Migration Service (DMS). Before getting started, go ahead and create an AWS account and a Rockset account.

I’ll cover the main highlights of what we did in the Twitch stream in this blog. If you’re unsure about certain parts of the instructions, definitely check out the video down below.

Set Up MySQL Server

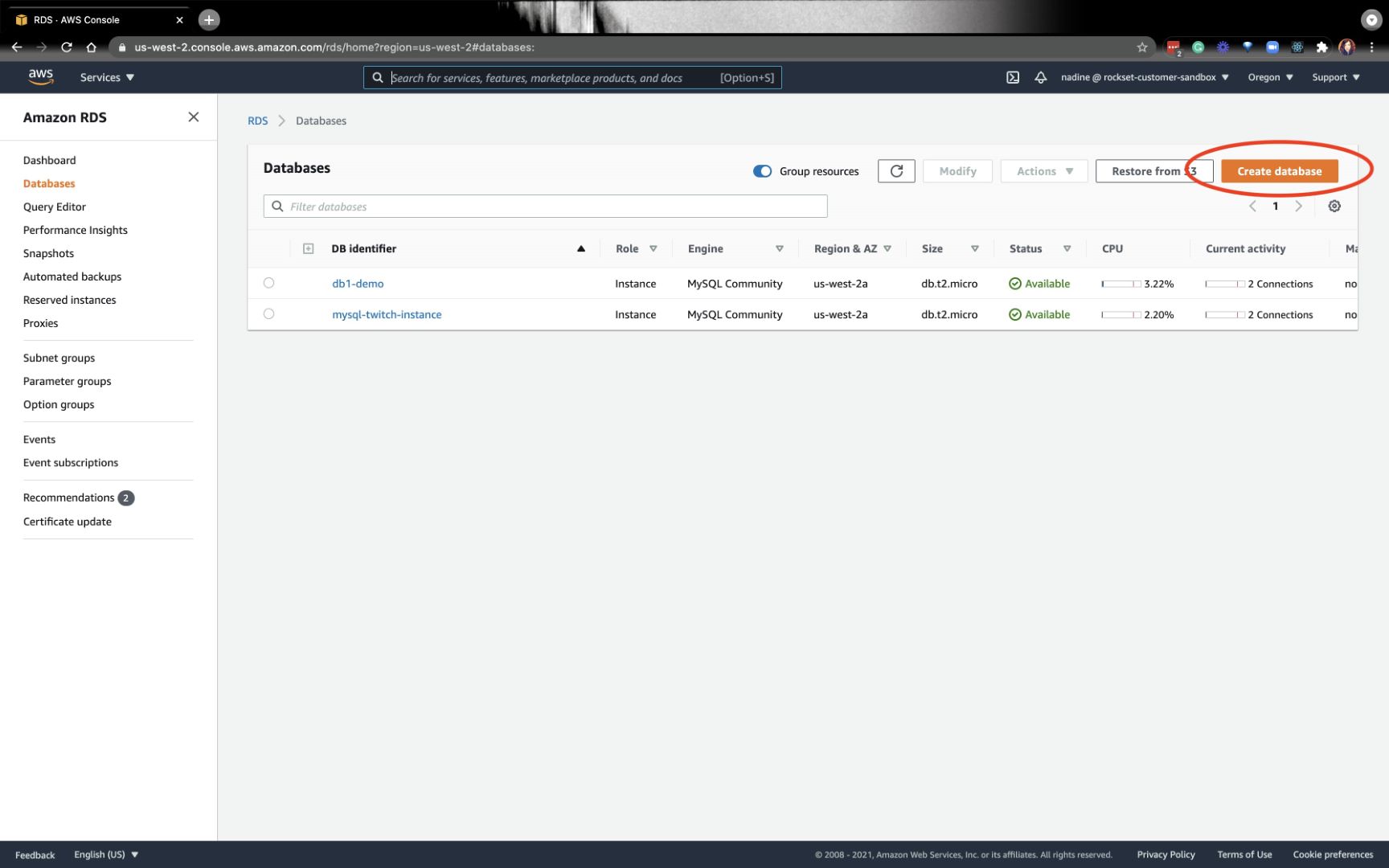

In our stream, we created a MySQL server on Amazon RDS. You can click on Create database in the upper right-hand corner and work through the instructions:

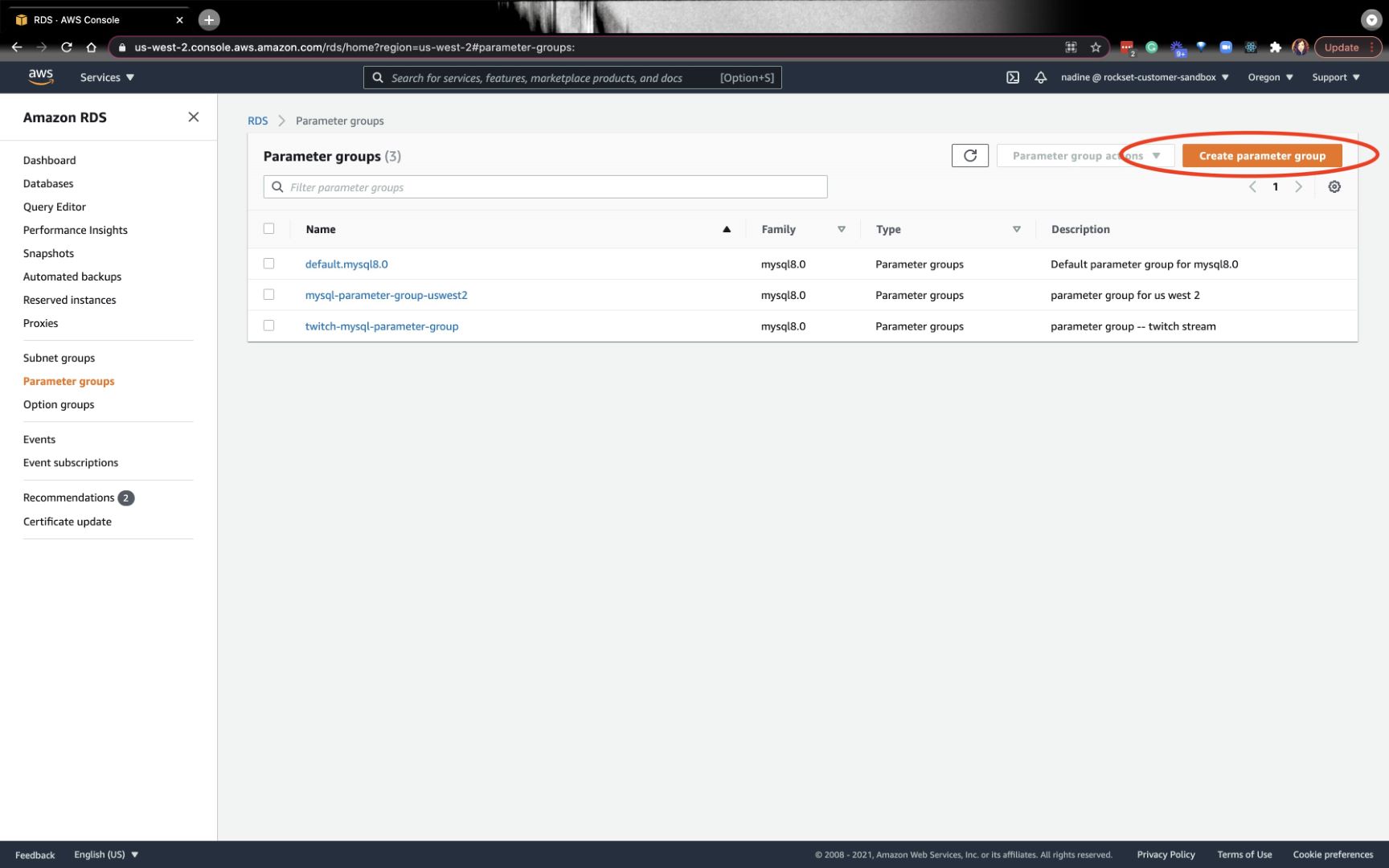

Now, we’ll create the parameter group. By creating a parameter group, we’ll be able to change binlog_format to ROW so we can dynamically update Rockset as the data changes in MySQL. Click on Create parameter group in the upper right-hand corner:

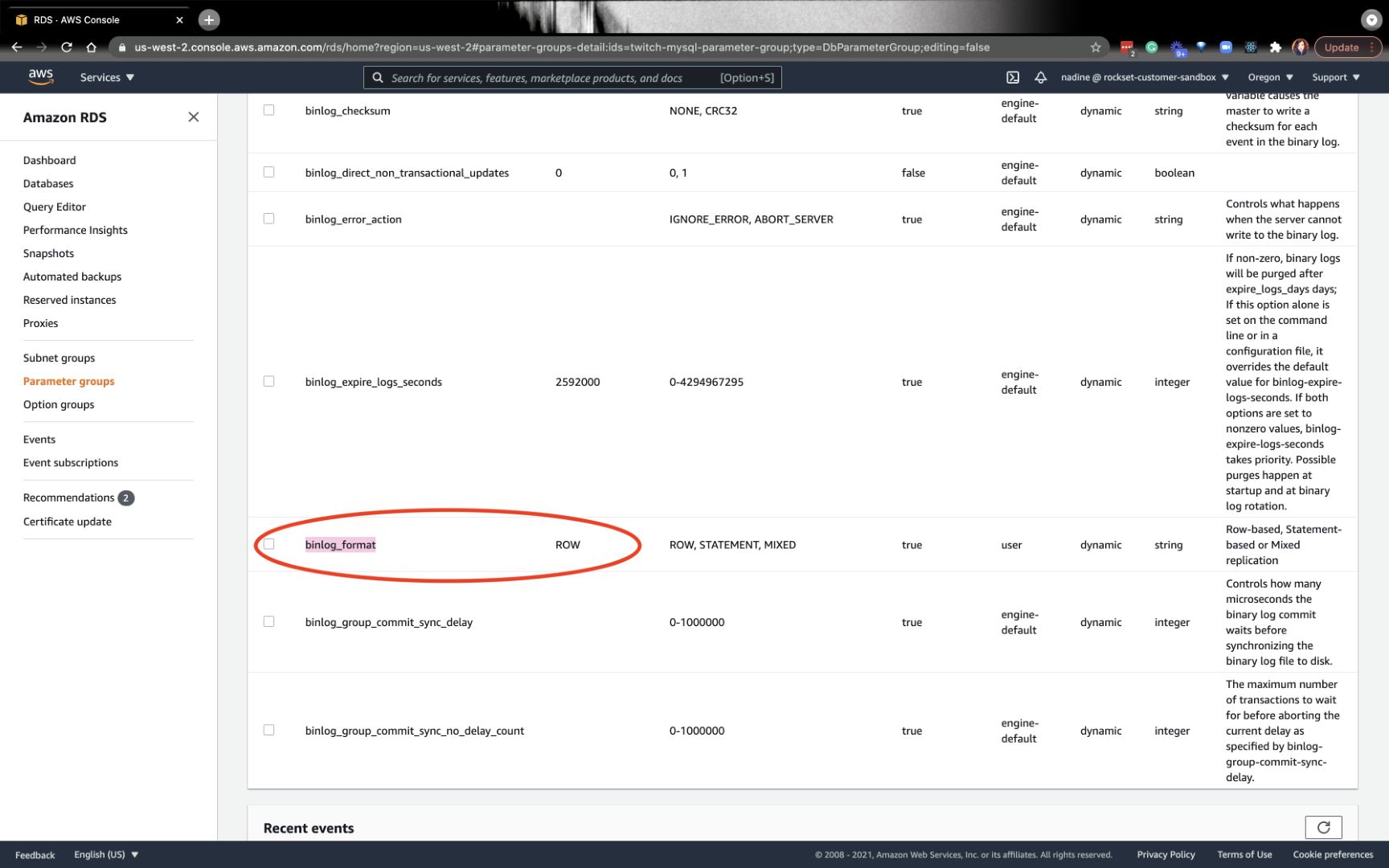

After you create your parameter group, click on the newly created group and change binlog_format to ROW:

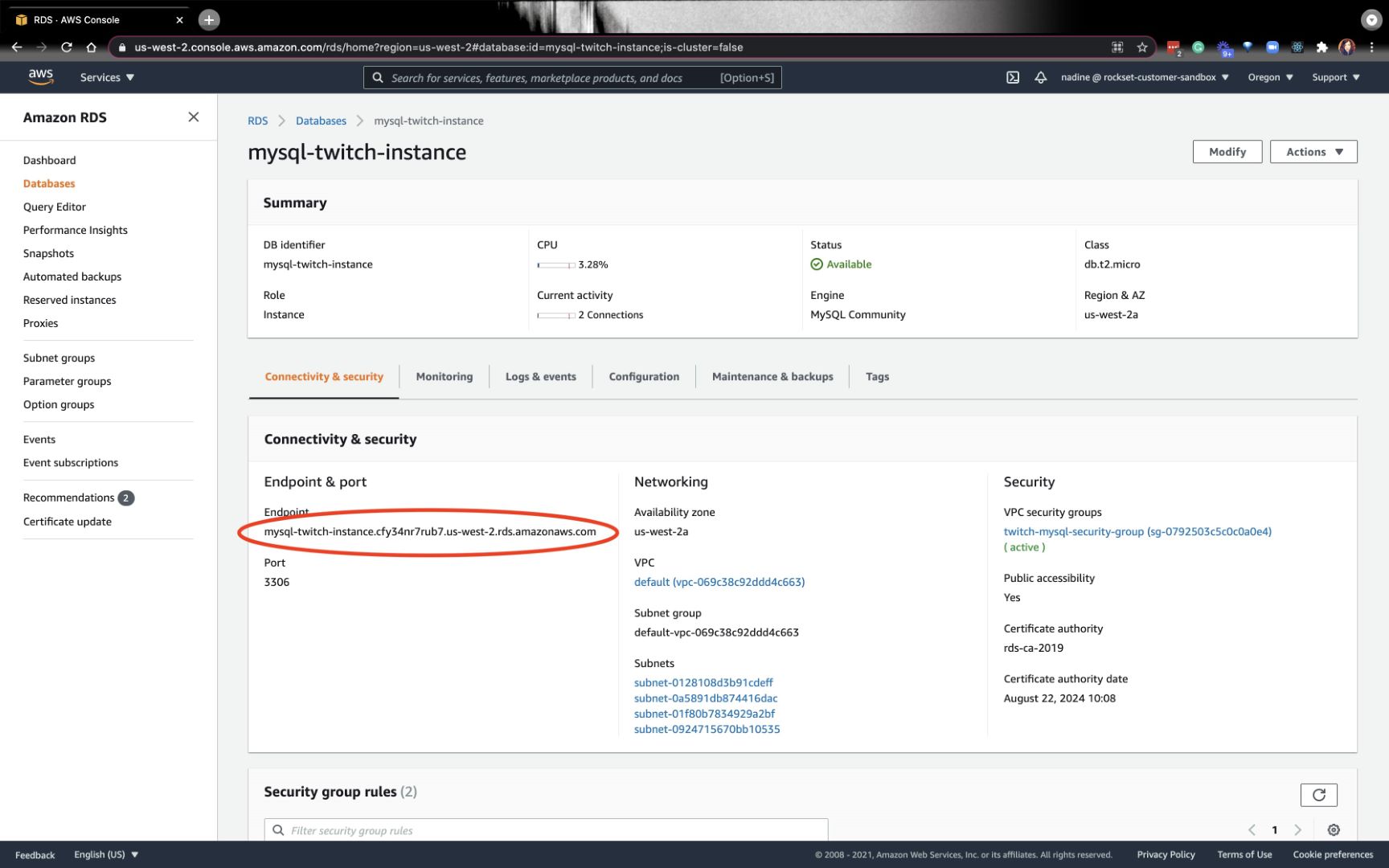
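
If you’d rather script this step than click through the console, here is a minimal sketch using boto3. It is not what we did in the stream. The group name `rockset-mysql-params` is a hypothetical placeholder, and the sketch assumes boto3 is installed, AWS credentials are configured, and binlog_format can be applied immediately (switch `ApplyMethod` to `pending-reboot` if that does not hold for your setup).

```python
def binlog_row_params(group_name):
    """Build the arguments for the RDS ModifyDBParameterGroup call."""
    return {
        "DBParameterGroupName": group_name,
        "Parameters": [
            {
                "ParameterName": "binlog_format",
                "ParameterValue": "ROW",
                # Assumption: the parameter can be changed without a reboot.
                "ApplyMethod": "immediate",
            }
        ],
    }


def apply_binlog_row(group_name):
    """Send the change to AWS. Needs boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above works without it

    rds = boto3.client("rds")
    return rds.modify_db_parameter_group(**binlog_row_params(group_name))


if __name__ == "__main__":
    # Hypothetical group name; replace it with the one you created.
    print(binlog_row_params("rockset-mysql-params"))
```

Remember that the parameter group only takes effect once it is attached to your RDS instance.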

After this is set, you want to access the MySQL server from the CLI so you can set the permissions. You can grab the endpoint from the Databases tab on the left, under the Connectivity & security settings:

In the terminal, type

$ mysql -u admin -p -h Endpoint

It’ll prompt you for the password.

Once inside, you want to type this:

mysql> CREATE USER 'aws-dms' IDENTIFIED BY 'youRpassword';

mysql> GRANT SELECT ON *.* TO 'aws-dms';

mysql> GRANT REPLICATION SLAVE ON *.* TO 'aws-dms';

mysql> GRANT REPLICATION CLIENT ON *.* TO 'aws-dms';
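
To double-check the grants, you can run `SHOW GRANTS FOR 'aws-dms';` in the MySQL shell and read the output. The sketch below is one way to automate that check, assuming you paste the output lines into a list. The helper name `missing_privileges` is made up for illustration, and it only looks at global (`*.*`) grants.

```python
import re

# The three global privileges granted to the DMS user above.
REQUIRED = {"SELECT", "REPLICATION SLAVE", "REPLICATION CLIENT"}


def missing_privileges(grant_lines):
    """Return the required privileges that SHOW GRANTS output does not list."""
    granted = set()
    for line in grant_lines:
        # Only consider global grants: GRANT <privileges> ON *.* TO ...
        match = re.match(r"GRANT (.+?) ON \*\.\* TO ", line.strip(), re.IGNORECASE)
        if not match:
            continue
        for privilege in match.group(1).split(","):
            granted.add(privilege.strip().upper())
    if "ALL PRIVILEGES" in granted:
        return set()
    return REQUIRED - granted


if __name__ == "__main__":
    sample = ["GRANT SELECT, REPLICATION SLAVE ON *.* TO `aws-dms`@`%`"]
    print(missing_privileges(sample))
```

An empty set means the user has everything the setup above asks for.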

This is probably a good point to create a table and insert some data. I did this part a little later in the stream, but you can easily do it here too.

mysql> use yourDatabaseName

mysql> CREATE TABLE MyGuests ( id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY, firstname VARCHAR(30) NOT NULL, lastname VARCHAR(30) NOT NULL, email VARCHAR(50), reg_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP );

mysql> INSERT INTO MyGuests (firstname, lastname, email)

-> VALUES ('John', 'Doe', 'john@example.com');

mysql> show tables;

That’s a wrap for this section. We set up a MySQL server, created a table, and inserted some data.

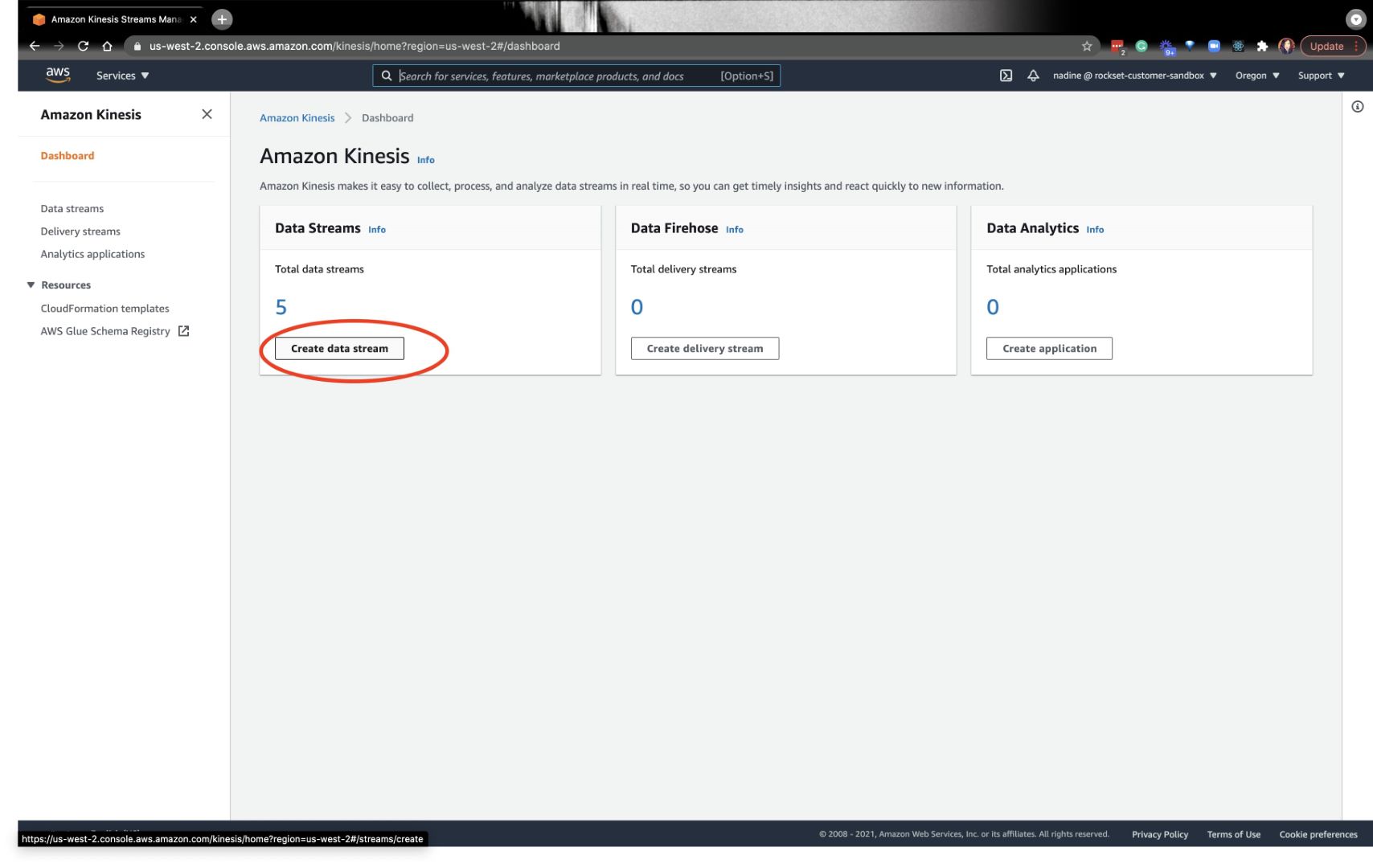

Create a Target AWS Kinesis Stream

Each table on MySQL will map to one Kinesis Data Stream. The Kinesis stream is the destination that DMS uses as the target of a migration task. Every MySQL table we want to connect to Rockset will require an individual migration task.

To summarize: each MySQL table will require its own Kinesis Data Stream and its own migration task.

Go ahead and navigate to Kinesis Data Streams and create a stream:
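
When you have more than one table, it helps to write the one-table, one-stream, one-task pairing down before creating anything. Here is a small sketch of that bookkeeping. The naming scheme and the `rockset` prefix are my own assumptions, not something AWS or Rockset requires; the names stick to lowercase letters, digits, and hyphens as a conservative choice.

```python
import re


def plan_resources(database, tables, prefix="rockset"):
    """Pair every table with one stream name and one migration task name."""
    plan = []
    for table in tables:
        raw = f"{prefix}-{database}-{table}".lower()
        # Collapse anything that is not a letter or digit into a single hyphen.
        slug = re.sub(r"[^a-z0-9]+", "-", raw).strip("-")
        plan.append(
            {
                "table": f"{database}.{table}",
                "stream": slug,
                "task": f"{slug}-task",
            }
        )
    return plan


if __name__ == "__main__":
    # Hypothetical database and table names.
    for row in plan_resources("yourDatabaseName", ["MyGuests"]):
        print(row)
```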

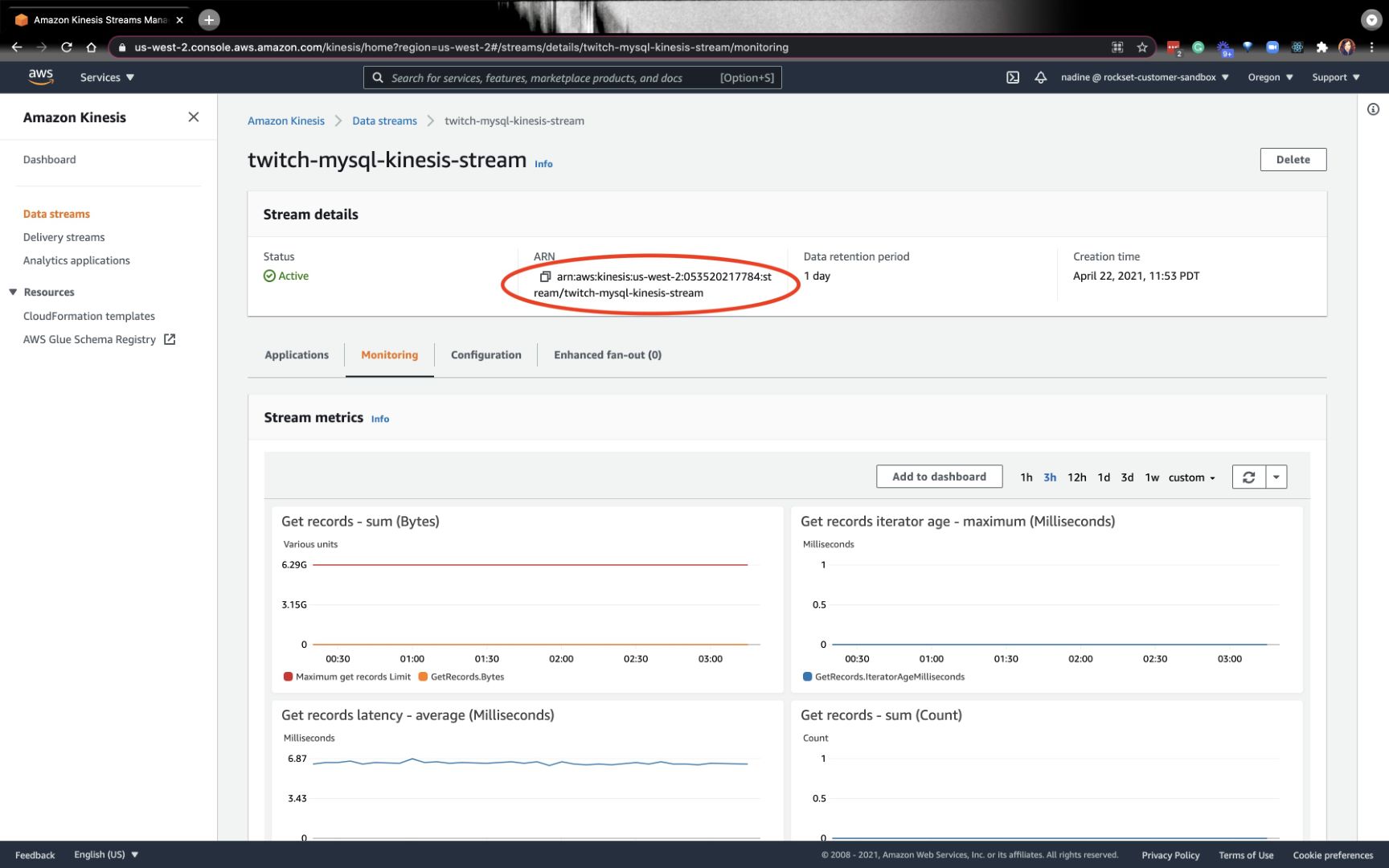

Be sure to bookmark the ARN for your stream, since we’re going to need it later:

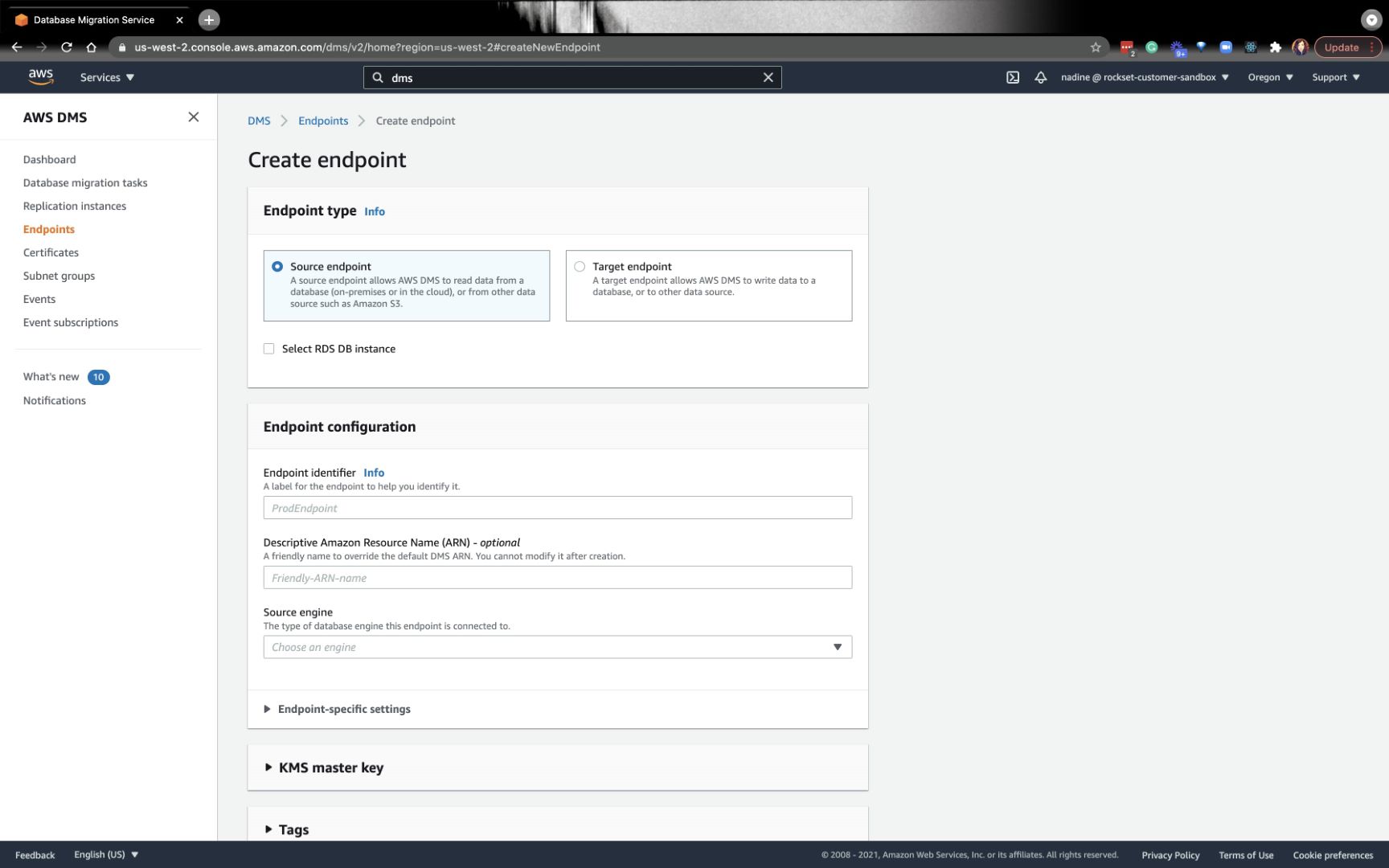

Create an AWS DMS Replication Instance and Migration Task

Now, we’re going to navigate to AWS DMS (Database Migration Service). The first thing we’re going to do is create a source endpoint and a target endpoint:

When you create the target endpoint, you’ll need the ARN of the Kinesis stream we created earlier. You’ll also need the Service access role ARN. If you don’t have this role, you’ll need to create it in the AWS IAM console. You can find more details about how to create this role in the stream shown down below.

From there, we’ll create the replication instance and data migration tasks. You can basically follow this part of the instructions in our docs or watch the stream.

Once the data migration task is successful, you’re ready for the Rockset portion!
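
For reference, the target endpoint boils down to three inputs: an identifier, the stream ARN, and the service access role ARN. The sketch below builds the arguments for boto3’s DMS `create_endpoint` call under those assumptions. The identifier and both ARNs are placeholders, and the JSON message format is my assumption, so check it against what the Rockset docs ask for.

```python
def kinesis_target_endpoint(identifier, stream_arn, role_arn):
    """Build the arguments for a DMS target endpoint that writes to Kinesis."""
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": "target",
        "EngineName": "kinesis",
        "KinesisSettings": {
            "StreamArn": stream_arn,
            "MessageFormat": "json",  # assumption: JSON-formatted records
            "ServiceAccessRoleArn": role_arn,
        },
    }


def create_target_endpoint(identifier, stream_arn, role_arn):
    """Create the endpoint in AWS. Needs boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above works without it

    dms = boto3.client("dms")
    return dms.create_endpoint(
        **kinesis_target_endpoint(identifier, stream_arn, role_arn)
    )


if __name__ == "__main__":
    # Placeholder values; use your own stream ARN and role ARN.
    print(
        kinesis_target_endpoint(
            "rockset-kinesis-target",
            "arn:aws:kinesis:us-west-2:123456789012:stream/example-stream",
            "arn:aws:iam::123456789012:role/example-dms-role",
        )
    )
```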
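
Each migration task needs a table mapping that tells DMS which table to pick up. Since we use one task per table, the mapping can be a single selection rule. Here is a sketch that generates that JSON; the helper name `table_mapping` is hypothetical, and the schema and table names are the placeholders used earlier in this post.

```python
import json


def table_mapping(schema, table):
    """Return DMS table-mapping JSON that includes exactly one table."""
    rules = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "1",
                "object-locator": {
                    "schema-name": schema,
                    "table-name": table,
                },
                "rule-action": "include",
            }
        ]
    }
    return json.dumps(rules, indent=2)


if __name__ == "__main__":
    print(table_mapping("yourDatabaseName", "MyGuests"))
```

You can paste the printed JSON into the task’s table mappings editor in the DMS console.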

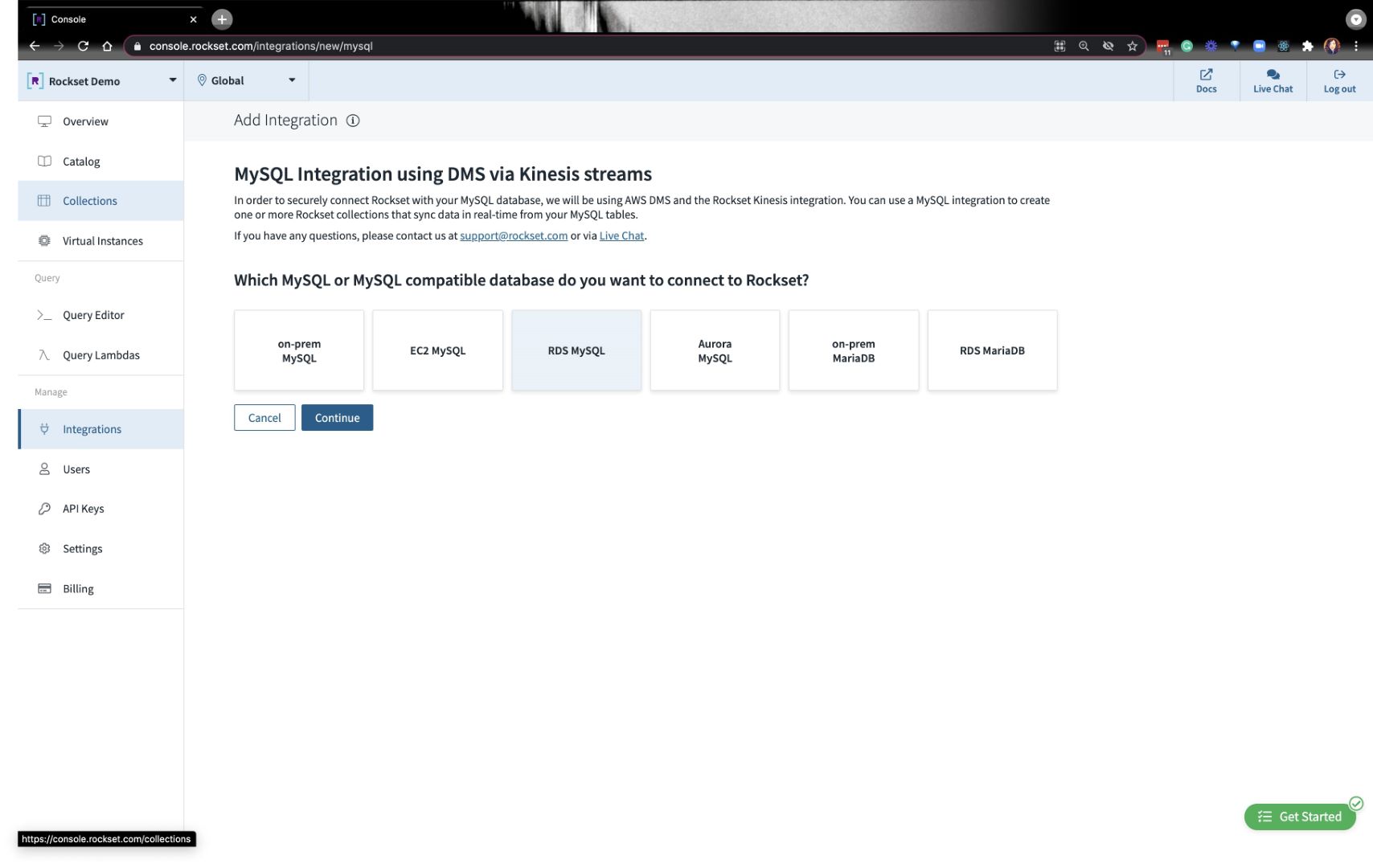

Scaling MySQL analytical workloads on Rockset

Once MySQL is connected to Rockset, any data changes made on MySQL will register on Rockset. You’ll be able to scale your workloads effortlessly as well. When you first create a MySQL integration and click on RDS MySQL, you’ll see prompts to make sure you completed the setup steps we just covered above.

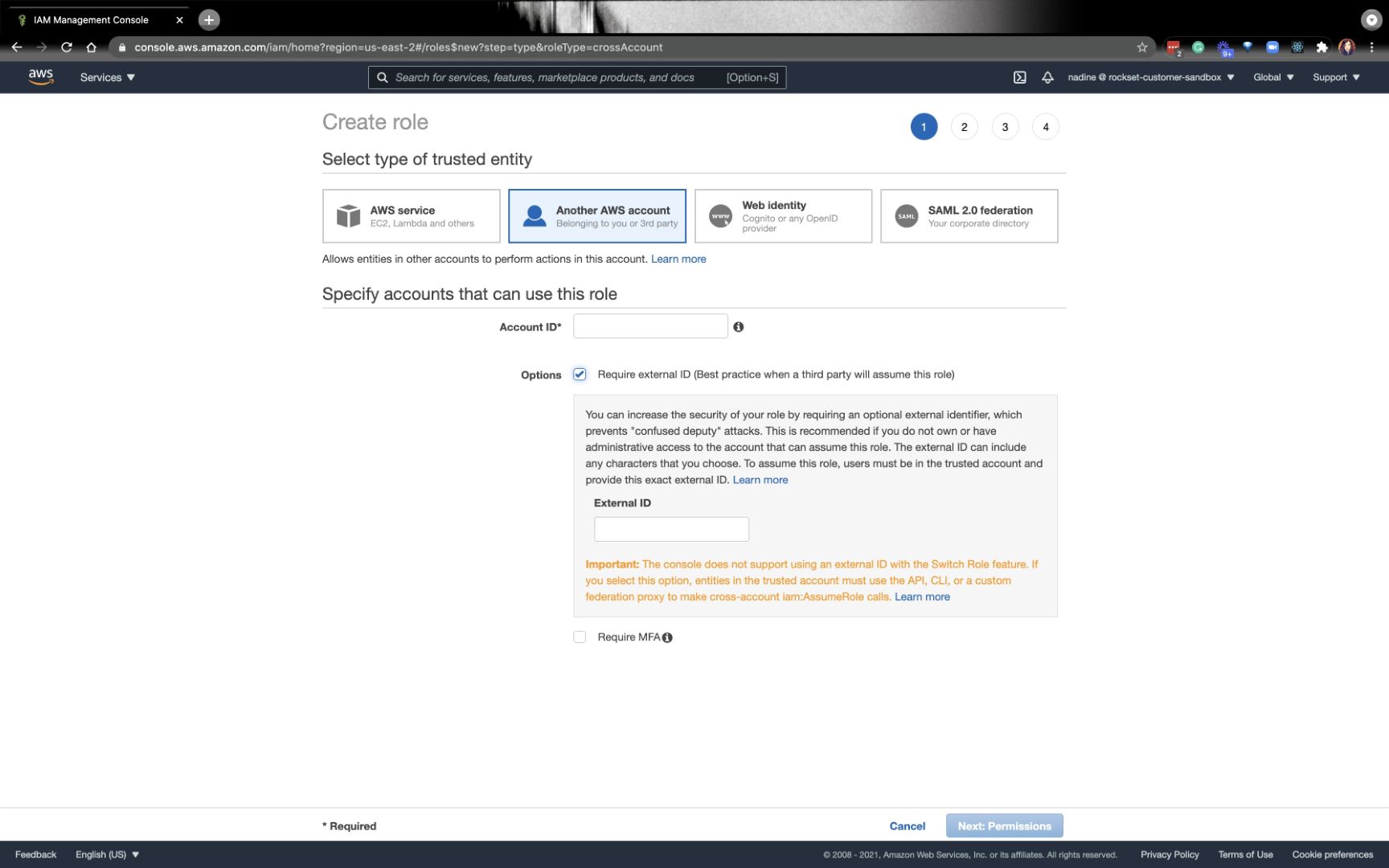

The last thing you’ll need to do is create a specific IAM role with Rockset’s Account ID and External ID:

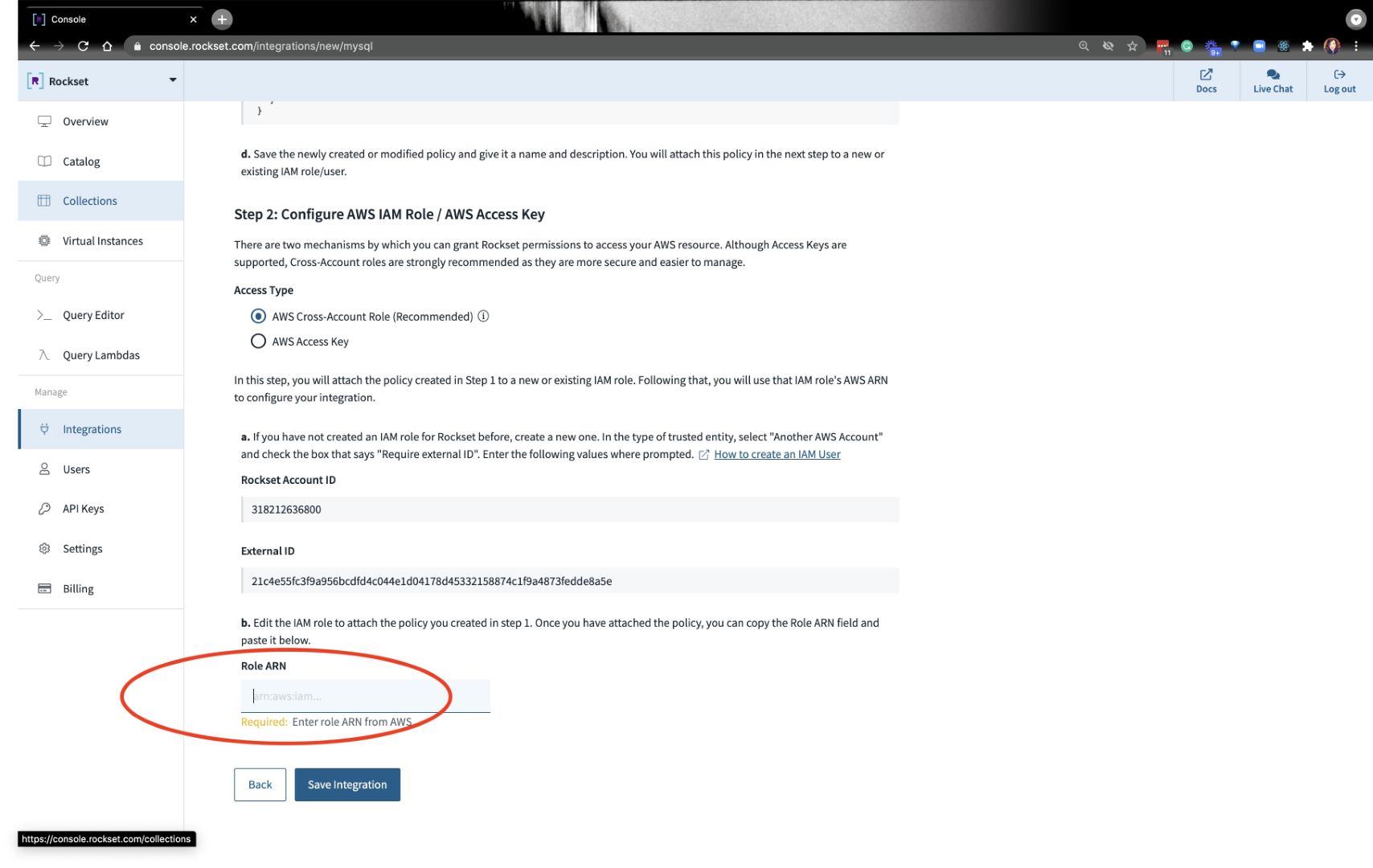
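
Under the hood, a cross-account role like this is defined by a trust policy that names the other account and requires the external ID. The sketch below shows what that policy typically looks like. The account ID and external ID here are dummy values, so copy the real ones from the Rockset console. The role also needs a permissions policy for reading your stream, which this sketch does not cover.

```python
import json


def trust_policy(account_id, external_id):
    """Build an IAM trust policy for a cross-account role with an external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {
                    "StringEquals": {"sts:ExternalId": external_id}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Dummy values for illustration only.
    print(json.dumps(trust_policy("123456789012", "example-external-id"), indent=2))
```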

You’ll grab the ARN from the role we created and paste it at the bottom where it asks for that information:

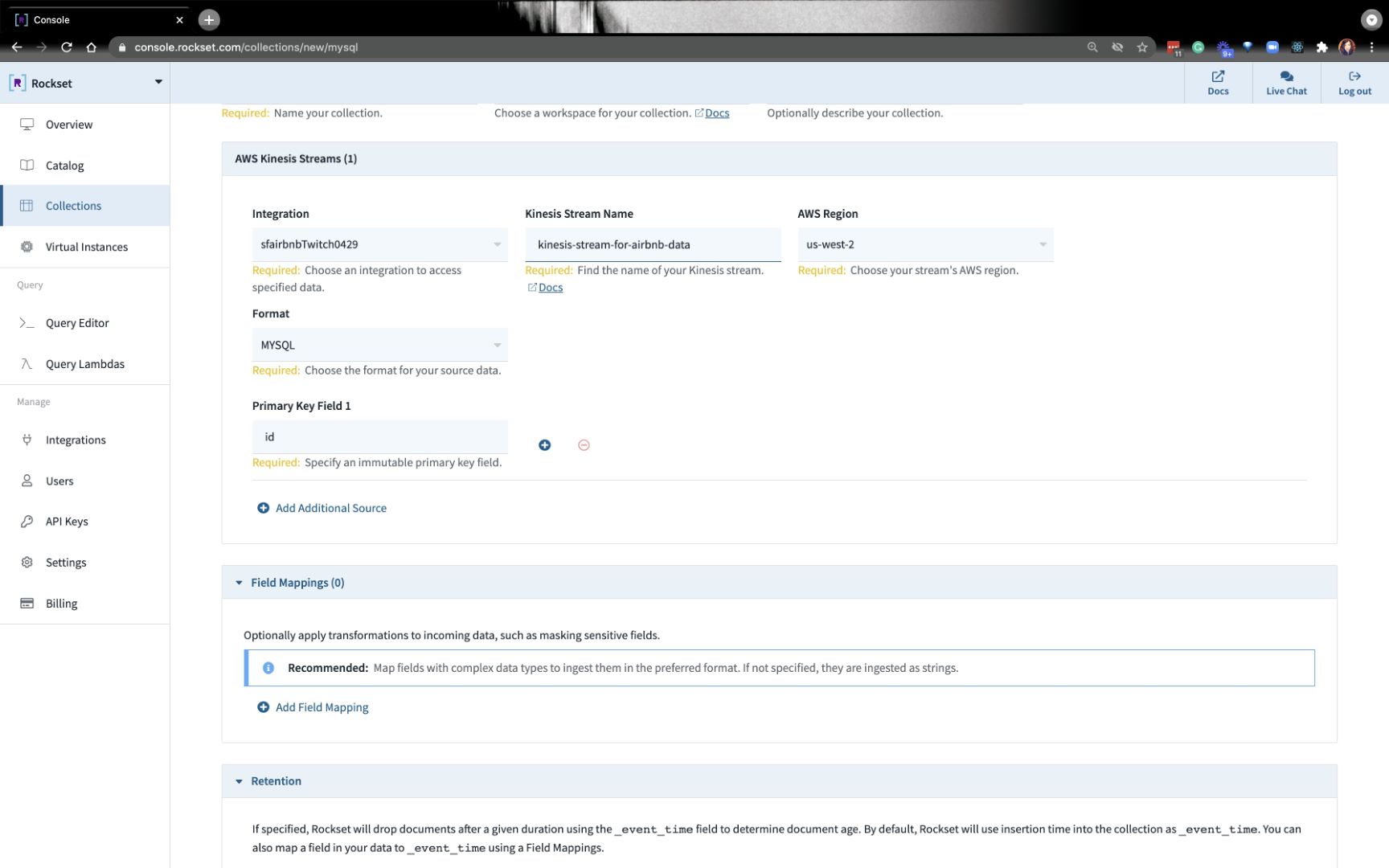

Once the integration is set up, you’ll need to create a collection. Go ahead and put in your collection name, AWS region, and Kinesis stream information:

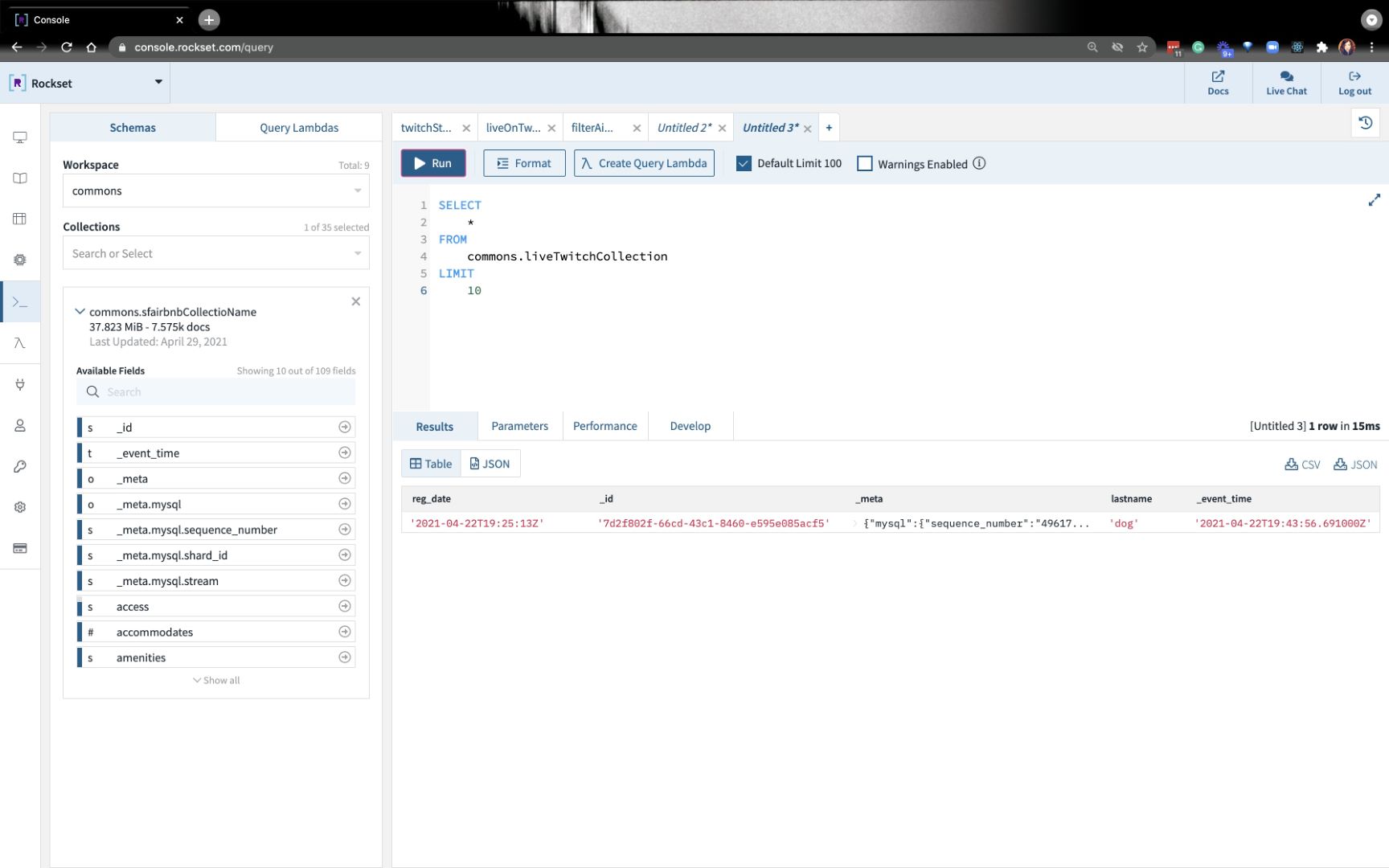

After a minute or so, you should be able to query the data that’s coming in from MySQL!

We just did a simple insert into MySQL to test that everything is working correctly. In the next blog, we’ll create a new table and add data to it. We’ll work on a few SQL queries.

You can catch the full replay of how we did this end-to-end here:

Embedded content: https://youtu.be/oNtmJl2CZf8

Or you can follow the instructions in the docs.

TLDR: you can find all the resources you need in the developer corner.