Until now, the majority of the world’s data transformations have been performed on top of data warehouses, query engines, and other databases that are optimized for storing large volumes of data and querying it for analytics occasionally. These solutions have worked well for the batch ELT world over the past decade, where data teams are used to dealing with data that is only periodically refreshed and analytics queries that can take minutes or even hours to complete.

The world, however, is moving from batch to real-time, and data transformations are no exception.

Both data freshness and query latency requirements are becoming more and more strict, with modern data applications and operational analytics necessitating fresh data that never gets stale. With the speed and scale at which new data is constantly being generated in today’s real-time world, analytics based on data that is days, hours, or even minutes old may no longer be useful. Comprehensive analytics require extremely robust data transformations, which are challenging and expensive to make real-time when your data resides in technologies not optimized for real-time analytics.

Introducing dbt Core + Rockset

Back in July, we introduced our dbt-Rockset adapter for the first time, which brought real-time analytics to dbt, an immensely popular open-source data transformation tool that lets teams quickly and collaboratively deploy analytics code to ship higher quality data sets. Using the adapter, you could load data into Rockset and create collections by writing SQL SELECT statements in dbt. These collections could then be built on top of one another to support highly complex data transformations with many dependency edges.

Today, we’re excited to announce the first major update to our dbt-Rockset adapter, which now supports all four core dbt materializations: view, ephemeral, table, and incremental.

With this beta release, you can now perform all of the most popular workflows used in dbt for performing real-time data transformations on Rockset. This comes on the heels of our latest product releases around more accessible and affordable real-time analytics with Rollups on Streaming Data and Rockset Views.

Real-Time Streaming ELT Using dbt + Rockset

As data is ingested into Rockset, we will automatically index it using Rockset’s Converged Index™ technology, perform any write-time data transformations you define, and then make that data queryable within seconds. Then, when you execute queries on that data, we will leverage those indexes to complete any read-time data transformations you define using dbt with sub-second latency.

Let’s walk through an example workflow for setting up real-time streaming ELT using dbt + Rockset:

Write-Time Data Transformations Using Rollups and Field Mappings

Rockset can easily extract and load semi-structured data from multiple sources in real-time. For high velocity data, most commonly coming from data streams, you can roll it up at write-time. For instance, let’s say you have streaming data coming in from Kafka or Kinesis. You would create a Rockset collection for each data stream, and then set up SQL-Based Rollups to perform transformations and aggregations on the data as it is written into Rockset. This can be helpful when you want to reduce the size of large scale data streams, deduplicate data, or partition your data.
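
As a rough sketch of what such a rollup might look like, the query below collapses a hypothetical page-view stream into one document per page per minute. The field names (`event_ts`, `page`, `view_count`) are made up for illustration, and the query assumes that Rockset ingest-time SQL reads incoming documents from `_input`; the exact functions and syntax should be checked against the Rockset documentation.

```sql
-- Hypothetical rollup query, applied as documents are written.
-- event_ts and page are assumed fields on the incoming stream.
SELECT
    DATE_TRUNC('MINUTE', PARSE_TIMESTAMP_ISO8601(event_ts)) AS _event_time,
    page,
    COUNT(*) AS view_count
FROM _input
GROUP BY _event_time, page
```

Aggregating at write-time like this trades away per-event detail in exchange for a much smaller collection.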

Collections can also be created from other data sources including data lakes (e.g. S3 or GCS), NoSQL databases (e.g. DynamoDB or MongoDB), and relational databases (e.g. PostgreSQL or MySQL). You can then use Rockset’s SQL-Based Field Mappings to transform the data using SQL statements as it is written into Rockset.

Read-Time Data Transformations Using Rockset Views

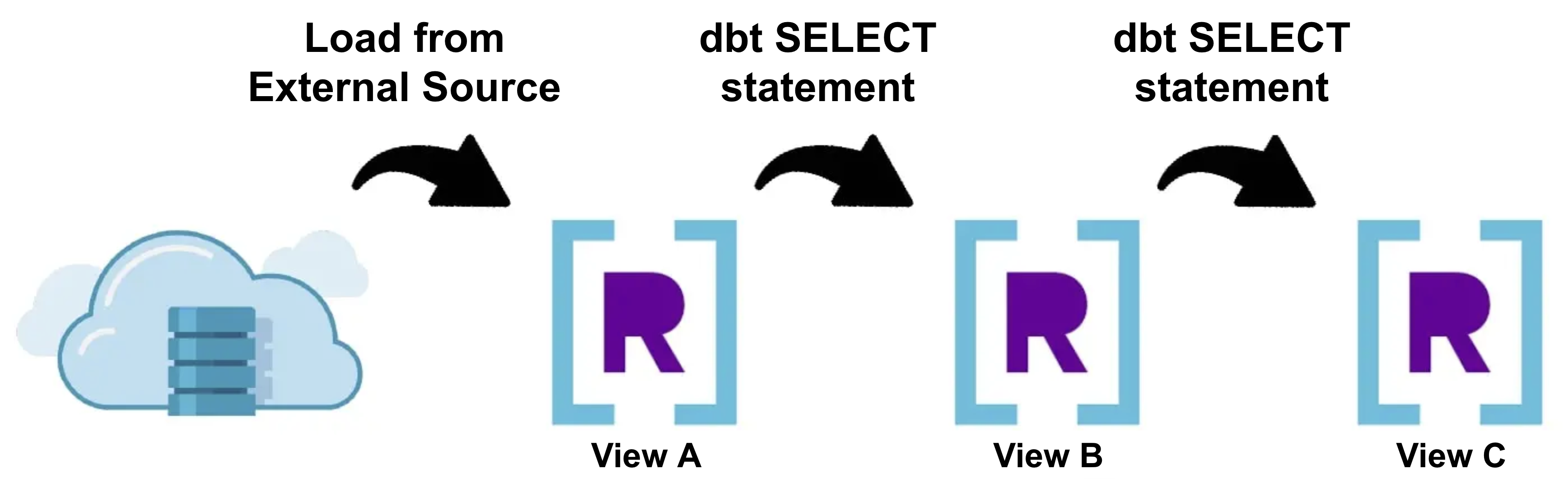

There is only so much complexity you can codify into your data transformations at write-time, so the next thing you’ll want to try is using the adapter to set up data transformations as SQL statements in dbt using the View Materialization, which are performed at read-time.

Create a dbt model using SQL statements for each transformation you want to perform on your data. When you execute dbt run, dbt will automatically create a Rockset View for each dbt model, which will perform all the data transformations when queries are executed.
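
For illustration, a view model could look something like the sketch below. The model name, the upstream `page_views` model, and its columns are all hypothetical; only `config(materialized='view')` and `ref()` are standard dbt constructs.

```sql
-- models/page_views_by_hour.sql (hypothetical model name)
-- Materialized as a view, so the aggregation runs at query time.
{{ config(materialized='view') }}

SELECT
    DATE_TRUNC('HOUR', _event_time) AS view_hour,
    page,
    SUM(view_count) AS view_count
FROM {{ ref('page_views') }}  -- assumed upstream dbt model
GROUP BY 1, 2
```

Because nothing is persisted, queries against this view always read whatever is currently in the underlying collection.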

If you’re able to fit all of your transformations into the steps above and queries complete within your latency requirements, then you have achieved the gold standard of real-time data transformations: Real-Time Streaming ELT.

That is, your data will be automatically kept up-to-date in real-time, and your queries will always reflect the most up-to-date source data. There is no need for periodic batch updates to “refresh” your data. In dbt, this means that you will not need to execute dbt run again after the initial setup unless you want to make changes to the actual data transformation logic (e.g. adding or updating dbt models).

Persistent Materializations Using dbt + Rockset

If using only write-time transformations and views is not enough to meet your application’s latency requirements, or your data transformations become too complex, you can persist them as Rockset collections. Keep in mind that Rockset also requires queries to complete in under 2 minutes to cater to real-time use cases, which may affect you if your read-time transformations are too involved. While this requires a batch ELT workflow, since you would need to manually execute dbt run each time you want to refresh your transformed data, you can use micro-batching to run dbt extremely frequently and keep your transformed data up-to-date in near real-time.

The most important advantages of persistent materializations are that they are both faster to query and better at handling query concurrency, as they are materialized as collections in Rockset. Since the bulk of the data transformations have already been performed ahead of time, your queries will complete significantly faster because less work remains to be done at read-time.

There are two persistent materializations available in dbt: incremental and table.

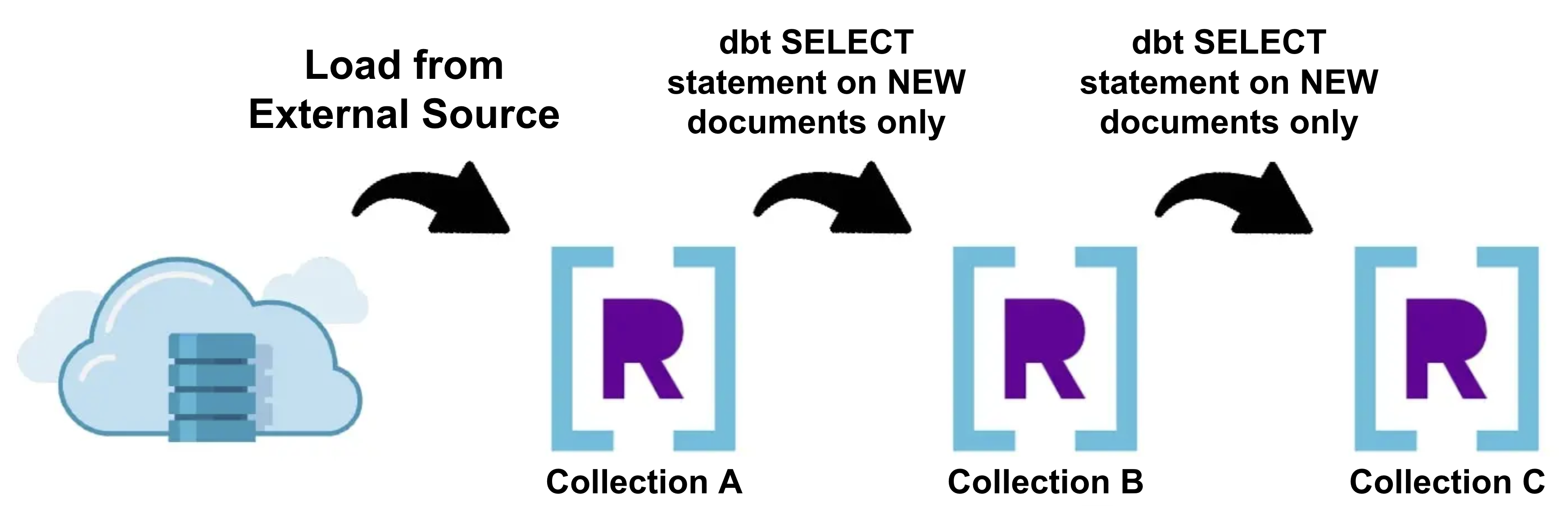

Materializing dbt Incremental Models in Rockset

Incremental Models are an advanced concept in dbt that allow you to insert or update only the documents in a Rockset collection that have changed since the last time dbt was run. This can significantly reduce the build time, since we only need to perform transformations on the new data that was just generated, rather than dropping, recreating, and performing transformations on the entirety of the data.

Depending on the complexity of your data transformations, incremental materializations may not always be a viable option to meet your transformation requirements. Incremental materializations are usually best suited for event or time-series data streamed directly into Rockset. To tell dbt which documents it should perform transformations on during an incremental run, simply provide SQL that filters for these documents using the is_incremental() macro in your dbt code. You can learn more about configuring incremental models in the dbt documentation.
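
A minimal sketch of such a filter, following the standard dbt pattern, is shown below. The upstream `raw_events` model and its columns are hypothetical, and the dbt-Rockset adapter may expect additional configuration beyond what is shown, so treat this as an outline rather than a tested model.

```sql
-- models/recent_events.sql (hypothetical model name)
{{ config(materialized='incremental') }}

SELECT
    event_ts,
    user_id,
    event_type
FROM {{ ref('raw_events') }}  -- assumed upstream dbt model
{% if is_incremental() %}
-- On incremental runs, only pick up documents newer than
-- anything already present in the target collection.
WHERE event_ts > (SELECT MAX(event_ts) FROM {{ this }})
{% endif %}
```

On the first run the `WHERE` clause is omitted and the whole source is processed; on later runs `{{ this }}` resolves to the existing target, so only newer documents are transformed.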

Materializing dbt Table Models in Rockset

Table Models in dbt are transformations that drop and recreate entire Rockset collections with each execution of dbt run in order to update that collection’s transformed data with the most up-to-date source data. This is the simplest way to persist transformed data in Rockset, and results in much faster queries since the transformations are completed prior to query time.
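
As a sketch, a table model differs from the other materializations only in its config line. The `raw_events` model and its columns below are hypothetical names used for illustration.

```sql
-- models/user_activity.sql (hypothetical model name)
-- Rebuilt from scratch on every dbt run.
{{ config(materialized='table') }}

SELECT
    user_id,
    COUNT(*) AS total_events,
    MAX(event_ts) AS last_seen_at
FROM {{ ref('raw_events') }}  -- assumed upstream dbt model
GROUP BY user_id
```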

On the other hand, the biggest drawback to using table models is that they can be slow to complete, since Rockset is not optimized for creating entirely new collections from scratch on the fly. This may cause your data latency to increase significantly, as it may take several minutes for Rockset to provision resources for a new collection and then populate it with transformed data.

Putting It All Together

Keep in mind that you can always use both table models and incremental models in conjunction with Rockset views to build the stack that best meets the unique requirements of your data transformations. For example, you might use SQL-based rollups to first transform your streaming data at write-time, transform and persist it into Rockset collections via incremental or table models, and then execute a sequence of view models at read-time to transform your data again.

Beta Partner Program

The dbt-Rockset adapter is fully open-sourced, and we would love your input and feedback! If you’re interested in getting in touch with us, you can sign up here to join our beta partner program for the dbt-Rockset adapter, or find us on the dbt Slack community in the #db-rockset channel. We’re also hosting office hours on October 26th at 10am PST, where we’ll provide a live demo of real-time transformations and answer any technical questions. Hope you can join us for the event!