There are a number of change data capture techniques available when using a MySQL or Postgres database. Some of these techniques overlap and are very similar regardless of which database technology you’re using; others are different. Ultimately, we need a way to specify and detect what has changed, and a method of sending those changes to a target system.

This post assumes you’re familiar with change data capture; if not, read the previous introductory post here, “Change Data Capture: What It Is and How To Use It.” In this post, we’re going to dive deeper into the different ways you can implement CDC if you have either a MySQL or a Postgres database, and compare the approaches.

CDC with Update Timestamps and Kafka

One of the simplest ways to implement a CDC solution in both MySQL and Postgres is by using update timestamps. Any time a record is inserted or modified, its update timestamp is set to the current date and time, which tells you when that record was last modified.

We can then either build bespoke solutions that poll the database for any new records and write them to a target system or to a CSV file to be processed later, or we can use a pre-built solution like Kafka and Kafka Connect. Kafka Connect has pre-defined connectors that poll tables and publish rows to a queue when their update timestamp is greater than that of the last processed record. Kafka Connect also has connectors for target systems that can then write these records for you.
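For the bespoke route, the heart of a poller is a single query that selects the rows changed since its last run. A minimal MySQL sketch, assuming a `user` table with an `update_timestamp` column like the one defined later in this post; the `@last_processed` value and the output path are hypothetical placeholders that a real poller would store and generate itself:

```sql
-- High-water mark saved by the poller after its previous run (hypothetical value).
SET @last_processed = '2021-06-01 12:00:00';

-- Export every row changed since then to a CSV file for later processing.
-- INTO OUTFILE writes on the database server, needs the FILE privilege, must
-- target a directory allowed by secure_file_priv, and fails if the file
-- already exists, so each run needs a new (here hypothetical) file name.
SELECT id, firstname, lastname, email, update_timestamp
FROM user
WHERE update_timestamp > @last_processed
ORDER BY update_timestamp
INTO OUTFILE '/var/lib/mysql-files/user_changes_20210601.csv'
FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'
LINES TERMINATED BY '\n';
```

After a successful export, the poller would record the largest `update_timestamp` it wrote as the next `@last_processed`.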

Fetching the Updates and Publishing Them to the Target Database Using Kafka

Kafka is an event streaming platform that follows a pub-sub model. Publishers send data to a queue, and one or more consumers can then read messages from that queue. If we wanted to capture changes from a MySQL or Postgres database and send them to a data warehouse or analytics platform, we would first need to set up a publisher to send the changes, and then a consumer that reads the changes and applies them to our target system.

To simplify this process, we can use Kafka Connect. Kafka Connect works as a middleman, with pre-built connectors to both publish and consume data that can be set up with just a config file.

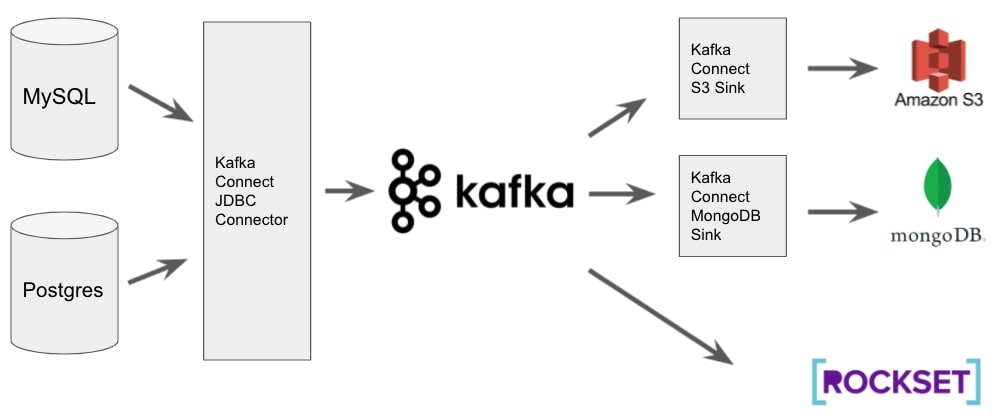

Fig 1. CDC architecture with MySQL, Postgres and Kafka

As shown in Fig 1, we can configure a JDBC connector for Kafka Connect that specifies which table we want to consume, how to detect changes (in our case, by using the update timestamp) and which topic (queue) to publish them to. Using Kafka Connect for this means all of the logic required to detect which rows have changed is done for us. We only need to ensure that the update timestamp field is kept up to date (covered in the next section), and Kafka Connect will take care of:

- Keeping track of the maximum update timestamp of the latest record it has published

- Polling the database for any records with a newer update timestamp

- Writing the data to a queue to be consumed downstream
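Under the hood, this bookkeeping amounts to an incremental query against a stored offset. The MySQL sketch below approximates the logic of the JDBC source connector’s `timestamp+incrementing` mode (configured through its `mode`, `timestamp.column.name` and `incrementing.column.name` settings); it is an illustration, not the exact SQL the connector generates. The offset values are hypothetical, and the index is an assumption that keeps each poll from scanning the whole table:

```sql
-- Composite index matching the poll's filter and sort order (assumed, not required).
CREATE INDEX idx_user_ts_id ON user (update_timestamp, id);

-- Offset remembered from the last row that was published (hypothetical values).
SET @last_ts = '2021-06-01 12:00:00';
SET @last_id = 42;

-- Rows with a newer timestamp, plus rows that share the last timestamp but
-- have a higher id, so ties are picked up without re-publishing the last row.
SELECT id, firstname, lastname, email, update_timestamp
FROM user
WHERE update_timestamp > @last_ts
   OR (update_timestamp = @last_ts AND id > @last_id)
ORDER BY update_timestamp, id;
```

Comparing on the timestamp alone with a strict `>` can miss rows that share a timestamp with the last published row; the `id` tie-breaker is one common way to reduce that risk.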

We can then either configure “sinks”, which define where to output the data, or have the target system talk to Kafka directly. Again, Kafka Connect has many pre-defined sink connectors that we can simply configure to output the data to many different target systems. Services like Rockset can talk to Kafka directly and therefore don’t require a sink to be configured.

Again, using Kafka Connect means that, out of the box, not only can we write data to many different locations with very little coding required, but we also get Kafka’s throughput and fault tolerance, which will help us scale our solution in the future.

For this to work, we need to ensure that the tables we want to capture have update timestamp fields and that these fields are always updated whenever the record is updated. In the next section, we cover how to implement this in both MySQL and Postgres.
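Before setting anything up, it can help to check which tables are still missing the column. A minimal MySQL sketch, assuming every captured table uses the column name `update_timestamp` (on Postgres, `DATABASE()` would be replaced with `current_schema()`):

```sql
-- List base tables in the current schema that have no update_timestamp column.
-- The column name is an assumed naming convention, not a requirement.
SELECT t.table_name
FROM information_schema.tables AS t
WHERE t.table_schema = DATABASE()
  AND t.table_type = 'BASE TABLE'
  AND NOT EXISTS (
      SELECT 1
      FROM information_schema.columns AS c
      WHERE c.table_schema = t.table_schema
        AND c.table_name = t.table_name
        AND c.column_name = 'update_timestamp'
  );
```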

Using Triggers for Update Timestamps (MySQL & Postgres)

MySQL and Postgres both support triggers. Triggers allow you to perform actions in the database either immediately before or after another action happens. For this example, whenever an update command is detected on a row in our source table, we want a trigger to set the update timestamp on the affected row to the current date and time.

We only want the trigger to run on an update command, because in both MySQL and Postgres you can set the update timestamp column to automatically use the current date and time when a new record is inserted. The table definition in MySQL would look as follows (the Postgres syntax would be very similar). Note the DEFAULT CURRENT_TIMESTAMP keywords when declaring the update_timestamp column, which ensure that when a record is inserted, the current date and time are used by default.

```sql
CREATE TABLE user
(
    id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,
    firstname VARCHAR(30) NOT NULL,
    lastname VARCHAR(30) NOT NULL,
    email VARCHAR(50),
    update_timestamp TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);
```

This means our update_timestamp column is set to the current date and time for any new records. Now we need to define a trigger that will update this field whenever a record is updated in the user table. The MySQL implementation is simple and looks as follows.

```sql
DELIMITER $$

CREATE TRIGGER user_update_timestamp
BEFORE UPDATE ON user
FOR EACH ROW BEGIN
    SET NEW.update_timestamp = CURRENT_TIMESTAMP;
END$$

DELIMITER ;
```

For Postgres, you first have to define a function that sets the update_timestamp field to the current timestamp, and then the trigger executes that function. This is a subtle difference, but it adds slightly more overhead, as you now have both a function and a trigger to maintain in the Postgres database.
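A minimal Postgres sketch of the same setup, assuming a table equivalent to the MySQL one above. Because `user` is a reserved word in Postgres, the table name has to be quoted; the function name `set_update_timestamp` is hypothetical. `EXECUTE FUNCTION` requires Postgres 11 or later (older versions use `EXECUTE PROCEDURE`).

```sql
-- "user" is reserved in Postgres, so the name must be double-quoted.
CREATE TABLE "user"
(
    id SERIAL PRIMARY KEY,
    firstname VARCHAR(30) NOT NULL,
    lastname VARCHAR(30) NOT NULL,
    email VARCHAR(50),
    update_timestamp TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

-- Trigger function: stamps the row being updated with the current time.
CREATE OR REPLACE FUNCTION set_update_timestamp()
RETURNS TRIGGER AS $$
BEGIN
    NEW.update_timestamp := CURRENT_TIMESTAMP;
    RETURN NEW;  -- a BEFORE trigger must return the (modified) row
END;
$$ LANGUAGE plpgsql;

-- Run the function before every UPDATE, once per affected row (Postgres 11+ syntax).
CREATE TRIGGER user_update_timestamp
BEFORE UPDATE ON "user"
FOR EACH ROW
EXECUTE FUNCTION set_update_timestamp();
```

Because the function only references `NEW.update_timestamp`, the same function could be reused by triggers on any other table that has a column with that name.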

Using Auto-Update Syntax in MySQL

If you’re using MySQL, there is another, much simpler way of implementing an update timestamp. When defining the table in MySQL, you can specify what value to set a column to when the record is updated, which in our case is the current timestamp.

```sql
CREATE TABLE user
(
    id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,
    firstname VARCHAR(30) NOT NULL,
    lastname VARCHAR(30) NOT NULL,
    email VARCHAR(50),
    update_timestamp TIMESTAMP DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP
);
```

The benefit of this is that we no longer have to maintain the trigger code (or the function code in the case of Postgres).
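If the `user` table already exists with the trigger-based setup from the previous section, a minimal MySQL sketch of switching it over might look like this; the row id and email value in the `UPDATE` are hypothetical:

```sql
-- Remove the trigger; the column definition will take over its job.
DROP TRIGGER IF EXISTS user_update_timestamp;

-- Redefine the existing column so MySQL maintains it on every update.
ALTER TABLE user
    MODIFY COLUMN update_timestamp TIMESTAMP
    DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP;

-- Changing any column value now refreshes update_timestamp automatically.
UPDATE user SET email = 'new.address@example.com' WHERE id = 1;

-- Note: an UPDATE that sets every column to its current value leaves the row
-- unchanged, so MySQL does not refresh update_timestamp in that case.
```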

CDC with Debezium, Kafka and Amazon DMS

Another option for implementing a CDC solution is to use the native database logs that both MySQL and Postgres can produce when configured to do so. These logs record every operation executed against the database, which can then be used to replicate those changes in a target system.

The advantage of using database logs is, firstly, that you don’t need to write any code or add any extra logic to your tables as you do with update timestamps. Second, it also captures deletes, something that isn’t possible with update timestamps, because a deleted row leaves no timestamp behind to poll.

In MySQL you do this by turning on the binlog, and in Postgres you configure the Write-Ahead Log (WAL) for replication. Once the database is configured to write these logs, you can choose a CDC system to help capture the changes. Two popular options are Debezium and Amazon Database Migration Service (DMS). Both of these systems use the binlog for MySQL and the WAL for Postgres.
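A quick way to confirm the logs are configured is to query the relevant server settings. A minimal sketch for a self-managed server: Debezium, for example, expects `binlog_format = ROW` and `binlog_row_image = FULL` on MySQL and `wal_level = logical` on Postgres. Managed services such as Amazon RDS set these through parameter groups rather than `ALTER SYSTEM`.

```sql
-- MySQL: check that binary logging is on and writes full row-level events.
SHOW VARIABLES LIKE 'log_bin';           -- expect ON
SHOW VARIABLES LIKE 'binlog_format';     -- expect ROW
SHOW VARIABLES LIKE 'binlog_row_image';  -- expect FULL

-- Postgres: logical decoding requires wal_level = logical.
SHOW wal_level;
-- Needs superuser rights; the new value only takes effect after a server restart.
ALTER SYSTEM SET wal_level = logical;
```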

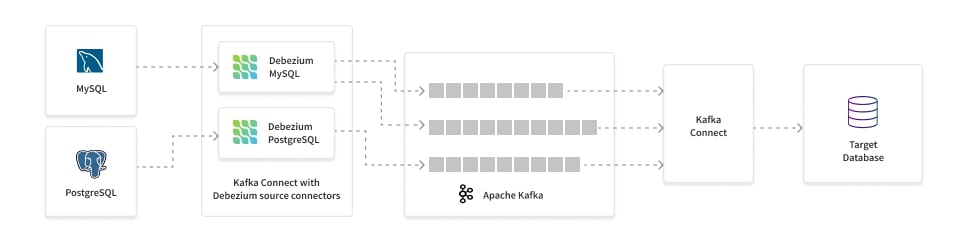

Debezium works natively with Kafka. It picks up the related adjustments, converts them right into a JSON object that incorporates a payload describing what has modified and the schema of the desk and places it on a Kafka subject. This payload incorporates all of the context required to use these adjustments to our goal system, we simply want to put in writing a shopper or use a Kafka Join sink to put in writing the info. As Debezium makes use of Kafka, we get all the advantages of Kafka reminiscent of fault tolerance and scalability.

Fig 2. Debezium CDC structure for MySQL and Postgres
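Each Debezium change event carries an `op` field (`c` for create, `u` for update, `d` for delete, `r` for a snapshot read) alongside `before` and `after` images of the row. A consumer writing to a relational target might translate those events into statements like the ones below. This is a sketch assuming a Postgres target; the `user_replica` table and the row values are hypothetical.

```sql
-- Hypothetical replica table on the target; the primary key enables upserts.
CREATE TABLE IF NOT EXISTS user_replica
(
    id INTEGER PRIMARY KEY,
    firstname VARCHAR(30) NOT NULL,
    lastname VARCHAR(30) NOT NULL,
    email VARCHAR(50),
    update_timestamp TIMESTAMP
);

-- op = 'c', 'u' or 'r': upsert the event's "after" image of the row.
INSERT INTO user_replica (id, firstname, lastname, email, update_timestamp)
VALUES (1, 'Ada', 'Lovelace', 'ada@example.com', '2021-06-01 12:00:00')
ON CONFLICT (id) DO UPDATE
SET firstname = EXCLUDED.firstname,
    lastname = EXCLUDED.lastname,
    email = EXCLUDED.email,
    update_timestamp = EXCLUDED.update_timestamp;

-- op = 'd': delete using the key from the event's "before" image.
DELETE FROM user_replica WHERE id = 1;
```

Using an upsert rather than a plain `INSERT` keeps the consumer safe to re-run if the same event is delivered more than once.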

AWS DMS works in a similar way to Debezium. It supports many different source and target systems and integrates natively with all of the popular AWS data services, including Kinesis and Redshift.

The main benefit of using DMS over Debezium is that it’s effectively a “serverless” offering. With Debezium, if you want the flexibility and fault tolerance of Kafka, you have the overhead of deploying a Kafka cluster. DMS, as its name states, is a service. You configure the source and target endpoints, and AWS takes care of the infrastructure needed to monitor the database logs and copy the data to the target.

However, this serverless approach does have its drawbacks, mainly in its feature set.

Which Option for CDC?

When weighing up which pattern to follow, it’s important to assess your specific use case. Using update timestamps works when you only want to capture inserts and updates. If you already have a Kafka cluster, you can get up and running with this very quickly, especially if most tables already include some kind of update timestamp.

If you’d rather go with the database log approach, perhaps because you want exact replication, then you should look to use a service like Debezium or AWS DMS. I’d suggest first checking which system supports the source and target systems you require. If you have more advanced use cases, such as masking sensitive data or re-routing data to different queues based on its content, then Debezium is probably the best choice. If you’re just looking for simple replication with little overhead, then DMS will work for you, provided it supports your source and target systems.

If you have real-time analytics needs, you may consider using a target database like Rockset as an analytics serving layer. Rockset integrates with MySQL and Postgres, using AWS DMS, to ingest CDC streams and index the data for sub-second analytics at scale. Rockset can also read CDC streams from NoSQL databases, such as MongoDB and Amazon DynamoDB.

The right answer depends on your specific use case, and there are many more options than have been discussed here; these are just some of the more popular ways to implement a modern CDC system.

Lewis Gavin has been a data engineer for 5 years and has also been blogging about technology within the Data community for 4 years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich, enhancing simulator software for military helicopters. He then went on to work for Capgemini, where he helped the UK government move into the world of Big Data. He is currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he is helping to shape their data warehousing and reporting capability from the ground up.