At Dimona, a leading Latin American apparel company founded 55 years ago in Brazil, our business is t-shirts. We design them, manufacture them, and sell them to consumers online and through our 5 retail stores in Rio de Janeiro. We also supply B2B companies for their customers in Brazil and the United States.

We’ve come a long way since 2011, when I joined Dimona to launch our first website. Today, our API enables our B2B customers to upload custom designs and automatically route orders from their e-commerce sites to us. We then make the shirts on demand and ship them in as little as 24 hours.

Both APIs and fast-turnaround drop shipping were major innovations for the Latin American apparel industry, and they enabled us to grow very quickly. Today, we have more than 80,000 B2B customers supplied by our factories in Rio de Janeiro and South Florida. We can dropship on behalf of our B2B customers anywhere in Brazil and the U.S. and help them avoid the hassle and cost of import taxes.

Our business is thriving. However, we almost didn’t get here because of growing pains with our data technology.

Off-the-Shelf ERP Systems Too Limited

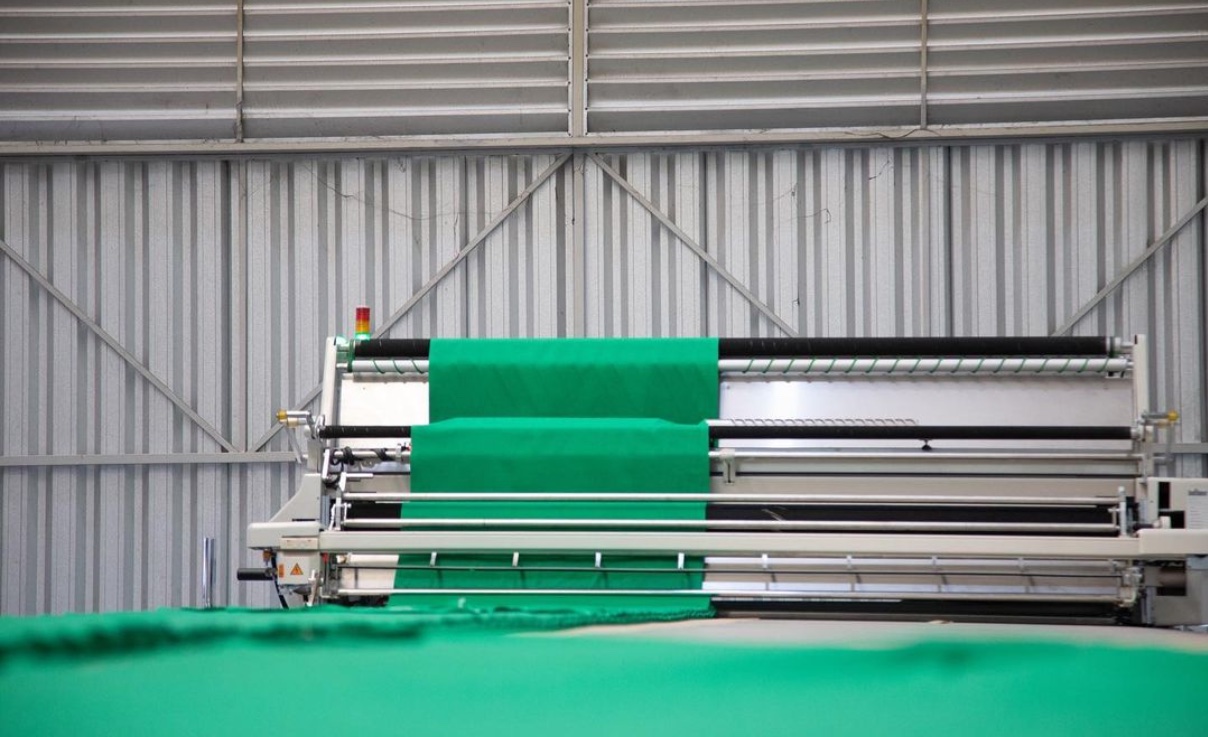

Because of our vertically integrated business model, our supply chain is longer than that of most clothing makers. We need to track raw fabric as it arrives in our factories, the t-shirts as they move through the cutting, sewing and printing stages, and the finished products as they travel from factory to warehouse to retail store or mail carrier before finally reaching customers.

Not only is our supply chain longer than usual, so are the size and diversity of our inventory. We have up to one million t-shirts in stock, depending on the season. And because of the many custom designs, colors, fabrics and sizes that we offer, our number of unique items is also higher than that of other apparel makers.

We tried many off-the-shelf ERP systems to manage our inventory end-to-end, but none proved up to the task. In particular, limitations in these systems meant we could only store the end-of-day inventory counts by location, rather than a full record of each individual item as it traveled through our supply chain.

Tracking only inventory counts minimized the amount of data we had to store. However, it also meant that when we tried to compare these counts with the inventory movements we did have on record, mysterious errors emerged that we couldn’t reconcile. That made it hard for us to trust our own inventory data.

MySQL Crumbles Under Analytic Load

In 2019, we deployed our own custom-built inventory management system to our main warehouse in Rio de Janeiro. Having had experience with AWS, we built the system around Amazon Aurora, AWS’s version of MySQL-as-a-service. Rather than just record end-of-day inventory totals, we recorded every inventory movement using three pieces of data: the item ID, its location ID, and the quantity of that item at that location.

In other words, we created a ledger that tracked every t-shirt as it moved from raw fabric to finished goods and into the hands of a customer. Every single barcode scan was recorded, whether it was a pallet of t-shirts shipped from the warehouse to a store, or a single shirt moved from one store shelf to another.
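To make the ledger idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Dimona's actual schema: the `Movement` type, the `transfer` and `stock_by_location` helpers, and the item and location names are all hypothetical, and the sketch assumes each entry stores a signed quantity change so that current stock can be derived by summing entries.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Movement:
    """One ledger entry (hypothetical shape): item, location, signed quantity."""
    item_id: str
    location_id: str
    quantity: int  # assumption: positive = units arriving, negative = units leaving


def transfer(item_id, source, destination, quantity):
    """Record a move between two locations as a pair of offsetting entries."""
    return [
        Movement(item_id, source, -quantity),
        Movement(item_id, destination, quantity),
    ]


def stock_by_location(ledger):
    """Derive current stock from the movements alone, with no stored counts."""
    totals = defaultdict(int)
    for movement in ledger:
        totals[(movement.item_id, movement.location_id)] += movement.quantity
    # Drop locations whose balance has returned to zero.
    return {key: qty for key, qty in totals.items() if qty != 0}


# Hypothetical scans: 100 shirts received, 40 sent to a store, 1 sold.
ledger = [Movement("TSHIRT-BLK-M", "warehouse-rio", 100)]
ledger += transfer("TSHIRT-BLK-M", "warehouse-rio", "store-ipanema", 40)
ledger += transfer("TSHIRT-BLK-M", "store-ipanema", "customer", 1)

stock = stock_by_location(ledger)
```

Because totals are computed from the entries themselves, there is no separate count to drift out of sync. The trade-off is that every total becomes an aggregation over the full movement history.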

This created an explosion in the amount of data we were collecting in real time. Suddenly, we were uploading 300,000 transactions to Aurora every two weeks. But it also enabled us to query our data to find the exact location of a particular t-shirt at any given time, as well as view high-level inventory totals and trends.

At first, Aurora was able to handle the task of both storing and aggregating the data. But as we brought more warehouses and stores online, the database started bogging down on the analytics side. Queries that used to take tens of seconds started taking more than a minute or timing out altogether. After a reboot, the system would be fine for a short while before becoming sluggish and unresponsive again.

Pandemic-Led Expansion

Compounding the issue was COVID-19’s arrival in early 2020. Suddenly, we had many international customers clamoring for us to offer in other markets the same drop shipment services we provided in Brazil. In mid-2020, I moved to Florida and opened our U.S. factory and warehouse.

By then, our inventory management system had slowed to the point of being unusable. And our bills for doing even simple aggregations in Aurora were through the roof.

We were faced with several options. Going back to an error-ridden inventory-count system was out of the question. Another option was to continue recording all inventory movements but use them only to double-check our separately tracked inventory counts, rather than generating our inventory totals from the movement records themselves. That would avoid overtaxing the Aurora database’s meager analytical capabilities. But it would force us to maintain two separate datasets that would have to be constantly compared against each other, with no guarantee that doing so would improve accuracy.

We needed a better technology solution, one that could store massive data sets and query them in fast, automated ways as well as make quick, simple data aggregations. And we needed it soon.

Finding Our Solution

I looked at several disparate options. I considered a blockchain-based system for our ledger before quickly dismissing it. Within AWS, I looked at DynamoDB as well as another ledger database offered by Amazon. We couldn’t get DynamoDB to ingest our data, while the ledger database was too raw and would have required too much DIY effort to make work. I also looked at Elasticsearch and came to the same conclusion: too much custom engineering effort to deploy.

I learned about Rockset from a company that was also looking to replace query-challenged Aurora with a faster managed cloud alternative.

It took us just two months to test and validate Rockset before deploying it in September 2021. We continued to ingest all of our inventory transactions into Aurora. But using Amazon’s Database Migration Service (DMS), we now continuously replicate data from Aurora into Rockset, which does all of the data processing, aggregations and calculations.

“Where Rockset really shines is its ability to deliver precise, accurate views of our inventory in near-real time.”

– Igor Blumberg, CTO, Dimona

This connection was extremely easy to set up thanks to Rockset’s integration with MySQL. And it’s fast: DMS replicates updates from one million+ Aurora documents to Rockset every minute, and those updates become available to users instantly.

Where Rockset really shines is its ability to deliver precise, accurate views of our inventory in near-real time. We use Rockset’s Query Lambda capability to pre-create named, parameterized SQL queries that can be executed from a REST endpoint. This avoids having to use application code to execute SQL queries, which makes the queries easier to manage and to track for performance, as well as more secure.
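As a rough illustration of calling a named, parameterized query over REST, the Python sketch below builds (but does not send) such a request. The endpoint path, the `ApiKey` authorization header, and the `parameters` body shape follow Rockset's REST API as I understand it and should be treated as assumptions to check against the official reference. The API server host, workspace `inventory`, Query Lambda `stock_by_item`, tag `latest`, and the `item_id` parameter are all hypothetical.

```python
import json
import urllib.request

# Assumption: the regional API server host; yours may differ.
API_SERVER = "https://api.usw2a1.rockset.com"


def build_query_lambda_request(api_key, workspace, name, tag, parameters):
    """Build a POST request that executes a tagged Query Lambda.

    `parameters` maps each parameter name to a (type, value) pair.
    """
    url = f"{API_SERVER}/v1/orgs/self/ws/{workspace}/lambdas/{name}/tags/{tag}"
    body = {
        "parameters": [
            {"name": param, "type": kind, "value": str(value)}
            for param, (kind, value) in parameters.items()
        ]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"ApiKey {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical call: where is this item right now?
request = build_query_lambda_request(
    api_key="YOUR_API_KEY",
    workspace="inventory",
    name="stock_by_item",
    tag="latest",
    parameters={"item_id": ("string", "TSHIRT-BLK-M")},
)
# To execute it: urllib.request.urlopen(request), then parse the JSON response.
```

The SQL text lives on the server under a name and tag, so the caller sends only parameter values. That keeps query logic out of application code and means callers cannot alter the SQL itself.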

Using Rockset’s Query Lambdas and APIs also shrank the amount of data we needed to process. This accelerates the speed at which we can deliver answers to customers browsing our website, and to store employees and corporate staff searching our inventory management system internally. Rockset also completely eliminated database timeouts.

Rockset also gives us full confidence in the ongoing accuracy of our inventory management system without having to constantly double-check against daily inventory counts. And it enables us to track our supply chain in real time and predict potential spikes in demand and shortages.

Rockset has been in production for us for more than half a year. Though we aren’t yet leveraging Rockset’s capabilities in complex analytics or deep data explorations, we’re more than satisfied with the near real-time, highly accurate views of our inventory we have now, something that MySQL couldn’t deliver.

In the future, we’re thinking of monitoring DMS to guard against hiccups or replication errors, though there have been none so far. We’re also considering using Rockset’s APIs to create objects as we ingest inventory transactions.

Rockset has had a huge effect on our business. Its speed and accuracy give us unprecedented visibility into our inventory and supply chain, which is mission critical for us.

Rockset helped us thrive during Black Friday and Christmas 2021. For the first time, I was able to get some sleep during the holiday season!

“Rockset gives us full confidence in the ongoing accuracy of our inventory management system without having to constantly double-check against daily inventory counts. And it enables us to track our supply chain in real time and predict potential spikes in demand and shortages.”

– Igor Blumberg, CTO, Dimona

Rockset is the real-time analytics database in the cloud for modern data teams. Get faster analytics on fresher data, at lower costs, by exploiting indexing over brute-force scanning.