This clever healthcare system makes use of a small language mannequin MiniLM-L6-V2 to raised perceive and analyze medical data, equivalent to signs or therapy directions. The mannequin turns textual content into “embeddings,” or significant numbers, that seize the context of the phrases. By utilizing these embeddings, the system can examine signs successfully and make sensible suggestions for situations or remedies that match the consumer’s wants. This helps enhance the accuracy of health-related ideas and permits customers to find related care choices.

Studying Goals

- Perceive how small language fashions generate embeddings to signify textual medical knowledge.

- Develop abilities in constructing a symptom-based suggestion system for healthcare functions.

- Study strategies for knowledge manipulation and evaluation utilizing libraries like Pandas and Scikit-learn.

- Achieve insights into embedding-based semantic similarity for situation matching.

- Handle challenges in health-related AI techniques like symptom ambiguity and knowledge sensitivity.

This text was revealed as part of the Knowledge Science Blogathon.

Understanding Small Language Fashions

Small Language Fashions (SLMs) are neural language fashions which might be designed to be computationally environment friendly, with fewer parameters and layers in comparison with bigger, extra resource-intensive fashions like BERT or GPT-3. SLMs intention to keep up a steadiness between light-weight structure and the flexibility to carry out particular duties successfully, equivalent to sentence similarity, sentiment evaluation, and embedding era, with out requiring intensive computing assets.

Traits of Small Language Fashions

- Diminished Parameters and Layers: SLMs typically have fewer parameters (tens of thousands and thousands in comparison with tons of of thousands and thousands or billions) and fewer layers e.g., 6 layers vs. 12 or extra in bigger fashions.

- Decrease Computational Price: They require much less reminiscence and processing energy, making them sooner and appropriate for edge gadgets or functions with restricted assets.

- Job-Particular Effectivity: Whereas SLMs might not seize as a lot context as bigger fashions, they’re typically fine-tuned for particular duties, balancing effectivity with efficiency for duties like textual content embeddings or doc classification.

Introduction to Sentence Transformers

Sentence Transformers are fashions that flip textual content into fixed-size “embeddings,” that are like summaries in vector type that seize the textual content’s which means. These embeddings make it quick and straightforward to check texts, serving to with duties like discovering related sentences, looking paperwork, grouping related gadgets, and classifying textual content. Because the embeddings are fast to compute, Sentence Transformers are nice for first go searches.

Utilizing All-MiniLM-L6-V2 in Healthcare

AllMiniLM-L6-v2 is a compact, pre-trained language mannequin designed for environment friendly textual content embedding duties. Developed as a part of the Sentence Transformers framework, it makes use of Microsoft’s MiniLM (Minimally Distilled Language Mannequin) structure identified for being light-weight and environment friendly in comparison with bigger transformer fashions.

Right here’s an outline of its options and capabilities:

- Structure and Layers: The mannequin consists of solely 6 transformer layers therefore the “L6” in its title, making it a lot smaller and sooner than massive fashions like BERT or GPT whereas nonetheless reaching top quality embeddings.

- Embedding High quality: Regardless of its small measurement, all-MiniLM-L6-v2 performs nicely for producing sentence embeddings, notably in semantic similarity and clustering duties. Model v2 improves efficiency on semantic duties like query answering, data retrieval, and textual content classification by means of fine-tuning.

all-MiniLM-L6-v2 is an instance of an SLM as a result of its light-weight design and specialised performance:

- Compact Design: It has 6 layers and 22 million parameters, considerably smaller than BERT-base (110 million parameters) or GPT-2 (117 million parameters), making it each reminiscence environment friendly and quick.

- Sentence Embeddings: Advantageous-tuned for duties like semantic search and clustering, it produces dense sentence embeddings and achieves a excessive performance-to-size ratio.

- Optimized for Semantic Understanding: MiniLM fashions, regardless of their smaller measurement, carry out nicely on sentence similarity and embedding-based functions, typically matching the standard of bigger fashions however with decrease computational demand.

Due to these elements, AllMiniLM-L6-v2 successfully captures the primary traits of an SLM: low parameter rely, task-specific optimization, and effectivity on resource-constrained gadgets. This steadiness makes it well-suited for functions needing compact but efficient language fashions.

Implementing the Mannequin in Code

Implementing the All-MiniLM-L6-V2 mannequin in code brings environment friendly symptom evaluation to healthcare functions. By producing embeddings, this mannequin allows fast, correct comparisons for symptom matching and analysis.

from sentence_transformers import SentenceTransformer

# 1. Load a pretrained Sentence Transformer mannequin

mannequin = SentenceTransformer("all-MiniLM-L6-v2")

# The sentences to encode

sentences = [

"The weather is lovely today.",

"It's so sunny outside!",

"He drove to the stadium.",

]

# 2. Calculate embeddings by calling mannequin.encode()

embeddings = mannequin.encode(sentences)

print(embeddings.form)

# [3, 384]

# 3. Calculate the embedding similarities

similarities = mannequin.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.6660, 0.1046],

# [0.6660, 1.0000, 0.1411],

# [0.1046, 0.1411, 1.0000]])Use Instances: Frequent functions of all-MiniLM-L6-v2 embrace:

- Semantic search, the place the mannequin encodes queries and paperwork for environment friendly similarity comparability. (e.g., in our healthcare NLP Challenge).

- Textual content classification and clustering, the place embeddings assist group related texts.

- Suggestion techniques, by figuring out related gadgets based mostly on embeddings

Constructing the Symptom-Based mostly Prognosis System

Constructing a symptom-based analysis system leverages embeddings to establish well being situations shortly and precisely. This setup interprets consumer signs into actionable insights, enhancing healthcare accessibility.

Importing Vital Libraries

!pip set up sentence-transformers

import pandas as pd

from sentence_transformers import SentenceTransformer, util

# Load the info

df = pd.read_csv('/kaggle/enter/disease-and-symptoms/Diseases_Symptoms.csv')

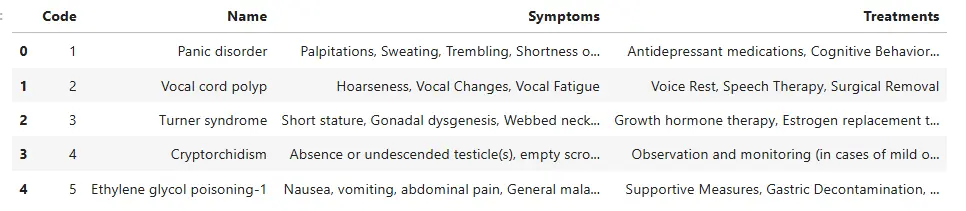

df.head()The code begins by importing the required libraries equivalent to pandas and sentence-transformers for producing our textual content embeddings. The dataset which incorporates illnesses and their related signs, is loaded right into a DataFrame df. The primary few entries of the dataset are displayed. The hyperlink to the dataset.

The columns within the dataset are:

- Code: Distinctive identifier for the situation.

- Title: The title of the medical situation.

- Signs: Frequent signs related to the situation.

- Therapies: Beneficial remedies or therapies for administration.

Initialize Sentence Transformer

# Initialize a Sentence Transformer mannequin to generate embeddings

mannequin = SentenceTransformer('all-MiniLM-L6-v2')

# Generate embeddings for every situation's signs

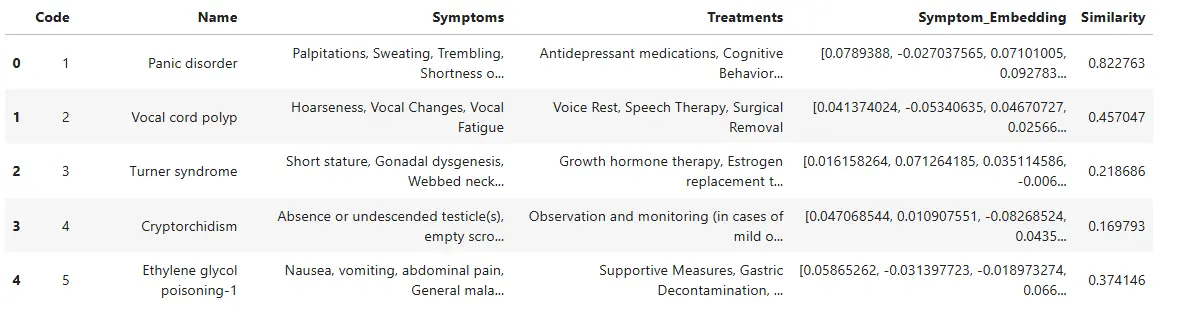

df['Symptom_Embedding'] = df['Symptoms'].apply(lambda x: mannequin.encode(x))Then initialize a Sentence Transformer mannequin ‘all-MiniLM-L6-v2’ this helps in changing the descriptions within the symptom column into vector embeddings. The following line of code includes making use of the mannequin to the ‘Signs’ column of the DataFrame asn storing the end in a brand new column ‘Symptom_Embedding’ that can retailer the embeddings for every illness’s signs.

# Operate to search out matching situation based mostly on enter signs

def find_condition_by_symptoms(input_symptoms):

# Generate embedding for the enter signs

input_embedding = mannequin.encode(input_symptoms)

# Calculate similarity scores with every situation

df['Similarity'] = df['Symptom_Embedding'].apply(lambda x: util.cos_sim(input_embedding, x).merchandise())

# Discover probably the most related situation

best_match = df.loc[df['Similarity'].idxmax()]

return best_match['Name'], best_match['Treatments']Defining Operate

Subsequent, we outline a operate find_condition_by_symptoms() which takes the consumer’s enter signs as an argument. It generates an embedding for the enter signs and computes similarity scores between this embedding and the embeddings of the illnesses within the dataset utilizing cosine similarity. The mannequin identifies the illness with the best similarity rating as the perfect match and shops it within the ‘Similarity’ column of the info body. Utilizing .idxmax(), it finds the index of this finest match and assigns it to the variable best_match, then returns the corresponding ‘Title’ and ‘Therapies’ values.

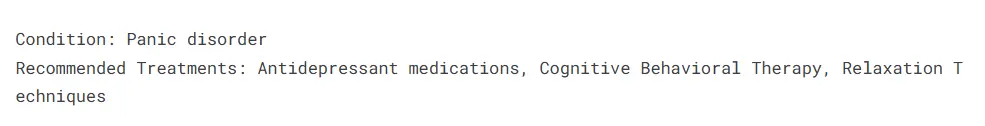

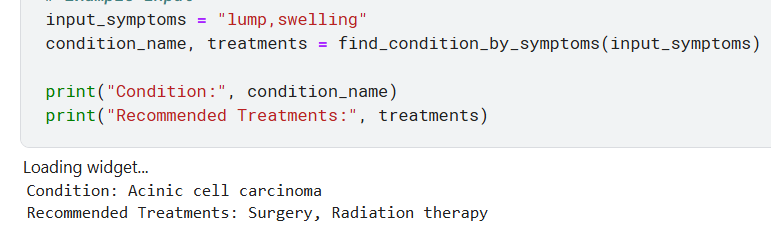

# Instance enter

input_symptoms = "Sweating, Trembling, Concern of shedding management"

condition_name, remedies = find_condition_by_symptoms(input_symptoms)

print("Situation:", condition_name)

print("Beneficial Therapies:", remedies)Testing by Passing Signs

Lastly, we offer an instance enter for signs we go the worth to find_condition_by_symptoms() operate the values returned are printed, that are the title of the matching situation together with the advisable remedies. This setup permits for fast and environment friendly analysis based mostly on user-reported signs.

df.head()The up to date knowledge body with the columns ‘Symptom_Embedding’ and ‘Similarity’ might be checked.

One of many challenges might be incomplete or incorrect knowledge resulting in misdiagnosis.

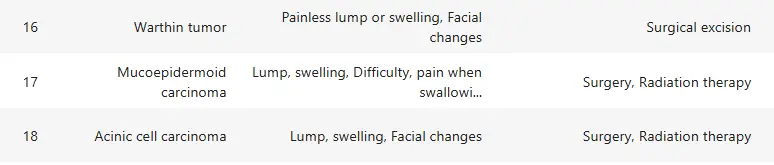

‘Lumps & swelling’ are frequent signs of a number of illnesses for instance:

Thus, the illness is very probably misclassified by incomplete signs.

Challenges in Symptom Evaluation and Prognosis

Allow us to discover challenges in symptom evaluation and analysis:

- Incomplete or incorrect knowledge can result in deceptive outcomes.

- Signs can range considerably amongst people resulting in overlaps with a number of situations.

- The effectiveness of the mannequin depends closely on the standard of generated embeddings.

- Totally different descriptions of signs by customers can complicate matching with given symptom descriptions.

- Dealing with delicate health-related knowledge raises issues about affected person confidentiality and knowledge safety.

Conclusion

On this article, we used small language fashions to boost healthcare accessibility and effectivity by means of a illness analysis system based mostly on symptom evaluation. By utilizing embeddings from a small language mannequin the system can establish situations based mostly on consumer enter signs offering us with therapy suggestions. Addressing challenges associated to knowledge high quality, symptom ambiguity and consumer enter variability is important for enhancing accuracy and consumer expertise.

Key Takeaways

- Embedding fashions like MiniLM-L6-V2 allow exact symptom evaluation and healthcare suggestions.

- Compact small language fashions effectively assist healthcare AI on resource-constrained gadgets.

- Excessive-quality embedding era is essential for correct symptom and situation matching.

- Addressing knowledge high quality and variability enhances reliability in AI-driven well being suggestions.

- The system’s effectiveness hinges on strong knowledge dealing with and various symptom descriptions.

Regularly Requested Questions

A. The system helps the consumer establish potential medical situations based mostly on reported signs by evaluating them to a database of identified situations.

A. It makes use of a pre-trained Sentence Transformer mannequin MiniLM-L6-V2 to transform signs into vector embeddings capturing their semantic meanings for higher comparability.

A. Whereas it gives helpful insights it can’t exchange skilled medical recommendation and will battle with obscure symptom descriptions restricted by the standard of its underlying dataset.

A. Accuracy varies based mostly on enter high quality and underlying knowledge. Outcomes ought to be thought of preliminary earlier than consulting a healthcare skilled.

A. Sure, it accepts a string of signs although matching effectiveness might rely upon how clearly the signs are expressed.

The media proven on this article isn’t owned by Analytics Vidhya and is used on the Creator’s discretion.