Whatnot is a venture-backed e-commerce startup built for the streaming age. We've built a live video marketplace for collectors, fashion enthusiasts, and superfans that lets sellers go live and sell anything they'd like through our video auction platform. Think eBay meets Twitch.

Coveted collectibles were the first items on our livestream when we launched in 2020. Today, through live shopping videos, sellers offer products in more than 100 categories, from Pokémon and baseball cards to sneakers, antique coins and much more.

Crucial to Whatnot's success is connecting communities of buyers and sellers through our platform. It gathers signals in real time from our audience: the videos they're watching, the comments and social interactions they're leaving, and the products they're buying. We analyze this data to rank the most popular and relevant videos, which we then present to users on the home screen of Whatnot's mobile app or website.
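To make "ranking by real-time signals" concrete, here is a minimal sketch. It assumes a simple weighted linear score over three per-stream signals. The signal fields, weights, and stream IDs are all hypothetical and for illustration only. They are not Whatnot's actual ranking model.

```python
from dataclasses import dataclass


@dataclass
class StreamSignals:
    """Hypothetical real-time signals aggregated per live stream."""
    stream_id: str
    viewers: int    # people currently watching
    comments: int   # recent comments and social interactions
    purchases: int  # recent purchases


# Hypothetical weights: a purchase counts more than a comment, a comment more than a view.
WEIGHTS = {"viewers": 1.0, "comments": 2.0, "purchases": 5.0}


def score(s: StreamSignals) -> float:
    """Combine the signals into one relevance score (an assumed, illustrative formula)."""
    return (WEIGHTS["viewers"] * s.viewers
            + WEIGHTS["comments"] * s.comments
            + WEIGHTS["purchases"] * s.purchases)


def rank_home_feed(streams: list[StreamSignals], limit: int = 10) -> list[str]:
    """Return up to `limit` stream IDs, highest score first."""
    ranked = sorted(streams, key=score, reverse=True)
    return [s.stream_id for s in ranked[:limit]]


if __name__ == "__main__":
    feed = rank_home_feed([
        StreamSignals("pokemon-breaks", viewers=120, comments=40, purchases=6),
        StreamSignals("sneaker-drop", viewers=180, comments=10, purchases=2),
        StreamSignals("coin-auction", viewers=30, comments=5, purchases=1),
    ])
    print(feed)  # ['pokemon-breaks', 'sneaker-drop', 'coin-auction']
```

Because the signals change every second during a live show, the value of such a score depends on how fresh its inputs are. That freshness requirement is what the rest of this post is about.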

However, to maintain and accelerate our growth, we needed to take our home feed to the next level: ranking our show suggestions for each user based on the most interesting and relevant content in real time.

This would require an increase in the volume and variety of data we would need to ingest and analyze, all of it in real time. To support this, we sought a platform where data science and machine learning professionals could iterate quickly and deploy to production faster while sustaining low-latency, high-concurrency workloads.

High Cost of Running Elasticsearch

On the surface, our legacy data pipeline appeared to be performing well and was built on the most modern of components. These included AWS-hosted Elasticsearch, which handled the retrieval and ranking of content using batch features loaded at ingestion. This setup returned a single query in tens of milliseconds, with concurrency topping out at 50-100 queries per second.

However, we plan to grow usage 5-10x in the next year. This will come through a combination of expanding into much larger product categories and boosting the intelligence of our recommendation engine.

The bigger pain point was the high operational overhead of Elasticsearch for our small team. It was draining productivity and severely limiting our ability to make our recommendation engine smarter fast enough to keep up with our growth.

Say we wanted to add a new user signal to our analytics pipeline. With our previous serving infrastructure, the data had to be sent through Confluent-hosted instances of Apache Kafka and ksqlDB and then denormalized and/or rolled up. Next, a specific Elasticsearch index had to be manually adjusted or built for that data. Only then could we query it. The entire process took weeks.

Just maintaining our existing queries was also a huge effort. Our data changes frequently, so we were constantly upserting new data into existing tables. That required a time-consuming update to the relevant Elasticsearch index every time. And after every Elasticsearch index was created or updated, we had to manually test and update every other component in our data pipeline to make sure we had not created bottlenecks, introduced data errors, and so on.

Solving for Efficiency, Performance, and Scalability

Our new real-time analytics platform would be core to our growth strategy, so we carefully evaluated many options.

We designed a data pipeline using Airflow to pull data from Snowflake and push it into one of our OLTP databases that serves the Elasticsearch-powered feed, optionally with a cache in front. We could schedule this job to run at 5-, 10-, or 20-minute intervals. However, the added latency meant we could not meet our SLAs, and the technical complexity cut into the developer velocity we wanted.
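A back-of-the-envelope calculation shows why scheduled batch jobs struggle against real-time SLAs. The sketch makes three assumptions: events arrive uniformly at random, each run snapshots the source when it starts, and results become visible only when the run finishes. Under those assumptions, an event waits on average half an interval plus the job's runtime, and at worst a full interval plus the runtime. The 2-minute runtime below is a hypothetical figure, not a measured one.

```python
def staleness_minutes(interval: float, runtime: float) -> tuple[float, float]:
    """Return (average, worst-case) minutes before a new event shows up in the feed.

    Assumes uniform event arrival, a snapshot taken at the start of each run,
    and results becoming visible only when the run completes.
    """
    average = interval / 2 + runtime
    worst = interval + runtime
    return average, worst


if __name__ == "__main__":
    for interval in (5, 10, 20):
        # Hypothetical job runtime of 2 minutes.
        avg, worst = staleness_minutes(interval, runtime=2)
        print(f"every {interval:>2} min: avg {avg:.1f} min, worst {worst:.1f} min")
```

Even the 5-minute schedule leaves new signals minutes stale. A live auction can open and close within that window, which is why we needed ingestion measured in milliseconds rather than minutes.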

So we evaluated many real-time alternatives to Elasticsearch, including Rockset, Materialize, Apache Druid and Apache Pinot. Every one of these SQL-first platforms met our requirements, but we were looking for a partner that could take on the operational overhead as well.

In the end, we chose Rockset over these other options because it had the best blend of features to underpin our growth: a fully managed, developer-friendly platform with real-time ingestion and query speeds, high concurrency and automatic scalability.

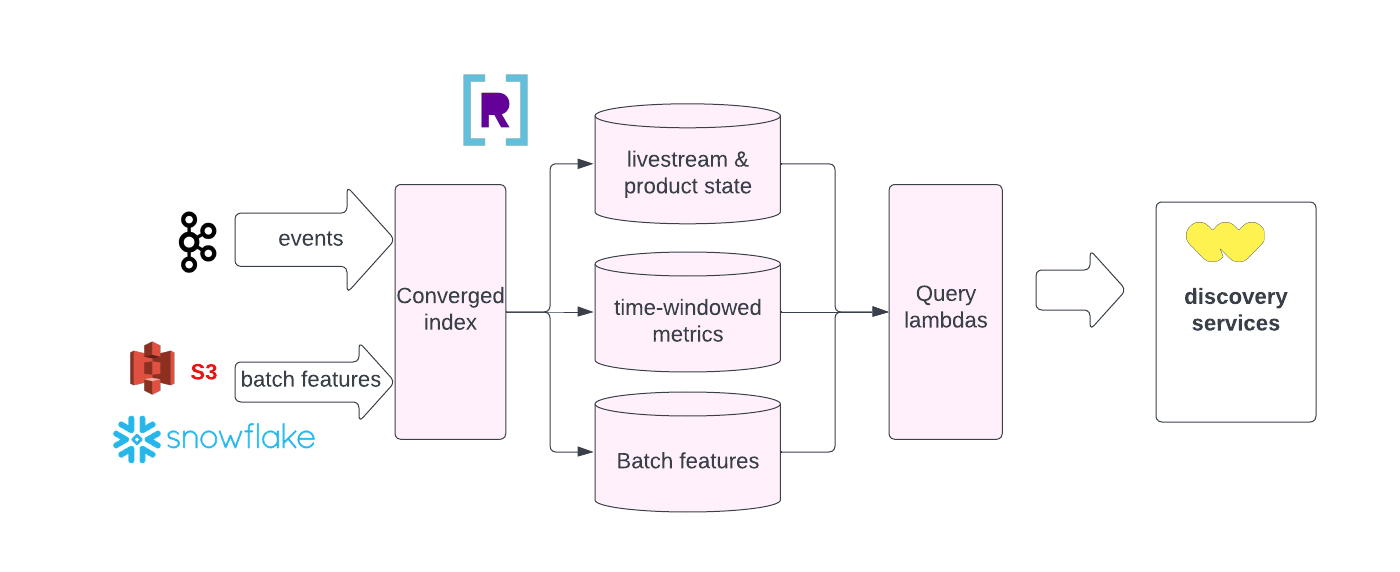

Let's look at our highest priority, developer productivity, which Rockset boosts in several ways. Rockset's Converged Index™ feature indexes all fields, including nested ones. As a result, queries are automatically optimized and run fast regardless of the type of query or the structure of the data. We no longer have to spend time and labor building and maintaining indexes, as we did with Elasticsearch. Rockset also makes SQL a first-class citizen, which is great for our data scientists and machine learning engineers. It offers a full menu of SQL commands, including four kinds of joins, searches and aggregations. Such complex analytics were harder to perform with Elasticsearch.

With Rockset, we have a much faster development workflow. When we need to add a new user signal or data source to our ranking engine, we can join the new dataset without denormalizing it first. If the feature works as intended and performance is good, we can finalize it and put it into production within days. If latency is too high, we can consider denormalizing the data or doing some precalculations in ksqlDB first. Either way, this cuts our time-to-ship from weeks to days.
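To illustrate "join instead of denormalize," here is a minimal sketch. It uses Python's built-in `sqlite3` module purely as a stand-in SQL engine. It is not Rockset, and the `streams`/`follows` tables, their columns, and the follow-weighting factor of 10 are all hypothetical. The new signal lives in its own table and is joined to the existing stream data at query time, so the existing table never has to be rewritten.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Existing data: one row per live stream (hypothetical schema).
cur.execute("CREATE TABLE streams (stream_id TEXT PRIMARY KEY, category TEXT, viewers INTEGER)")
cur.executemany("INSERT INTO streams VALUES (?, ?, ?)", [
    ("s1", "trading_cards", 120),
    ("s2", "sneakers", 80),
    ("s3", "coins", 40),
])

# New user signal arrives as its own table: one row per follow event (hypothetical).
cur.execute("CREATE TABLE follows (user_id TEXT, stream_id TEXT)")
cur.executemany("INSERT INTO follows VALUES (?, ?)", [
    ("u1", "s2"), ("u2", "s2"), ("u3", "s2"), ("u4", "s2"), ("u5", "s2"),
    ("u1", "s3"),
])

# Join the new signal at query time; the streams table is left untouched.
# LEFT JOIN keeps streams with no follows (their COUNT is 0).
rows = cur.execute("""
    SELECT s.stream_id, s.viewers, COUNT(f.user_id) AS follows
    FROM streams AS s
    LEFT JOIN follows AS f ON f.stream_id = s.stream_id
    GROUP BY s.stream_id, s.viewers
    ORDER BY s.viewers + 10 * COUNT(f.user_id) DESC
""").fetchall()

print(rows)  # [('s2', 80, 5), ('s1', 120, 0), ('s3', 40, 1)]
conn.close()
```

If a join like this turns out to be too slow at production scale, we fall back to the other path described above: precompute or denormalize the signal upstream.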

Rockset's fully managed SaaS platform is mature and a first mover in the space. Take how Rockset decouples storage from compute. This gives Rockset instant, automatic scalability to handle our growing, albeit spiky, traffic (such as when a popular product or streamer comes online). Upserting data is also a breeze thanks to Rockset's mutable architecture and Write API, which make inserts, updates and deletes simple.
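The following minimal in-memory sketch shows what document-level mutability means in practice. Each document is keyed by an `_id` field. Writing a document with an existing `_id` replaces it, a patch changes only the named fields, and deletes remove documents by ID. The sketch models these semantics conceptually and does not call Rockset's actual Write API. The `DocumentCollection` class and its methods are hypothetical.

```python
from typing import Any, Optional

Document = dict[str, Any]


class DocumentCollection:
    """Hypothetical toy collection keyed by `_id`, illustrating upsert/patch/delete."""

    def __init__(self) -> None:
        self._docs: dict[str, Document] = {}

    def upsert(self, docs: list[Document]) -> None:
        # Insert new documents; fully replace any whose `_id` already exists.
        for doc in docs:
            self._docs[doc["_id"]] = dict(doc)

    def patch(self, _id: str, fields: Document) -> None:
        # Update only the given fields of an existing document.
        self._docs[_id].update(fields)

    def delete(self, ids: list[str]) -> None:
        # Remove documents by `_id`; unknown IDs are ignored.
        for _id in ids:
            self._docs.pop(_id, None)

    def get(self, _id: str) -> Optional[Document]:
        return self._docs.get(_id)


if __name__ == "__main__":
    coll = DocumentCollection()
    coll.upsert([{"_id": "stream-1", "viewers": 10, "status": "live"}])
    coll.upsert([{"_id": "stream-1", "viewers": 25, "status": "live"}])  # replaces
    coll.patch("stream-1", {"viewers": 40})
    print(coll.get("stream-1"))  # {'_id': 'stream-1', 'viewers': 40, 'status': 'live'}
    coll.delete(["stream-1"])
    print(coll.get("stream-1"))  # None
```

Each record is addressed by its own key, so a change to one stream touches only that document. Our Elasticsearch setup, by contrast, needed an index update every time the data changed.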

As for performance, Rockset delivered true real-time ingestion and queries, with sub-50 millisecond end-to-end latency. That didn't just match Elasticsearch. Rockset did it with much lower operational effort and cost, while handling a much higher volume and variety of data and enabling more complex analytics, all in SQL.

It's not just the Rockset product that's been great. The Rockset engineering team has been a fantastic partner. Whenever we had an issue, we messaged them in Slack and got an answer quickly. It's not the typical vendor relationship: they've truly been an extension of our team.

A Plethora of Other Real-Time Uses

We're so happy with Rockset that we plan to expand its use in many areas. One slam dunk would be community trust and safety, such as monitoring comments and chat for offensive language, where Rockset is already helping customers.

We also want to use Rockset as a mini-OLAP database to provide real-time reports and dashboards to our sellers. Rockset would serve as a real-time alternative to Snowflake, and it would be even more convenient and easy to use. For instance, new data upserted through the Rockset API is instantly reindexed and ready for queries.

We're also seriously looking into making Rockset our real-time feature store for machine learning. Rockset would fit well in a machine learning pipeline feeding real-time features, such as the count of chats in a stream over the last 20 minutes. Data would stream from Kafka into a Rockset Query Lambda sharing the same logic as our batch dbt transformations on top of Snowflake. Ideally, one day we would abstract the transformations so they could be used in both the Rockset and Snowflake dbt pipelines, for composability and repeatability. Data scientists know SQL, which Rockset strongly supports.
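The example feature, "count of chats in the last 20 minutes in a stream," is a sliding-window count. Here is a minimal Python sketch of the computation. In the setup described above, the same logic would be written as a SQL aggregation inside a Query Lambda. This application-side version only shows what the feature computes. The `ChatCounter` class is hypothetical. It assumes events arrive in timestamp order per stream and that `count` is called with non-decreasing `now` values, because eviction discards old events permanently.

```python
from collections import deque

WINDOW_SECONDS = 20 * 60  # the 20-minute window from the example feature


class ChatCounter:
    """Hypothetical per-stream sliding-window count of chat messages."""

    def __init__(self, window_seconds: int = WINDOW_SECONDS) -> None:
        self.window = window_seconds
        self._events: dict[str, deque] = {}

    def record(self, stream_id: str, ts: float) -> None:
        # Assumes timestamps (in seconds) arrive in order for each stream.
        self._events.setdefault(stream_id, deque()).append(ts)

    def count(self, stream_id: str, now: float) -> int:
        # Count chats with now - window < ts <= now; assumes `now` never decreases.
        q = self._events.get(stream_id)
        if q is None:
            return 0
        # Evict chats that have fallen out of the window.
        while q and q[0] <= now - self.window:
            q.popleft()
        return len(q)


if __name__ == "__main__":
    counter = ChatCounter()
    for ts in (0, 300, 900, 1300):
        counter.record("stream-1", ts)
    print(counter.count("stream-1", now=1300))  # 3 (the chat at t=0 is outside the window)
    print(counter.count("stream-1", now=2000))  # 2 (the chat at t=300 has also aged out)
```

Keeping this logic in SQL, rather than in application code like this, is what would let the same transformation be shared between the real-time and batch dbt pipelines.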

Rockset is in our sweet spot now. Of course, in a perfect world that revolved around Whatnot, Rockset would add features especially for us, such as stream processing, approximate nearest neighbor search, and auto-scaling, to name a few. We still have some use cases where real-time joins aren't enough, forcing us to do some precalculations. If we could get all of that in a single platform rather than deploying a heterogeneous stack, we would love it.

Learn more about how we build real-time signals in our user Home Feed. And visit the Whatnot careers page to see the openings on our engineering team.

Embedded content: https://youtu.be/jxdEi-Ma_J8?si=iadp2XEp3NOmdDlm