Hundreds of cybersecurity professionals, analysts and decision-makers came together earlier this month for ESET World 2024, a conference that showcased the company’s vision and technological advancements and featured numerous insightful talks about the latest trends in cybersecurity and beyond.

The topics ran the gamut, but it’s safe to say that the subjects that resonated most included ESET’s cutting-edge threat research and its perspectives on artificial intelligence (AI). Let’s now briefly look at some sessions that covered the topic on everyone’s lips these days: AI.

Back to basics

First off, ESET Chief Technology Officer (CTO) Juraj Malcho gave the lay of the land, offering his take on the key challenges and opportunities afforded by AI. He didn’t stop there, however, and went on to seek answers to some of the fundamental questions surrounding AI, including “Is it as revolutionary as it’s claimed to be?”

The current iterations of AI technology are mostly large language models (LLMs) and various digital assistants that make the tech feel very real. However, they’re still rather limited, and we must thoroughly define how we want to use the tech to empower our own processes, including its uses in cybersecurity.

For example, AI can simplify cyber defense by deconstructing complex attacks and reducing resource demands. In this way, it enhances the security capabilities of short-staffed business IT operations.

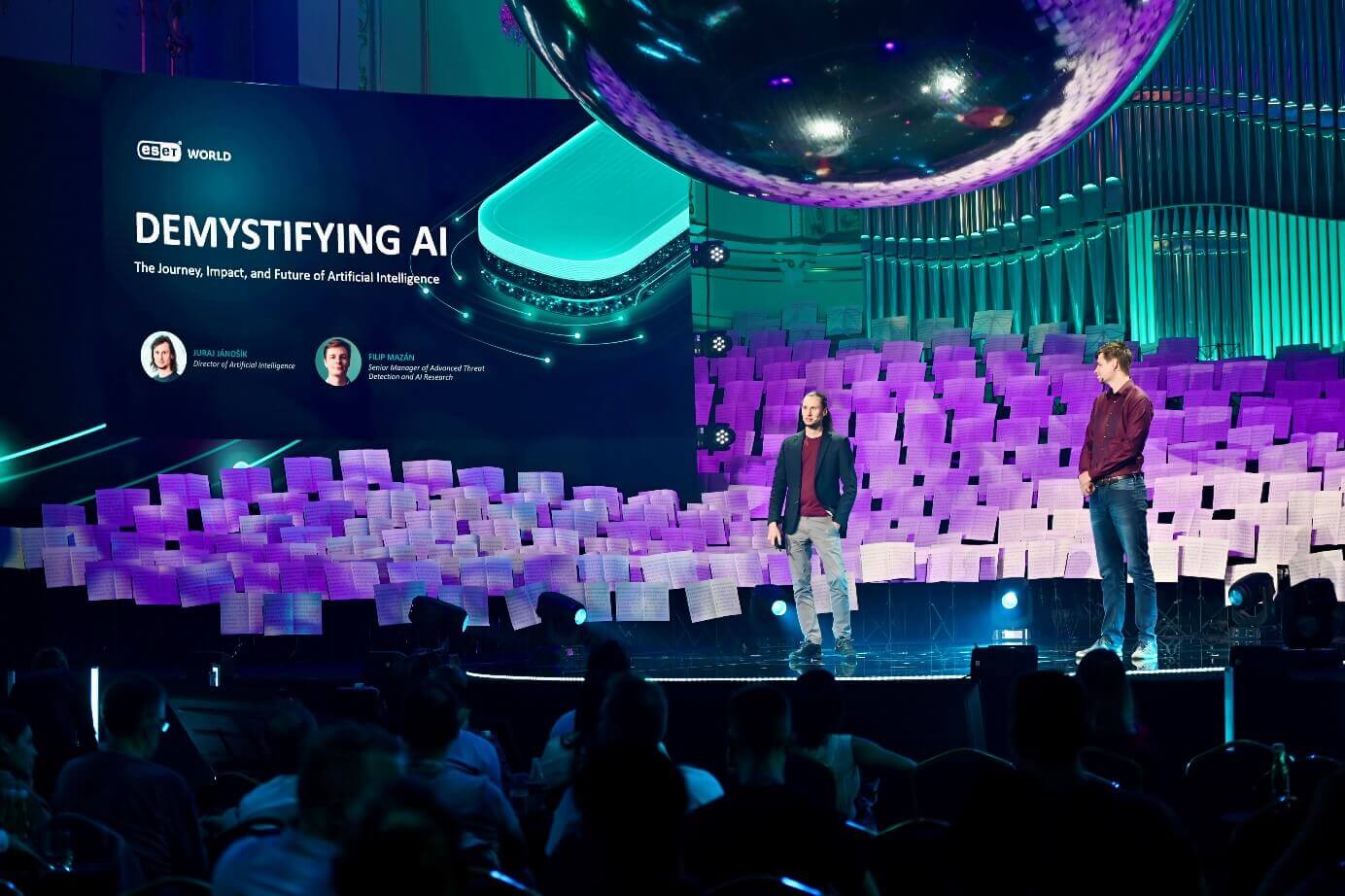

Demystifying AI

Juraj Jánošík, Director of Artificial Intelligence at ESET, and Filip Mazán, Sr. Manager of Advanced Threat Detection and AI at ESET, went on to present a comprehensive view into the world of AI and machine learning, exploring their roots and distinguishing features.

Mr. Mazán demonstrated how these technologies are fundamentally based on human biology: artificial neural networks mimic some aspects of how biological neurons function, with varying numbers of parameters. The more complex the network, the greater its predictive power, which has led to the advancements seen in digital assistants like Alexa and LLMs like ChatGPT or Claude.

Later, Mr. Mazán highlighted that as AI models become more complex, their utility can diminish. As we approach a recreation of the human brain, the growing number of parameters demands thorough refinement. This process requires human oversight to constantly monitor and fine-tune the model’s operations.

Indeed, leaner models are often better. Mr. Mazán described how ESET’s strict use of internal AI capabilities results in faster and more accurate threat detection, meeting the need for rapid and precise responses to all manner of threats.

He also echoed Mr. Malcho and highlighted some of the limitations that beset LLMs. These models work by prediction and by connecting meanings, which can easily get muddled and result in hallucinations. In other words, the utility of these models only goes so far.

Other limitations of current AI tech

Mr. Jánošík went on to address other limitations of contemporary AI:

- Explainability: Current models consist of complex parameters, which makes their decision-making processes difficult to understand. Unlike the human brain, which operates on causal explanations, these models function through statistical correlations, which aren’t intuitive to humans.

- Transparency: Top models are proprietary (walled gardens), with no visibility into their inner workings. This lack of transparency means there is no accountability for how these models are configured or for the results they produce.

- Hallucinations: Generative AI chatbots often generate plausible but incorrect information. These models can exude great confidence while delivering false information, leading to mishaps and even legal issues, as happened after Air Canada’s chatbot gave a passenger false information about a discount.

Fortunately, these limits also apply to the misuse of AI technology for malicious activities. While chatbots can easily formulate plausible-sounding messages to aid spearphishing or business email compromise attacks, they aren’t that well-equipped to create dangerous malware. This is due to their propensity for “hallucinations” (producing plausible but incorrect or illogical outputs) and their underlying weaknesses in generating logically connected, functional code. As a result, creating new, effective malware typically requires an actual expert to correct and refine the code, which makes the process harder than some might assume.

Finally, as Mr. Jánošík pointed out, AI is just another tool that we need to understand and use responsibly.

The rise of the clones

In the next session, Jake Moore, Global Cybersecurity Advisor at ESET, gave a taste of what’s currently possible with the right tools, from cloning RFID cards and hacking CCTV cameras to creating convincing deepfakes, and of how this can put corporate data and finances at risk.

Among other things, he showed how easy it is to compromise a business’s premises by using a well-known hacking gadget to copy employee entrance cards, or to hack (with permission!) a social media account belonging to the company’s CEO. He then used a tool to clone the CEO’s likeness, both face and voice, and created a convincing deepfake video that he posted on one of the CEO’s social media accounts.

The video, in which the would-be CEO announced a “challenge” to bike from the UK to Australia, racked up more than 5,000 views and was so convincing that people started to propose sponsorships. Even the company’s CFO was fooled by the video and asked the CEO about his future whereabouts. Only one person wasn’t fooled: the CEO’s 14-year-old daughter.

In just a few steps, Mr. Moore demonstrated the danger posed by the rapid spread of deepfakes. Seeing is no longer believing: businesses, and people themselves, need to scrutinize everything they come across online. And with the arrival of AI tools like Sora, which can create video from just a few lines of input, dangerous times could be nigh.

Finishing touches

The final session dedicated to the nature of AI was a panel with Mr. Jánošík, Mr. Mazán, and Mr. Moore, moderated by Ms. Pavlova. It opened with a question about the current state of AI, and the panelists agreed that the latest models are awash with parameters and need further refinement.

The discussion then shifted to the immediate dangers and concerns for businesses. Mr. Moore emphasized that a significant number of people are unaware of AI’s capabilities, which bad actors can exploit. Although the panelists agreed that sophisticated AI-generated malware is not currently an imminent threat, other dangers, such as better-crafted phishing emails and deepfakes created with public models, are very real.

As Mr. Jánošík highlighted, the greatest danger lies in the data privacy aspect of AI, given the amount of data these models receive from users. In the EU, for example, the GDPR and the AI Act have set some frameworks for data protection, but that isn’t enough, since these are not global acts.

Mr. Moore added that enterprises should make sure their data stays in-house. Enterprise versions of generative models can fit the bill, removing the “need” to rely on (free) versions that store data on external servers and can put sensitive corporate data at risk.

To address data privacy concerns, Mr. Mazán suggested that companies start from the bottom up, tapping into open-source models that can handle simpler use cases, such as generating summaries. Only if these prove inadequate should businesses move to cloud-powered solutions from third parties.

Mr. Jánošík concluded by saying that companies often overlook the drawbacks of AI use. Guidelines for the secure use of AI are certainly needed, but even common sense goes a long way toward keeping data safe. As Mr. Moore put it in his answer on how AI should be regulated, there is a pressing need to raise awareness of AI’s potential, including its potential for harm. Encouraging critical thinking is crucial for staying safe in our increasingly AI-driven world.